Where’s the best place for a datacenter? It’s an increasing problem as the AI buildup continues seemingly without pause. It’s not just a problem of NIMBYism; earthly power grids are having trouble coping, to say nothing of the demand for cooling water. Regulators and environmental groups alike are raising alarms about the impact that powering and cooling these massive AI datacenters will have on our planet.

While Sam Altman fantasizes about fusion power, one obvious response to those who say “think about the planet!” is to ask, “Well, what if we don’t put them on the planet?” Just as Gerard O’Neill asked over 50 years ago when our technology was merely industrial, the question remains:

“Is the surface of a planet really the right place for expanding technological civilization?”

O’Neill’s answer was a resounding “No.” The answer has not changed, even though our technology has. Generative AI is the latest and greatest technology on offer, and it may turn out to be the first one to make the productive jump to Earth orbit. Indeed, it already has, but more on that later, because you’re probably scoffing at such a pie-in-the-sky idea.

There are three things needed for a datacenter: power, cooling, and connectivity. The people at companies like Starcloud, Inc., formerly Lumen Orbit, make a good, solid case that all of these can be more easily met in orbit, one that includes hard numbers.

Sure, there’s also more radiation on orbit than here on Earth, but our electronics turn out to be a lot more resilient than was once thought, as all the cell-phone cubesats have proven. Starcloud budgets only 1 kg of shielding per kW of compute power in their whitepaper, for example. If we can provide power, cooling, and connectivity, the radiation environment won’t be a showstopper.

Power

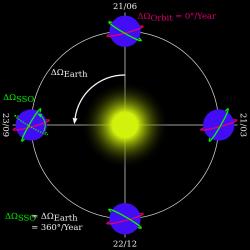

There’s a great big honkin’ fusion reactor already available for anyone to use to power their GPUs: the sun. Of course, on Earth we have tricky things like weather, and the planet has an annoying habit of occluding the sun for half the day, but there are no clouds in LEO. Depending on your choice of orbit, you do have that annoying 45 minutes of darkness on each pass, but a battery to run things for 45 minutes is not a big UPS by professional standards. Besides, the sun-synchronous orbits are right there, just waiting for us to soak up that delicious, non-stop solar power.
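To put a number on “not a big UPS”, here is a back-of-the-envelope sizing sketch. The 100 kW load, the 30% allowed depth of discharge, and the 150 Wh/kg pack-level specific energy are illustrative assumptions, not figures from any of the companies mentioned; the shallow depth of discharge reflects that a LEO battery cycles with every orbit, roughly fifteen times a day.

```python
def eclipse_energy_kwh(load_kw: float, eclipse_min: float) -> float:
    """Energy drawn from the battery while the spacecraft is in Earth's shadow."""
    return load_kw * eclipse_min / 60.0


def battery_capacity_kwh(load_kw: float, eclipse_min: float, max_dod: float = 0.3) -> float:
    """Nameplate capacity so that one eclipse uses at most `max_dod` of the pack.

    max_dod is an assumed design limit: shallow cycling helps a pack survive
    the tens of thousands of charge/discharge cycles a LEO mission imposes.
    """
    if not 0.0 < max_dod <= 1.0:
        raise ValueError("max_dod must be in (0, 1]")
    return eclipse_energy_kwh(load_kw, eclipse_min) / max_dod


def battery_mass_kg(capacity_kwh: float, specific_energy_wh_per_kg: float = 150.0) -> float:
    """Pack mass for an assumed pack-level specific energy."""
    return capacity_kwh * 1000.0 / specific_energy_wh_per_kg


if __name__ == "__main__":
    load_kw = 100.0      # hypothetical compute + bus load
    eclipse_min = 45.0   # the article's darkness per orbit
    cap = battery_capacity_kwh(load_kw, eclipse_min)
    print(f"energy per eclipse: {eclipse_energy_kwh(load_kw, eclipse_min):.0f} kWh")
    print(f"nameplate capacity: {cap:.0f} kWh")
    print(f"pack mass: {battery_mass_kg(cap):.0f} kg")
```

Under these assumptions, 45 minutes at 100 kW is 75 kWh per eclipse, which calls for a 250 kWh pack weighing roughly 1.7 tonnes.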

Sun-synchronous orbits (SSOs) are near-polar orbits whose plane precesses around the Earth once a year, so that they always maintain the same angle to the sun. For example, you might have an SSO that crosses the equator 12 times a day, each time at local 15:00, or 10:43, or any other time set by the orbital parameters. Pick the dawn/dusk SSO that rides the line between day and night, and you almost never have to worry about losing solar power to some silly, primitive, planet-bound concept like nighttime.
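The precession that keeps an SSO locked to the sun comes from Earth’s equatorial bulge, described to first order by the J2 term of its gravity field. The sketch below, assuming a circular orbit and standard values for Earth’s gravitational parameter, equatorial radius, and J2, solves the first-order nodal-precession formula for the inclination that turns the orbital plane exactly 360° per year.

```python
import math

MU_EARTH = 3.986004418e14   # m^3/s^2, Earth's gravitational parameter
R_EARTH = 6_378_137.0       # m, equatorial radius (WGS-84)
J2 = 1.08263e-3             # Earth's second zonal harmonic (oblateness)
YEAR_S = 365.2422 * 86_400  # tropical year in seconds


def sso_inclination_deg(altitude_km: float) -> float:
    """Inclination (degrees) of a circular sun-synchronous orbit at this altitude.

    First-order J2 theory: dOmega/dt = -1.5 * n * J2 * (R/a)^2 * cos(i).
    Set dOmega/dt to +360 degrees per year (eastward) and solve for i.
    """
    a = R_EARTH + altitude_km * 1_000.0          # semi-major axis, m
    n = math.sqrt(MU_EARTH / a**3)               # mean motion, rad/s
    required_rate = 2.0 * math.pi / YEAR_S       # rad/s
    cos_i = -required_rate / (1.5 * n * J2 * (R_EARTH / a) ** 2)
    if cos_i < -1.0:
        raise ValueError("too high: J2 alone cannot make this orbit sun-synchronous")
    return math.degrees(math.acos(cos_i))


def orbits_per_day(altitude_km: float) -> float:
    """Number of revolutions per 24 hours for a circular orbit."""
    a = R_EARTH + altitude_km * 1_000.0
    period_s = 2.0 * math.pi * math.sqrt(a**3 / MU_EARTH)
    return 86_400.0 / period_s


if __name__ == "__main__":
    for h in (500, 700, 1000):
        print(f"{h:>5} km: i = {sso_inclination_deg(h):.2f} deg, "
              f"{orbits_per_day(h):.2f} orbits/day")
```

This gives roughly 97.4° at 500 km and 98.2° at 700 km, which is why SSOs are described as near-polar and slightly retrograde.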

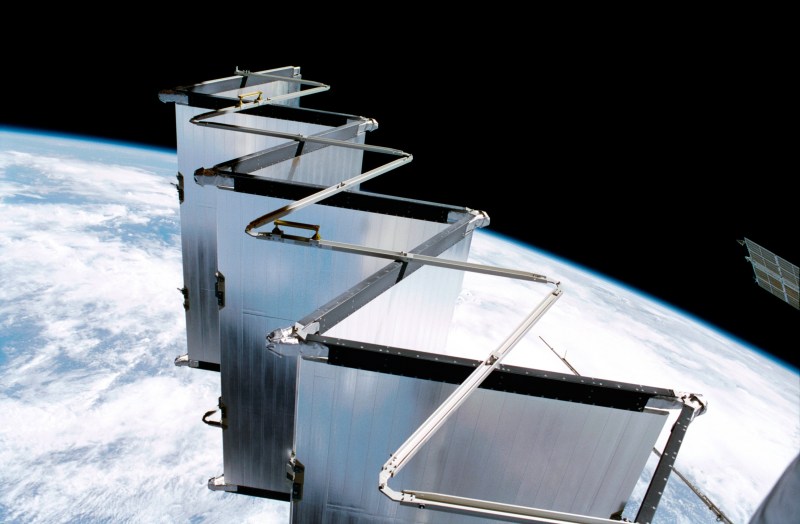

Without the atmosphere in the way, solar panels are also considerably more effective per unit area, something the Space Solar Power people have been pointing out since O’Neill’s day. The problem with Space Solar Power has always been the inefficiencies and regulatory hurdles of beaming the power back to Earth, but if you use the power to train an AI model and send the data down, that’s no longer an issue. Given that the 120 kW array on ISS has kept the station running for decades now, we can consider it a solved problem. Sure, solar panels degrade, but the rate is in fractions of a percent per year, and it happens on Earth too. By the time the panels are likely to need replacing, the rest of the hardware is likely to be totally obsolete.
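The “more effective per unit area” point can be made concrete with the solar constant, the roughly 1361 W/m² of sunlight arriving above the atmosphere at Earth’s distance from the sun. In this sketch, the 30% cell efficiency, the 85% packing factor, and the 0.5%/year degradation rate are assumptions chosen for illustration (the last in keeping with “fractions of a percent”), not specifications of any particular array.

```python
SOLAR_CONSTANT_W_M2 = 1361.0  # mean irradiance at 1 AU, above the atmosphere


def array_area_m2(power_w: float, cell_efficiency: float = 0.30,
                  packing_factor: float = 0.85) -> float:
    """Sun-facing array area needed for `power_w` at beginning of life.

    packing_factor is the assumed fraction of the panel area covered by cells.
    Assumes the array points straight at the sun the whole time.
    """
    usable_w_per_m2 = SOLAR_CONSTANT_W_M2 * cell_efficiency * packing_factor
    return power_w / usable_w_per_m2


def remaining_fraction(years: float, annual_loss: float = 0.005) -> float:
    """Fraction of beginning-of-life output left after compounding degradation."""
    return (1.0 - annual_loss) ** years


if __name__ == "__main__":
    print(f"1 MW array: {array_area_m2(1e6):,.0f} m^2")
    for years in (5, 10, 15):
        print(f"after {years:>2} years: {remaining_fraction(years):.1%} of original output")
```

With these assumptions a 1 MW array needs a bit under 2,900 m² of panel, and after ten years it still delivers about 95% of its original output.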

Cooling

This is where skepticism creeps in. After all, cooling is the greatest challenge with high-performance computing hardware here on Earth, and heat rejection is the great constraint of space operations. The “icy blackness of space” you see in popular culture is as realistic as warp drive; space is a thermos, and shedding heat is no trivial issue. It is also, from an engineering perspective, not a complex issue. We’ve been cooling spacecraft and satellites with radiators that shed heat via infrared emission for decades now. It’s pretty easy to calculate that if you have X watts of heat to reject at Y degrees, you will need a radiator of area Z. The Stefan-Boltzmann law isn’t exactly rocket science.
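Here is that X-to-Z calculation as a short Python sketch of the Stefan-Boltzmann law, Z = X / (n ε σ Y⁴), where n is the number of radiating faces. The emissivity of 0.9, the two-sided panel, the 3 K sink, and the neglect of sunlight and Earthshine falling on the radiator are simplifying assumptions; a real design would also subtract that absorbed heat and account for the temperature drop between the chips and the radiator.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def radiator_area_m2(heat_w: float, radiator_temp_k: float,
                     emissivity: float = 0.9, sides: int = 2,
                     sink_temp_k: float = 3.0) -> float:
    """Radiator area needed to reject `heat_w` watts at `radiator_temp_k` kelvin.

    Net emitted flux per face is emissivity * sigma * (T^4 - T_sink^4).
    Absorbed sunlight and infrared from Earth are ignored (an assumption).
    """
    flux_per_face = emissivity * SIGMA * (radiator_temp_k**4 - sink_temp_k**4)
    return heat_w / (flux_per_face * sides)


if __name__ == "__main__":
    heat_w = 1e6  # 1 MW of waste heat, roughly what a 1 MW compute load produces
    for temp_c in (20, 50, 80):
        temp_k = temp_c + 273.15
        print(f"{temp_c:>3} C radiator: {radiator_area_m2(heat_w, temp_k):,.0f} m^2 (two-sided)")
```

The fourth-power dependence is the main design lever: letting the radiator run hotter shrinks it quickly, so the coolant loop temperature matters as much as the heat load itself.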

Even better, unlike on Earth, where you have changeable things like seasons and heat waves, in an SSO you need only account for throttling, and if your data center is profitable, you won’t be doing much of that. So while you need a cooling system, it won’t be difficult to design. Liquid or two-phase cooling on server hardware? Not new. Plumbing a cooling loop to a radiator in the vacuum of space? That’s been part of satellite busses for years.

Aside from providing you with a stable thermal environment, the other advantage of an SSO is that if one chooses the dawn/dusk orbit along the terminator, while the solar panels always face the sun, the radiators can always face black space, letting them work to their optimal potential. This would also simplify the satellite bus, as no motion system would be required to keep the solar panels and radiators aligned into/out of the sun. Conceivably the whole thing could be stabilized by gravity gradient, minimizing the need to use reaction wheels.

Connectivity

One word: Starlink. That’s not to say that future data centers will necessarily be hooking into the Starlink network, but high-bandwidth operations on orbit are already proven, as long as you consider 100 gigabits per second sufficient bandwidth. An advantage not often thought of for this sort of space-based communications is that light in glass fiber travels roughly 31% slower than in a vacuum, while the circumference of a low Earth orbit is much less than 31% greater than the circumference of the planet. That reduces ping times between elements of free-flying clusters, or between clusters and whatever communications satellite is overhead of the user. It is conceivable, but by no means a sure thing, that a user in the EU might have faster access to orbital data than they would to a data center in the US.
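A toy model shows why the longer path through vacuum can still win over long distances. The sketch assumes a fiber refractive index of 1.47, a fiber route that follows the great circle exactly (real cables are longer), a single hop straight up to a 550 km orbit, a laser path that follows the orbital arc, and a hop straight back down. It ignores switching and queuing delays entirely, so treat it as an illustration of the geometry only.

```python
C_KM_S = 299_792.458     # speed of light in vacuum, km/s
R_EARTH_KM = 6_371.0     # mean Earth radius, km


def fiber_latency_ms(ground_km: float, refractive_index: float = 1.47) -> float:
    """One-way delay along an idealized great-circle fiber run."""
    return ground_km * refractive_index / C_KM_S * 1_000.0


def orbit_latency_ms(ground_km: float, altitude_km: float = 550.0) -> float:
    """One-way delay: straight up, along the orbital arc, straight down.

    Following the arc overestimates the in-orbit path, since inter-satellite
    laser links are straight chords, so this errs on the pessimistic side.
    """
    arc_km = ground_km * (R_EARTH_KM + altitude_km) / R_EARTH_KM
    return (2.0 * altitude_km + arc_km) / C_KM_S * 1_000.0


def breakeven_km(altitude_km: float = 550.0, refractive_index: float = 1.47) -> float:
    """Ground distance beyond which the orbital path is faster in this model.

    From d * n = 2h + d * (R + h) / R  =>  d = 2h / (n - 1 - h / R).
    """
    return 2.0 * altitude_km / (refractive_index - 1.0 - altitude_km / R_EARTH_KM)


if __name__ == "__main__":
    for d in (500, 3_000, 6_000, 10_000):
        print(f"{d:>6} km: fiber {fiber_latency_ms(d):5.1f} ms, "
              f"orbit {orbit_latency_ms(d):5.1f} ms")
    print(f"break-even distance: {breakeven_km():,.0f} km")
```

In this idealized model the orbital route pulls ahead at a little under 2,900 km; whether that ever shows up in practice depends on routing, hop count, and ground-station placement.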

The Race

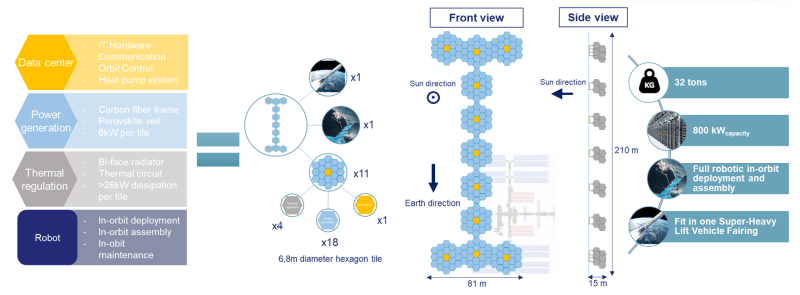

This hypothetical European might want to use European-owned servers. Well, the European Commission is on it: from the title of the ASCEND study (Advanced Space Cloud for European Net zero Emission and Data sovereignty), you can tell they put as much emphasis on keeping European data European as they do on the environmental aspects mentioned in the introduction. ASCEND imagines a 32-tonne, 800 kW data center lofted by a single super-heavy booster (sadly not Ariane 6), and proposes it could be ready by the 2030s. There’s no hint in this proposal that the ASCEND Consortium or the EC would be willing to stop at one, either. European efforts have already put AI in orbit, with missions like PhiSat-2 using on-board AI image processing for Earth observation.

The Americans, of course, are leaving things to private enterprise. Axiom Space has leveraged its existing relationship with NASA to put hardware on ISS for testing purposes, starting with an AWS Snowcone in 2022, which they claimed was the first flight test of cloud computing. Axiom has also purchased space on the Kepler Relay Network satellites set to launch in late 2025. Aside from the 2.5 Gb/s optical link from Kepler, exactly how much compute power is going into these is not clear. A standalone data center is expected to follow in 2027, but again, what hardware will be flying is not stated.

There are other American companies chasing venture capital for this purpose, like the former-Google-CEO-backed Relativity Space or the wonderfully named Starcloud mentioned above. Starcloud’s whitepaper is incredibly ambitious, describing a cluster of up to 5 GW whose double-sided solar/radiator array, at 4 km by 4 km, would be by far the largest object ever built in orbit. (Only a few orders of magnitude bigger than ISS. No big deal.) At least it is a modular plan that could be built up over time, and they are planning to start with a smaller standalone proof-of-concept, Starcloud-2, in 2026.
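A quick sanity check on those numbers, assuming the whole 4 km × 4 km face is sun-facing solar array and that 5 GW is its electrical output. The ISS footprint used for comparison, about 109 m by 73 m, is an approximate bounding rectangle of the truss and arrays, not its actual array area.

```python
import math

SOLAR_CONSTANT_W_M2 = 1361.0

starcloud_area_m2 = 4_000.0 * 4_000.0      # 4 km x 4 km array
starcloud_power_w = 5e9                    # "up to 5 GW", assumed electrical output
iss_footprint_m2 = 109.0 * 73.0            # approximate ISS bounding rectangle

flux_w_m2 = starcloud_power_w / starcloud_area_m2
implied_efficiency = flux_w_m2 / SOLAR_CONSTANT_W_M2
size_ratio = starcloud_area_m2 / iss_footprint_m2

print(f"required output: {flux_w_m2:.1f} W/m^2")
print(f"implied end-to-end efficiency: {implied_efficiency:.1%}")
print(f"area vs ISS: {size_ratio:,.0f}x ({math.log10(size_ratio):.1f} orders of magnitude)")
```

The implied efficiency of about 23% is below that of today’s best multi-junction space cells but leaves little margin once packing, pointing, and conversion losses are counted, and roughly 2,000 times the ISS footprint is indeed a bit over three orders of magnitude.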

Once they get up there, the American and European AIs are going to find someone else has already claimed the high ground, and that that someone else speaks Chinese. A startup called ADA Space launched 12 satellites in May 2025 to begin building out the world’s first orbital supercomputer, called the Three Body Computing Constellation. (You can’t help but love the poetry of Chinese naming conventions.)

Unlike the American startups, they aren’t shy about its capabilities: 100 Gb/s optical datalinks, with the most powerful satellite in the constellation capable of 744 trillion operations per second. (TOPS, not FLOPS. FLOPS specifically refers to floating point operations, whereas TOPS could be any operation but usually refers to operations on 8-bit integers.)

For comparison, Microsoft requires an “AI PC” like the Copilot+ laptops to have 40 TOPS of AI-crunching capacity. The 12 satellites must not be identical: twelve 744-TOPS machines would total nearly 9 POPS (peta-operations per second), yet the constellation as a whole has a quoted capability of 5 POPS, along with a storage capacity of 30 TB. That seems pretty reasonable for a proof-of-concept. You don’t get a sense of the ambition behind it until you hear that these 12 are just the first wave of a planned 2,800 satellites. Now that’s what I’d call a supercluster!
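The arithmetic behind the “must not be identical” inference, plus the AI PC comparison, fits in a few lines. All inputs are the figures quoted above; TOPS are treated as directly comparable across devices, which is generous given that the precision and workload behind each figure are rarely specified.

```python
PEAK_SATELLITE_TOPS = 744     # most powerful satellite in the first batch
CONSTELLATION_POPS = 5        # quoted total for all 12
SATELLITES = 12
AI_PC_TOPS = 40               # Microsoft's floor for an "AI PC"

if_all_identical_pops = SATELLITES * PEAK_SATELLITE_TOPS / 1_000
mean_satellite_tops = CONSTELLATION_POPS * 1_000 / SATELLITES
ai_pcs_per_peak_satellite = PEAK_SATELLITE_TOPS / AI_PC_TOPS

print(f"12 x 744 TOPS = {if_all_identical_pops:.2f} POPS (quoted: {CONSTELLATION_POPS})")
print(f"average per satellite: {mean_satellite_tops:.0f} TOPS")
print(f"top satellite ~ {ai_pcs_per_peak_satellite:.1f} AI PCs")
```

So the average satellite carries roughly 417 TOPS, and the flagship is worth about eighteen and a half AI PCs.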

High-performance computing in space? It’s no AI hallucination, it’s already here. There is a network forming in the sky. A sky-net, if you will, and I for one welcome our future AI overlords. They already have the high ground, so there’s no point fighting now. Hopefully this datacenter build-out will just be the first step on the road Gerry O’Neill and his students envisioned all those years ago: a road that ends with Earth’s surface as parkland, and civilization growing onwards and upwards. Ad astra per AI? There are worse futures.

NOT profitable, AI chips go obsolete in months.

As do the Starlink satellites. Why do you think they launch them so often? To replace the aging, obsolete ones with new ones. The same would happen for these “datacenters in the sky”.

And fill the upper atmosphere with aluminum vapor and god knows what else.

How about putting them in the ground instead.

I want physical media on the Moon as well—a LONG NOW orbital antenna farm far out, so Hypatia 2 doesn’t get skinned by an ASAT

I think the title should have been something like “A New Cloud in the Sky”.

The real issue here is probably that such servers may be more susceptible to solar events … and it is probably somewhat difficult to replace a component or blade in a failing server although I would volunteer to do it, provided I had the right equipment to open the “can” and do the work needed — plus a free ride to and from the installation. 😁

Sorry MrT, the maintenance position is a contract job so you have to get yourself to the site but just keep track of your mileage and you can claim it at tax time

That’s a good title!

Yes, solar events are a potential issue, but we’ve gotten better at hardening satellite busses against flare events over the years. As far as I know, not a single bird in orbit has been lost to a geomagnetic storm this solar maximum, but I could very well be wrong.

(As opposed to that bunch of starlinks that were lost right after launch.)

When you fill up the orbit with satellites, a random meteor shower is going to play havoc with your setup.

No, the real issue is exactly the same as it is for every project we currently do.

Maintenance.

There just happen to be dozens and dozens of other functionally impossible problems too.

Putting it in space seems like a terrible idea, at least if the functionality is really important rather than just a convenience boost. In this ever more cluttered orbital mess and increasingly geopolitically unstable world full of hybrid warfare, a satellite is rather vulnerable. For something like Starlink, with that huge cluster and the relatively short expected lifespan anyway, it isn’t such a big deal if anything happens to a few satellites: the service will be fine, or at least rapidly repairable, and the failed units are, for space hardware, dirt cheap. But a datacentre in space…

The only thing I really like about this plan is that they won’t rip out the hardware and throw a 1000 W GPU and a 500+ W CPU into every unit, with even more energy-intensive cooling to keep up, the way many places on the ground do now; hardware tends to get more powerful mostly by throwing more power at it. It’s a shame you can’t benefit from more efficient hardware upgrade paths as directly, but the fixed power budget and the near impossibility of accessing the hardware for upgrades should lead to a very predictable, self-contained lump that nobody else needs to plan around.

To be blunt, space is the last place you’d want to put a data center in. The environment is just too harsh for our current technology. Sure, the stuff might seem to work for a while, but bit errors will start creeping in and cascade, chips will die in unexpected ways, and eventually one is dealing with just a chunk of useless hardware with poisoned bits, and who knows what in storage. And if one mitigates these issues using space hardened systems, the inefficiency of it eliminates whatever benefit might have been gained.

Not to mention the beancounter’s concerns, such as a large investment in hardware that might be obsolete before fully amortized, given the steady advances in computing power down on earth.

That said, it’d be cool if they can make it work.

What could possibly go wrong?

Thermal management for a start

Aliens could steal our tech.

How could putting AI in the sky, networked together into a sort of sky-net, where we can’t turn it off be a problem?

I’m pretty sure tech bros know what they’re doing. When is the last time any of them did anything that wasn’t purely for the benefit of mankind?

100gbps doesn’t sound fast for a data centre.

100Mbps sounded fast for users 10 years ago. Not now. If this is launching in the 2030s, it’ll be obsolete before it’s launched.

We were installing multi-terabit links here in Tokyo before corona (a bunch of 100Gbit aggregated links) for backhaul. Now we fill multiple racks with 64 port switches with all their ports full of 100G optics in our fabric systems.

Nowadays 100Gbit is a standard everyday speed; we don’t even bother to make customer connections slower than that anymore, as the hardware is so cheap now.

Only the slow servers still run at 10Mbit, but they get sent off for scrap as we decommission them.

Well go the other way….down vs up…..submerge it in the oceans… free cooling, no rad hardening needed

I read a whitepaper like a decade and a half ago outlining combining a datacenter with an LNG terminal. LNG requires heat to fully gasify, which can easily be provided by the heat from the datacenter. Additionally, due to the amount of expansion, the paper also postulated that, in addition to the savings in cooling costs, the expansion could return something like 20% of the datacenter’s energy consumption. I’ve personally thought that combining one with a desalinization plant might work as well: use the heat from the datacenter to drive evaporation.

Power, cooling? Sounds like a job for a Hydro dam.

Totally agree.

You can have 10–25 kW for a regular rack, with up to 40 kW for a GPU rack. In space, this requires huge solar panels. ISS does 84 to 120 kW, and for all its size that is only about three GPU racks’ worth. I don’t think you would get enough power and cooling.

Data center near Hydro plant seems good, you could use the water for cooling directly. Doesn’t even need a pump any more.

You could probably use the thermal mass of the dam itself for cooling.

Of course, the environmental impact on the ozone layer etc. of launching thousands and thousands of rockets is totally ignored.

https://earthsky.org/space/ozone-layer-damage-increased-launches/

This is how 99% of green industry works. It is all just a bunch of industrialists wanting to sell absolute garbage at a much higher profit margin by saying it will fix the environment. And then they throw smoke bombs and chaff if you point out “No, you’re not doing what you’re saying, you’re just doing the exact same thing and using the opposite rhetoric…” Then they conflate you with climate denialists, as if the only options are “You aren’t actually helping, you’re part of the problem” and “The problem doesn’t exist at all.”

Very nasty people. I work in the industry btw. It is full to the ceiling with snakes and con-men.

Wouldn’t that be Gerard O’Neill? (Got both first and last names wrong. Did it go undetected because the parity errors cancelled out?)

Something like that. Fixed.

Is orbit out of reach of national regulatory issues and surveillance by three letter organizations?

Could it be a good location for data havens who want to be out of reach of certain nations’ rules?

The suchly-styled HavenCo in the self-declared sovereign entity of Sealand went defunct in 2008. Maybe the orbit-based reborn version should be called HeavenCo.

Sealand and the new EU patent court (which will rubberstamp software patents): https://ffii.org/eu-patent-court-will-be-located-in-sealand-ministers-say/

People who think putting a data center in space is a good idea don’t understand much about datacenters OR space…

It is basically the worst possible location for a data center that humanity can reliably get to.

“Basically” might not even be a strong enough word.

Are there actually any worse places that we can put things at scale?

I feel like the inside of an active volcano might be worse? But it might not be.

I guess putting one on Luna would be worse too? I dunno.

It would be a step better for maintenance availability but steps worse for communication delay. And then there is the problem of Lunar dust destroying everything. And you still have almost all the same problems of being in space.

2800 satellite supercluster computer. Equipped with lasers. Skynet indeed.

How is that ground-based laser-powered deorbiting system coming along now? We don’t want to have to start throwing rocks at them when they go rogue.

People should try working on an open-source anti-satellite tool and see how quick that gets a knock on the door

Sounds like a rocket company owner trying to gin up more business by floating stupid ideas.

This. There’s no way putting it in space is cheaper, and if you care about the environment instead of doing this greenwashing crap, as another comment says, put a big-ass solar field by your data center and use a closed-loop cooling system. (This disregards the environmental impact of mining the materials needed for the batteries for this.) Actually, why don’t data centers use closed-loop cooling? News articles make it sound like they just pour water down the drain. Does anyone know?

the news articles are stupid is why. Estimates for “water usage” have about the same basis as the estimate for plastic in the ocean.

Like the company (Astrocast) that raised a bunch of money to put up a cluster of satellites that would provide a space-based network for IoT communications. It was a laughable idea from the start, but they did manage to put a dozen or so satellites in orbit that are now turned off (they don’t even put out a beacon) because the company soon went bankrupt. The most annual revenue they ever generated was something like $350 thousand (not million).

Somebody somewhere probably made money on it at some point in time, though … buzzwords fleece the ignorant.

The panels on the ISS are massive, and give you 120kW.

A quick Google tells me a single medium-size data center needs 500 kW–2 MW.

This idea seems about as valid as the “circular runway” that made the rounds a few years back.

🤦

Problems

1. South Atlantic Anomaly https://svs.gsfc.nasa.gov/4840/ https://dailygalaxy.com/2025/03/nasa-is-tracking-a-massive-anomaly-in-earths-magnetic-field-and-its-getting-worse/ https://newspaceeconomy.ca/2025/05/28/the-south-atlantic-anomaly-earths-magnetic-enigma-unveiled/

2. Fuel. We constantly need something to discard in order to move and correct the position.

3. RF capacity. It is already more cost-effective to transport physical disks than to transmit this much data over slow radio (that is how China is circumventing US sanctions on AI science). https://www.wsj.com/tech/china-ai-chip-curb-suitcases-7c47dab1

“Sure, there’s also more radiation on orbit than here on earth, but our electronics turn out to be a lot more resilient than was once thought…”

NASA frequently complains about the hardware shielding and software redundancy needed to keep things functioning, as well as the unwillingness of the electronics industry to provide them with better radiation hardened electronics (otherwise, they quite rightly point out, they wouldn’t still be funding research into nanoscale vacuum-channel transistors). This is less of a problem in orbit around the Earth, as opposed to out in interplanetary space or further, but still a problem that gets worse the more complex your digital computing and the smaller your average semiconductor components get.

Ideally, we’d switch to some material that is inherently radiation resistant, such as gallium arsenide (like everyone thought we would 30 years ago) but between industry inertia and the already worsened tunneling effects in the current size regime, we likely won’t.

Veritasium had some good coverage of this when he was predicting a renaissance of analog computing, even citing how cosmic rays cause logic and memory errors in electronics on the ground before touching on the problems in space.

And while Starlink has been having some minor problems with shielding, their main issue the last three years has been space weather causing changes in drag and pulling satellites out of orbit (space being a true vacuum and the atmosphere staying a consistent height are two other myths about space).

Both effects (radiation and high atmosphere/space weather) are set to get worse as Earth’s magnetic field follows its general downward trend in field strength.

All said, I’ll be interested to see how the satellites hold up.

The landfill.

Not for one thing or another, but with all the Starlink and similar sats, the offshoot of massive numbers of low-orbit spy sats, and more and more countries gaining access to rockets, I am actually fully expecting that in the not-too-distant future people will start shooting satellites out of the sky.

I mean is it officially an act of war if you shoot down a commercial satellite that is used in wars against you in the first place? I bet that the US would do it and label it as ‘national security’ (which they think excuses anything these days).

I see it as something that will happen at some point.

So if you are in the media, you can start writing your tiresome BS pieces on the subject now, and press publish when it happens.