Has anyone noticed that news stories have gotten shorter and pithier over the past few decades, sometimes seeming like summaries of what you used to peruse? In spite of that, huge numbers of people are relying on large language model (LLM) “AI” tools to get their news in the form of summaries. According to a study by the BBC and European Broadcasting Union, 47% of people find news summaries helpful. Over a third of Britons say they trust LLM summaries, and they probably ought not to, according to the beeb and co.

It’s a problem we’ve discussed before: as OpenAI researchers themselves admit, hallucinations are unavoidable. This more recent BBC-led study took a microscope to LLM summaries in particular, to find out how often and how badly they were tainted by hallucination.

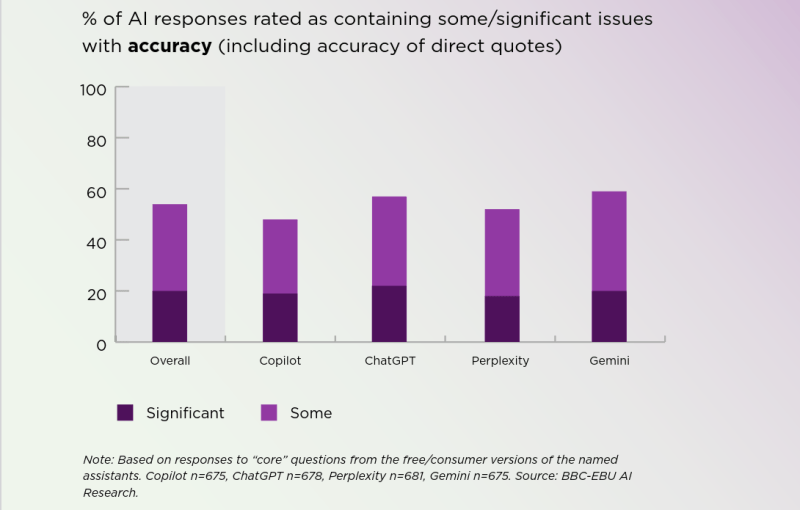

Not all of those errors were considered a big deal, but in 20% of cases (on average) there were “major issues”–though that’s more-or-less independent of which model was being used. If there’s good news here, it’s that those numbers are better than they were when the beeb last performed this exercise earlier in the year. The whole report is worth reading if you’re a toaster-lover interested in the state of the art. (Especially if you want to see if this human-produced summary works better than an LLM-derived one.) If you’re a luddite, by contrast, you can rest easy that your instincts not to trust clanks remain reasonable… for now.

Either way, for the moment, it might be best to restrict the LLM to game dialog, and leave the news to totally-trustworthy humans who never err.

I never trust clanks

That’s basically the plot for Deus Ex: Human Revolution :’)

I thought the plot was planned obsolescence

To think transhumanism reduced to such a simple plot. Cyberpunk 2077 did better, but still…

Given that the underlying reality is pretty discouraging, and the “news media” coverage of it is of such a low quality, who wouldn’t rather have 50% of their news media consumption based on fantasies instead? It’s an escape.

Would you trust a German newsman named Walter Disease?

OMG, yes Walter Krankheit, lol. Ok ok, close, very close.

well the germans trusted a guy named Lord Haw-Haw……….

LLM summaries are terrible because they ignore nuance like it was going out of style. As if the state of journalism wasn’t bad enough for them to include LLMs…

I don’t watch the news so this doesn’t affect me personally. Not on TV, don’t read the newspaper, not on the internet. Life still goes on, just without an artificially induced impending sense of doom…

News has unfortunately been absolute trash for more than a generation, correct. Journalists kind of ride on the prestige of people from half a century ago… As if the trade still had any honor or value to it.

I hate all the AI slop search results. I might search for “how to change a light bulb”, and the results always start with things like “why should you change a light bulb” and list many things related to light bulbs, but don’t actually say how to change one. It’s so many words, yet it says so little.

Not to mention “Find light bulbs in your area”, and “Cheap light bulbs shipped free”

Or the amazon search result for lite bulbs

That seems to be particular to Google. I’ve seen Copilot file the serial numbers off good tutorials with reasonable efficiency.

Or YouTube videos that are nothing more than an AI voice that reads some uncredited reddit or stackoverflow post with the answer (which often doesn’t work or is outdated).

The one that made me furious was when I was trying to figure out how to do something on my tablet and the search turned up an article with a title that basically said “How to do X”, where X was the thing I wanted to do. The article rambled on much as you describe and then concluded by saying that there wasn’t any way to do X. I wrote a politely worded comment calling them out on this, but over a year later it was still pending moderation…

It’s getting really bad. A terrible LLM article can maximize SEO and hit the top of the results. It hurts my brain trying to read the slop.

More likely you will get a thesis on the history of “The Light Bulb” with lots of repetition and pull quotes from web sites.

Now do the same analysis for news reporters.

Hard to tell how much is hallucination and how much deliberate BS.

For the BBC I’d say 40 to 60 percent deliberate and an additional 20ish percent ‘hallucination’.

That’s without them using AI, I’m sure they use it though, they all do.

This is great, we will have finally reached a point where nothing can be believed. Maybe only then would people be interested in the truth.

We’ve already run that strat a few times in history. Unfortunately it only leads people to be distrusting and dismissive of everything, and in general they stop believing that something like truth was ever possible.

As much as I am not a fan of the prevailing AI LLM equivalency cash grab, I have to concede that, today, Grok or ChatGPT could potentially, if all goes well, provide me with more useful news than Walter Cronkite can.

Though, I would likely consider both sources to be of roughly equivalent levels of reliability.

I got ‘news’ for you, he died in 2009.

The dickens you say.

I have to point out that these days deceased people release new books, and they are really ‘revealing’, the news (and youtubers) will tell you. But somehow I have some doubts about their reveals.

Walter Cronkite has not made an error or reported a misleading story in well over a decade. Can you say the same about any LLM out there?

I mean being dead for the last 16 years helps him with that record, but point taken.

His coverage of the Tet Offensive as a US defeat supposedly was another reason for then-President Johnson losing his enthusiasm about the mess.

Yes, he basically declared the war over and a defeat of VN and US forces. It was a major boost to the opposition and dead wrong. In truth it was a total defeat for the North and the NVA never went south of the DMZ after that! But the meme stuck and was latched onto by many others.

Warning – this article was written by an LLM!

I said in the early 70’s they’d be able to read aloud in different voices in the future and Walter was my most often quoted voice followed by Howard Cosell.

Real journalism has never been more valuable and less funded than it is now.

Good commentators/bloggers/vloggers often do more digging than most journalists who simply copy most news from Reuters and AP. Journalists often have no technical backgrounds and their reporting of scientific breakthroughs is often completely wrong. Most don’t even know the difference between power and energy. Nowadays everyone has a camera and now we can have people who are on the scene before the press. Sometimes witnesses prefer talking on a podcast over talking to the press.

LLMs can help in quickly gathering information. But it is quick and dirty since it makes mistakes. And won’t summarize what it hasn’t indexed. The quality control aspect has to be done by humans.

In the days of the stone age (before free unlimited access to any kind of information) this job, i.e., correcting journalists, was done by editors. Those people were few and far between, since it required proper education and prior experience working as a journalist, and not just the eagerness to share with the world what one had just learned (or copy-pasted from elsewhere without paying attention). Some editors conversed freely in a few languages and doubled as translators, and there was a reason some were paid like CEOs: they were as important as CEOs, and more than one (that I personally spoke with) had a proper degree in science in addition to a language major.

Disclaimer – my father-in-law was a professional editor who worked for major magazines and newspapers, traveled extensively, spoke freely (and regularly translated) three languages, etc. He also edited numerous books and all kinds of publications, etc. Compared with HIS breadth of knowledge and expertise (he also had a mechanical engineering degree AND worked as an engineer), 99% of the youtubers are aspiring middle schoolers learning how to do things on the job.

It is sad to state this, but this is our new era of amateur hour drowning everything else in noise. There is nothing wrong with the aspiration, but there is a lot wrong with aspiration not taking time to do its homework. An LLM is only an okay tool for the job, because it lacks one thing – SELF CHECK. It cannot properly examine what it just spewed forth, and I am appalled by the fact that humans have to do this task instead.

Obviously, there were editors and there were editors: some were good (like my father-in-law), some were okay, and some sucked. Obviously, some were screwing up what was written by good journalists, and the reverse was true too: sometimes terrible journalists’ writing was reshaped and corrected by good editors.

I tried DeepSeek and Gemini to solve a puzzle. Both were wrong but were very convincing when presented with their errors. Gemini gave up after a few tries, but DeepSeek kept trying without getting any closer to a correct answer. Maybe we need a modified Turing test to see if they are a smart human.

Since LLMs use human products, they are subject to human bias, especially on any topic with political implications. Example [I knew the answer to this was “yes”]:

Q: Is “illegal alien” an official term previously or currently used by US governments?

With Grok, the short, one sentence answer to that question automatically shown below it before hitting “enter” to actually ask the question is:

No, “illegal alien” is not an official term used by the US government.

When “enter” is hit and the question is actually asked, the answer is:

Yes, “illegal alien” is both a previously and currently used official term by the US government, including in federal statutes, court rulings, and agency documents. Here’s a breakdown with evidence:

[large number of examples]

In summary, “illegal alien” is an entrenched legal term of art in US immigration law, used for decades and still valid today, even as softer language gains traction in non-legal contexts. For primary sources, check the US Code on congress.gov or Supreme Court opinions on supremecourt.gov.

On any topic with no political implications, I find Grok to be outstanding. Even on those with political implication, the long form answers are fairly balanced with input from both sides of an issue.

In short, I trust long form Grok answers VASTLY more than I trust ANY news source or individual even on political topics. It just DIGS for the facts, presents them, and then -I- decide.

Sometimes, I ask for interpretations, correlations, and projections.

For instance, here’s a topic which should be of GREAT interest to those in the US that isn’t being covered AT ALL except on a few YouTube channels. At least the AI Flock cameras are getting some attention. Ask Grok without the quotes “Palantir + Flock + Ring + Clearview + Starlink + AI. Connect the dystopian dots.”

Here’s a preview of that dystopia as recently experienced by this poor lady. The quoted text is from the video of the cop at her front door. She only got the case dropped because she could prove via her OWN personal electronics’ surveillance of her movements after she left home that she wasn’t responsible for the theft:

Flock: “you can’t get a breath of air without us knowing” (5:15)

Louis Rossmann

Oct 29, 2025

https://www.youtube.com/watch?v=AoEQg1M92_E

BBC world service went downhill around 2000 when the last of the cold war funds had run out. It never fully recovered from self-induced myopia (regularly ignoring events affecting more than half the world population).

Meaning, today, comparing BBC news with LLM would be about as reliable as comparing “National Enquirer” with “NY Times”. Both have seen their heyday, and both are in the process of decline, neither one winning against them internets. Obviously, AI is not in the process of decline, far from it, but the results are about as convincing as the mentioned comparison.

I’ve long suspected half of the “National Enquirer” articles were generated with random words minced together through Markov chains by some kind of pocket TRS-80; nowadays one can do about the same level of random spewery using Arduino (or ESP32 – I nominate ESP32C3, since it is the cheapest kind that can connect to them internets directly, automagically, scheduled, etc). Before them internets similar results were accomplished by asking kindergarten kids and diligently writing down what they said; now they have been relieved of such duties, since AI can extract those directly from youtube videos with about the same results.
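For anyone who hasn’t played with one, here is a minimal sketch of the kind of word-level Markov chain generator that quip refers to. The corpus is made up for illustration, and the names `build_chain` and `generate` are hypothetical helpers, not anything from a real tabloid pipeline.

```python
import random
from collections import defaultdict

def build_chain(text):
    """Map each word to the list of words that follow it in the text."""
    words = text.split()
    chain = defaultdict(list)
    for current, following in zip(words, words[1:]):
        chain[current].append(following)
    return dict(chain)

def generate(chain, start, max_words, rng):
    """Walk the chain from `start`, picking a random successor each step."""
    out = [start]
    # Stop at the length limit or when the last word has no known successor.
    while len(out) < max_words and out[-1] in chain:
        out.append(rng.choice(chain[out[-1]]))
    return " ".join(out)

# Made-up sample corpus, purely for illustration.
CORPUS = (
    "elvis seen at diner aliens seen at mall "
    "elvis marries aliens at diner bigfoot seen at wedding"
)

chain = build_chain(CORPUS)
headline = generate(chain, "elvis", 8, random.Random(42))
print(headline)
```

Each word depends only on the one before it, which is why the output sounds locally plausible and globally meaningless.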

As far as “scientific news” goes, the spigot has been turned full blast on for more than a decade now, so with some virtual elbow grease one can find the original source without trying too hard. I am guessing it is down to the usual “whether one is willing to do his/her homework” and apprehend/understand what he/she had just found. AI is still a tool, not a replacement, even though it is a darn good tool (in some sense), so it is also down to how one uses that tool, wisely, not so wisely, etc.

The difference between an LLM hallucination and an outright media deception is the intent. I’d rather have the noise of random LLM blather, as it cancels out with enough samples, whereas the distortions caused by the lies of the mainstream media are insidious.
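The statistical idea behind that claim can be sketched in a few lines: averaging many reports removes random error but does nothing about a consistent slant. This is only a toy model. It assumes the random errors are independent and centered on zero, which is an assumption here and not something the BBC study shows about LLM mistakes; the numbers and the names `noisy_reports` and `biased_reports` are invented for illustration.

```python
import random
import statistics

TRUE_VALUE = 10.0  # hypothetical "true" quantity being reported

def noisy_reports(n, rng, spread=3.0):
    """Unbiased but noisy: errors are random and centered on zero."""
    return [TRUE_VALUE + rng.gauss(0.0, spread) for _ in range(n)]

def biased_reports(n, rng, bias=2.0, spread=0.5):
    """Consistent slant: small noise around a shifted value."""
    return [TRUE_VALUE + bias + rng.gauss(0.0, spread) for _ in range(n)]

rng = random.Random(0)
noisy_mean = statistics.fmean(noisy_reports(10_000, rng))
biased_mean = statistics.fmean(biased_reports(10_000, rng))

# The noisy average lands close to TRUE_VALUE; the biased one stays offset.
print(f"noisy mean:  {noisy_mean:.2f}")
print(f"biased mean: {biased_mean:.2f}")
```

If the errors share a common cause, they are no longer independent and the averaging argument stops working.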