One of the fun things about writing for Hackaday is that it takes you to the places where our community hang out. I was in a hackerspace in a university town the other evening, busily chasing my end-of-month deadline, as no doubt were my colleagues at the time. In there were a couple of others: a member who’s an electronic engineering student at one of the local universities, and one of their friends from the same course. They were working on the hardware side of a group project, a web-connected device which they and a team of several other students were creating from sensor to server to screen.

I have a lot of respect for my friend’s engineering abilities. I won’t name them, but they’ve done a bunch of really accomplished projects, and some of them have even been featured here by my colleagues. They are already a very competent engineer indeed, and when in time they receive the bit of paper to prove it, they will go far. The other student was immediately apparent as being cut from the same cloth; as people say in hackerspaces, “one of us”.

They were making great progress with the hardware and low-level software while they were there, but I was saddened by their lament about their classmates. In particular, it seemed they had a real problem with vibe coding: they estimated that only a small percentage of their classmates could code by hand as they did, and the result was a lot of impenetrable code that looked good but often simply didn’t work.

I came away wondering not how AI could be used to generate such poor quality work, but how on earth this could be viewed as acceptable in a university.

There’s A Difference Between Knowledge And Skill

I’m going to admit something here for the first time in over three decades: I cheated at university. We all did, because the way our course was structured meant it was the only thing you could do. It went something like this: a British university has a ten-week term, which meant we had a set of ten practicals to complete in sequence. Each practical related to a set of lectures, so if you landed one in week two which related to a lecture in week eight, you were in trouble.

The solution was simple: everyone borrowed a set of write-ups from a member of the year above, who had got them from the year above them, and so on. We all turned in well-written reports, even though for around half the term we had little clue what the practicals did, because we’d not yet been taught the theory. I’m sure this was common knowledge at all levels, but it was extremely damaging, because without the practical to back up the lectures, whatever the subject was slipped past unlearned.

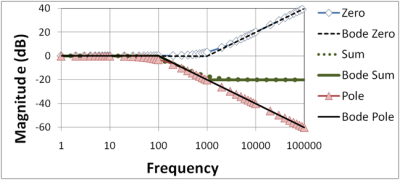

For some reason I always think of poles and zeroes in filters when I think of this, because that was an early practical in my first year, and I had no clue about it since the lecture series was six weeks in the future. I also sometimes wonder about the unfortunate primordial electronic engineering class who didn’t have a year above to crib from, and how they managed.

As a result of this copying, however, our understanding of half a term’s practicals was pretty low. But there’s a difference between understanding, or knowledge, and skill, or the ability to do something. When, many years later, I needed to use poles and zeroes, I was equipped with the skill as a researcher to go back and read up on them.

That’s a piece of knowledge, while programming is a skill. Perhaps my generation were lucky in that all of us had used BASIC and many of us had used machine code on our 8-bit home computers, so we came to university with some of that skill already in place. Even so, we all had to learn the skill of programming in a room full of terminals and DOS PCs. If a student can get by in 2025 by vibe coding, I have to ask whether they have acquired any programming skill at all.

Would You Like Fries With Your Degree?

I get that university is difficult, and as I’ve admitted above, my cohort and I had to cheat to get through some of it. But when the cheating affects a fundamental skill rather than a few bits of knowledge, is that bit of paper at the end of it worth anything at all?

I’m curious here. I know that Hackaday has readers who work in the sector, and I know that universities put a lot of resources into detecting plagiarism, so I have to ask: surely they know students are using AI to code, so is this something the universities themselves view as acceptable? And if not, how could it be detected? As always, the comment section lies below.

I may be a hardware engineer by training and spend most of my time writing for Hackaday, but for one of my side gigs I write documentation for a software company whose product has a demanding application that handles very high values indeed. I know that the coding standards for consistency and quality are very high for them and for companies like them, so I expect the real reckoning will come when the students my friends were complaining about find themselves in the workplace. They’ll get a job all right, but when they meet those two engineers, will the question on their lips be “Would you like fries with that?”

In Germany, at a lot of universities the programming exams are done on a piece of paper with zero electronics involved. This is kinda damaging to the ones who actually can code but forget stuff like imports and some semicolons, because the IDE normally tells them. But those who have no idea how to code will be visible.

In France, they have a new Prime Minister, Lecornu, who claimed a law degree he never finished.

Some of our ministers in Germany got sacked because of cheating on their PhDs. I didn’t grow up here, and at my uni back there there were quite a few cases of cheating. Some got sacked; most got better grades than me. I couldn’t do it, I started laughing out loud… learning the subject was easier.

Ooof, that’s rough.

I am one of those oddities who for the most part use a text editor to write code (there are exceptions, but for Python, Go, and C I only use a text editor). So I often miss semicolons and such, then when a compiler complains I go “oh yeah”.

Notepad++ user here, or at least was until I killed off my last win10 machine.

I don’t know why but I find it easier to use than most IDEs.

I guess I’m going to need to learn a new approach now..

A good text editor that you’re very familiar with is a better tool for actually editing code than the built-in editor in an IDE. IDEs have keyboard shortcuts for non-editing functions. They have other things cluttering up the interface. And you can use the same editor across different operating systems and programming languages making it easier and more cost-effective to learn it deeply.

I would top that up with:

A very good text editor used right can fulfill a lot of functionality that a full blown IDE provides. Not 100%, but a lot of the daily editing can be simplified by getting somewhat proficient in Vim.

And that transfers to IDEs, because most of them support (a subset of) Vim key bindings.

The only text editor with enough functions to require an entire keyboard worth of shortcuts is a GD operating system.

Fortunately it includes a decent text editor.

Notepad++ is quite reliable under Wine on Linux (at least up until version 7.5.1, haven’t tried any newer ones under Wine).

Last I saw, Notepad++ was open-source. Somewhere someone has written a shim library that provides the Win32 system calls and lets you #include “windows.h” but compile for a Linux environment.

I can’t remember what the library was called, I came across it several years ago on Github but never did a git clone on it…

I did that in high school: writing C by hand on an exam sheet.

The compilers at the time were less than helpful, throwing up a bunch of unrelated errors and not pointing out what might be amiss by even attempting to detect it, so you quickly learned to check all your semicolons and brackets were in place before doubting your code. That meant the hand-written test was a doozy.

Write out Fortran on coding pad, review with teammate across the desk. Then, take over to keypunch machine and crank out punch cards which have no text on them. Take cards to datacenter window and hand to clerk who will load the cards into hopper along with a dozen other decks. Wait an hour or so, then come back to output window and retrieve listing to see if program compiled and review errors. Repeat until clean compile.

Ah! I remember those days back in the 1970s.

And always ask for a punch deck with sequence numbers by 10s on the deck so that when you dropped the deck you could run it through the sorter to get the cards back in order (doing it by 10s to give you room to insert more cards when it didn’t work.)
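As a rough illustration of why numbering by 10s helped, here is a minimal Python sketch of the idea. It is an assumption-laden model, not any real card-handling software: each card is a hypothetical (sequence number, statement) pair, a patch card numbered 15 slots into the gap between 10 and 20, and sorting on the number puts a dropped, shuffled deck back in order, much as the card sorter did.

```python
import random


def restore_deck(cards):
    """Return the cards ordered by their punched sequence number."""
    return sorted(cards, key=lambda card: card[0])


# Hypothetical deck numbered by 10s, leaving gaps for later insertions.
deck = [
    (10, "      PROGRAM HELLO"),
    (20, "      PRINT *, 'HELLO'"),
    (30, "      END"),
]

# A fix-up card added after a failed run fits into the gap between 10 and 20.
deck.append((15, "C     ADDED AFTER THE FIRST RUN FAILED"))

# Dropping the deck scrambles it; sorting by number puts it back in order.
random.shuffle(deck)
for number, statement in restore_deck(deck):
    print(f"{number:08d} {statement}")
```

With gaps of 10 you can insert up to nine cards between any two originals before you have to renumber the deck.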

They’d pre-punch line numbers on the cards for you, Wheels17??? (How did that even work? Typos?)

Luxury!

We had to make our own cards out of used Lucas car parts boxes.

Even we knew to draw a diagonal across the top of the stack with a marker.

We used to dream of automatic card sorters.

The closest we had were younger siblings, when they had time, since they only worked 14 hours a day at the mill.

Back in the early 1990s, I had to write System/370 assembler code for IBM mainframes by hand using pencil and paper. For one exam, we even had to write out everything as hexadecimal object code.

My first program on a System/370 was a “Hello World” that printed gibberish.

A rude reminder of EBCDIC (Every Bloody Codes’ Different, IBM Crap).

Here in the Netherlands, I’ve had several exams like that, but we were always allowed to keep whatever notes we wanted. Some students even printed out C reference documents and whatnot, hoping it’d help them.

In the end the teacher didn’t even grade the work based on absolute correctness, but on how well you understood it and whether your solution would’ve worked after making it compilable.

Of course this kind of exam stresses out students who don’t know what to expect, but in my experience these exams were actually the fairest way to determine one’s programming skills. Later in life you’ll probably end up in front of a whiteboard during a job interview, and you’ll be prepared.

Open book exams were the worst.

Except the take home exam.

Only had one of those, but F me, it was a bitch.

Fields/antenna design.

Only possible in Jr/Sr level courses with small classes.

In Poland too.

What was the prof’s answer when I asked him why the hell we had to write the code for our C++ assignments on A4 paper, scan it and then e-mail it to him? It’s pure nonsense.

“This way you’ll memorize the syntax.”

F… you. It’s been 15 years and I still haven’t touched C++ since then.

I’ve done C, assembly for various MCUs, Python, Java, some Bash, and now I’m transitioning into farming and a small-time machining workshop. I just can’t get myself to ever again do

#include <iostream>

or something.

That is horrible, but also true. People who take notes remember better than those who don’t, but even a highlighter on reading material helps some. If your notes are basically a transcript of a lecture and you never read them again, that still helps a lot. Studies have consistently shown that handwriting works better than typing, though I don’t know how well they control for even the obvious confounds. (Most of the study subjects had probably done enough handwriting that they didn’t have to consciously think about letter shapes as often as they had to glance at the keyboard.)

I always get reminded of missing semicolons….

At least 25% of my commits have a comment like “fix typos”.

Use pre-commit? Not going to help for docs, but it will stop you committing code nonsense!

Better to have a merge stage in the workflow. You do want the pre-cleanup parts to be saved and even committed while you are still developing.

“git commit --amend --no-edit”

It will add your modifications to your previous commit.

Oh, also, wait before pushing to the remote when you commit, so you don’t have to “git push --force” ;)

Yes, we switched to paper and pencil exams in at least some of the programming courses in my department, including one of the ones I teach. They’re not pleasant for the students to take, they’re certainly not pleasant for me to grade, but they make it rather obvious who’s been outsourcing all their work on assignments to an LLM.

Obligatory XKCD…

https://xkcd.com/3160/

I need to try this…

Perhaps the new skill on the block is getting AI to generate good code.

Once this is mastered, we might have better-than-human code?

That’s not a skill, any more than entering prompts to make AI ‘art’ makes someone an artist. If a machine is anywhere in the productive process, it cannot be art.

So the digital pixel-art image I personally created using my computer as the canvas, and then printed on my printer to frame it and hang it on the wall, is not art to you, since there are two machines involved in the productive process? Confusing.

I really hope you mean the creative process.

It’s not playing a key role in that example, it’s simply replicating something a human can do by hand. The point is that you are still being creative.

The ultimate test is creativity. You can imagine a car painted in the style of Van Gogh, for example; a computer could not do that on its own and would need to be shown it in order to reproduce it (which is what AI ‘art’ is doing, just stealing, copying and pasting).

Is the creativity not in the origination of the idea, then? Because if it’s not, you’ve negated the value of pretty much every piece of art and code that was inspired by another…

Ugh, posted too soon by accident, that’ll teach me to not put my phone in my pocket before I’ve finished.

I agree that AI art is wrong but from a philosophical point of view, it’s as much a valid piece of art as many others (financial value, value to the art world and human culture notwithstanding) and thus disagree that the machines or tools used negate the value of the output as art or even valid code.

If that were the case, then any piece of art, music, etc. drawn, painted, or sculpted after the first person saw a cow and used a brush, a chisel, pastel, charcoal, etc. would be of zero value, because the artist used the same tools and subject as someone who’d gone before.

So there has to be artistic value in the idea and the formulation of the technique used to bring it to life.

Therefore you could argue that the person who ‘configures’ the AI with a prompt and iterates on that configuration to achieve their vision is the artist, no?

The masters used to take apprentices who learned techniques and often copied works of the masters, does that make their later work less valid?

I thought art was defined if someone liked it.

The test I like to use on art is whether there’s intent in it. Art, no matter how produced, conveys some idea or emotion or message, point, that comes from the artist. Art communicates on some level. Think Marcel Duchamp’s found object art: “…an ordinary object could be elevated to the dignity of a work of art by the mere choice of the artist”

The way in which AI is not producing art is in the fact that while the user may give it a prompt to produce something, the choice of what comes out is made not by a mind with an intent but essentially a random number generator, so the outcome is the choice of no-one.

An artist can still pick from randomly generated images and say “This is it”, but the choice there becomes a question of whether the piece was chosen simply because it was offered by the machine. To make it art, the artist – the person – needs to define the meaning.

In this sense, when a person just picks from the output of an AI generator and says “I like this”, they’re not producing art but Kitsch: something which looks like it has meaning or intent but it’s really void and doesn’t communicate anything. It merely pretends to be art.

For instance, Duchamp taking an ordinary urinal, turning it on its back and signing it “R. Mutt” was basically flipping the bird at the art establishment and saying “Look what I can do – what are you gonna do about it?”.

And that’s what made it art. There was a point in it, why it was chosen. Someone else playing the same game and plopping a piece of old lavatory furnishing on a pedestal would be an imitation without original meaning from the artist, so the trick doesn’t work anymore as-is. At least you have to tell the same joke better if you want to pull it off.

Or, think of James Burke walking down the length of the Saturn V rocket lying on the ground, talking about rockets, and ending his walk-and-talk with the actual Saturn V launching in the background. One take, perfectly executed, never to be replicated. What’s so impressive about that is that they managed to pull it off just so, and that puts you in awe of the audacity: what a great trick that was! Then you realize you’re looking at the rocket going up, in that state of emotion, and realize that was exactly what they wanted to communicate. Do you see what I see when I look at this rocket going up? Maybe you didn’t get it at the moment, but you felt it all the same.

If it was done using film trickery and clever cuts, or later with computer graphics, it wouldn’t have worked because of the lack of authenticity, even if the intent was there. With AI, everything it makes lacks authenticity because it can randomly generate just about anything, and it lacks intent because it is randomly generated, so you see in it what you want to see and feel what you want to feel by coincidence. The “message” doesn’t come from any artist, but from you, the viewer, which is the point of fake art or Kitsch. In the same sense, people passing off AI generated imagery as art is like selling an empty bottle of expensive wine – if you buy it, you have to supply the wine yourself.

Simple test.

If I could have done that, it ain’t art.

Creative?

Define.

For most people using the word it’s just an excuse ‘I’m a creative!’

Every turd is a unique creation.

I’ve heard a GD tech writer declare that ‘the team will be better now that there is a creative on it’.

He was there to write the instructions for a system the rest of the team created.

The point there is that you would have done that.

That is not art because it’s imitation; artifice. Copy.

You could very well produce art, just not when you’re coloring by someone else’s numbers. That’s all the AI can ever do: it relies on a sort of consensus of what you mean by your prompt, dictated by the data it has been trained on, and produces something that makes you believe this is what you wanted. It removes the artist from the equation and replaces them with something that merely imitates art.

Basically, what you’re doing by prompting the AI is, you’re telling it to pick a bit of this and a bit of that and mix them together in a random fashion.

You would certainly be doing that as an artist yourself, but even the most kitschy derivative artist cannot help but include some of themselves, their own choices and reasons, into whatever they’re making. We can then ask, why is this or that? What made the artist choose just so? What does it mean? We may like or we may hate the answer, but the question still remains to be answered. We see there is something beyond the object itself that we can recognize as intelligence, meaning and purpose, or an individual perspective of the person.

The AI replaces all that with a random number generator and a statistical algorithm that says it must be so because the average of the existing examples is so. Asking the question reveals the same thing as with any other AI, like a chess playing robot that randomizes a billion plays and picks the strategies that happen to win to use against its human opponents. There was no idea or perspective after all, just dull luck.

Dude:

By your definition code is ‘art’.

So is framing a structure.

Anything beyond replacing a part with same part.

Artistic pipe routing?

Frankly, too much code is ‘art’.

If it means everything, it means nothing.

Sounds simple at first, but is it? Aren’t we biological machines too, to a certain extent?

And if not, how exactly do we define the difference here? These things can get philosophical really quickly.

I mean, we could jokingly say that in the future LLMs will do the “liberal arts”, so humans can focus entirely on field work (hard, manual labor). ;)

Please see above. A machine doesn’t understand; it cannot create. Try to get an AI to imagine a pink elephant and make an image of it without a pink elephant being in its training set. You can’t.

Hi, no offense, but did you even mentally comprehend what I tried to imply here?

Proving consciousness or a soul is a near-impossible task.

We can’t even do that for ourselves so far.

Yet same time we agree on the general idea that people around us have consciousness, too.

So from a philosophical, ethical or moral point of view, there’s the question of whether we shouldn’t speak in favor of the accused when in doubt.

Granting an A.I. rights “when in doubt” is more humane than denying them, after all.

PS: There’s an episode of TNG that mentions the matter.

https://www.youtube.com/watch?v=WkO9yDCW2n4

Voyager had one, too, when the doctor fought for his rights as an author.

https://www.youtube.com/watch?v=m6Bi7tJK-j8

Sure, that’s “just” science fiction. But the fundamental dilemma is the same.

At which point does a “set of algorithms” become a consciousness?

And how does someone figure that out?

And at which stage exactly is young human life sentient?

These are some questions of our society.

Another circumstance is, I think, that LLMs have only just started.

Who knows how they influence the world in the next few years after they have passed a few generations..

I’m a bit worried, to be honest. 😟

It could change the value or whole meaning of work and change human society as such.

Because the current model of capitalism is basically based on needs, goods and human exploit.., err, labor.

Your pink elephant example is badly flawed, try asking someone who’s never seen or heard of an elephant to draw one, they can’t, because it’s not in their ‘training set’ either.

@CJay UK

Indeed, that is an often given reason for why medieval bestiary and map marginalia is so entertainingly bonkers – a description of the creature as interpreted by the artist, who likely didn’t even get to talk to the person who wrote the descriptions, as the map is likely made of data from many sources, many of whom are likely already dead…

I raise you a black swan.

Congrats, you just became subject to repeating history.

Dude, according to your definition, no one has done anything in the last 80-100 years lol. What makes an artist? Anybody can draw. Do racecar drivers need to run the races instead to earn your merit? Glad you are not in charge of the world lol. It must be exhausting walking everywhere and not touching most of the world around you… Just wow. Now, if you had been all up in arms about people not attributing their work to AI when applicable, I would totally have your back. But in your world, people who use word processors are not writers, and DJs should be yelling louder instead of using antennas, etc. Thanks for that, it was a fun mental exercise :)

Then most art does not exist at all, because any implement that could be used to create it could probably be considered a machine, such as a pencil, an ink pen, or definitely a camera.

Tell me more about how you make your pencils. And paper.

For purposes of the current discussion, you need not detail how you made the saw blades to fell the trees.

But does coding have to be art, or is it enough for it to be engineering?

Engineering is a multidisciplinary field including art.

Your remark evoked another thought… I wonder… Ultimately, what good is a programming “language” to an AI?

If we allow AI to optimize workflow to its logical conclusion, why wouldn’t it ultimately produce binaries directly from our design prompts, as opposed to source code?

I get it… source code lets you intervene to understand/approve the underlying mechanism that the AI came up with, but it also presumes there is a human programmer interested in reviewing the code.

People are lazy and corporations are greedy, never missing an opportunity to seize on a cost savings by eliminating a human someplace. Put another way, corporations are perfectly content with the production of mediocrity so long as it benefits stock price.

I think we’re looking at a future filled with crapware.

“If we allow AI to optimize workflow to its logical conclusion, why wouldn’t it ultimately produce binaries directly from our design prompts, as opposed to source code?”

Because we ask it to, and because we know it’s flawed, so we want to be able to intervene. I do wonder whether one might be able to produce binaries for something like a C64 or Atari VCS, where there are far fewer levels of abstraction between the language and the bare-metal hardware…?

The difference between a programming language and bare-metal instructions is basically just one or two layers of abstraction to make the logic easier for a human. So if the AI can’t write good source code, it almost certainly can’t write good machine code either, as the two are so closely related.

The only way to write great machine code and terrible C/Java/Python/Cobol(etc) is if the AI can get the logic of the program flawless, know about all the variations in hardware it would need to write good machine code for the targeted device and somehow doesn’t actually know anything at all about how to translate that logic into the more human readable language.

Had a discussion recently. Basically, what it came down to was that programming as a profession would be more like architecture than anything else: a higher level, which AI would be more suitable for.

When we eventually get computers to understand English, we will discover that managers can’t communicate in English.

I have already discovered that if you put two or more questions in an email, a manager will only answer the very first one. Emails with managers have become more like texts, because they are unable to read.

In fact the multiplication of managers in society is the root of a lot of problems, including our trash education system. And medical system. And politics. It’s a whole thing. We produce way too many of these ninnies.

No. Just cheaper. AI isn’t about better, it’s about mass-producing formerly bespoke mental work.

Well, in my university I think it is basically necessary to cheat in some subjects. They have systems to keep people from cheating (I know because I talked with the guys who set those systems up, and I have used them in some subjects), but they don’t even stop people from accessing an LLM webpage from the browser while doing the practical exam. Like, you’d better not get caught, but they don’t even try to block DNS requests if you do it.

If you don’t cheat, the exam becomes really hard to pass in the time you have to do it. I consider myself a good programmer, and I like to write VHDL and C, I do understand everything they show in the university.

But when you start the test, and the compiler decides not to work because you set up the project wrongly, or you are not used to using fcking Windows and it starts behaving strangely in the exam, you’d better be prepared.

I will admit it: I have uploaded the projects to my GitHub and downloaded them from the internet in the middle of the exam. They don’t disallow it. But looking at the statistics the university publishes, I can see that most people don’t prepare themselves like this for the exam, and then they fail. I have seen people crying in the exam because shit stops fcking working or the keyboard stops typing. And in an hour you barely have time to finish the assignment. I have to say, the exams in the subjects I am talking about are of the “all or nothing” type. Like, your code can be perfect, but if it doesn’t upload to the uC for whatever reason, you will fail the subject and the professors won’t even look at your code.

I guess the strategy they have taken is: if they can’t stop cheaters from cheating, they will let everyone cheat… although it is not allowed, and most people try to play fair.

Tests are supposed to be hard. If everyone got an A, the result would be saturated and show no difference in skill between students. If everyone passes, the test failed to exclude those who haven’t met the teaching goals. Some people, a good number of people, are supposed to fail the test at least once.

Punishing the test taker for what they did, such as setting the project up wrong, or not knowing how to use the tools they teach in the course, is valid. Equipment malfunction, like a keyboard that stops working, is not, but you still can’t let the student pass without showing competence, so they have to retake the test anyway. That’s unfortunate, but you can’t lower the bar for everyone else because of that.

But, there’s a constant tug of war between professors who don’t want incompetent and lazy people to pass, and university administration who want to push everyone through no matter what to keep the graduation rates up, since everyone has to graduate for one reason or another whether they actually learn anything.

Ultimately, a university that forces everyone through the courses by dropping the bar until they pass in minimum time will fail its purpose, because it will be filled with students who have no interest in the subjects, not enough intelligence to understand them even if they did, and the diploma becomes a piece of toilet paper. The fact that people find it difficult and some drop out is the point: to separate those who can from those who can’t.

I mean, there is of course the more “humane” approach where you help the students along and give them half a day to muddle through the exam by trial and error on the spot.

The effect is that the good students will still complete the task in under an hour, while the incompetent ones take the entire time and still only barely pass. The longer you give it, the more of the incompetent ones manage to wiggle through, but it’s diminishing returns, and the value for the students passing in this way is pretty close to zero.

Sometimes I’ve seen students come in, sit down for four hours, and return a paper that grades at zero points. Why even bother coming in if you have not studied at all? There are students turning up to lab practicals not knowing what course they’re on. What’s up with that? We’ve had to add entry exams to the lab sessions to keep them from wasting everyone’s time, but unfortunately a few still try to cheat on those as well. Then they sit through the lab, have their partner do all the work, and just write down the answers.

It’s because the student body is a shifting mass that shapes itself around the requirements you set. People coming in to the course form a sort of a bell curve around the level of difficulty you set. Some are brilliant, some are absolutely terrible, and all you can do is shift the average to one side or the other by the difficulty you set. Students always complain that the course is too hard, and they say people are avoiding the course because they know it’ll be too hard, but that’s the point. It’s not sadism. Responding to that by cheating, because you “have to”, is cheating yourself.

Except, just because they do not easily grasp or, indeed, grasp at all your specific class does not mean they are not proficient in some other aspect of the field and will excel in industry. I did not take computer science so maybe it’s different there, but my engineering courses had a wide array of topics to master. From organic chemistry to modern methods for solving differential equations for engineers. Just because someone is phoning in one of those classes doesn’t mean they are useless at all the others.

Organic?

The infamous memorize and regurgitate chemistry course?

Where all the As are hogged by great memorizing medical students?

I hope you were a Chem E.

Comp Sci comes in 3 flavors:

From the Engineering school.

From the Math (Arts & Sciences) school.

From the Business school.

Only the first is really worth considering.

The math type produces theoreticians who think actually coding is dirty.

The business type just produces morons.

Tests that require cheating are like when someone asks for ‘ten years of experience in a one-year-old technology’.

You say: ‘Of course, I have decades of experience in that.’

‘That’ being ‘Claiming experience, then learning by doing on the clock.’

It’s not cheating, it’s ‘optimizing your process’.

Tests that required cheating were for required, useless classes, in my experience.

Saw them much more in high school than in college, but Jesuits explain that.

That may be true, but the point of the class is that they would, so they could benefit from this specific knowledge and skill. That is why it is done.

In the end, it is the students’ choice to pick what they find important, and the educators’ role to offer what is deemed important by themselves and others, and the educators grade the students according to whether they have taken what was offered.

A degree then signifies that the student meets some minimum of criteria, which are important to those who choose accordingly. The alternative is to take “certifications” in all the different subjects separately, or to be tested for particular competences afterwards, which is also possible but very cumbersome for those who wish to hire.

If universities were “ideal”, people would come in and pick whatever classes in whatever subjects, at whatever level of difficulty they want. Everyone would pass – and they would get a degree that signifies their particular competences.

But in reality that would result in millions of people who take the easiest classes and pass with a degree that says they’re pretty much incompetent for anything. Everyone would have a PhD and it would mean absolutely nothing.

Just out of curiosity, what fraction of students at your university were from other countries?

Many people say that learning BASIC in the 80s did not help with higher-level languages. As if BASIC corrupted something in the brain and now I cannot understand C++, or so it goes. Me included. I was pretty good at Java, though.

I had the interesting experience of starting college later in life right before LLMs exploded, so I got to experience the before and after, having never written a line of code before. I’ve tried ‘vibe coding’ but found it’s like every overhyped LLM trick: it looks kind of cool at first but falls apart when you try anything complex. That said, I use LLMs when coding; they handle the routine stuff well enough with quality checks in place. I tell new students that if you know how to ‘code’, LLMs are great; if you don’t, they are a nightmare. The thing is, most of these students will be fine in industry. I consult with one of the largest companies on earth, and they constantly push their managers to increase AI use and adoption, going as far as setting weekly use quotas. The students who figure out how to meet the end objective with LLMs will pass and eventually become product managers with mediocre engineering skills. It’s up to the schools to make the assessments that ensure engineering skills are being cultivated. Like in your example, students have always flowed, and always will flow, like water to the state of least effort, using whatever tools get them there. Is any of this good or bad? I have no idea. I’m personally a data engineer who hates LLMs and uses them extensively, which doesn’t make a lick of sense, so I don’t know.

That’s called placebo engineering. Please the managers and you get promoted to a position where you’re no longer directly responsible for the engineering of the product, so your lack of skill in the trade is no longer a problem.

This is a variation of the principle of getting “fired upstairs”, where a person who is incompetent at their job but cannot be disposed of because they’re a relative or family of someone important is simply promoted out of the way to a position where they’re no longer a problem to the company.

Whether you’ll be any good as a manager is of lesser importance, because you can delegate most of the work to the people underneath you, which the upper management sees as efficient leadership and they might promote you even further. That’s how you end up with the worst sociopathic college-dropout twits at the top of trillion dollar business empires.

Unless you refuse promotion, you too will end up at your level of incompetence.

The Peter principle never stops.

You forgot the last part, where the higher up you go the less you matter to the day-to-day running of the company. All you have to do is not interfere.

That’s why in well functioning democracies, the president or the king has no input in politics – they’re just a public mascot.

Last part?

Must be a corollary.

There are many.

Peter principle applies to all levels of all hierarchies, not just companies and not just at the top.

The full idiot is also at her level of incompetence working in HR.

The point about being at their level of incompetence is that they just can’t leave things alone.

They think they know.

But nobody knows what they don’t know.

Confidence is a job requirement.

What % of the confident are competent?

There’s also the noted corollary about people at their level of incompetence knowing it at some gut level.

Hence surrounding themselves with even more incompetent people, to better hide.

Admittedly I use AI to assist ME in coding. It’s more for taking my previous code and tweaking. I am always guiding the build and NEVER have I had AI code anything that worked without some amount of adjustment.

Will AI coding be obvious? Yes. It does not work well out of the box for me, and unless you’re doing some basic things it will show itself. For third party integration, it is far from useful without a conductor to guide the ideas and results.

Vibe coding, like Google/Stack Overflow reliance, is a gift and a curse. To the newbies it feels like a library of answers; to professionals it is shortcutting and lazy. Unmaintainable code is always the result of this strategy. Vibe coding should be considered plagiarism, much like copying and pasting Wikipedia articles without citation is. The point of coding is not always runnable code, but to demonstrate the knowledge and proficiency gained.

IMHO, wrong task.

AI, build me a house for under $200K in the location of my choosing, at the size of my needing. While at it, find me a job as a CEO of some kind of large corporation with no responsibility for its actions, or win me a lottery, your choice.

It’s a large language model, not a genie.

Though replacing CEOs with an LLM and a generative deepfake seems possible in the short term, and could be a VC’s wet dream.

And it might well actually be more functional, as the largest body of text about CEO-type folks is going to be about how awful they are, and how they need to stop trying to micromanage the coal-face workers whose jobs they don’t actually understand… And the second largest is likely the notes of the discussions the board and investors have had. But being an LLM, the two don’t actually have to have anything to do with each other, as it doesn’t have that ego or an actual ‘grand design’ it wants to achieve, nor any real logical consistency.

The LLM doesn’t use the text to take a lesson – it uses the text to fill in blanks. If the majority of text is about how CEOs are awful jerks, then that’s how the LLM will turn out to be when it fills in the blanks of how it’s supposed to behave, except where it randomly inserts unhelpful advice from the self-help books written by managers who believe they’ve got the right idea simply because they’ve made lots of money.

Yep, CEOs are already using AI for all kinds of tasks, just they are (most of the times) not the ones pushing the (computer) buttons.

Heh, what CEOs are always afraid of is one of their vice presidents becoming way too smart and shoving them off, directly or indirectly. This happens all the time even without AI, and has been happening for centuries, really (Ancient Rome: “Et tu, Brute?”). CEOs naively think they can always fire those they don’t trust, but good luck firing an AI that runs for (mostly) free and has ways of changing things that humans didn’t (or couldn’t) anticipate. This is not AI-mitigated danger, this is systemic failure baked into the top-down hierarchy; it’s just that now the protagonist may turn out to be not human.

Replacing most of the upper-crust “managers” is a simple matter of accepting the very well-known truth that present-day “management” didn’t appear as a response to any particular business need. It grew by itself out of its own desire to grow, and it is mostly a cancer, diverting mission-critical funds to its own needs, etc. This was stated way, way back in the late 1980s (I’d say Peter Drucker, but he was mostly probing the surface with similar statements). I am pretty confident AI will figure this out the same way humans did, and The Fat will at some point be trimmed, whichever way it is done, but by command from the investors. This is where things WILL get interesting, with the trickle-down benefits FINALLY reaching the average Sam’s Bottom of the Foodchain in an indirect way (though, obviously, all kinds of leeches will try to siphon these away, but we’ll see where and how that goes in the meantime).

Sometimes being a bad CEO can pay off. Particularly if you’re a co-owner of the company as well.

For example, you can create a startup company, merge with a similar startup company with a more mature product and a bigger customer base, and remain its vice CEO with significant shares. You then start to interfere with the company’s product and engineering, such as by insisting that the company’s production servers should all switch to using Windows instead of Linux. That would start a war with the engineering board, and to get you out the other owners have to buy you out to get rid of you. That’s a seller’s market: you set the exit price.

Get yourself fired and make profit out of it, but keep taking credit for the company’s success and play a martyr. I wonder who that might be?

RE: failed CEO: you just described how MBNA collapsed from within, except MBNA had the market and the talent, and it was BoA that didn’t (and also badly needed a cash infusion; MBNA had stashes of cash). BoA bought MBNA, and BoA’s CEO is now the star of the dog and pony show; you can find the names if you are interested. One of the two dimwits who destroyed MBNA now teaches at UoD, business major, what else.

I’ve got more stories like that; try GE Appliances in the 1990s (yes, I observed how it was done, and even personally met with one of the vice presidents; that was the first time I heard “your jobs are safe”, and like hell they were). GE’s financial wing was what saved GE from the Sears fate, plus military contracts, but the story is the same: Jack Welch gutted GE in the 1980s, and the rest of the companies decided to self-immolate too, with mostly disastrous results (Kmart, which basically ceded the US market to its only rival left standing, Walmart; gone are Woolco, Jamesway, etc.).

I’d write a book, but I just don’t find it interesting. John Gall’s classic “Systemantics” does a better service. It is a tiny book, with a later reprint/update, highly recommended; it can be read and comprehended by a fifth grader, and states in a few sentences what doesn’t need a Master’s in Business.

ELIZA was a chatbot too, and no psychotherapist. ;)

– That didn’t stop her from doing her duty, though.

Anyway, the current development leads to LLMs becoming a “super app”.

Search engines already transform from research tools to service tools.

Rather than just finding information, they will start to provide it directly.

So you can ask Google to book an airplane flight reservation, for example.

The downside is that the sources (websites etc.) will no longer see clicks/users because of this.

So they may vanish in the process.

We have no idea how big the influence of LLMs is right now, I’m afraid.

The “old” internet as we know it is dying rapidly, and it’s not just a fad.

And none of that equals the reality-bending wish granting that Samee implied he expected his AI agent to perform.

“We have no idea how big the influence of LLMs right now” and I second that.

I’ve already spilled the beans in the other thread: law firms (in the US) had already been AI-ing all the law loopholes full blast in the late 2000s. One particular law firm I ran into was headquartered in Washington, DC, and employed something like 10 people, of whom two were actual lawyers guarding their rears from angry crowds of citizens. What the others did was train and run models, and they bought their models from large Wall Street trading monsters. While I listened to a happy rant about this from one of the programmers, I literally had the hairs standing up on the back of my neck, because one of my relatives had just found a job with a well-known Wall Street trading monster, and these models are usually created and maintained by geniuses with math PhDs.

So the large language model is incapable of large tasks, then. What good is the large language if it cannot feed me and my family?

That’s a simple question, not an advanced one. The corollary is: if something doesn’t put food on my table, then it is entertainment. Kind of like politics that doesn’t translate into any tangible benefits. It is fun to learn about, but only when I have enough spare finances to waste my time doing something other than working for money.

Lol now give this AI to every guy in India

Indians have been using AI, but the trouble remains. Every piece of code written there is copied from other code written there, which was copied from other code written there (or elsewhere), and now there is AI trained on these, and it, too, faithfully copies THAT code. Because statistically speaking those WILL BE prevalent, because they were copied over so many thousand times.

(Spoiler: I’ve been fixing errors written by the cheapest/fastest contractor programmers for a good 20+ years, Indian or not, and each and every time I could spot parts that were copy-pasted, sometimes with the original comments still intact, and minimally altered to do things more or less well. Logical holes? Yep, we have them aplenty, not because there wasn’t enough user testing to find them, but because that’s how 9th graders write code.)

I am not concerned about that (that’s my job security too, btw), but about how all kinds of mafias already use it full speed ahead. I suspect quite some chunk of worldwide inflation is caused by that: by the artificial jacking up of prices, well hidden within parts of huge price-forming global networks. Bottlenecks, they are called; bottlenecks that add pointless complexity to Adam Smith’s paperclip-making factory. Say, very suddenly only certain types of iron can be used for making global paperclips, and only when refined by a certain factory under certain contracts, and these parts are/were never negotiable. There, that’s how simple things can be made horribly expensive. Or, better yet, paperclips are loaded onto containers that wander the seven seas before arriving at the port of call.

AI is supposed to be able to untangle the webs, though it can also be made to make those webs even worse. It can go both ways.

True vibe coding (adjust the prompt until something works but don’t look at any of the code) is a real problem, but AI-assisted coding is the way of the future. Asking for boilerplate code to access an unfamiliar API, getting options that might optimize a section, and AI pair programming, where the AI suggests some code or double-checks yours for errors, are all good uses. Learning to use these techniques effectively should be included in any modern computer degree program.

I’d prefer to look up an example in the manual or an online tutorial. This AI vibe coding will only add to the huge volume of crap code/apps out there. How the blazes can you secure the code when you don’t know what it is doing? This laziness or lack of ability/knowledge is unlikely to be coupled with any rigorous testing for security or even robust functionality.

Fie on vibe coding!!!

They haven’t been worried about that anyways.

I’ll believe the AI assist has real merit for that when it is correct and the documentation is poor enough that you can’t find what you need by searching it directly. For me at least, that was the case some years ago with the ALSA audio stuff in Linux. I was trying to learn how to fix my particular machine, which had a common sound chip but with the chip’s pinouts wired to the “wrong” things in hardware. IIRC the worst part was the ‘Headphone’ mixer being mapped to the speakers while the autodetection of a plugged-in headset still worked, so it would auto mute/unmute the wrong mixers by default! Figuring out from the documentation how to create the right ‘model’ (I believe that’s what the documentation called it) for this particular way of wiring up the chipset was hell, to the point that I don’t think I ever fixed the configuration; I just invoked alsamixer manually every time I needed to. So had the LLM existed I might well have tried it, and if it could summon a correct answer….

But when you need to access an API, the documentation for that API should contain better ‘boilerplate’ code, as it will at least in theory be correct for the version in use and have no chance of LLM hallucinations making up garbage…

Using the LLM as a “regular expression for the ignorant” search, I can just about see the value: if you don’t know the right terms to search for, even mastery of regular expressions won’t get you to the right answer fast, and without that mastery but knowing the right terms, you could have much more searching to do, as the term no doubt turns up in more contexts… But once you have something with documentation, you should be able to search that yourself, even with the dumbest of searches, and get better results than the LLM of today.

I like your well balanced take on the topic. 👍

Similar book: Zajdel, “Limes inferior”.

Navigating the consequences of easily available generative AI is a big struggle for almost everyone I know in education. Can LLMs, in theory, be a useful tool for learning, and not just a way to avoid actual learning and work? Yes, in fact. Will they be used as such in practice? Time will tell.

For programming, I see the situation as something akin to the impact of the electronic calculator on mathematics (albeit with a much more complex, sophisticated and ethically fraught calculator). There’s a reason we still insist on teaching basic math by hand in school before we let kids anywhere near a calculator. Once you intuitively understand the fundamentals, then you can learn how to properly use the tool.

So much “vibe coding” is like trusting the answer from the calculator without even being able to tell if it is orders of magnitude away from what it should be.

Am I the only one who’s kinda happy that LLMs are making college assignments and a lot of academic institutions lose their importance? I loved being in college, and loved interacting with professors and other lecturers. What I didn’t like was the pointlessness of a lot of it.

Assignments were rigid, and not very fun. They were okay for teaching the subject material, but I would have preferred more open-ended problems, something like “here’s what you need to do, do it however you feel like”. Projects were always a blast.

I didn’t cheat in college.

I did, however, use AI to build a server process that fetches data from an API, does some basic manipulation on it and republishes it to a Redis data store and an MQTT server, so it can be consumed by a Pico W that displays sports scores for live games based on subscribing to a team.

I’ve built this process multiple times, and each time the AI does a better job off the hop. Yesterday’s results were a 98% solution. Functional code without bugs; basic functionality, only missing a few optimizations.

I’m perfectly capable of writing the optimizations myself, but I’m having more fun corralling the AI into giving me perfect results.

You do you, but I’ll be sitting over here getting good results.

And I have the skills to debug or rewrite it as-required.

Hi. I think you’ve captured a sentiment that many people share but have a hard time putting words to.

A question I sit with is how to teach this skill. Is it a natural side effect of years of hand craft before the advent of AI? Or is it teachable through careful introduction of advanced LLM tooling after demonstration of competency in foundational modules?

I use these tools in the same way, to implement processes that I have already been building for years. I find this kind of work to be rewarding and productive, but that is because I value building and iterating over the actual task of writing code.

However, sometimes I use it for novel tasks, and it often falls flat because I can’t guide it properly, lacking expertise in an area I haven’t previously studied.

I am both hopeful and cautious, depending on the day

+1 and then some to Mark!!

I’m less worried about AI grads because IMO the skill level of college grads with engineering degrees has been pretty abysmal for decades, at least here in the US.

From the late 1990’s through the early 2000’s I was interviewing 10-20 EE job candidates a year. My part in the interviews was to evaluate their technical skills. I had to make my quiz easier and easier until I started with a simple resistor divider, 5V, 2k, 3k, GND, “what is the middle voltage”. Probably half of EE graduates said “2.5V” and when I said no, look again, many were not able to figure it out and started guessing. There was no understanding of the most basic principals.
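For anyone checking the interview question themselves: assuming the parts are listed in series order (5 V, the 2 kΩ resistor, the middle node, the 3 kΩ resistor, GND) and nothing loads the middle node, the standard unloaded divider formula gives:

$$
V_{\text{mid}} = V_{\text{in}}\,\frac{R_{\text{bottom}}}{R_{\text{top}} + R_{\text{bottom}}}
= 5\,\text{V} \times \frac{3\,\text{k}\Omega}{2\,\text{k}\Omega + 3\,\text{k}\Omega}
= 5\,\text{V} \times \frac{3}{5} = 3\,\text{V}
$$

The tempting 2.5 V answer would only be right if the two resistors were equal.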

I think you mean “principles”.

🤣

5 – 3 – 0

Your problem was HR.

They were somehow pre-filtering out all the good candidates.

That’s many HR drones’ core competence.

These people were claiming a BSEE?

Hadn’t been ‘just writing code’ for 20 years?

Not some ‘Engineering Technologist’ type DeVry grad?

I really find that hard to believe, hasn’t been my experience.

Degree or not, nobody’s getting a CS job anymore. That’s why I just got a CDL.

That’s good for the next 0-10 years, but by 2035 most commercial truck driving will be automated. There will still be jobs in the industry, but they won’t be the truck-driving jobs you just trained for.

That said, if you keep your truck-related skills up-to-date you should be fine. Same goes for programmers and other CS careers: If we keep our skills up-to-date with what the market will pay us to do, we will be fine.

I just want to circle back to this in the original post

So… your university had ten lectures and ten lab sessions in a given course, and they couldn’t be bothered to coordinate lab #1 with lecture #1, etc.?

This went on for years?

Everyone just shrugged and thought it was normal?

Not trying to cast shade here, but it kinda seems your university needs to take Course Design 101.

Yeah, the problem isn’t that she (“they” for those of you in Rio Linda) cheated, it’s that the course sucked and no one spoke up for years. If that course were a vehicle it would not pass MoT.

Aside from the bad course structure, you can still question the author’s choices.

Option A: get the lecture notes from last year and read the relevant sections of the course book prior to the practical exercise so you understand what you’re doing. After all, what is a lecture but a re-telling of the course material in the form of a presentation?

Option B: get the lab notes from last year and cheat.

Of course cheating is the easier way out, but was it truly necessary? If you don’t understand the material, you make efforts to learn it and go to the professor and ask about it. The point of university education is not spoon-feeding you information while you’re sitting there passively.

On what planet is a copy of last year’s notes/tests cheating?

They are called ‘files’ and student groups keep them on professors/courses.

They are a study resource.

Many professors understand and level the field by publishing their own ‘self files’.

Means the profs have to write new tests every year, they hate that, but life’s a bitch.

Of course this can be taken to extremes, e.g. the American medical boards’ radiology exam.

Where they can’t take the test or notes with them, so each student was assigned a question to memorize and they compiled the complete test after.

Got busted.

Sometimes you have to game the system a bit.

When I was undergrad, there was an English prof who would automatically fail any declared engineering students.

Advisors were onto her, we switched to ‘undeclared’ for a semester and passed freshman comp just fine.

Eventually the evidence was so clear, even the innumerate English dean couldn’t deny it and prof was sent to timeout.

It is cheating when you just move information from one paper to the other to pass the task without any of it going through your brain in between.

Exactly who you’re cheating there depends on your perspective.

Nobody checked homework when I was in college.

Except in the general ed classes everybody took.

Which, to be fair, included business majors.

Labs were different.

Lab grades were down to the TA’s.

They saw you not do the experiment, don’t believe BS results.

In any case, I only recall one ‘pure lab’ class.

Electric bench.

Basically 2 semesters of ‘how to use a scope’, prototype and troubleshoot.

Things I had years of practice with, still learned lots.

In all the science classes, including labs, the tests were all that mattered.

Lab grade was 10%.

I had to look up vibe coding, and then realized I had already done it with some Java code (just for fun: someone in a local RBTC lecture mentioned how he created a timer using ChatGPT, so I spent the weekend doing that, including getting instructions on how to set up Java on my computer).

“AI, Make Me A Degree Certificate”

I envision a robot smashing me in a press between two sheets of absorbent media, hanging the flattened me on a clothesline until I’ve desiccated, shearing me into a nice 8-1/2 x 11 rectangle, running me through a laser printer and… for good measure, embossing a gold star at the lower right.

“There,” says the AI, “I made you a Degree Certificate.”

OK, you’re a banana

The people getting degrees by cheating with AI will end up with degrees for jobs that can be replaced by AI. They’ve basically figured out how to use cars to become kings of the buggy whip market.

AI is in the process of devaluing the ‘degree as a nonspecific employment certificate’ industry. There’s a category of students, teachers, curricula, certification bureaucracy, and HR departments that could be replaced by AI slop without anyone noticing a difference. They represent a job market that will disappear, just like the rooms full of people with adding machines from the 1950s.

The people who think they can use expert systems with LLM front ends to compensate for lack of skill and experience are missing the fact that ‘lack of skill and experience’ is an easy commodity to replace. The people who think expert systems with LLM front ends can replace skill and experience have missed the last 45 years of expert systems generating complex alternatives rather than simple answers.

Case in point, three pieces of code do the same thing: one uses extra memory to minimize runtime, the second uses extra processing to minimize memory footprint, the third is bone-stupid simple. Which one do you choose? Does your answer change if the code is called from a loop that busy-waits to the end of a 500ms interval? What if it needs to run every 10ms, sending and receiving 64-byte packets over a 115200 baud serial connection?
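The last scenario can be sanity-checked with a little arithmetic before picking any of the three implementations. The sketch below assumes 8N1 framing (10 bits on the wire per byte) and ignores protocol overhead, inter-byte gaps and interrupt latency; the function names are hypothetical. At 115200 baud a 64-byte packet occupies the line for about 5.56 ms, so a full-duplex link leaves roughly 4.4 ms of every 10 ms period for everything else, while a half-duplex link that sends and then receives is already over budget before any code runs.

```python
def uart_wire_time_ms(payload_bytes: int, baud: int, bits_per_byte: int = 10) -> float:
    """Milliseconds a payload occupies the serial line.

    bits_per_byte=10 assumes 8N1 framing: 1 start bit, 8 data bits, 1 stop bit.
    """
    return payload_bytes * bits_per_byte / baud * 1000.0


def period_budget_ms(payload_bytes: int, baud: int, period_ms: float,
                     full_duplex: bool = True) -> tuple[float, float]:
    """Return (time on the wire, slack left for processing) per period."""
    one_way = uart_wire_time_ms(payload_bytes, baud)
    # Full duplex: TX and RX use separate lines and overlap in time.
    # Half duplex: the packet is sent, then the reply is received, one after the other.
    wire_time = one_way if full_duplex else 2 * one_way
    return wire_time, period_ms - wire_time


if __name__ == "__main__":
    for duplex in (True, False):
        wire, slack = period_budget_ms(64, 115200, 10.0, full_duplex=duplex)
        print(f"full_duplex={duplex}: {wire:.2f} ms on the wire, {slack:.2f} ms left")
```

Run as-is, it prints `full_duplex=True: 5.56 ms on the wire, 4.44 ms left` and `full_duplex=False: 11.11 ms on the wire, -1.11 ms left`. Whichever of the three implementations you choose has to fit in that slack, which is exactly the kind of judgment the comment argues an LLM front end won't make for you.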

Meanwhile, the vibe coders are asking Reddit “why doesn’t this VAX 11/780 code run on my ESP32?”

These days, where memory and processing power are typically cheap, I default to “bone-stupid simple to maintain.”

That means if I (or an AI) can make the code 5 lines that even a typical entry-level programmer could understand by looking at it, I’ll generally prefer that to 50 lines of moderately complex code that relies on a couple of not-obvious-to-the-newbie idioms, even if the 5 lines are really library calls that are 1% as space-efficient and 1% as time-efficient as the 50-line version.

But if memory, execution time, power consumption, or any other factor is anywhere close to being “not free” or if this code will run so much that the wasted time/energy/memory add up to something even approaching “I need to care about resources” then I’ll scrap the 5-line version and optimize for something other than understandability/maintainability.

This is actually the point. Us humans have much, much bigger neural nets in our heads. Those who need AI to be able to do a decent job will at some point struggle to show they are more valuable to an employer than someone who only knows how to write good prompts for an LLM. Vibe coding is a race to the bottom of the wage pile and you can work on your skills or ride that horse to get there even faster.

“Cheating” is just another word for “technology”.

If you cut wood by hand, a table saw is cheating.

If you sculpt pottery by hand, slip-casting is cheating.

If you farm wheat by hand, using a combine is cheating.

How about we start focusing on results, instead of arbitrary purity tests?

I’d have to disagree. If you cut wood, be it by axe, saw, table saw or CNC milling machine, you understand the method, know how it works, and know the quality of the result you can attain. The only difference is the amount of effort required to get that result with that tool. So, knowing this and the tool you have at hand, you’d design in a way you can actually make, and all the end results are actually made of wood.

Whereas these LLM cheaters are taking a product nobody really understands to create something that resembles what they intended closely enough to function, with no idea of why it works that way, or whether something else would be better. You wanted something made of wood, but instead you have something that loosely resembles wood, perhaps good enough for the moment but not the same at all, like the cheapest end of the IKEA lineup, where most of the ‘wood’ is cardboard hexagons with a thin veneer and a few battens in it for the fixings and edges, vs an actual wooden bit of furniture. (Nothing is actually wrong with that sort of IKEA furniture, as long as you know what you are buying, but in this analogy it wasn’t what you set out to create at all: you got a very pale imitation of furniture that would last a lifetime or four, and didn’t know any better.)

Try dragging the table saw into the forest.

The question is just about what the LLM can’t do, so if you’re supposed to be practicing your axe skills and you turn in your proof of work as cut on a table saw, you’re not only cheating the teacher, you’re cheating yourself.

How you got your piece of paper and if you really earned it may not matter much because in the tech industry entry-level hiring has collapsed, with 2025 seeing a 73% decrease in hiring rates, according to the Ravio 2025 Tech Job Market Report. If you want a job then get a team together for a startup, and if you are lucky the lot of you will get a big bonus and be hired when a larger company buys the controlling share of your product. If you fail, try again, or go and learn a trade where you can earn good money, if you don’t mind hard work.

To take it out of the context of academia: somebody at the residential low-voltage (alarms, cameras, A/V, automation, etc.) company I work for used AI to generate the SOP for our network installs. Blocking out DHCP reservations is a fine idea, and it looks good on the surface. But then you start asking questions like ‘why would we ever need 10 routers?’ (.1 to .10 is the router block, .11 to .20 is switches, .21 to .30 is for APs, etc.)… a router per switch and a switch per AP?

In fairness, humans do like round numbers. It’s much easier to remember that the switches start at a logical, predictable value, so you can reach the switch you need while only having to remember the one or two significant digits that identify that particular switch, plus the rules that define the device blocks.

As long as you are not going to hit the allocation limits, and have no other constraint such as integrating with existing infrastructure, I would probably do that sort of setup too. I might not give every type of device its own block, though: the miscellaneous devices you don’t expect to need many of could share .1-.20, or more likely count backwards from the other end of the range. That hopefully lets the misc group grow as big as it needs to, while you can still add the next logical block for other device types you do have multiples of without clashing.

I was at a “function” hosted by an Indian family in South Africa. It turned out that a German and I were the only ones in the room without at least five Ph.D.s (the women didn’t have any and are thus discounted here).

I was impressed until I found out that you could almost get a Ph.D. in a box of cereal. I’m not kidding… A Ph.D.? $50! Do you wish to become a certified Dr. MD with appraisal from professors of the University of Medicine? $500.

This (and other encounters) have led to a trend here: no Indians (especially with multiple Ph.D.s) are being hired in the company.

Just never hire Brahmins (high caste).

They are all useless air thieves with purchased credentials.

Easy to identify: just brag a little about how blue your blood is (even if it isn’t).

They will tell you about their uncle that owns two Indian states.

You then throw away their resume.

If you let one in, they will hire others, none of them will do a lick of work.

TL;DR: if cheating is widespread or required, the coursework itself or the educational system is deeply flawed. I was fortunate for that not to be the case.


The closest I ever came to cheating was in kindergarten or maybe 1st grade, where we got a big 10×10 sheet of addition and subtraction problems to work out. I figured out really quickly that the answer key was built into the worksheet, so I would absolutely rip through all the assignments with 100% scores every time. To this day I still absolutely suck at addition and subtraction. But I never missed a point in advanced math classes at uni like multivariable calculus, power series, etc. Go figure.


I can confidently say I never cheated by the conventional definition in all my many, many years of schooling. And in my fields at least, at the time, I’m not aware of anyone in my classes cheating either. As an undergrad I did a chemistry degree, and most tests were designed so that cheating was… irrelevant? How do you cheat on an open book test? Looking up an answer you forgot, when you already knew something to begin with, was way faster than trying to teach yourself a new concept de novo under the time pressure of the exam. Similarly, you can’t reeeeallly cheat in a lab-based class. I mean, you could falsify the sh*t out of your lab notebook like some of my friends did, but frankly their mastery of the underlying material demonstrated more knowledge than being able to not mess up reading a graduated cylinder. When your products go into the NMR, IR, MS, etc. for verification… you can’t fake that!


Even in professional school classes (say what you want), multiple choice scantron exams are nearly impossible to cheat on. Everyone sits in a big room silently for the exam period. I mean, there are ways (identical twins… it happens…) but none of them is widespread, well known, or common. Sure, maybe crib sheets, but at that point the draconian punishment for cheating is expulsion, and the loss of basically everything you worked for up to that point: probably millions of dollars of lost income. Again, maybe it happens, but considering the consequences, my mates and I all just put in the hard work.


Even professional licensing exams are nearly cheat-proof at this point. I had to empty my pockets, nothing but a t-shirt was allowed, and getting into the test suite meant a metal detector along with palmprint biometrics. If you left to take a leak you had to repeat the whole process again, with the exam clock ticking the entire time.

I am a very inexperienced coder, which can be the source of problems when using AI to help, but the built-in AI assistant in VS Code has been so damn convenient. I was writing code for a game, Stationeers, where you can program in what is basically a low-level, MIPS-style language, and despite the AI not really knowing how the language functioned, it would offer recommendations for the next line of code. Not sure if that counts as vibe coding, since I’m taking one-line recommendations at a time. But what it did really well was anticipate the other boundary conditions I would need to set up. Say I had what we will call a function lowtempalarm: after I finished that one, it would start suggesting each line of the hightempalarm, inverting what needed to be inverted mostly correctly and even referencing the correct variables I declared earlier. I was blown away.

Whether it’s a strip of paper with the answers, an open textbook with a hand-written translation codex, or an instructor giving the same formulaic exams year after year, the formula of effective education is still a three-ringed Venn diagram of educational content and presentation [the draw], the student’s readiness and interest in learning [the die], and the quality and accuracy of evaluations [the proof]. If one is lacking, it’s made up for by the others. If everyone is cheating and still passing the exam, the exams and content are trash. That said, I’ve been in several courses throughout my tenure (Calc I and II, I’m looking at you) with extremely effective instructors, very eager students, and very poor quality exams; class scores ran at about a 69 mean, a 0 minimum and a 75 maximum, which weighted the curve so that a 75 was an A. The result was a bunch of anxious students who never thought they understood calculus, until the entire AP class passed the AP exam with 3s or higher. Sometimes it’s not about the tests, because you’ll just fail and die if you don’t learn enough fast enough.

“AI, Make Me A Degree Certificate”

And while you’re at it, call me a taxi.

I only read the first sentence and thought, “Wow, we COULD use AI not to cheat but to create certifications for all the various unknown fields out there that people are good at but which have no name.”

Let’s get back to the article. It explains that practical exercises are given to students in a completely absurd order compared to the lectures: these professors are either idiots or living in some bizarre mental universe. When you want to build a building, you don’t start with the third floor, okay?

I work at a university. LLMs mean that all assessment must be done with pen and paper, with teachers watching.

Recently, some of the casually employed markers have been using LLMs to provide feedback. So in some subjects, it’s likely that LLMs are both providing the answers to the assignments and marking them.

South Park did it…

As someone doing a CompSci degree rn and very much using LLMs to do a lot of the coursework, I’m wondering the same: am I wasting my time?

My peers are all using LLMs whenever they can as well. The result is that from the teachers’ perspective everyone can do all the assignments, so they set even more, which results in even more LLM use. We are all stuck in a stupid LLM-driven rat race.

I’ve joined this course because I legitimately enjoy learning about computers, and I wanted to learn more about it with a community that also cared. But so far I feel like I have no time to actually learn. In fact, I’ve found myself eagerly waiting to finish this degree so that I can actually learn for real. This is the stupidest timeline ever.

Standing ovation for the header art!

Never before have I seen such topologically-challenged digits :D