If you were asked to pick the most annoying of the various Microsoft Windows interfaces that have appeared over the years, there’s a reasonable chance that Windows 8’s Metro start screen and interface design language would be your choice. In 2012 the software company abandoned its tried-and-tested desktop, whose roots extended back to Windows 95, in favor of the colorful blocks it had created for its line of music players and mobile phones.

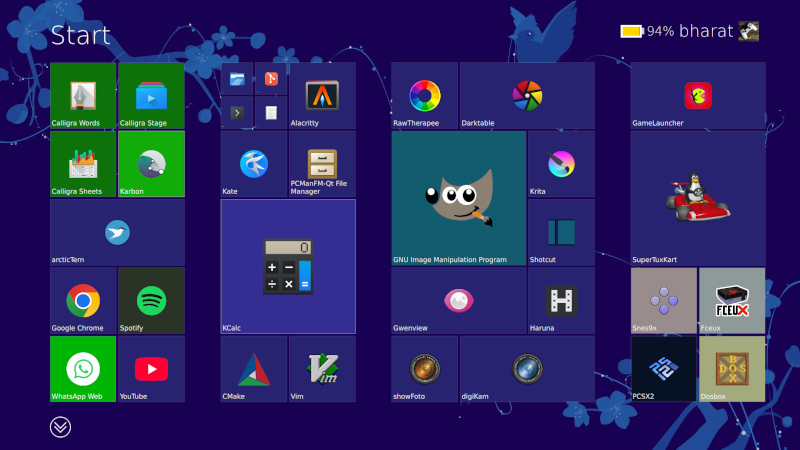

Consumers weren’t impressed and it was quickly shelved in subsequent versions, but should you wish to revisit Metro you can now get the experience on Linux. [er-bharat] has created Win8DE, a shell for Wayland window managers that brings the Metro interface — or something very like it — to the open source operating system.

We have to admire his chutzpah in bringing the most Microsoft of things to Linux, and for doing so with such a universally despised interface. But once the jibes about Windows 8 have stopped, we can oddly see a point here. The trouble with Metro was that it wasn’t a bad interface for a computer at all; in fact, it was a truly great one. Unfortunately the computers it was and is great for are handheld and touchscreen devices where its large and easy to click blocks are an asset. Microsoft’s mistake was to assume that also made it great for a desktop machine, where it was anything but.

We can see that this desktop environment for Linux could really come into its own where the original did, such as on tablets or other touch interfaces. Sadly we expect the Windows 8 connection to kill it before it has a chance to catch on. Perhaps someone will install it on a machine with the Linux version of .NET installed, and make a better Windows 8 than Windows 8 itself.

Is it faster than the Sailfish OS GUI? Or X11?

Fewer resources?

hm…..

“Unfortunately the computers it was and is great for are handheld and touchscreen devices where its large and easy to click blocks are an asset. Microsoft’s mistake was to assume that also made it great for a desktop machine, where it was anything but.”

At least it wasn’t a Minority Report style interface. Directing the wrong kind of traffic.

The Start menu you could opt to just not use, but the split between PC Settings and Control Panel was sheer madness. Still is, even after more than 10 years.

This, or Microsoft Bob’s interface?

Microsoft Bob was a masterpiece! I’m fond of some of the other shells like it, too. If I had the time and will, I’d make all my web interfaces like it. I tried it on a public web server for navigating to games and utilities, but it was just too much trouble to maintain and wound up being the fastest I’ve ever abandoned a rewrite (I think it was live for only maybe 2 months).

Not a huge fan of the Win 3.1 interface… but… I did kind of skip the whole Metro UI so…

It is kind of amazing that Win95 still is the standard.

That’s because Win95 was designed by a definite paradigm. Everything had a point and a purpose.

Then everyone started to “think outside the box” and make cool shit instead.

Like most Linux distros that tried to borrow elements from Macs and Windows machines haphazardly without really thinking about what it’s supposed to mean. Is a window a program or a document? Should we have two taskbars or one, or something else? Never mind, we’ll just add virtual desktops and make them into a spinning cube!

One notable difference in how it was made: usability testing and iteration. Microsoft hired actual designers rather than engineers to build the user interface, and they quickly iterated different designs with actual users, so it wasn’t just software developers going “This is how I would use it.” or “Here’s how Apple does it”.

https://socket3.wordpress.com/2018/02/03/designing-windows-95s-user-interface/

It’s an interesting read of the troubles and problems they had to solve:

Oh my! 😥 Such users should never create a child process!

It was when 2/3rds of the population had never used a computer. People had no idea how anything is supposed to work.

Example: user wants to paste a file into the same folder, so they can then drag the duplicated file somewhere else with the mouse. Operating system doesn’t allow that action.

The action makes sense if you think of the files as physical objects: a thing needs to exist before something can be done with it. First you make a copy, then you move the copy. The copy of the file existing invisibly “in potential” is not intuitive. You could try to fight the user over this point and demand that they browse to the destination folder before selecting “paste”, but that’s not helpful. Throwing an error message is the last thing you want to do, because that would be hostile to the user. That will just put the user off by denying their intuitions, and stop them from completing the action.

Solution: let the user do what they’re trying to do – just change the file name slightly so both copies can exist in the same folder.

Eventually they’ll learn better ways to do it.
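
The solution described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper name `paste_copy` and the " - copy" naming scheme are made up for the example, and are not claimed to be what Windows 95 actually did.

```python
from pathlib import Path
import shutil


def paste_copy(src: Path, dest_dir: Path) -> Path:
    """Copy src into dest_dir without ever refusing the action.

    If the plain name is already taken (for example when pasting into
    the folder the file came from), pick a slightly different name so
    both copies can coexist. Naming scheme is hypothetical.
    """
    target = dest_dir / src.name
    attempt = 1
    while target.exists():
        # First clash: "name - copy.ext"; later clashes: "name - copy 2.ext", ...
        label = "copy" if attempt == 1 else f"copy {attempt}"
        target = dest_dir / f"{src.stem} - {label}{src.suffix}"
        attempt += 1
    shutil.copy2(src, target)  # copies contents and metadata
    return target
```

Pasting `report.txt` into its own folder twice would leave three files behind, and the user can then drag either copy wherever they like.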

Hm. Sure, in some ways that makes sense, I guess. 🤷♂️

But personally: I don’t mean to be a d*ck, but I never had a problem with abstract thinking. I thought it was a natural skill of all modern humans.

(I started using a home computer age 5-6, used DOS/Norton Commander and Windows 3 at age 7.)

What I think I do really struggle with is that “thinking down” to the level of the so-called “normal” users, apparently.

Like Marvin from “The Hitchhiker”. And trying it hurts me, just like him.

Interestingly, just recently, I found myself in the situation that I couldn’t understand certain puns or jokes told by some baby boomers because they were too simple for me to understand. It gave me headaches, because my mind tried so hard to detect the clever pun/joke/reference that was supposed to be hidden somewhere, but then it turned out there was none.

Same goes for PCs. I often found the user troubles to be strange and trivial, because everything made perfect sense if you were thinking rationally, logically, step by step. All you had to do was simply see things from the other “person’s” point of view, the point of view of the computer in that case.

And seeing users fail to do that is just disturbing, scary.

It’s like watching a person with dementia, maybe. Not funny.

To me, most “normal” users are more and more like this (dude on the right side):

https://www.youtube.com/watch?v=baY3SaIhfl0

That reminds me of 90s memes of the broken “coffee cup holder” aka the CD-ROM drive, by the way.

There were users, probably “normal” users, who seriously put their coffee cups on the CD-ROM drive’s drawer.

Because they had no idea what else that thing was supposed to do. Or so it seems. I hope that was just a joke and not a real “thing”.

What is different now?

https://microsoft.design/articles/a-glimpse-into-the-history-of-windows-design/

Translation: “Our bosses don’t bother to read our test reports anymore and just let us do whatever.”

“In the past, design had to prove its value to our business and show how good design positively affects user experience” – that sounds like the way to go, unlike the current mess, where change for change’s sake is now the norm.

Nearly everyone in 2026 still freaks out when they click on a link in a chat during a Zoom meeting, and thinks that they “lost” the Zoom software. Running multiple programs at the same time, even though you can’t see them, is a concept nearly nobody grasps.

Yep, and why people using Android phones have oodles of apps and tabs open, re-opening the same web page over and over…

It replaced the Motif standard, I guess. (OS/2 1.2 and Windows 3 were Motif-like, too.)

Perhaps it also took inspiration from OS/2 Warp and NeXTSTEP OS?

Anyway, I just remember how PC GEOS copied the Windows 95 look and feel pretty soon.

The successor of GeoWorks Ensemble, NewDeal Office, used it rather proudly.

https://en.wikipedia.org/wiki/GEOS_(16-bit_operating_system)

Yep, and as the Win95 usability study pointed out:

What’s the main menu feature in Motif? A pop-up menu you get when you click the desktop. The complete opposite of what users found intuitive and useful. That’s why the Start button.

The Win2k Start menu was about all I ever needed. I really didn’t like that they upgraded it in Windows 7, though of course that menu kind of grew on me. Metro I wasn’t even doing, and I didn’t upgrade to 8/8.1 without Classic/Open Shell.

It sucked at the time but pales in comparison to the ad-riddled nonsense in Win11

What ads? I see no ads in mine. Nor any other “online content” either.

Maybe you’re just too computer illiterate to change a few basic settings 😂

I unironically enjoyed Windows 8.1 over the Windows 11 UI/UX. The original Start menu could be enabled if you wanted, search was still sane, and Notepad launched in milliseconds.

Did you use it on a touch screen? I tried out Windows 8.1 on a friend’s desktop and did not really like it. However, the article mentioned that it was a touch-focused UI, and for that it was brilliant. I really still mourn the loss of my Windows 8.1 phone. It was a cheap Lumia but launched programs really quickly, and the interface had some very interesting decisions that made reacting to incoming messages, calendar reminders etc. really convenient. I would love to have a phone like that again.

The main problem was the lack of supported apps. In the beginning, a number of apps were available, but then they pissed off their devs (again), apps did not get migrated to the new version (because it was a hassle), fewer apps meant people did not buy the Windows Phone, so people stopped writing their apps for it and the whole system just collapsed. I’m still mad at Nokia and Microsoft for that.

Same, Win 8.1 over Win10/11 any day. And desktop machine, no touchscreen.

It might have been fine, but Microsoft repeated their usual strategy of ramming it down everyone’s throats with no choice whatsoever. It was a bridge too far in this case. It was very different from what people were expecting and used to.

I personally hated it. The presence of a “desktop” where multiple programs can be “sat down” in their own physical space used side by side makes intuitive sense to me. The lack of it felt claustrophobic.

I manage multitasking on my phone ok, but I still hate it.