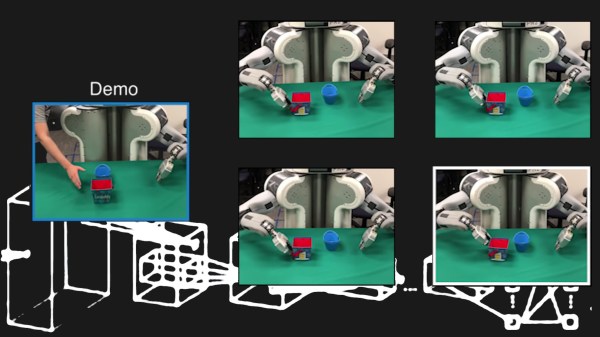

Not long ago, machines gained new skills only when programmers put their noses to the grindstone and mercilessly attacked those 104 keys. Machine learning is turning some of that around by replacing the typing with humans demonstrating the actions they want the robot to perform. Suddenly, a factory line worker can be a robot trainer. The idea is not new, but such a robot typically needs thousands of examples before it is ready to make an attempt. A new paper from researchers at the University of California, Berkeley, adds the ability to infer what a demonstration is meant to accomplish, so robots can perform a task after witnessing it just once.

A robotic arm with no learning capability can only be told to go to (X, Y, Z), pick up an object, and drop it off at (X2, Y2, Z2). Many readers have probably done precisely this in school or with a homemade arm. A learning robot instead generates those coordinates itself by observing repeated demonstrations and copying the trainer, which saves the keystrokes. This new method goes a step further: when the trainer picks up a piece of fruit and drops it in the red bowl, the robot infers that the fruit should end up in the red bowl, not just at the spot where the red bowl used to be.
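To make the difference between copying coordinates and copying a goal concrete, here is a toy sketch in Python. It is not the Berkeley team's method; `Scene`, `replay_plan`, and `goal_plan` are hypothetical names invented for illustration, and the "world" is just a dictionary of object positions. Replaying the demonstration sends the fruit to wherever the bowl was, while the goal-based version looks the bowl up again before moving.

```python
from dataclasses import dataclass

Point = tuple[float, float, float]

@dataclass
class Scene:
    # Hypothetical world model: object name -> current (x, y, z) position.
    positions: dict[str, Point]

def replay_plan(recorded_pick: Point, recorded_place: Point) -> tuple[Point, Point]:
    # Coordinate replay: reuse the exact locations recorded during the demonstration.
    return recorded_pick, recorded_place

def goal_plan(scene: Scene, obj: str, container: str) -> tuple[Point, Point]:
    # Goal-based: the demonstration is remembered as "obj ends up in container",
    # so both locations are looked up in the scene as it is right now.
    return scene.positions[obj], scene.positions[container]

# Demonstration: the apple was carried to the red bowl at (0.5, 0.2, 0.0).
demo = Scene({"apple": (0.3, 0.3, 0.0), "red_bowl": (0.5, 0.2, 0.0)})
recorded = (demo.positions["apple"], demo.positions["red_bowl"])

# Later, someone moves the red bowl.
now = Scene({"apple": (0.3, 0.3, 0.0), "red_bowl": (0.1, 0.4, 0.0)})

print(replay_plan(*recorded))               # place target: the bowl's old spot
print(goal_plan(now, "apple", "red_bowl"))  # place target: the bowl's new spot
```

The only change between the two planners is when the target is resolved: at demonstration time for replay, at execution time for the goal-based version.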

The ability to infer is built from many smaller lessons, like moving to a location, grasping, and releasing. Those are trained with regular machine learning, but inference is the glue that holds them all together. If this sounds like how we teach children or train workers, you are probably thinking in the right direction.
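As a rough illustration of "primitives plus glue", the Python sketch below assumes three already-learned primitive skills (stubbed out here as log entries on a hypothetical `Arm` class) and a deliberately naive inference step, `infer_goal`, which decides from a single demonstration that the moved object belongs in whichever container it ended up closest to. Everything here is a hypothetical stand-in, not the paper's model; the point is only that one inferred goal can be re-grounded in a new scene and executed by chaining the primitives.

```python
import math
from dataclasses import dataclass, field

Point = tuple[float, float, float]

def infer_goal(final_positions: dict[str, Point], moved: str,
               containers: list[str]) -> tuple[str, str]:
    # Toy inference from ONE demonstration: the goal is "moved object ends up in
    # whichever container it was closest to when the demonstration finished".
    obj_pos = final_positions[moved]
    target = min(containers, key=lambda c: math.dist(obj_pos, final_positions[c]))
    return moved, target

@dataclass
class Arm:
    # Hypothetical arm exposing three primitive skills; each primitive just logs.
    log: list[str] = field(default_factory=list)

    def move_to(self, p: Point) -> None:
        self.log.append(f"move_to {p}")

    def grasp(self) -> None:
        self.log.append("grasp")

    def release(self) -> None:
        self.log.append("release")

def execute(arm: Arm, positions: dict[str, Point], goal: tuple[str, str]) -> None:
    # The "glue": sequence the primitives using where things are *now*.
    obj, container = goal
    arm.move_to(positions[obj])
    arm.grasp()
    arm.move_to(positions[container])
    arm.release()

# End state of the single demonstration: the apple sits in the red bowl.
demo_end = {"apple": (0.5, 0.2, 0.05), "red_bowl": (0.5, 0.2, 0.0),
            "blue_bowl": (0.0, 0.6, 0.0)}
goal = infer_goal(demo_end, "apple", ["red_bowl", "blue_bowl"])

# New scene: the bowls have swapped places, so copying positions would be wrong.
new_scene = {"apple": (0.3, 0.3, 0.0), "red_bowl": (0.1, 0.4, 0.0),
             "blue_bowl": (0.5, 0.2, 0.0)}
arm = Arm()
execute(arm, new_scene, goal)
print(goal)
print(arm.log)
```

Swapping the bowls in the new scene is the interesting case: a robot that copied positions would drop the apple into the blue bowl, while the goal-based plan still heads for the red one.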