We know we’re living in the future because there’s hi-resolution, full color images plastered on every high-density screen in sight. Of course this comes at a cost, one that’s been hidden away by the myriad improvements in the way we digitally represent those pretty pixels and how we push them to the screens. Nobody thinks about this, except those who are working behind the screen to store and light up those pixels. And hey, chances are that’ll be you some day. Time to learn a bit more about image encoding!

[Scott W Harden] put together a succinct primer on representing images in memory. It focuses on the basics of how images are stored: generally with the B before the G, sometimes including an alpha (transparent) channel, and with a number of different bit depths. Having these at the front of your mind is crucial for microcontroller projects, where deciding what types of images to support is often limited by the amount of memory available for frame buffers, and the capabilities of the screen chosen as the device’s display.

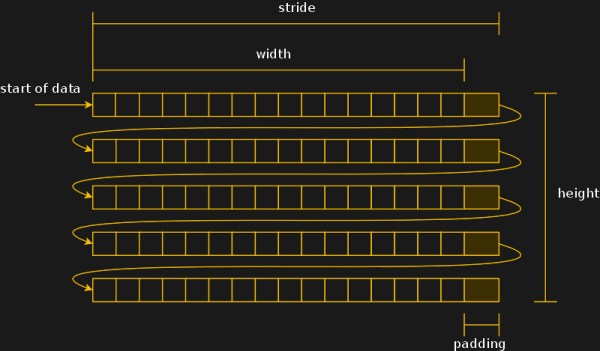

Speaking of display specifics, [Scott] shares some detail about mapping the memory to the dimensions of your screen. If the byte count of pixel data doesn’t line up nicely with the dimensions of the screen, padding the rows out may help in the processing overhead it takes to get those pixels onto the screen. He also has some tips about “premultiplied alpha” which makes the transparency calculation a part of the image itself, rather than demanding this be done when trying to update the screen. Running a test in C# on one million frame renders shows the type of savings you can expect.

Decades of trial and error landed us with these schemes. Looking back is literally an archaeology project, as one hacker discovered when trying to get a set of digital images off of a floppy from a 1990s photo processing service.