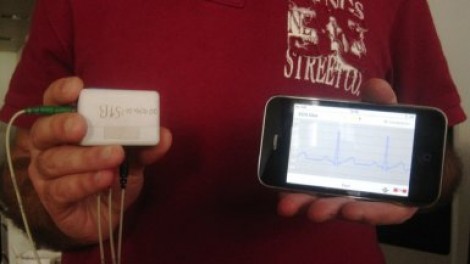

This module is a sensor package for monitoring the electrical activity of the heart. It is the product of an effort to create a Wireless Body Sensor Network node that is dependable while consuming very little electricity, which means a longer battery life. To accomplish this, the microcontroller in charge of the node compresses the data (not usually done with wireless ECG hardware) so that the radio transmissions are as short and infrequent as possible.

[Igor] sent us this tip and had a short question and answer session with one of the developers. It seems they are working with the MSP430 chips right now because of their low power consumption. Unfortunately those chips still draw a high load when transmitting so future revisions will utilize an alternative.

Oh, and why the iPhone? The device that displays the data makes little difference. In this case they’re transmitting via Bluetooth for a real-time display (seen in the video after the break). This could be used for a wide variety of devices, or monitored remotely via the Internet.

[youtube=http://www.youtube.com/watch?v=iURXzBsckOc]

Video has no sound. Reading the page I almost puked in my mouth (lots of MBA moronspeak like shifting paradigms; I’m guessing they are trying to sell it to some corporation). iPhone to be trendy …

Sure it needs a lot of juice when you use a separate Bluetooth chip instead of 802.15.4

in fact HERE is exactly this, MSP430 + zigbee http://www.youtube.com/watch?v=SEeUu53OHGA

running off two AAAs and without stupid iphone

Oh yeah and you need a full laptop to do the same as the “stupid iphone”. And that stuff does not look wearable. Also the MBA moronspeak you didn’t seem to understand explains they’re actually evaluating compression algorithms: that compressive sensing stuff was recently featured in Wired and looks quite cool to me. If you throw in a more efficient communication layer, you can only improve on the system after compression. And compression remains key …

ESL is to ENS as MIT is to Stanford. Once upon a time they did useful science, but now they rehash existing technology and tout it as the second coming. Remember how MIT went from being the font of all things scientific to becoming a PR machine designed to suck blood from alumni? Same thing.

MIT: Hey – we reinvented inductance chargers!

Stanford: All we did was spin out google.

MIT: Look – the media lab made yet another stab at becoming a video arcade. Pew! Pew! Pew!

Stanford: Wait – they’re getting all the press! Quick, let’s take the (circa 1860) absorption refrigerator and pretend we invented it to, uh, help, uh, help the starving kids in africa gain access to ice cream. Then we can ask for grants from ADM for expanding the market for HFC.

MIT: Silly rabbits, The USPTO says that we invented that last week. See you in court!

In general, this is the future: After generating all possible permutations of [X is not unique but X with a computer is and should be patented], the VC crowd is about to do the same thing with cell phones.

Next up: doing X with a gene splice, as in pushing aspirin made by bio-culture! Same great effect, but now with extra profit margin!

Inventors – it is not how great your idea is, but how effectively someone else can market it that counts. It is not genius, but the ability to exploit ideas that makes us great.

My tip was explicit about the fact that what is undertaken here is an instance of compressed sensing. To make it short, the reason the draw on power is expected to be low is because the encoding of the signal is done following the theory of compressed sensing. The reason the iPhone is important is because the signal being compressed during the low power acquisition (because of compressed sensing) is then decoded on the iPhone which performs some real computations involving full scale optimization routines with wavelets. The iPhone is central to this set-up because it is used as a full scale computer, not just a screen.

Compressed Sensing is a paradigm shift because the encoding is very low power and most of the work is really performed after the signal has been acquired not during acquisition.

Igor.

I still say it’s bogus.

>The reason the iPhone is important is because the signal being compressed during the low power acquisition (because of compressed sensing

So you are compressing EEG signals sampled at ~400Hz? Even at 24 channels you are still sending only 9.6 KB/s.

Then there is this “shimmerTM” that again sounds like a sales pitch. And this coming out of UNI research, but all I can see published are those fluff abstracts plus some slides and no real information about implementation (HOW, not what).

>A normal system requires the encoding of the signal (a power hungry process) to be performed during acquisition before sending the signal out

Sampling is a power hungry process? From what I understand conventional systems just send raw data because it’s such a small quantity of information (<10KB/s for 24 channels) that no encoding is required.

There must be something that I’m missing.
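For anyone who wants to check the raw-rate figure quoted in this comment, here is a minimal sketch of the arithmetic. The 400 Hz and 24 channels come from the comment; the sample width is not stated anywhere in the thread, so `bytes_per_sample` is an assumption (the 9.6 KB/s figure only works out at one byte per sample).

```python
def raw_rate_bytes_per_s(sample_rate_hz, channels, bytes_per_sample):
    """Uncompressed data rate of a multi-channel sampler, in bytes per second."""
    return sample_rate_hz * channels * bytes_per_sample


# 400 Hz and 24 channels are the figures from the comment.
# One byte per sample is an assumption that reproduces the quoted 9.6 KB/s.
rate_8bit = raw_rate_bytes_per_s(400, 24, 1)
print(rate_8bit / 1000, "KB/s")   # 9.6 KB/s

# With a hypothetical 16-bit sample width the raw rate doubles.
rate_16bit = raw_rate_bytes_per_s(400, 24, 2)
print(rate_16bit / 1000, "KB/s")  # 19.2 KB/s
```

Either way the raw stream is small, which is the point the comment is making.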

I think the iPhone version looks a little hokey and contrived. Is there any legitimate reason to need an EKG (not EEG – no brainwaves here) on your phone?

If you’re a doctor surely you’ll lose your license for basing medical care on untested BS equipment like this.

Rasz,

I am sure you still have an open mind as you keep on asking questions. The idea is to use less power than just sending raw data. In order to do that you need to compress first before sending out the data wirelessly. Now if you do compression the conventional way, you are likely to require more power than sending the raw data.

With compressed sensing, the encoding is very cheap in terms of power (because it is fixed, i.e. non-iterative) compared to conventional compression. The main drawback is the need to reconstruct the signal once it has been sent over the air. The reconstruction is very power hungry, hence the need for a computer like the iPhone.
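To make "fixed, non-iterative encoding" concrete, here is a rough sketch of what a CS encoder on the sensor could look like. It is not the project's code: the sparse binary sensing matrix, the window of 256 samples, the 128 measurements and the four ones per column are all assumptions picked for illustration. The point is only that the encoder is a single pass of additions with no search and no loop that depends on the data.

```python
import random


def make_sensing_columns(m, n, ones_per_column, seed=0):
    """Build a sparse binary m x n matrix, stored column by column.

    Each column is the list of row indices that hold a 1. The matrix is
    generated once and shared by sensor and receiver (hypothetical layout).
    """
    rng = random.Random(seed)
    return [rng.sample(range(m), ones_per_column) for _ in range(n)]


def cs_encode(columns, m, window):
    """Compute y = Phi * x for one window of samples using additions only."""
    y = [0] * m
    for sample, rows in zip(window, columns):
        for row in rows:
            y[row] += sample
    return y


# Illustrative sizes, not taken from the project.
N_SAMPLES = 256
N_MEASUREMENTS = 128
ONES_PER_COLUMN = 4

columns = make_sensing_columns(N_MEASUREMENTS, N_SAMPLES, ONES_PER_COLUMN)

rng = random.Random(1)
window = [rng.randint(-512, 511) for _ in range(N_SAMPLES)]  # stand-in for ADC samples

measurements = cs_encode(columns, N_MEASUREMENTS, window)
print(len(window), "samples ->", len(measurements), "measurements")
```

The work per window is `N_SAMPLES * ONES_PER_COLUMN` integer additions, whatever the signal looks like. The price is paid later, when the receiver has to solve for the signal from `measurements`.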

To give you some numbers, you need to check slide 32 of this presentation:

http://lts2www.epfl.ch/~vandergh/Download/Marseille-2010.pdf

where there is a comparison between conventional encoding, CS encoding (our case) and no encoding at all. In the CS case, the beacon interval is about 2.2 times larger than no encoding. The DWT scheme is much better than CS but then again, the code execution time is 10 times that of the CS. As pointed out in the slide, the values for the power level need to be looked at carefully as the use of another microcontroller is likely to change those numbers.

Hope this helps,

Igor.

M4CGYV3R,

The iPhone is really needed here for its computing power. The fact that it also has a nice ergonomic factor helps in making the point that the system is already miniaturized using off-the-shelf hardware.

Igor.

I’m going to get aboard rasz’s thought-train.

“It seems they are working with the MSP430 chips right now because of their low power consumption. Unfortunately those chips still draw a high load when transmitting so future revisions will utilize an alternative.”

Mike, I doubt it’s the MSP430 that’s drawing more power when transmitting. It’s the bluetooth transmitter. And to the project’s implementers: why use power-hungry and network-poor bluetooth?

It would be a lot easier/faster/efficient to use the PHY and MAC layers presented by 802.15.4.

Also, I didn’t get too deep into what the compression algorithm was (is it discussed/posted?), but with the limited RAM available in the MSP430 family, I doubt it’s a very complex compression scheme.

So far, it seems that instead of just keeping it relatively simple and streaming buffers of samples, this project is compressing those buffers and bursting them in shorter, less frequent packets to save power. This is great for some relatively low frequency sensors like ECGs, temperature, etc.

How about some metrics on how much power and bandwidth this actually saves?

Goldscott,

You are right, the compression scheme is very simple yet efficient. For some numbers check slide 32 of the presentation I mentioned to rasz above (http://lts2www.epfl.ch/~vandergh/Download/Marseille-2010.pdf ).

As for the bluetooth use as opposed to other means, I wouldn’t know. I am sure they wanted to use an off-the-shelf (full scale) computer such as the iPhone in order to show the reduced complexity of the overall prototype. But as I said, I don’t know about that part.

Hope this helps,

Igor.

Thanks Igor,

I just looked at the slide show. I’m not familiar with much of the numerical analysis (e.g. Gabor, Rudin, Osher, Fatemi, etc.), but I took a short graduate course on compression, so I see a small dictionary is being used with DWTs. I also don’t have any experience with sparse matrices, but it seems like the “sparseness” is being highly compressed and the “meaningful” data is getting a DWT run over it?

Also, what is the icyFlex2 and why haven’t I heard of this chip? Proprietary development at CSEM?

…Sometimes I miss academics.

Goldscott,

You said:

“I just looked at the slide show. I’m not familiar with much of the numerical analysis (e.g. Gabor, Rudin, Osher, Fatemi, etc.), but I took a short graduate course on compression, so I see a small dictionary is being used with DWTs. I also don’t have any experience with sparse matrices, but it seems like the “sparseness” is being highly compressed and the “meaningful” data is getting a DWT run over it?”

Not quite but close. In the generic encoding scheme one would perform an encoding at the sensor level with the DWT dictionary. In the CS case, the acquisition is performed with a “sparse” dictionary that unlike the conventional version does not require iterations (no need for finding the best element in the dictionary as in the conventional case). However, in the CS case, the DWT dictionary is used on the receiver side with the iPhone, as it is used to combine the encoded data and the dictionary to provide the original signal.

In the conventional case, the compression effort is spent on the sensor side while in the CS case, the effort is spent on the receiver side.

“Also, what is the icyFlex2 and why haven’t I heard of this chip? Proprietary development at CSEM?”

I really don’t know about that part, sorry.

Igor.

I’ve seen those slides before my first post. They state CS achieves 50% compression. Combined with Bluetooth, the whole system ends up with exactly the same power consumption as a comparable system sending raw data with Zigbee (CC2420 RX: 41.4mW, TX: 36.6mW, idle: 1.28mW; Bluetooth is at least twice that, looking at the slide).

Basically using Bluetooth for talking to iphone zeroes any advantages CS might have.
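The argument here is simple enough to put into numbers. This is a back-of-the-envelope sketch, not a measurement: the CC2420 transmit power is the figure quoted in the comment, "at least twice that" is taken as exactly 2x, and the payload size and throughput are hypothetical and assumed equal for both radios. Receive and idle power are ignored.

```python
def tx_energy_mj(tx_power_mw, payload_bytes, throughput_bytes_per_s):
    """Energy spent with the transmitter on: power (mW) x airtime (s) = mJ."""
    airtime_s = payload_bytes / throughput_bytes_per_s
    return tx_power_mw * airtime_s


CC2420_TX_MW = 36.6                 # transmit power quoted in the comment
BLUETOOTH_TX_MW = 2 * CC2420_TX_MW  # "at least twice that", taken as exactly 2x
THROUGHPUT = 10_000                 # bytes/s, hypothetical, same for both radios
RAW_BYTES = 1_000                   # hypothetical uncompressed payload
CS_BYTES = RAW_BYTES // 2           # 50% compression, as the comment reads the slides

zigbee_raw = tx_energy_mj(CC2420_TX_MW, RAW_BYTES, THROUGHPUT)
bluetooth_cs = tx_energy_mj(BLUETOOTH_TX_MW, CS_BYTES, THROUGHPUT)

print(f"Zigbee, raw data:      {zigbee_raw:.2f} mJ")    # 3.66 mJ
print(f"Bluetooth, CS payload: {bluetooth_cs:.2f} mJ")  # 3.66 mJ
```

Under these assumptions, halving the payload and doubling the radio power cancel out exactly. Any real comparison would need measured throughput and protocol overhead for each link.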

Last but not least I dont think compressing EEG/EKG signals would be advisable when raw data is almost free. I can understand using CS in MRI where it offers faster acquisition times.

So in summary it looks like a solution not only looking for a problem, but creating one (using BT for radio link to artificially inflate power consumption).

rasz,

You said

“..Basically using Bluetooth for talking to iphone zeroes any advantages CS might have.”

Yes they are at that stage it seems but as I have said, the iPhone as a computer is a terribly important part of the whole set-up.

You also said:

“..Last but not least I dont think compressing EEG/EKG signals would be advisable when raw data is almost free. I can understand using CS in MRI where it offers faster acquisition times..”

You are making several assumptions here:

One is that this is the best CS can do. My hunch is that they can do better. They are also interested in changing the A/D converter at the sensor level; with what we have seen in CS, I am thinking we are going to see improvement there as well. Finally, you are making the assumption that one cannot make some observation about an EKG from its compressed data. Unlike conventional systems, CS has the particularity of providing a sound theoretical background that says otherwise. In short, while it is nice to see EKG traces being reconstructed on the iPhone, one will eventually be capable of making an actual diagnosis from the compressed data before it is reconstructed. All in all, I agree with the PI of the project that this prototype is likely to provide a much better outcome in the future.

In the case of MRI and CS, the hardware was already there, so not much convincing had to be done at the hardware level: the procedure for acquiring data just changed. In most other cases involving compressed sensing, we have to change the hardware. This is why CS always looks like a small improvement at first sight (or a solution looking for a problem): you are generally using off-the-shelf hardware that was not designed for the new purpose at hand (BT in this case). If you are interested, I have compiled a list of CS hardware being built: https://sites.google.com/site/igorcarron2/compressedsensinghardware

Hope this helped,

Igor.

This is awesome, but iphones are still gay.

I would think that EEG waveforms would be trivial to compress, and bt sniff mode would give you very low power consumption (wake up every few seconds to burst the data).

Where are the schematics/notes on the EEG hardware itself? Is there anything interesting or unique about the actual data acquisition?

icyflex

http://docs.google.com/viewer?a=v&q=cache:ft-XczA8W3AJ:www.csem.ch/docs/Show.aspx%3Fid%3D9221+icyFlex2&hl=en&pid=bl&srcid=ADGEESjhqm1OCI5bgUObzPjKARQqZd5nPjtDxqTiG6Nao8hY-7OKKiaMUUfBWEYehxXPYRrXx0ERmFsljCNwPpHBVUatxk3Ag9Po9SkyawwJ8iSIRLRc5XyCF3QjIlk_ueLKY76YFkm1&sig=AHIEtbTEzlp4sEgzmEQn5QSi7tvVfnhanw

Now that is something I would love to read more about.

I don’t like the idea of using lossy methods for medical data (EEG/EKG) when raw is almost free. As I stated above MRI is different because you are actually gaining temporal/spatial resolution with CS as the whole process is often undersampled to begin with. There is nothing to gain when dealing with 200Hz signals.

Basically this paper invented the problem – expensive radio – and then tried to work around it with CS. That didn’t work (MSP430 results), so they jumped to another platform (icyflex) and somehow pulled the 92% number out of their asses when in fact the number is ~25% (icyflex paired with Bluetooth, lossy DWT vs lossy CS). I could venture a guess that better optimized lossless compression could match CS on the same platform; after all, they concentrated on optimizing only CS.

I understand CS can go <1/8 of Nyquist–Shannon, but why bother when

-Nyquist is easily reachable

-Nyquist is free

-lossless is desirable

I can think of only one or two reasons: a fat research grant or a patent paid for by some corporation :-)

Rasz,

You are missing the point.

There is really nothing to optimize for CS. In fact, optimizing for the DWT scheme is far more expensive than the simple multiplexing occurring for CS. This is so because you have to spend much time and money trying to figure out how to design your sensor to perform the compression in the right kind of basis. In CS, you don’t care about these bases issues until you reconstruct the signal (i.e. much later)

There is also no rule for CS being 1/8 Nyquist, in fact, the CS rate is really dependent on how sparse the signal is. The sparser the signal the lower the rate. In fact, the specific case shown here is valid for one ECG electrode. The CS rate decreases when we add several electrodes as most of the other electrodes share some part of the signal together (joint sparsity). The overall effect of adding additional electrodes becomes more obvious with the utilization of CS.
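The dependence of the CS rate on sparsity can be written down. As a hedged reminder of the standard result for random measurement matrices (not a statement about the matrix this particular node uses): if a window of $n$ samples has only $k$ significant coefficients in some basis, the number of measurements $m$ needed for stable recovery scales as

```latex
m \;\geq\; C \, k \, \log\!\left(\frac{n}{k}\right)
```

where $C$ is a constant that depends on the measurement matrix and the recovery method. Here $n$, $k$, $m$ and $C$ are symbols introduced for this note. The ratio $m/n$ is therefore not a fixed fraction of Nyquist: it shrinks as the signal gets sparser (smaller $k$).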

Finally, you also make the point that Nyquist is free. Maybe so but if you have to devise an AI system on top of this sensor in order to produce some type of alert (as you know some people should have an ECG all day long for some diseases), then you are going to devise an algorithm that classifies this signal. And clearly you want that signal to be as small as possible so that any SVM/Classification algo can be fully tested. The Nyquist rate might buy you the ability to reconstruct perfectly the signal (without any notion of sparsity) but it will be a hindrance for classification purposes (especially with several electrodes) and will need to be done off-line (away from the sensor). On the other hand, CS buys you the ability to, for little additional power, perform classification on-the-fly, next to the sensor.

I hope this helped,

Igor.

I’m concerned about the lossy compression. If you are monitoring for abnormal EEG/EKG, would the shape of the waveform not be important? What is the accuracy of the reconstruction?

Also this smacks of pseudoscience; what are the real-world measured currents (sensor/transmitter side only) for doing this vs doing a basic (RLE/dictionary/etc) compression of the data? Nobody cares about bytes transferred, as tiny packets will have more overhead (i.e. lower data/mW) due to radio/protocol overhead.

Andrew,

Check the accuracy in the slides I pointed to earlier in this thread.

Pseudoscience ? wow.

Anyway as I said earlier, part of the idea is to eventually have a classification system as close as possible to the sensor. Another is to have many more electrodes than just one. A Nyquist rate signal could not possibly allow for classification without a bulky and power hungry overhead and would eventually be an issue for a larger set of electrodes.

Pseudoscience is perhaps a strong term; there was a lot of academic fluff on “fractal compression” years back that made a lot of claims similar to your own, and that is perhaps why my bs detector was going off.

I looked at the slides; lots of math and theoretical data but next to no actual empirical data aside from that one tiny chart in the one slide.

I didn’t see anything about comparison with simple lossless delta compression nor anything about buffering more data; What was the limiting factor in determining the beacon size? I think that your slides aim at academia and that is fine, but some nice big waveforms and data would have gone a lot farther to dismissing the skepticism you’re seeing here.

Again, I apologize for the pseudoscience jab. Do you have your raw data, DCT and CS data and current measurements available somewhere for the rest of us to be able to poke at? What about the questions I’d asked about accurate reproduction of the original waveform given the lossy compression?

It is very difficult (for me) to understand the benefit of CS, especially given that simple delta compression for a trivial waveform such as ECG should do fairly well with no error.
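For reference, the "simple delta compression" mentioned here fits in a few lines. This is a generic sketch of lossless delta coding, with made-up sample values standing in for one ECG beat; it is not something the project is known to have tested.

```python
def delta_encode(samples):
    """Keep the first sample, then store each sample as a difference from the previous one."""
    if not samples:
        return []
    deltas = [samples[0]]
    deltas.extend(curr - prev for prev, curr in zip(samples, samples[1:]))
    return deltas


def delta_decode(deltas):
    """Rebuild the original samples with a running sum."""
    samples = []
    total = 0
    for delta in deltas:
        total += delta
        samples.append(total)
    return samples


# Made-up 10-bit ADC values: flat baseline, a sharp peak, back to baseline.
samples = [512, 514, 517, 521, 560, 700, 640, 530, 515, 512]

deltas = delta_encode(samples)
print(deltas)  # [512, 2, 3, 4, 39, 140, -60, -110, -15, -3]

assert delta_decode(deltas) == samples  # lossless round trip
```

The deltas are small on the flat parts and large around the peak, so the actual byte savings depend on following this with a variable-length or entropy code. That second stage is where the sensor-side cost of a lossless scheme would show up.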

>Compressed Sensing is a paradigm shift because the encoding is very low power and most of the work is really performed after the signal has been acquired not during acquisition.

As paradigm shifts go, this one needs a cane and a hearing aid. Unless you think that compression after data acquisition and post-processing on the other side of the link after decompression is “new”.

The exact same paradigm shift occurred when someone discovered that you could use motors to play tapes of very long and complex telegraph messages in high-speed bursts, for decoding on the other side using the reverse process. Of course, that was circa a few years before WW1, so you’d hardly be expected to have seen your paradigm back when it had a full head of hair and could still get more women than Maurice Chevalier.

And, you probably missed it again when that Turing fellow was trying to bust a move before the big apple, when we used computers to decode radio transmissions. Or when we needed to decode pressure wave data while we practiced giving cancer to half of Nevada.

Like a bad movie vampire, your paradigm hung out with Von Braun and those silly rabbits at Lockheed, before graduating to doing secret squirrel spy stuff with bubbly torpedoes and bouncing recon images off satellites.

But like shag carpet, your paradigm will be back on the scene faster than lady gaga can peel her upper lip clear of hair, and so I would like to congratulate you on your clever iphone hack.

But in the meantime, go buy an ipad so you can find an appropriate audience for your hype. They’ll appreciate it. They dig anything.

You know what’s sad? Dissing a guy who is actually doing research on something near and dear to my heart.

Igor, please, I beg you to skip this bac quality research demo and go work on subcutaneous isolated differential eeg probes that use fiber optic thread to get a usable signal back to the analyzer.

EKG via bluetooth? Man, you’re WAY better than that. EKG has been done to death, as has remote EKG using every flavor of sensor/radio/data/compression AND visualization scheme known to man. Go find the BRAIN, man, and get back to us with the results.

– Abe N.

Andrew,

It’s good to have BS detectors up.

I think the reason you don’t see traces and reconstructions is really that the slides were aimed at a different audience. But in CS, the error is well controlled based on the information one has on the sparsity of the signal. I am sure that in the future the authors will also show real and reconstructed waveforms (I am sure they will read this thread at some point). See slide 30 of these slides:

http://lts2www.epfl.ch/~vandergh/Download/Marseille-2010.pdf

The “good” and “very good” limits are judgements made on how the data compares to the uncoded one. The DWT scheme (conventional compression scheme using wavelets) is the best one available, while the CS scheme is worse, but the time needed to run the encoding required for the DWT is 5 times more than for CS. The expectation would be for the encoding power level to also be in that ratio, but the total power number given shows only a slight advantage for CS (must be the radio).

The point of the presentation is to show preliminary results and the need to know what you are doing when implementing this CS technique. Unlike “Fractal Compression” :-), CS is a different way to perform sampling. It is known that it is not a cure-all but rather a different way of doing things. The community at large is still trying to figure out situations where it brings an enormous advantage over current undertakings. MRI is clearly a good example that benefited from it with no hardware change required; other areas are taking a little longer as hardware needs to be changed (the project shown here is a good example).

So I think the advantage is really that if you choose a simpler encoding hardware as well as a better radio you could really see CS shining through over both no encoding and the lossy DWT approach. The approach will eventually scale very well with multiple sensors as CS will provide additional theoretical arguments for higher compression. Eventually, the thought is that several sensors and a lightweight classification system are the targets to aim for. Again this is a work in progress.

As an outsider to the project, the most surprising thing to me was the fact that the iPhone could reconstruct the signal in real time. Up until three or four years ago, this was a feat that just could not be achieved; see for instance the second figure in this blog entry I wrote a while back on the performance of reconstruction algorithms:

http://nuit-blanche.blogspot.com/2009/12/cs-are-we-hitting-ceiling.html

The convergence of tremendous algorithm improvements in the past few years and the rise of small handheld computers like the iPhone makes this ECG system a unique project.

I hope I answered some of your questions; for more, you can ask the folks working on that project directly (icyflex,…). As this is a work in progress, I am sure they will update us on their next iteration, which will make the CS system a more obvious winner in the different trade studies.

Cheers,

Igor.

Bilbao bob,

Nice rant. In CS, compression and data acquisition are performed SIMULTANEOUSLY.

Cheers,

Igor.

Andrew,

I have responded to you but the comment awaits moderation. Sorry.

Abe N.

I am not involved in the project.

Cheers,

Igor.

This is a very interesting project, and interfacing it with some popular portable device for data manipulation and display is a good idea too (as a side note, I hope that it will not be limited to only one platform in the future).

One question however: how much more power does the receiving device need for decompression compared to regular data receiving?

Kris,

I am not sure how to answer that question, but in the DWT case the reconstruction involves one matrix-vector multiplication, whereas in the CS case you have at least an order of magnitude more matrix-vector multiplications.

Hope this helps,

igor.
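To give a feel for why CS reconstruction needs so many more matrix-vector multiplications, here is a toy decoder. It uses ISTA (iterative soft-thresholding), a textbook sparse-recovery method chosen here for brevity; the thread does not say which solver the iPhone app runs. For simplicity the signal is sparse in the sample domain rather than in a wavelet basis, and the sizes, the Gaussian matrix, the threshold `lam` and the iteration count are all illustrative assumptions.

```python
import math
import random


def matvec(a, x):
    """Compute A x for a dense matrix stored as a list of rows."""
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def rmatvec(a, r):
    """Compute A^T r without building the transpose."""
    out = [0.0] * len(a[0])
    for row, ri in zip(a, r):
        for j, aij in enumerate(row):
            out[j] += aij * ri
    return out


def soft_threshold(v, t):
    """Shrink every entry toward zero by t; entries smaller than t become zero."""
    return [math.copysign(max(abs(vi) - t, 0.0), vi) for vi in v]


def objective(a, y, x, lam):
    """0.5 * ||y - A x||^2 + lam * ||x||_1, the quantity ISTA decreases."""
    residual = [yi - pi for yi, pi in zip(y, matvec(a, x))]
    return 0.5 * sum(ri * ri for ri in residual) + lam * sum(abs(xi) for xi in x)


def ista(a, y, lam, iterations):
    """Iterative soft-thresholding: two matrix-vector products per iteration."""
    # Squared Frobenius norm: a conservative upper bound on the Lipschitz constant.
    step = 1.0 / sum(aij * aij for row in a for aij in row)
    x = [0.0] * len(a[0])
    for _ in range(iterations):
        residual = [yi - pi for yi, pi in zip(y, matvec(a, x))]
        gradient_step = [xi + step * gi for xi, gi in zip(x, rmatvec(a, residual))]
        x = soft_threshold(gradient_step, lam * step)
    return x


rng = random.Random(0)
m, n = 32, 64  # illustrative sizes
a = [[rng.gauss(0.0, 1.0) / math.sqrt(m) for _ in range(n)] for _ in range(m)]

x_true = [0.0] * n
for index in (5, 20, 41):  # three nonzero entries, arbitrary positions
    x_true[index] = rng.choice((-1.0, 1.0))

y = matvec(a, x_true)  # "sensor side": one product
LAM, ITERATIONS = 0.01, 300
x_hat = ista(a, y, LAM, ITERATIONS)  # "receiver side": 2 * ITERATIONS products

print("objective at zero:", objective(a, y, [0.0] * n, LAM))
print("objective at x_hat:", objective(a, y, x_hat, LAM))
```

With these settings the encoder costs one product and the decoder 600, which is the asymmetry described above. Faster solvers need far fewer iterations than this toy, but they remain iterative.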

That is what Igor was so impressed about Kris, looks like iphone handling decoding is a big improvement.

Personally I’m still not sold and think it’s just a gimmick to get grant money. Radio or visual domain on the other hand, now there CS could (will?) shine. I was blown away by the one pixel camera.

Wier cool!!!

I missed in the discussion one of the trade offs in low power wireless systems:

1.The more data you have to send, the more energy it costs.

2.Compressing the data before sending costs energy as well.

An elaborate algorithm may save on point 1 but cost more on point 2.

Wim
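Wim's two points amount to a simple energy budget per window of samples: energy to compress plus energy to transmit. The sketch below uses entirely hypothetical numbers (only the rough idea that a wavelet encoder costs several times more than a CS encoder, while producing fewer bytes, is taken from this thread) to show that which scheme wins depends on how expensive the radio is per byte.

```python
from dataclasses import dataclass


@dataclass
class Scheme:
    name: str
    compress_uj: float  # energy to compress one window, in microjoules (hypothetical)
    bytes_out: int      # bytes left to transmit for that window (hypothetical)


def total_energy_uj(scheme, radio_uj_per_byte):
    """Point 2 (compression cost) plus point 1 (transmission cost)."""
    return scheme.compress_uj + scheme.bytes_out * radio_uj_per_byte


def best_scheme(schemes, radio_uj_per_byte):
    """Name of the scheme with the lowest total energy for a given radio cost."""
    return min(schemes, key=lambda s: total_energy_uj(s, radio_uj_per_byte)).name


SCHEMES = [
    Scheme("none", compress_uj=0.0, bytes_out=512),
    Scheme("CS", compress_uj=20.0, bytes_out=256),
    Scheme("DWT", compress_uj=100.0, bytes_out=128),  # 5x the CS encoding cost
]

for radio_cost in (0.05, 0.2, 1.0):  # microjoules per byte, hypothetical radios
    print(radio_cost, "uJ/byte ->", best_scheme(SCHEMES, radio_cost))
# 0.05 uJ/byte -> none
# 0.2 uJ/byte -> CS
# 1.0 uJ/byte -> DWT
```

With a cheap radio nothing beats sending raw data, with an expensive one the heavier compressor pays for itself, and the cheap encoder only wins in between. Where a real node sits on that axis can only be settled by measuring it.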

Wim,

The point is that the power level for compression in CS is expected to be very low compared to conventional compression (DWT). So the idea is to compress using CS techniques (lower power than conventional systems) and then send as little information as possible over the wire (because the information is compressed, as opposed to no compression).

Where it gets interesting is that CS is not an optimal compression system compared to DWT. In effect, more data has to be sent over the wire with CS than with DWT. DWT on the other hand cost five times as much energy as CS for the compression step.

At the hardware level, the compression step is very different for CS and DWT; one is not just a by-product of the other, and the architecture has to be changed. Hence the trade studies… of which this project is a part.

Hope this helped,

Igor.