[Ralph] has been working on an extraordinarily tiny bootloader for the ATtiny85, and although coding in assembly does have some merits in this regard, writing in C and using AVR Libc is so much more convenient. Through his trials of slimming down pieces of code to the bare minimum, he’s found a few ways to easily trim a few bytes off code compiled with AVR-GCC.

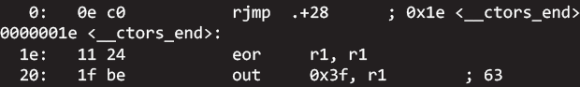

To test his ideas out, [Ralph] first coded up a short program that reads the ATtiny85’s internal temperature sensor. Disassembling the code, he found a jump to a function called __ctors_end: before the jump to main. This code sets the IO registers to their initial values. According to the ATtiny85 datasheet, these initial values are already 0 after reset, so that’s 16 bytes that can be saved. This function also sets the stack pointer to its initial value, so another 16 bytes can be optimized out.
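For a feel of what skipping the stock startup code can look like, here is a minimal sketch. It is not [Ralph]’s actual code: the name `reset_entry`, the file name `tiny.c`, the choice of PB0, and the build line are all assumptions. It relies on the default avr-gcc linker script placing the `.vectors` section at flash address 0, keeps the one instruction that zeroes r1, and uses no initialized globals because nothing here would copy `.data` or clear `.bss`.

```c
/* Assumed build line (not from the article):
 *   avr-gcc -mmcu=attiny85 -Os -nostartfiles tiny.c -o tiny.elf
 * The guard lets the file pass through a host compiler untouched. */
#if defined(__AVR__)
#include <avr/io.h>

/* With -nostartfiles there is no vector table and no crt startup code.
 * Putting this stub in .vectors makes it the first thing in flash,
 * i.e. the code executed at reset. The name is hypothetical. */
__attribute__((naked, used, section(".vectors")))
void reset_entry(void)
{
    __asm__ volatile(
        "clr r1\n\t"      /* compiled C code assumes r1 always holds 0 */
        "rjmp main\n\t"   /* SP and IO registers are left at reset values */
    );
}

int main(void)
{
    DDRB |= _BV(PB0);         /* PB0 as output (pin choice is arbitrary) */
    for (;;) {
        PINB = _BV(PB0);      /* writing 1 to PINB toggles PB0 */
    }
    /* never returns: without the crt there is nothing to return to */
}
#endif
```

Interrupts cannot be used with this layout, since the vector table is gone, and any resets that do not restore registers to their power-on values would need extra care.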

If you’re not using interrupts on an ATtiny, you can get rid of 30 bytes of code by getting rid of the interrupt vector table. In the end, [Ralph] was able to take a 274 byte program and trim it down to 190 bytes. Compared to the 8k of Flash on the ‘tiny85, it’s a small amount saved, but if you’re banging your head against the limitations of this micro’s storage, this might be a good place to start.
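The numbers above are easy to sanity-check. The sketch below only redoes the arithmetic from the figures quoted in the post; the function names are made up for illustration. Note that the three itemized savings (16 + 16 + 30 bytes) account for 62 of the 84 bytes, so the rest came from trims not broken out in this summary.

```c
#include <stdio.h>

/* Bytes removed between two builds. */
int bytes_saved(int before, int after)
{
    return before - after;
}

/* part/whole in tenths of a percent, rounded to nearest. */
int per_mille(int part, int whole)
{
    return (part * 1000 + whole / 2) / whole;
}

/* Prints the figures quoted in the post: 274 -> 190 bytes on an 8 KB part. */
void print_savings(void)
{
    int saved = bytes_saved(274, 190);
    int itemized = 16 + 16 + 30;   /* IO init, stack pointer init, vector table */

    printf("saved: %d bytes\n", saved);
    printf("not itemized: %d bytes\n", saved - itemized);
    printf("share of program: %d per mille\n", per_mille(saved, 274));
    printf("share of 8 KB flash: %d per mille\n", per_mille(saved, 8192));
}
```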

Now if you want to hear some stories about optimizing code you’ve got to check out the Once Upon Atari documentary. They spent months hand optimizing code to make it fit on the cartridges.

This would be even more beneficial on the Tiny25 and Tiny45 chips as they have 2KB and 4KB code space respectively.

They’re the same chips, with all code compatible between the three. The only difference is the amount of Flash, SRAM, and EEPROM in each.

I just did almost exactly the same thing on an ATTINY 4313A!….

http://ognite.wordpress.com/2013/11/12/how-small-can-an-avr-c-program-be/

-josh

Am I missing something? How does ORing a 1 in toggle the bit?

The IO ports on AVR chips have an interesting feature where setting a bit in the PIN register will toggle the state of the output pin. From the datasheet…

10.1.2 Toggling the Pin

“Writing a logic one to PINxn toggles the value of PORTxn, independent on the value of DDRxn. Note that the SBI instruction can be used to toggle one single bit in a port.”

This is very fast (1 cycle) and very cheap (1 word code), so very handy compared to the alternative ways of doing it.

-josh

*Some* AVR chips. Some of the old ones don’t do this (ATmega8, for instance.)

You need to have the bit in a register to get one cycle toggles. (OUT is one cycle, but SBI is two.)

Oh, and the reason you use “OR _BV(b)” in the code is because the AVR-GCC compiler knows to convert this to an SBI (set bit) instruction.

No, if you do an OR on the PINx register, you can toggle up to the 8 pins in one single instruction
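The toggle behaviour discussed in this thread can be modelled on a PC. This is a simplified host-side model, not real register access: the struct and function names are invented, and it assumes every pin is an output whose PINx read-back equals PORTx. It shows that writing a mask straight to PINx flips exactly those bits, while a read-modify-write OR (what `PINx |= mask` becomes when it is not compiled to a single SBI) also writes back every bit that currently reads 1 and so flips those too.

```c
#include <stdint.h>

/* Toy model of one AVR IO port with the PINx toggle feature. */
typedef struct {
    uint8_t port;  /* output latch (PORTx) */
    uint8_t ddr;   /* direction bits (DDRx), 1 = output */
} io_port_model;

/* Writing 1 bits to PINx toggles the matching PORTx bits. */
void write_pin(io_port_model *p, uint8_t value)
{
    p->port ^= value;
}

/* Simplification: output pins read back their PORTx bit, inputs read 0. */
uint8_t read_pin(const io_port_model *p)
{
    return p->port & p->ddr;
}

/* Models "PINx = mask": toggles only the bits in mask. */
void toggle_direct(io_port_model *p, uint8_t mask)
{
    write_pin(p, mask);
}

/* Models "PINx |= mask" done as read, OR, write-back. */
void toggle_rmw(io_port_model *p, uint8_t mask)
{
    write_pin(p, (uint8_t)(read_pin(p) | mask));
}
```

In this model, starting from PORT = 0x01 with all pins as outputs, `toggle_rmw(&p, 0x02)` also clears bit 0, which is why a plain assignment to PINx is the safer way to flip several pins at once.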

Cool. I like how you did it all in C. There’s more than one way to skin a cat…

I feel compelled to point out that while this can be a very powerful tool *in certain cases*, the user needs to be aware of the implications of using this. For example, at least on some processors (been a while since I used the AVR) the initial register values are guaranteed on power-on reset, but not always for other resets. If you soft reset (watchdog, code rollover, etc.) you can get undefined or weird behavior if your code assumes initializations that never happen. Just like the GOTO statement, these are neither good nor bad but make damn sure you know what you’re doing before you use them. That said, kudos to Ralph for having the skills to dig this deep.

This is a good start, however the example code forgets to export the symbols.

The best way is probably to take the original gcrt1.s and remove everything you don’t need:

http://svn.savannah.nongnu.org/viewvc/trunk/avr-libc/crt1/gcrt1.S?revision=2376&root=avr-libc&view=markup

“An uninitialized v’ble is like an unmade bed…you never know what’s been sleeping there”. However, if the variable is pre-initialized through some system feature, I guess one can violate this canon of programming. Still kinda makes my “hack”-les rise after 40 years of coding.

I’d not heard about ‘Once Upon Atari’ – how technical is it, say compared to ‘Racing the Beam’, which I thought was a fascinating read?

A few months ago Hackaday had a great post about assembly programming for the Atari:

http://hackaday.com/2013/06/05/retrotechtacular-how-i-wrote-pitfall-for-the-atari-2600/

Having been into assembly programming on the c64 back in the 80s, I gained a new appreciation for Atari programmers considering how limited the hardware platform was compared to the c64.

If you are this close to the limit, it’s time to choose a controller better suited to the application. And as others have said, be SURE your registers/variables will initialize properly before you go relying on this sort of hack.

But good work on digging in. Very good to investigate and gain this level of understanding.

The Atari link is a very relevant one. These sorts of tricks had to be employed regularly.

But gone are those days. If you come up a few bytes short, there’s always another family chip. (Hundreds in the Microchip line for ex). OR likely lots of room to optimize your application code.

But a hack like this stands on its own. “Just because I can” is good enough as a learning experience.

Your point is very good when applied to application development. Especially for one-off projects or low volume production, it’s false economy to pour so much engineering time into optimizations when slightly more expensive hardware eliminates the need.

However, for widely used bootloaders (the subject of this article) and libraries, the economics of optimization change. Those small gains benefit many thousands of users. They enable thousands of applications to use more of the chip. They give a mass produced product some additional selling point. (I could plug my own products here… but I’m going to refrain)

Of course, if your idea of fun is optimizing, then economics pretty much doesn’t enter into this at all. You could similarly say solving crossword puzzles isn’t a good use of time, but if you have fun solving difficult problems for the challenge or merely for the sake of doing so, then why not do something you love? Because someone on the internet believes it’s a waste of time?!

Exactly my point. Doing it for fun is perhaps the BEST reason.

I can see how in large production, a few bytes saved could allow you to use a slightly cheaper chip, and those pennies add up. Plus there is invested time learning one system and cash investment in tool chains.

But in this day and age, there really are way more chip options than you could want. Very few applications will sit right on that edge, where a few bytes equals more money…

And if they did, then I’d question the need for that boot loader to begin with. Free up that space with direct loading or have them burned in the chip plant.

Now I can see the situation wherein hardware was finalized before software, chip stock ordered, and THEN the code doesn’t fit.

You could save the day and potentially several hundred thousand dollars.

But someone seriously screwed the pooch in the selection process.

Paul & MRE are both right. I do it for the fun (mental challenge), and for the benefit of others. Although in many cases it’s easier to throw more hardware at problems, there are still situations where it’s not. For example there’s no pin-compatible replacement for the ATtiny85 that has more memory. And even if there was, new chips are rarely pin and bug compatible…

When I worked at eTek Labs/Forte Technologies on the Gravis Ultrasound/AMD Interwave projects, we had a DOS TSR called “SBOS” that provided Sound Blaster emulation from DOS. The thing would sit at port 220 (or whatever was appropriate), and through a series of heuristics, would interpret various FM waveform requests into wavetable pseudo-equivalents. It did a pretty dang good job. Our extensive library of games all sounded pretty darn good… especially the ones we liked… but they were always pushing the limits of what could fit in the RAM space reasonably.

It was not unheard-of for one of the wizards on that project to report as his weekly status “I was able to shave out 20 more bytes from SBOS.”… an important thing to do if you need to add more features to it.

Congrats for working on the GUS. That was an amazing piece of hardware back in the time.

You could go to a chip with more flash, but sometimes you just need a few bytes and there are external limitations (e.g., you don’t want to increase the price of your product).

Except that now, bigger chips are lower in price, especially Cortex-M0.

I used to love AVR and even program them in assembler nearly 15 years ago but today, I take a Kinetis KL or a LPC800

Getting close, but not quite there yet. Mouser qty 100 pricing for the ATtiny85-20SU is 66c, vs 96c for the MKL02Z32VFK4 (the cheapest Cortex MCU I can find with > 8KB flash)

Regarding those comments suggesting using a different (bigger) chip…

That’s not always an option. You might only find out that you’ve run out of space at the end of a project, maybe even after the hardware has been implemented and manufactured in bulk. If this is the case then you likely have no alternative but to go to extremes to shrink your code down to fit the chip you’re stuck with.

Feature creep may also endanger a product in this way… “Yeah the product is great as it stands but we just realised we also want it to play the star spangled banner in 8 bit 16KHz stereo, so if you can just go ahead and implement that for me that’d be great.”

Noooooo!!!!!

When in doubt blame feature creep. Really. Spec should be finalized before hardware ever hears of the project. No changes! Leave it for version 2.

I used to feel that way. It’s really quite comfortable and convenient for engineers to be relieved of all responsibility for a product’s definition. Just implement to a rigid requirements document, thoroughly specific in every detail.

That process actually can work in cases where whoever writes the spec is extremely familiar with the target market & customers AND can anticipate every technical detail. This sort of rigid spec works at huge companies with incredible marketing people aiming to produce “new” products that are really only minor or incremental changes from well established norms.

But when doing anything unfamiliar, or worse yet, anything innovative, who’s going to write that rigid spec? God? A soothsayer with a crystal ball? Marketing people without technical knowledge?

The worst case happens when engineers try to follow a rigid spec approach on an innovative project. The engineering thought process is to work diligently until the final product is done. But lack of mock-ups & early rapid prototyping, poor dialogue between all parties, and little earnest effort on the part of engineers to truly understand and review the spec early on is what causes terrible feature creep. Engineers of course rarely feel they’re responsible in any way for feature creep. Only near the “end” of the project, when changes are the most difficult and costly, are non-engineering people able to see and experience the product well enough to have useful input. It’s at that moment when feedback makes shortcomings in the original spec apparent. That’s the time when new opportunities are discovered.

This is why startups, where small groups of highly motivated people who are emotionally, and usually financially invested in the project’s success, and who are able to communicate well, are able to be far more innovative than large corporations, where the tendency is an “us versus them” mindset between departments, particularly engineering and marketing.

If you are close to an edge when finalizing version 1.0, you should opt for the next bigger chip (assuming the next bigger chip is only incrementally more expensive). That way, you have more room to play with before you HAVE to worry about version 2.0. If you can wiggle from 1.0 to 1.5.3.x.y.z before you NEED version 2, so much the better. If you can’t get beyond ver 1.0.9 before ver 2, that’s poor planning.

But, if the bosses don’t listen to the workers…

There is the business side too such as inventory and profits.

If you are churning out high volume product, even a $0.30 saving in BOM from not jumping to the next size up could pay for the NRE, i.e. the development cost for that effort. Especially when a product is near the end of a production cycle and your “features” start filling up the rest of the memory. What would you do if you have already maxed out the memory line for that part in that package? You don’t want to do a board spin and the usual EMC/compliance certifications when you could tighten the code and milk the design and the parts you have already bought in bulk for a few more batches of products.
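To put a rough number on that trade-off, here is a small break-even sketch. Only the $0.30 per-unit saving comes from the comment above; the function name and any engineering cost you feed it are hypothetical. Amounts are kept in cents to stay in integer arithmetic.

```c
#include <stdint.h>

/* Units that must ship before a per-unit BOM saving covers a one-off
 * engineering (NRE) cost. Both amounts are in cents; the result is
 * rounded up. Returns 0 as a sentinel when there is no saving at all. */
uint64_t break_even_units(uint64_t nre_cents, uint64_t saving_cents_per_unit)
{
    if (saving_cents_per_unit == 0) {
        return 0;
    }
    return (nre_cents + saving_cents_per_unit - 1) / saving_cents_per_unit;
}
```

With a made-up NRE of $30,000, `break_even_units(3000000, 30)` gives the volume at which the optimization effort has paid for itself.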

I’ve worked at a place where a product was produced in such volume that removing a single SMD resistor from the BOM would pay an engineer’s salary.

Oh yeah.. forgot about the boatload buyers. “We can get them a penny cheaper if we buy a million…. so, ALL of our products must use this chip, even if it’s suboptimal for the project.”

I love optimizations like this. They can be dangerous though.

For some really great 6502 asm code, look at Wozniak’s code for the old Apple II. He was one of the greats and able to do extreme optimizations on both hardware and software. His work on the disk drive interface was epic.

>if you’re banging your head against the limitations of this micro’s storage

you buy a better micro.

If you take care with your code I don’t see why it’s a big deal to skip the initialization. It’s not uncommon that I write something for the AVR and end up using only some of the registers. Why initialize what you don’t use?

Of course, I don’t make assumptions about the state of a register either.

Both good points.

Correct me if I’m wrong, but __ctors_end isn’t the name of the function. It’s the symbol marking the end of the constructors table. The compiler stores a list of function pointers to global constructors to call before the main function, i.e.

FuncPtr *f = __ctors_begin;

while (f != __ctors_end) {

(*f)();

f = f + 1;

}

It’s probably just some function that gets placed after the constructor table. Although it could actually be the code that also calls the constructors, which could cause problems if you ever use them (not that it’s a huge concern, I’ve never seen anybody use global constructors in C before).

NOTE: I have zero experience with AVR, this is based on my tinkering with compiler stuff while writing an OS kernel, so I could be entirely wrong
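The table walk described in this comment can be tried on a PC with an ordinary array standing in for the linker-provided table. Everything here is illustrative: the names are invented, and the real avr-libc symbol names and traversal order may differ from this model.

```c
/* A constructor is just a function taking and returning nothing. */
typedef void (*ctor_fn)(void);

static int calls;  /* records which stand-in constructors ran */

static void ctor_a(void) { calls += 1; }
static void ctor_b(void) { calls += 10; }

/* Stand-in for the table the linker would build between two symbols. */
static const ctor_fn ctor_table[] = { ctor_a, ctor_b };

/* Call every entry in the half-open range [begin, end). */
void run_ctors(const ctor_fn *begin, const ctor_fn *end)
{
    for (const ctor_fn *f = begin; f != end; ++f) {
        (*f)();
    }
}

/* Walk the stand-in table once and report what ran. */
int run_demo(void)
{
    calls = 0;
    run_ctors(ctor_table, ctor_table + sizeof ctor_table / sizeof ctor_table[0]);
    return calls;
}
```

The end pointer is never dereferenced, which is why a bare "end of table" symbol is enough to bound the loop.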

Devin, for the reasons you refer to, I would not recommend this for C++ code. And when you are using C++ on any platform, I would strongly advise against global instances. Not only is it bad style, you can’t count on the compiler to handle global instance dependencies (i.e. the constructor for Bar uses a global instance of Foo, and you have global instances of both Bar and Foo).

Except that GCC allows you to set a global constructor in C by adding __attribute__((constructor)) to a function. Although I have yet to ever actually see anybody use it, since it can easily be implemented by putting in a function call at the top of your main function.
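For anyone who has not seen the attribute in use, a minimal sketch follows. It works with GCC and Clang on a hosted system; the names `early_init` and `init_has_run` are made up. On a build where the startup code has been stripped out, nothing would walk the constructor table, so a function like this would presumably never be called.

```c
static int init_ran;  /* set by the constructor before main() starts */

/* GCC/Clang extension: run this function before main(). */
__attribute__((constructor))
static void early_init(void)
{
    init_ran = 1;
}

/* Lets other code check whether the constructor has executed. */
int init_has_run(void)
{
    return init_ran;
}
```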

It is NOT safe to remove the initial “eor r1, r1”, because C code expects R1 to contain a “known zero”, and the CPU registers are NOT cleared on a reset.

This was actually the cause of a rather mysterious Arduino bug: http://code.google.com/p/optiboot/issues/detail?id=26

You might want to look at some of the other things used in optiboot as well (like OS_main attribute)

Is this an issue with the ATtiny series too? If so, it’s only two extra bytes to fix. I have been looking at the optiboot code, and it looks like the VIRTUAL_BOOT_PARTITION code uses the WDT vector to save the original application reset vector. If the application uses watchdog interrupts, then you’re screwed. ATB and tinysafeboot look like they save the original reset vector in a separate space in flash. I’m coding something similar in picoboot; the last word of the last free page before the bootloader code will be the rjmp to the start of the application.
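Because rjmp is a relative jump, an application’s reset instruction cannot simply be copied to another flash location; it has to be re-encoded for its new address. The sketch below shows that arithmetic for a part with 4096 words of flash such as the ATtiny85, where the 12-bit offset wraps around the whole address space. It is not taken from picoboot or optiboot, the function names are invented, and it assumes the application’s reset vector really is an rjmp.

```c
#include <stdint.h>

#define FLASH_WORDS 4096u  /* ATtiny85: 8 KB flash = 4096 16-bit words */

/* Opcode for an rjmp stored at word address `from` that lands on `to`.
 * Encoding: 1100 kkkk kkkk kkkk, with PC <- PC + k + 1. */
uint16_t rjmp_encode(uint16_t from, uint16_t to)
{
    uint16_t k = (uint16_t)(to - from - 1u) & 0x0FFFu;
    return (uint16_t)(0xC000u | k);
}

/* Word address reached by an rjmp opcode stored at `from`.
 * The 12-bit offset is applied modulo the 4096-word flash. */
uint16_t rjmp_target(uint16_t from, uint16_t opcode)
{
    return (uint16_t)((from + 1u + (opcode & 0x0FFFu)) % FLASH_WORDS);
}

/* Re-encode an rjmp so it reaches the same target from a new address. */
uint16_t rjmp_relocate(uint16_t old_addr, uint16_t new_addr, uint16_t opcode)
{
    return rjmp_encode(new_addr, rjmp_target(old_addr, opcode));
}
```

A bootloader using this approach would read the application’s word at address 0, call something like `rjmp_relocate(0, slot, opcode)` with `slot` being the word just below the bootloader, store the result there, and then write its own rjmp into the reset vector.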