There are a few ways of playing .WAV files with a microcontroller, but other than that, doing any sort of serious audio processing has required a significantly beefier processor. This isn’t the case anymore: [Paul Stoffregen] has just released his Teensy Audio Library, a library for the ARM Cortex-M4 found in the Teensy 3 that does WAV playback and recording, synthesis, analysis, effects, filtering, mixing, and internal signal routing in CD-quality audio.

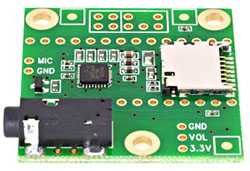

This is an impressive bit of code, made possible only because of the ARM Cortex-M4 DSP instructions found in the Teensy 3.1. It won’t run on an 8-bit micro, or even on the Cortex-M3-based Arduino Due. This is a project meant for the Teensy, although [Paul] has open sourced everything and put it up on GitHub. There’s also a neat little audio adapter board for the Teensy 3 with a microSD card holder, a 1/8″ jack, and a connector for a microphone.

In addition to audio recording and playback, there’s also a great FFT object that will split your audio spectrum into 512 bins, updated at 86Hz. If you want a sound-reactive LED project, there ya go. There are also quite a few synthesis functions for sine, saw, triangle, square, pulse, and arbitrary waveforms, a few effects functions for chorus, flanging, and envelope filters, and a GUI audio system design tool that will output code directly to the Arduino IDE for uploading to the Teensy.
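Where do 512 bins and 86Hz come from? A plausible reading, assuming 44.1 kHz input and a 1024-point FFT (an N-point FFT of real audio yields N/2 useful bins): each bin is about 43 Hz wide, and producing a new result every 512 samples gives about 86 updates per second, which suggests half-overlapping windows. This is an inference from the quoted numbers, not a description of the library’s code, and the helper names below are made up for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>

// Assumptions: CD-quality 44.1 kHz input and a 1024-point FFT whose
// 512 usable bins span 0 Hz up to the Nyquist frequency.
constexpr double kSampleRate = 44100.0;
constexpr std::size_t kFftSize = 1024;
constexpr std::size_t kNumBins = kFftSize / 2;

// Width of one bin in Hz (about 43 Hz here).
constexpr double binWidthHz() { return kSampleRate / kFftSize; }

// Center frequency of bin i; bin 0 is DC.
double binCenterHz(std::size_t i) {
  assert(i < kNumBins);
  return static_cast<double>(i) * binWidthHz();
}

// Nearest bin for a frequency, clamped to the last bin at Nyquist.
std::size_t binForFrequency(double hz) {
  assert(hz >= 0.0 && hz <= kSampleRate / 2);
  std::size_t idx = static_cast<std::size_t>(hz / binWidthHz() + 0.5);
  return std::min(idx, kNumBins - 1);
}

// Output rate if a new FFT result is produced every `hop` samples.
constexpr double updateRateHz(std::size_t hop) { return kSampleRate / hop; }
```

With a hop of 512 samples (half the window), `updateRateHz(512)` is about 86.1 Hz, matching the quoted figure, and a 1 kHz tone lands in bin 23. For an LED visualizer you would typically sum groups of adjacent bins into a handful of bands.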

It’s really an incredible amount of work, and with the number of features that went into this, we can easily see the quality of homebrew musical instruments increasing drastically over the next few months. This thing has DIY Akai MPC/Monome, pseudo-analog synth, or portable effects box written all over it.

‘…there’s also a great FFT object that will split your audio spectrum into 512 bins, updated at 86Hz.’

Home brew vocoder?

this is exactly what the Boxie was built on — https://www.kickstarter.com/projects/lightshowspeaker/boxie-a-speaker-with-a-built-in-light-show — it’s an extremely powerful platform for not that much $$$

so, have you built a home brew vocoder yet? i see that there’s the fft and also a sinewave synth, but one would have to generate a couple of sines plus maybe add some noise to actually make it sound natural. i’d like to do something like this but i want to check out whether somebody else has done it already.

There was some discussion about such a project, but as far as I know, nobody has done this yet. Or if they have, they haven’t published it anywhere I’ve seen.

The best place to discuss this Teensy project is this forum:

http://forum.pjrc.com/forum.php

thanks paul, i’ll change over to pjrc.com. in the meantime, i found a little arduino / processing pair that allows for generating a waveform plus sequences of definable length and pitch with a processing ui, and playing it on an arduino through some external resistors etc: https://raw.githubusercontent.com/ahmattox/archive.anthonymattox.com/master/2007-2011-blog/arduino-synthesizer.html here’s also a video: http://www.synthtopia.com/content/2009/05/13/arduino-processing-synthesizer/ the code is not professional, but readable and certainly good enough as a starting point. certainly, i’ll use the teensy with its audio shield (applause for developing that, btw! hope it’ll arrive soon).

I’ve been using the Audio library in a few projects, and I really like the whole AudioConnection model it uses, even though it makes complex effects a bit more complicated than they would be in a more low-level library. I just hope the cruftiness is lower in the full release version, the dev version was definitely dev.

The inter-object streaming is actually done very efficiently by shared buffers, using a shared copy-on-write scheme when one output is sent to multiple inputs. For example, the Synthesis > PlaySynthMusic sketch has 16 waveform generators, 16 envelopes and 6 mixers, connected by 42 AudioConnection objects. While playing the William Tell Overture, which does use all 16 voices in many places, the worst case CPU usage is about 33%.
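A minimal sketch of the shared-buffer, copy-on-write idea in portable C++: fanning one output out to several inputs only hands out extra references to the same block, and a consumer that wants to modify samples gets a private copy only if the block is still shared. This uses `std::shared_ptr` in place of whatever fixed block pool and reference counting the library uses internally; `BlockRef`, `fanOut`, and the 128-sample block size are assumptions chosen for illustration.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <utility>

// Assumed block size for illustration only.
constexpr std::size_t kBlockSamples = 128;
using AudioBlock = std::array<int16_t, kBlockSamples>;

// Hypothetical handle: many readers may share one block; a writer gets
// its own copy only if someone else still holds the same block.
class BlockRef {
 public:
  explicit BlockRef(std::shared_ptr<AudioBlock> b) : block_(std::move(b)) {
    assert(block_ != nullptr);
  }

  // Read-only access never copies.
  const AudioBlock& read() const { return *block_; }

  // Writable access: copy-on-write if the block is shared.
  AudioBlock& write() {
    if (block_.use_count() > 1) {
      block_ = std::make_shared<AudioBlock>(*block_);  // private copy
    }
    return *block_;
  }

  long sharers() const { return block_.use_count(); }

 private:
  std::shared_ptr<AudioBlock> block_;
};

// One output fanned out to three inputs: each input gets a handle to the
// same block, so no samples are copied at fan-out time.
std::array<BlockRef, 3> fanOut(const std::shared_ptr<AudioBlock>& src) {
  return {BlockRef(src), BlockRef(src), BlockRef(src)};
}
```

Only a consumer that actually modifies samples pays for a copy; pure readers (a mixer input, an analyzer) never do, which is what keeps a 42-connection patch cheap.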

But yes, on the development roadmap page, buried in “More Waveform Control Signals”, are plans to look into how people actually use the object connections in larger, more complex synthesis projects. Future versions will likely create more sophisticated control signal generator objects, or even objects that integrate things more tightly, for maximum efficiency. So far, relatively few such synthesis projects have been written (and shared), so it’s just too early to do that sort of optimization work. As more real-world projects are made, optimization efforts will work with those projects as test cases.

However, one thing I’ve learned over the last year developing this library is that DSP optimizations on Cortex-M4 are not always intuitive. If you’re used to 8-bit microcontrollers, the idea of writing 256 bytes out to RAM, only to read them back in later, may seem terribly wasteful. But on ARM Cortex-M4, the bus writes 4 bytes at once, and it has a burst mode for approx 400 Mbyte/sec if you read/write blocks of 8, 16 or 32 samples. Likewise, the DSP extension instructions work on packed registers (two 16-bit samples in a single 32-bit register), so oftentimes the “unnecessary” writing and reading of data ends up being a huge win, because it lets you leverage the ARM register set with those instructions (for one optimized algorithm at a time) and burst memory access. The hardware has a lot of really amazing capability.
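To make the packed-register point concrete, here is a portable emulation of a dual 16×16 multiply-accumulate, the operation the Cortex-M4 SMLAD instruction performs in one instruction on two packed 16-bit samples. On the real chip you would load each 32-bit word straight from the sample arrays and use the instruction (or the CMSIS `__SMLAD` intrinsic); the `pack2`, `dualMac`, and `dotProduct` helpers here are hypothetical and only model the arithmetic.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Pack two 16-bit samples into one 32-bit word (low half = first sample),
// the same layout a little-endian int16_t array already has in memory.
uint32_t pack2(int16_t lo, int16_t hi) {
  return static_cast<uint32_t>(static_cast<uint16_t>(lo)) |
         (static_cast<uint32_t>(static_cast<uint16_t>(hi)) << 16);
}

int16_t lowHalf(uint32_t w) { return static_cast<int16_t>(w & 0xFFFFu); }
int16_t highHalf(uint32_t w) { return static_cast<int16_t>(w >> 16); }

// acc + lo(x)*lo(y) + hi(x)*hi(y): two multiplies and two adds at once.
int32_t dualMac(uint32_t x, uint32_t y, int32_t acc) {
  int64_t sum = static_cast<int64_t>(acc) +
                static_cast<int32_t>(lowHalf(x)) * lowHalf(y) +
                static_cast<int32_t>(highHalf(x)) * highHalf(y);
  return static_cast<int32_t>(sum);  // truncated to 32 bits, like a register
}

// Dot product of two sample arrays, two samples per step (n must be even).
int32_t dotProduct(const int16_t* a, const int16_t* b, std::size_t n) {
  assert(n % 2 == 0);
  int32_t acc = 0;
  for (std::size_t i = 0; i < n; i += 2) {
    acc = dualMac(pack2(a[i], a[i + 1]), pack2(b[i], b[i + 1]), acc);
  }
  return acc;
}
```

The loop runs half as many iterations as a sample-at-a-time version, which is why keeping data in packed 32-bit words pays off, even if it means writing it out and reading it back.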

Over the next year, I intend to do much more optimization, and expand the library feature set pretty substantially, as described on the roadmap page. For optimization in particular, more large-scale projects need to be created and shared before that work can even begin.

I have used my teensy for a lot of audio/synthesis projects, but I was unaware of this library and have so far hacked my own with varying degrees of success. I can’t wait to get into this, great work!

Also, I’d like to say thanks for giving the beta versions a try. It was a bumpy ride at times, especially with the API changes over the last several months. I waited for quite some time before making this 1.0 release, because I really wanted to start with a very stable API.

You sure are making it extremely difficult for people not to buy a Teensy. (c:

I saw a beta version of this running at Maker Faire this year…the FFT was able to run concurrently with a crapload (few thousand) WS2812 pixels displaying visualizations. Amazing what is possible with tiny chips these days, if you’re willing (as Paul is) to dig into the capabilities.

“There are a few ways of playing .WAV files with a microcontroller, but other than that, doing any sort of serious audio processing has required a significantly beefier processor.”

No it hasn’t; lots of microcontrollers (like the Freescale K20 found in the Teensy) are perfectly capable of doing “serious audio processing” — otherwise this library would be useless!

If you’re going to use uncited material in your ledes, at least spend a moment thinking about whether or not that material is actually accurate.

I see random bits of made-up bullshit all the time in HAD articles. I’m not trying to nit-pick, but this fast-and-loose editing is really bothersome; casual readers may think the (normally quite intelligent) project authors are making those claims.

I second your feelings. Also, in this instance, I’m at a loss to understand how a 72 MHz ARM with 64K RAM doesn’t qualify as “significantly beefier” than your typical $0.50 MCU.

The SoC at the heart of many of the pre-iPod MP3 players was a 72MHz ARM720T. And unlike the MK20 with its fancy-pants floating point, all of the MP3 decoding was done in fixed point math and had ample cycles to spare.

Indeed. I am thinking about the Phatnoise Phatbox media player in my car, designed over 10 years ago: a 74MHz ARM7 SoC from Cirrus, playing WAV, FLAC, AAC, WMA, Ogg, etc. Of course there’s a hell of a lot more RAM in the Phatbox (it also runs Linux and has a hard drive), but the point is valid: the amount of CPU grunt is certainly sufficient. What is cool about this implementation is the library support, with a very practical memory footprint, on a very compact and low-cost platform.

Yep, Phatnoise used the EP7312. MP3 players that used an HDD (Phatnoise, Creative Nomad) or CD (Rio Volt) needed extra space for disk buffering and the music database. These wouldn’t all fit in the on-chip 48k SRAM, so they used off-chip SDRAM. But flash players with smaller storage got away with the cheaper EP7309, which was identical to the ’12 but without the SDRAM interface bonded out.

In both cases, Cirrus provided customers with a decoder stack for MP3 and other formats that could run comfortably out of the on-chip SRAM.

The MK20 used on Teensy 3.1 has DSP instructions but no FPU.

The K20 doesn’t have hardware floating-point math. DSP instructions and hardware multiply and divide do not imply floating point. (Fixed-point DSP exists.)
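Fixed-point DSP usually means something like Q15 arithmetic, where a 16-bit integer n stands for n/32768, covering the range [-1, 1). Below is a minimal sketch of its core operation, a rounded and saturated Q15 multiply, in portable C++. The function names are illustrative and are not taken from the Teensy library or CMSIS.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Convert a real value in [-1, 1) to Q15, saturating out-of-range inputs.
int16_t toQ15(double x) {
  double scaled = x * 32768.0;
  if (scaled > 32767.0) scaled = 32767.0;
  if (scaled < -32768.0) scaled = -32768.0;
  return static_cast<int16_t>(std::lround(scaled));
}

double fromQ15(int16_t q) { return q / 32768.0; }

// Q15 x Q15: the 32-bit product is Q30; add half an LSB, shift back to Q15
// (arithmetic shift, guaranteed since C++20), then saturate.
int16_t mulQ15(int16_t a, int16_t b) {
  int32_t p = static_cast<int32_t>(a) * b;
  p = (p + (1 << 14)) >> 15;
  if (p > 32767) p = 32767;  // only (-1) * (-1) overflows
  if (p < -32768) p = -32768;
  return static_cast<int16_t>(p);
}
```

For example, 0.5 × 0.5 gives 8192 (0.25), and -1 × -1 is the one product that does not fit, so it saturates to 32767. Everything here is integer multiply, add and shift, which is exactly what a core without an FPU does well.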

Brian Benchoff didn’t write “typical $0.50 MCU” — he wrote “doing any sort of serious audio processing has required a significantly beefier processor [than a microcontroller]” — which is flat-out incorrect. That was my only point. Random bits of unsubstantiated nonsense thrown into articles for no reason at all. It needs to stop.

Also, a $2.45 K20 isn’t particularly beefy. It doesn’t have an FPU, and is only clocked at 72 MHz. That’s less than twice as fast as most of Freescale’s “typical $0.50 microcontrollers”, which clock at 48 MHz.

You guys are correct, on a purely technical level regarding only hardware. ARM Cortex-M4 has been on the market for a few years, and many dedicated DSP chips have been around for a very long time.

But that’s really taking Brian’s words out of context. This is an article about software, which enables you to easily use the hardware. In the context of a ready-to-use hardware+software combination, “doing any sort of serious audio processing has required a significantly beefier processor” is indeed true. Until now, nobody has published such a library.

Well, except such software has also been around for quite some time, in proprietary form, either embedded inside fixed-function chips, or as an expensive library (the main one is from a company called DSP Concepts). Likewise, the mp3 decoding done inside the iPod, on similar hardware, is proprietary code. Really, the context of this article is free, open source software, and more specifically, open source software meant to be easy for hobbyists to use.

Even then, you might point to the Open Music Labs Codec Shield running on Arduino Maple, or a HiFi library for Arduino Due, which have existed for some time. But if you compare the capability of those libraries to easily implement sophisticated audio projects, well, there’s really no comparison. While they’re nice starting points, providing 1 sample at a time in a low-latency interrupt callback is a long way from interconnecting objects in a GUI and exporting code that runs automatically in the background, with efficient DMA and nested interrupt priorities, so other interrupt-based libraries and ordinary simple (blocking) main program code can be used. Thin abstraction layers that give only simple access to the raw hardware capability are a LONG way from a comprehensive framework that makes high-level tasks simple and tolerates blocking, high-latency code in the main program.

Still, your points about hardware are well made, and technically accurate. I only hope you can see you’re nitpicking about raw hardware capability, in response to an article that is actually about a software library meant to enable hobbyists to much more easily create awesome sound projects on such hardware.

Paul is my hero

Hold on. I’m trying to parse this criticism.

> No it hasn’t; lots of microcontrollers (like the Freescale K20 found in the Teensy) are perfectly capable of doing “serious audio processing” — otherwise this library would be useless!

So, some guy creates a new library for audio processing that’s better than anything out there. In the writeup, I say that, in the past, audio processing has required more powerful processors. The criticism, “lots of micros are capable of doing serious audio processing, otherwise this library would be useless”, is supposed to negate my statement that audio processing was lacking in the past.

There are quite a few leaps of logic to get to that criticism.

But whatever. Let’s look at the meat of the statement, “requires a significantly beefier processor to do audio processing.” What functions does this library have that bring previously unheard-of capabilities to a small micro? Simultaneous playback of different WAV files? I’m not aware of any embedded devices (not running a *nix) that can do that. Trust me, I’ve looked. Effects processing and envelope filters? Again, that’s the realm of DSPs or some really cool chips I want to try out.

I get what you’re saying. You don’t want random bullshit in articles. I’m with you on that. However, this really isn’t random bullshit. It only becomes random bullshit when you take it out of context, and complain about your own interpretation of it.

How about the WAV Trigger by Robertsonics? I’m pretty sure it isn’t running a *nix. Not completely 100% on that, but /pretty/ sure… it does have an STM32 on it, though. “Plays up to 14 stereo tracks … simultaneously” according to SparkFun’s page on it, which is here –> https://www.sparkfun.com/products/12897

Well that’s nifty.

I used one of these in a project recently. It does sound lovely! The quality surprised me.

The WAV Trigger is nothing more than an STM32F4, a stereo DAC and a microSD socket, along with bare-metal firmware highly optimized for reading and mixing WAV files over an SDIO interface. It can sustain 14 independent stereo 44.1kHz tracks, with independent dynamic gain control and built in fades. With a configurable MIDI interface, sound bank switching, a “voice stealing” algorithm, programmable attacks/decays, and a latency of around 8ms, it makes a very playable polyphonic sampling instrument.

The limiting factor is getting data off the microSD card, not processor cycles. Planning to add programmable EQ to the output.
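Some quick arithmetic shows why card throughput, rather than the CPU, can be the bottleneck. Assuming 16-bit stereo WAV files at 44.1 kHz, 14 simultaneous tracks need roughly 2.47 MB/s of sustained reads, before any file-system or seek overhead. The second helper sizes a buffer holding a given duration of all tracks; using 8 ms there only borrows the latency figure above for scale and is not a claim about how the WAV Trigger firmware actually buffers data.

```cpp
#include <cassert>
#include <cstdint>

// Sustained bytes per second needed to stream uncompressed PCM tracks.
constexpr uint64_t pcmBytesPerSecond(uint32_t tracks, uint32_t sampleRate,
                                     uint32_t channels, uint32_t bytesPerSample) {
  return static_cast<uint64_t>(tracks) * sampleRate * channels * bytesPerSample;
}

// Bytes needed to hold `ms` milliseconds of that stream, rounded up.
constexpr uint64_t bytesForDurationMs(uint64_t bytesPerSecond, uint32_t ms) {
  return (bytesPerSecond * ms + 999) / 1000;
}

// 14 stereo tracks, 44.1 kHz, 16-bit samples.
static_assert(pcmBytesPerSecond(14, 44100, 2, 2) == 2469600,
              "14 x 44100 x 2 channels x 2 bytes");
```

For 8 ms of all 14 tracks this comes to 19,757 bytes, and the card has to refill that continuously while also seeking between up to 14 different files.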

i ordered the board today (the Micro Python Audio Skin), but i’m buying this one as well now to give it a try, hoping to get better results. Thanks for the post.

thanks guys for the post, it seems a nice solution for my audio prototype, but i’m going with micro python because i already ordered the Micro Python board and Audio Kit.

cool

At last the stable release is out! Great work Paul, as usual! I’m definitely going to test the whole thing with my DIY MPC style MIDI controller and see what happens.

More importantly, I shall now remove the dust from my USB Audio mod for the Teensy and definitely merge it with the official Audio lib!

Cheers,

Mick

Open source libraries are fine, but what happens when a company just stops caring like TinkerKit apparently has and lets their website go away?

You can piece some of it from Github, but a lot of education goes away. The community needs sites to mirror these resources in case the original links go away (like TinkerKit).

Awesome work Paul! Keep up the great open source projects.

(from a previous 8051 MP3 player customer)

Anyone know if this works with the STM32F4DISCOVERY? It already has the 3.5mm port and is pretty cheap (plus I have one in my parts drawer!)

NO, it is designed for the Teensy 3. Why would it work on another random codec?

I guess I was asking whether it used Freescale-specific instructions/libs, or mostly generic C code or ARM/CMSIS-type stuff.

I assume it would probably at least need the DAC/ADC and possibly the timer stuff porting, but that could be fairly light work.

Seems to be a lot of talk around the STM32F4 being good for DSP (e.g. diydsp.com) and the STM32F4 Discovery has the “CS43L22 Portable Audio DAC with Integrated Class D Speaker Driver” on board.

Yes, it uses Freescale-specific registers, Freescale’s eDMA engine, and other Freescale-specific hardware features. It’s also built on top of some Teensyduino software infrastructure, which attempts to allow separately developed libraries to coordinate their allocation of those Freescale-specific hardware features.

The library is probably portable to other Freescale K-series Kinetis parts. But porting it to other chips, even Freescale’s other (not K-series) Kinetis chips, would require quite a lot of work.

Unfortunately, highly optimized performance and portability across many different microcontrollers are often conflicting goals. A tremendous amount of work has gone into optimizing this library for excellent performance, but some of that optimization comes at the expense of portability, even to Freescale’s other chips.

I know a bit about microcontrollers, but zip about audio processing. Could this be used to selectively amplify certain frequencies? I’m going tomorrow to get a hearing aid because certain frequencies I hear fine and others I’m nearly deaf in (higher ones where most women’s and kid’s voices fall).

>I’m nearly deaf in (higher ones where most women’s and kid’s voices fall)

and why would you want a hearing aid??? :)))

When I got the initial test done, the dr. started laughing at the readout. At first I was like, “What the hell?” Then he said I have a medical reason for not listening to my wife and he would write me a note if I needed it.

Yes, I believe you could amplify certain frequencies pretty easily.

Open the graphical design tool. This lets you do the audio design easily in your web browser, to plan how it’ll work. Use “Export” to generate the code when it’s designed.

First grab an I2S input. That gives you the live input signal.

Then scroll down to the filters section and grab one or more of the filters. The Biquad filter is probably the simplest, since it has easy functions you can call from setup() to configure it for bandpass (just 2 parameters, the frequency and “Q” for how wide or narrow the band response should be). Or you could use the FIR filter, which is very flexible, and might be a better choice. To configure that one, follow the link in its description to that filter design site and fiddle with the settings to get an array of numbers for the filter response you want. Or you could implement both filters, or even multiple copies of each.
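For a feel of what a bandpass biquad computes from just a frequency and a Q, here is a floating-point sketch using the well-known RBJ “Audio EQ Cookbook” bandpass formulas (constant 0 dB peak gain), run in transposed direct form II. It is not the library’s own implementation, which you configure through its own functions from setup(); the `Biquad` struct and `configureBandpass(freq, q, sampleRate)` are hypothetical names for illustration.

```cpp
#include <cassert>
#include <cmath>

struct Biquad {
  double b0 = 1.0, b1 = 0.0, b2 = 0.0, a1 = 0.0, a2 = 0.0;  // a0 normalized to 1
  double z1 = 0.0, z2 = 0.0;                                // filter state

  // Bandpass with unity gain at `freq`; larger q means a narrower band.
  void configureBandpass(double freq, double q, double sampleRate) {
    assert(freq > 0.0 && freq < sampleRate / 2 && q > 0.0);
    const double kPi = 3.14159265358979323846;
    double w0 = 2.0 * kPi * freq / sampleRate;
    double alpha = std::sin(w0) / (2.0 * q);
    double a0 = 1.0 + alpha;
    b0 = alpha / a0;
    b1 = 0.0;
    b2 = -alpha / a0;
    a1 = -2.0 * std::cos(w0) / a0;
    a2 = (1.0 - alpha) / a0;
    z1 = z2 = 0.0;
  }

  // Transposed direct form II: one output sample per input sample.
  double process(double x) {
    double y = b0 * x + z1;
    z1 = b1 * x - a1 * y + z2;
    z2 = b2 * x - a2 * y;
    return y;
  }
};
```

A sine at the center frequency passes at roughly unity gain, while one far below the band is strongly attenuated; lowering Q widens the band. Each output sample costs five multiplies, which is why the biquad is so cheap compared with a long FIR.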

You’ll need a mixer object to add together the filter output and the original signal. Then route the mixer to an I2S output, so you can get the summed signal to drive into headphones or an earpiece.

In setup(), call the functions to configure the filters, and use mixer1.gain() to configure how much of the original signal and how much of the filtered signal to pass to the output. You probably want the total gain to be 1.0, or perhaps slightly less if your filter has gain (and in that case, you might need a dummy mixer in front of the filter, to reduce the signal slightly, so it can’t get clipped inside the filter).
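The gain advice can be illustrated with a hypothetical four-input mixer in plain C++. It multiplies each input by its gain, sums, and saturates to the 16-bit sample range, so it shows why gains adding up to more than 1.0 can clip full-scale signals. This is a sketch of the idea, not the library’s mixer code; `mixBlock` and `saturate16` are made-up names, and the float gains mirror the values you would pass to mixer1.gain().

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>

constexpr std::size_t kMixerInputs = 4;

// Clamp a rounded sum back into the signed 16-bit sample range.
int16_t saturate16(long v) {
  if (v > INT16_MAX) return INT16_MAX;
  if (v < INT16_MIN) return INT16_MIN;
  return static_cast<int16_t>(v);
}

// Hypothetical mixer: out[i] = sum over k of gains[k] * inputs[k][i].
// A null input pointer means nothing is connected to that channel.
void mixBlock(const std::array<const int16_t*, kMixerInputs>& inputs,
              const std::array<float, kMixerInputs>& gains,
              int16_t* out, std::size_t count) {
  assert(out != nullptr);
  for (std::size_t i = 0; i < count; ++i) {
    float acc = 0.0f;
    for (std::size_t k = 0; k < kMixerInputs; ++k) {
      if (inputs[k] != nullptr) acc += gains[k] * inputs[k][i];
    }
    out[i] = saturate16(std::lround(acc));
  }
}
```

With gains of 0.5 and 0.5, two identical inputs of 20000 come out at 20000; with 1.0 and 1.0 the sum is 40000, which saturates at 32767 and distorts. That is the clipping the total-gain-of-1.0 rule is meant to avoid.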

If you want to amplify multiple regions, you could create several filters and mix them together with the original signal in pretty much any combination. The FIR filter uses more CPU time (Biquad is extremely efficient), so if you create more than a few FIRs, put a bit of code in loop() to print the max CPU usage, just to check that you’re not getting close to consuming all the CPU time.
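A back-of-the-envelope count shows why FIR filters cost more than biquads. Assumptions: a direct-form FIR does one multiply-accumulate per tap per sample, a biquad stage does five multiplies per sample, and (optimistically) the CPU retires one such operation per cycle at 72 MHz. Real code also spends cycles on loads, stores, and overhead, so the measured CPU usage printed from loop() is what actually counts; the 100-tap figure below is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Direct-form FIR: one multiply-accumulate per tap per output sample.
constexpr uint64_t firMacsPerSecond(uint32_t taps, uint32_t sampleRate) {
  return static_cast<uint64_t>(taps) * sampleRate;
}

// One biquad stage: five coefficient multiplies per output sample.
constexpr uint64_t biquadMultipliesPerSecond(uint32_t stages, uint32_t sampleRate) {
  return 5ull * stages * sampleRate;
}

// Fraction of the CPU consumed, optimistically assuming one operation per
// clock cycle and ignoring loads, stores, and loop overhead.
double cpuFraction(uint64_t opsPerSecond, uint64_t cpuHz) {
  assert(cpuHz > 0);
  return static_cast<double>(opsPerSecond) / static_cast<double>(cpuHz);
}
```

Under these assumptions, a 100-tap FIR at 44.1 kHz needs 4.41 million multiply-accumulates per second, about 6% of a 72 MHz core, versus 220,500 multiplies for a single biquad stage, so a handful of FIRs adds up quickly.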

If you wanted to get fancy, you could put code in loop() to read the position of one or more pots (using analogRead), and use those numbers to adjust the gain on individual mixer channels, while it’s processing sound. Then you could adjust the knobs for different gain in each filter region. Maybe turn a specific filter gain up or down, for example, when you’re talking with your wife. :-)
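The knob idea boils down to mapping a raw ADC reading onto a gain. This sketch assumes a 10-bit reading (0 to 1023, the Arduino default range for analogRead) and a linear taper; `potToGain` and `knobMoved` are hypothetical helpers, not library functions. The deadband check avoids updating the gain on every bit of ADC noise.

```cpp
#include <cassert>
#include <cstdint>

// Map a raw ADC reading (0..maxRaw) linearly onto a gain in [0, maxGain].
float potToGain(uint16_t raw, uint16_t maxRaw = 1023, float maxGain = 1.0f) {
  assert(maxRaw > 0);
  if (raw > maxRaw) raw = maxRaw;
  return maxGain * static_cast<float>(raw) / static_cast<float>(maxRaw);
}

// Report a change only when the reading moves by at least `threshold`
// counts, so small ADC jitter doesn't retrigger gain updates.
bool knobMoved(uint16_t previous, uint16_t current, uint16_t threshold = 4) {
  int diff = static_cast<int>(previous) - static_cast<int>(current);
  if (diff < 0) diff = -diff;
  return diff >= threshold;
}
```

In loop(), one might read a pot with analogRead() on a pin of your choosing, and when `knobMoved` reports a change, pass `potToGain(raw)` to mixer1.gain() for the channel carrying that filter. Passing a smaller `maxGain` keeps the total mixer gain at or below 1.0.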

Thanks for the reply. This is definitely something I want to explore now.

are there any tutorials/howtos for this library/gui tool combo?

I would love to see something from blank canvas to finished effect, like ‘your first fm radio receiver in Gnuradio’ kind of thing.

A couple videos are in the works…..

There’s also some preliminary plans to expand the generated code to include an example setup() and loop(), where many of the objects will put at least *something*.

But right now, this is a 1.0 release. It’s been in active development for over a year, with a lot of progress, and finally it’s settled to a stable API. Like a lot of 1.0 software, it’s pretty good, but much more will come with future releases.

Thank you a lot, Paul, for your Teensy Audio Library. It allowed me to build my microSynth http://www.hackster.io/user3375/microsynth

There’s a MP3/AAC library which decodes in software: https://github.com/FrankBoesing/Arduino-Teensy-Codec-lib. (Still beta)

Why isn’t this board in the HaD store anymore?