Tektronix must have been quite a place to work back in the 1980s. The company offered a bewildering selection of test equipment, and while the digital age was creeping in, much of their gear was still firmly rooted in the analog world. And some of the engineering tricks the Tek wizards pulled off are still the stuff of legend.

One such gem of analog design was the SG505, an ultra-low-distortion oscillator module that [Paul] is trying to replicate with modern parts. That’s a tall order since not only did the original specs on this oscillator call for less than 0.0008% total harmonic distortion over a frequency range of 20 Hz to 20 kHz, but a lot of the components it used are no longer manufactured. Tek also tended to use a lot of custom parts, especially mechanical ones like the barrel switch used to select attenuation levels in the SG505, leaving [Paul] no choice but to engineer his way around them.
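To put that distortion figure in perspective, here's a quick Python sketch that converts a THD percentage into decibels relative to the fundamental. It assumes the common amplitude-ratio definition of THD (RMS sum of the harmonics divided by the fundamental). The function names are ours, purely for illustration, and the exact conditions behind Tek's spec aren't covered here.

```python
import math


def thd_percent_to_db(thd_percent: float) -> float:
    """Convert THD given as a percentage of the fundamental into dB.

    Assumes THD is an amplitude (voltage) ratio, so the conversion
    uses 20*log10 rather than 10*log10.
    """
    ratio = thd_percent / 100.0
    return 20.0 * math.log10(ratio)


def thd_percent_from_harmonics(fundamental_rms: float, harmonic_rms: list[float]) -> float:
    """Compute THD (%) from the fundamental and a list of harmonic RMS amplitudes.

    THD = sqrt(sum of squared harmonic amplitudes) / fundamental amplitude.
    """
    harmonic_total = math.sqrt(sum(h * h for h in harmonic_rms))
    return 100.0 * harmonic_total / fundamental_rms


# The 0.0008% figure quoted for the SG505, expressed in dB.
spec_db = thd_percent_to_db(0.0008)
print(f"0.0008% THD is about {spec_db:.1f} dB relative to the fundamental")
```

Run as written, this reports roughly -101.9 dB, meaning all the harmonics combined sit more than 100 dB below the fundamental. That goes some way toward explaining why part selection matters so much for a build like this.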

So far, [Paul] has managed to track down most of the critical components or source suitable substitutes. One major win was locating the original J-FET Tek used in the oscillator’s AGC circuit. One part that’s proven more elusive is the potentiometer that Tek used to adjust the frequency; who knew that finding a dual-gang precision wirewound 10k single-turn pot with no physical stop would be such a chore?

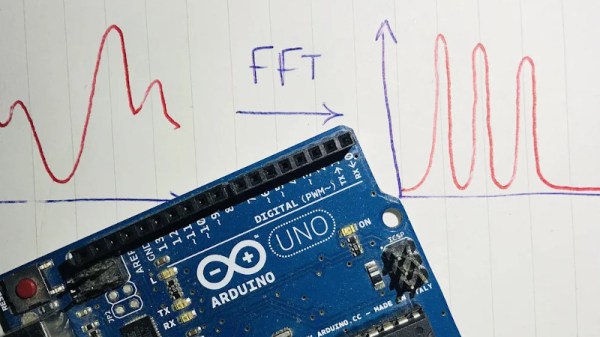

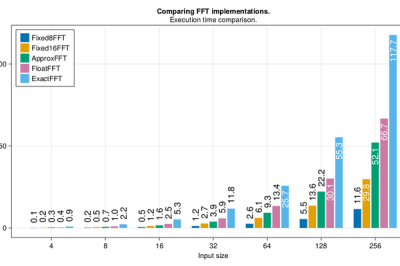

[Paul] still seems to be very much in the planning stages of this project, and that’s probably for the best, since projects like this live and die on proper planning. We’re keen to see how this develops, and we’re very much looking forward to seeing the FFT results. We imagine he’ll be busting out his custom curve tracer at some point in the build, too.