If you’ve used Linux since the early days (or, like me, started with Unix), you didn’t have to learn it all at once: as things became more complex, you could pick them up as you went. If you are only starting with Linux because you are using a Raspberry Pi, became unhappy with XP being orphaned, or are running a cloud server for your latest Skynet-like IoT project, it can be daunting to pick it all up in one place.

Recently my son asked me how to make something keep running on a Linux box even after you log off. I thought that was a pretty good question, and the answer isn’t necessarily simple, depending on what you want to accomplish.

There are really four different cases I could think of:

- You want to launch something you know will take a long time.

- You run something, realize it is going to take a long time, and want to log off without stopping it.

- You want to write a script or other kind of program that detaches itself and keeps running (known as a daemon).

- You want some program to run all the time, even if you didn’t log in after a reboot.

One of the things that makes Linux tough is that there are lots of options, so what works on one system may not work on another. If you can assume a single distribution, you have a better chance that things work the same way. To keep things manageable, I’m going to focus on the first two items and maybe catch up on the last two at a later date. I’m also going to assume we are talking about command-line programs. If you need to run graphical programs after you log out, that raises a lot of odd questions. It’s certainly possible, but it is strange, since your graphical user environment goes away when you log out (hint: use VNC or NX to create a persistent desktop).

I will, however, give you clues for the last two use cases. First, a program that detaches itself is a daemon. The steps to do that can be involved, or you can outsource it. Making a program run all the time can be simple or complicated. Most Linux distributions will have a file /etc/rc.local that runs as root on startup (at least, normal startup). You can add things there if you are just doing a one-off. Otherwise, you need to know if you are using System V init, Upstart, OpenRC, or systemd. There are probably some others to contend with as well. But that’s a topic for another day. If you can’t wait, try your Portuguese (or Google’s) on this IBM paper.

A Tale of Two Cases

Back to the first two cases, though. Suppose you are going to run a program remote_backup and you know you will launch it (perhaps using an ssh session) and then you’ll want to disconnect or make sure it doesn’t stop running if you accidentally get disconnected for some reason. Either way, just launching it from the command line means when your session exits, the program will stop. Or will it?

This can be as easy as running programs in a way that makes them immune to hangups. If you use bash and your options are set right, the answer can be very simple. If you start a program in the background with &, or suspend a running program (Control+Z) and move it to the background with the bg command, it might keep running even after you exit your session. This is another case of Linux’s flexibility (many ways to accomplish similar results) getting in the way.

If you run bash, you can see your “shell options” which include the “hangup on exit” settings. Try running this at a shell prompt:

shopt | grep huponexit

If you see that huponexit is set to off, then simply pushing a program into the background will let it survive your session disconnecting. Of course, if you try the same thing on another system, it might not work, and you’ll wonder why.
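If you would rather test the setting from a script or your ~/.bashrc than read through shopt’s listing, shopt -q reports through its exit status instead of printing. A minimal sketch: huponexit_state is a name made up for this example, and it reports the option for whichever shell runs it, so define it in your interactive shell rather than running it as a separate script.

```bash
# Report whether bash will send SIGHUP to its jobs when this shell exits.
# huponexit_state is a hypothetical helper name, not a bash builtin.
huponexit_state() {
  if shopt -q huponexit; then   # -q: print nothing, exit status 0 if the option is set
    echo on
  else
    echo off
  fi
}

huponexit_state
```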

If you know you are running bash with huponexit off, you can run your program in the background very simply by ending with a single ampersand:

remote_backup &

However, it is safer to explicitly prevent the program from dying when you log off, if only because with the method above you won’t see any output from the program once you’ve logged off. If you are thinking ahead, you can run the program with “no hangup”:

nohup remote_backup &

If you need arguments, put them after the program name as usual. Nohup will do a few things:

- Redirect stderr to stdout

- Redirect stdout to nohup.out in the current directory (or to ~/nohup.out if that file can’t be created there)

- Redirect stdin to an unreadable file

- Ignore the hangup (HUP) signal and run the program (the trailing & is what returns you to the shell prompt)

The end result is the program will run, can’t get any input, and will put any output into nohup.out. If there is any data already in nohup.out, the new data will go to the end.

These redirections happen only because nohup detects that each stream is connected to a terminal. If you’ve already redirected a stream yourself, nohup won’t disturb it. So you could say:

nohup remote_backup >/tmp/backupstatus.log &

or

echo y | nohup remote_backup >/tmp/backupstatus.log &

In that last line, stdin comes from the pipe, so nohup leaves it alone. Stdout goes to /tmp/backupstatus.log, and because stderr is still the terminal, nohup sends it to the same file.
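Here is a rough model of those rules written as a bash function. It is a sketch for illustration, not how coreutils actually implements nohup: nohup_like is a hypothetical name, it uses /dev/null as the “unreadable” input, and it skips nohup’s warning messages and exit codes. Because it finishes with exec, call it inside a subshell.

```bash
# Rough model of what nohup does before starting a command.
# Call it as ( nohup_like cmd args... ) because the final exec replaces the shell.
nohup_like() {
  trap '' HUP                   # ignore hangups; an ignored signal stays ignored across exec
  if [ -t 0 ]; then
    exec </dev/null             # stdin was a terminal: give the program nothing to read
  fi
  if [ -t 1 ]; then             # stdout was a terminal: append to nohup.out instead
    if [ -w . ]; then
      exec >>nohup.out
    else
      exec >>"$HOME/nohup.out"  # fall back to the home directory
    fi
  fi
  if [ -t 2 ]; then
    exec 2>&1                   # stderr was a terminal: send it wherever stdout goes now
  fi
  exec "$@"                     # replace this (sub)shell with the real program
}
```

With that in place, ( nohup_like remote_backup ) & should behave much like nohup remote_backup &, and echo y | ( nohup_like remote_backup ) >/tmp/backupstatus.log & mirrors the pipe example.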

Prove It!

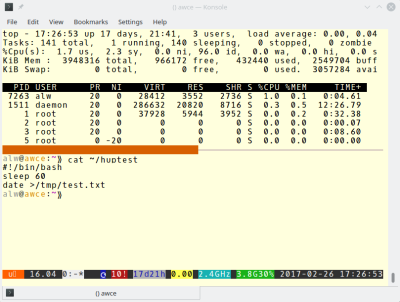

If you want to see the difference nohup makes, log into a Linux server with ssh and type the following at a shell prompt:

echo '#!/bin/bash' >~/huptest

echo sleep 60 >>~/huptest

echo 'date >/tmp/test.txt' >>~/huptest

chmod +x ~/huptest

~/huptest

Before the 60 seconds expire, press the tilde key (~), then a period. The tilde is the SSH escape character (when it is at the start of a line), and this pair drops the connection immediately. Press ~? to learn about the other things you can do with it. Now go have a cup of whatever you drink and come back well after a minute. Log in again. You won’t find a file at /tmp/test.txt (unless one was already there, but its contents should make that clear). Your session ending killed the waiting program.

Now try this:

~/huptest &

That tells the shell not to wait for the program to finish. If you are using bash, this will work as long as huponexit is set to off. You can experiment by using:

shopt -s huponexit

and

shopt -u huponexit

The first command will turn the flag on. The second one resets the flag to off.

Finally, you can run the same commands with nohup:

nohup ~/huptest &

Even nohup isn’t perfect. If the program you are running installs its own handler for the HUP signal, nohup won’t help you. However, most programs you are likely to worry about don’t catch that signal, and nohup will work regardless of the shell and its configuration.
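If you want the test itself to tell you what happened, rather than inferring it from a missing /tmp/test.txt, here is a variant of the script that logs the hangup. It is a sketch: ~/huptest2, /tmp/huptest.log, and the optional delay argument are illustrative choices. The sleep runs in the background followed by wait because bash won’t run a trap until a foreground command finishes.

```bash
# Write a variant of ~/huptest that records why it stopped.
# ~/huptest2, /tmp/huptest.log and the delay argument are illustrative names.
cat >~/huptest2 <<'EOF'
#!/bin/bash
log=/tmp/huptest.log
# Log the hangup, then quit with 129 (128 + SIGHUP's number, 1).
trap 'echo "$(date): got SIGHUP" >>"$log"; exit 129' HUP
sleep "${1:-60}" &    # background the sleep so the trap can run right away
wait "$!"
date >/tmp/test.txt
echo "$(date): finished normally" >>"$log"
EOF
chmod +x ~/huptest2
```

Run it the same three ways as before (plain, with &, and under nohup) and read /tmp/huptest.log afterward. Under nohup you should see only “finished normally”: bash can’t trap a signal that was already ignored when the script started, so the trap line has no effect. A compiled program that installs its own SIGHUP handler with signal() or sigaction() is not bound by that rule, which is why nohup can’t protect every program.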

There are many other ways you could do this. For example, investigate the at command, which runs things at a specified time (that time can be right now, with at now). The commands it runs use sh rather than bash (unless you do some extra work), but they run detached.

Lack of Planning

There are two cases where nohup doesn’t help, though. First, you may not have planned for this ahead of time: you start running something and then realize it is going to take a while, or that you suddenly have to leave. What do you do? Second, you might need to start a program, give it some manual input, and then let it run without you watching it.

You may know that you can suspend a running program by pressing Control+Z. Bash will tell you that it has created a job and give you a number for that job. You can then resume it in the background using bg. For example, if the job number is 3:

bg %3

If you have huponexit off, that’s all you need to do. But in the general case, you need to tell the shell to either stop sending HUP signals to it or to remove it from the job table completely. You can use disown (built into bash) to do both of these functions.

Using disown with no options removes the named jobs from the job table. If you don’t want to go that far, use the -h option to just keep the shell from sending HUP to that one job. You can also specify -a to affect all jobs or -r to affect only running jobs. This is a built-in command for bash (so see man bash to read more), which makes it shell dependent. Once you’ve disowned a background task, you can log off without fear.
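The difference between the two forms is easy to see with a throwaway job. In this sketch sleep 300 stands in for a long-running program like remote_backup, and the job table is printed after each step. Bash’s disown accepts a job spec such as %1 or, as here, a process ID.

```bash
#!/usr/bin/env bash
# Show what disown -h and plain disown do to bash's job table.
sleep 300 &                        # stand-in for a long-running program
pid=$!

echo "started:"; jobs -l           # the job is listed

disown -h "$pid"                   # keep the entry, but never send this job SIGHUP
echo "after disown -h:"; jobs -l   # still listed

disown "$pid"                      # drop the entry entirely
echo "after disown:"; jobs -l      # no longer listed

kill "$pid"                        # clean up the demo process
```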

Persistent Sessions

As usual with Linux, there is more than one way to go about any given task. You could use screen or tmux to provide a persistent session. This is similar to VNC: you can log back in and find whatever you were working on still there. If you do a lot of work at the command line, you might want to try byobu, which gives a nicer interface to screen or tmux (see right).

If you want to try that, log in as usual and run screen, tmux, or byobu. Execute the test script again (with or without the & at the end), then use the ~. trick to kill the ssh session. Log back in and reattach (screen -r, tmux attach, or simply run byobu again). You’ll see the screen just like you left it. That’s handy in general. Plus, you can build multiple windows easily, but that’s not germane to this post.
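If you do this regularly, a small helper can start a command straight into a detached session, so there is nothing to type inside it first. This is a sketch: start_persistent is a made-up name, and falling back to nohup when neither tmux nor screen is installed is an assumption about what you would want.

```bash
# Start a command inside a detached tmux (or screen) session named $1.
# start_persistent is a hypothetical helper, not part of tmux or screen.
start_persistent() {
  local name=$1; shift
  if command -v tmux >/dev/null 2>&1; then
    local cmd
    printf -v cmd '%q ' "$@"               # quote args so tmux's shell sees them intact
    tmux new-session -d -s "$name" "$cmd"  # -d: create the session detached
  elif command -v screen >/dev/null 2>&1; then
    screen -dmS "$name" "$@"               # -dm: start detached, -S: session name
  else
    echo "tmux/screen not found; falling back to nohup" >&2
    nohup "$@" >"$HOME/$name.log" 2>&1 &   # no session to reattach, just a log file
  fi
}

# Example, with the article's hypothetical program:
# start_persistent backup remote_backup
```

Reattach later with tmux attach -t backup or screen -r backup.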

Options

Part of the power of Linux is that you have plenty of options. Part of the problem with Linux is that you have plenty of options. That isn’t really a problem if you are configuring a single Raspberry Pi where you control everything: you make it work and that’s that. The real issue is when you try to deploy something to multiple boxes with different users and maybe even different distributions.

Of course, this isn’t the only thing that causes you trouble if you are going for portability. If you are trying to write scripts that can run on many different POSIX systems, you might read the GNU documentation for autoconf, which has a lot of good information about the problems and solutions.

There is more than one way to skin a cat (part of the reason I like OSes that follow the “Unix philosophy”). I happen to prefer setsid, which is worth taking a look at…

I didn’t know that was in shell. Thanks

Like anything, it is a mixed bag and I almost brought up setsid. The plus side is that it is better than just ignoring SIG_HUP. The downside is that if the program is already a process group leader it will fail, but nohup won’t.

Screen or Tmux would make your life a lot easier

and reptyr, if you forgot to run it inside screen or tmux

I like reptyr but you have to enable ptrace to get it to work these days. Speaking of redirecting to other windows, if you are really at the console, try: openvt.

…and reptyr, if you forgot to run it inside screen/tmux

Tmux was my first thought as well, you can also share that folder with a group of users so they can all access the run too.

Another couple of tricks to add to my sleeve! Thanks!

I’ve been running screen for a number of years (using it not literally running a screen session for years). I run long running tests that take up to months to complete. I love having the ability to connect and disconnect as needed while still running the programs in the screen sessions.

I haven’t used RDP but I wonder if that might help with X GUI sessions? I was using nxserver but ran into a problem where I can’t use it anymore (non-commercial use). I might look into that again.

If you don’t like nx (which is great, but not free) you might think about just tunneling VNC over ssh. Make the VNC server listen locally and then do an ssh tunnel to the VNC client machine and you are off. If you do it right, you can have a persistent session separate from your normal desktop.

What I have done in the past is have different users in the same group so that my desktop with 3 or 4 big monitors is one login and my VNC session is 1024×768 or some other sane layout. A lot of the window managers seem to not think hard about your screen resolution violently switching from huge to small and back.

NX used to be great, but not any more.

You USED to just be able to open up a terminal window with NX, and then launch X apps and have them mix freely with your Windows windows. That was back in version 3.something.

Now, NX seems to work just like VNC, in that you are forced to have a virtual desktop running something like Gnome or KDE. There is no way to have it run without a desktop or window manager (at least no way that I can find).

Too bad, as that was the best way that I have seen to run X programs remotely.

Can anybody recommend something that works like a real X server, but uses compression? Preferably something open-source, maintained, and still reasonably easy to install and use for mere mortals? VNC is out, because using a virtual desktop just sucks when you have multiple monitors.

have you looked at SSH X11 tunneling?…simply SSH into the RPi with the -X flag…i.e. ssh -X pi@192.168.0.34 . You’ll need to have at least xserver installed on your RPi. This just works if your PC runs Linux. If your PC is running windows, you’ll need to install XMing and maybe Putty https://sourceforge.net/projects/xming/

For local work, the best thing that I have found is MobaXterm — think putty and an X server all rolled into one, along with VNC, RDP, FTP, Telnet, and a few others thrown in. Truly an awesome program.

However, a lot of the problem with X is, from what I can tell, not directly bandwidth, but more one of latency. There seems to be a lot of “back and forth” communication where one action can require multiple round trips of data. X compression will not help with this at all.

I remember years ago trying to run a Mentor Graphics tool (one of the ones used for chip design) over a VPN connection at home. Bandwidth was not an issue. Using just X, the remote connection was unusable. With the NoMachine NX platform, it worked like a dream.

I know that “Exceed on Demand” does something similar and works very well, but that is a completely proprietary solution, and reserved for companies with deep pockets.

If you liked NX 3.x then you should try X2Go, which is essentially the NX 3.x libraries under the hood. All of it is actively maintained, so you should find a relatively fresh version in your package manager. They have pretty good clients for Windows and OSX as well. It’s served me well, and the fact that it’s FOSS is great!

http://wiki.x2go.org

Try xpra. Learnt about this via firejail, another useful tool.

xpra is nice. Just don’t install the repo version. Get it off the xpra.org site. Very cool, I didn’t know that one.

xrdp basically sets up an Xvnc instance and presents that over RDP. Handy if you’re setting up a Linux machine that is going to be accessed by a lot of Windows users, as it means they already have the client access software needed.

How does the “nohup echo y | remote_backup >tmp/backupstatus.log &” command work? I haven’t tested it, but it seems like it shouldn’t to me.

First nohup executes “echo y”, the shell gets the output and redirects it to a pipe. Then the shell executes remote_backup, feeds the pipe as its stdin and redirects the output to tmp/backupstatus.log. Not entirely sure how the final ampersand is processed, I guess it applies to the whole line (both nohup and remote_backup run detached).

If that is correct then remote_backup should be terminated when the session disconnects because it was the shell that ran it, not nohup.

Did I get this wrong?

No, some quotes got eaten somewhere…. let me look

I see you changed it to “nohup bash -c ‘echo y | remote_backup >tmp/backupstatus.log’ &”, but it still has a couple of problems.

First it will still create a file nohup.out because it is running bash and not redirecting its output. The file “tmp/backupstatus.log” will contain the stdout of remote_backup but it won’t contain stderr, that will go into nohup.out.

That is because nohup sees bash is connected to a terminal and redirects all output of bash to nohup.out and all input to /dev/null, but the command that bash executes (echo y | remote_backup >tmp/backupstatus.log) redirects stdin to a pipe and stdout to a file, but not stderr.

I think the correct command would be “echo y | nohup remote_backup >tmp/backupstatus.log”

I believe in Ubuntu distros (maybe also Debian?) you need to uncomment the “EscapeChar ~” line in /etc/ssh/ssh_config in order to use that functionality, no?

Correct, for Debian derived distros.

~ is the default for OpenSSH (see man page for ssh_config) and provided as example. sshd_config even says commented lines are there to show the defaults (great way to write configuration files), ssh_config doesn’t mention it but seems to use the same approach. If you need to uncomment, Ubuntu is doing something different (Debian seems to use the OpenSSH policy).

https://hackaday.com/wp-content/uploads/2017/02/tuxwiz.png?w=192&h=250

… What, the actual FUCK, is that?!

you’re right, it should have a neckbeard instead of a wizard-beard

I don’t like leaving potential errors unhandled. If you nohup a program there’s a lot that can go wrong. Maybe it’s waiting on an input? Maybe you run two things so the nohup output is overwritten.

Either way if something goes wrong when I get back to my sessions I like the idea of being able to take back control easily.

So another vote for screen for me. The whole multi-tasking thing is a bonus as is the ability to take over a session from another machine.

Nice to see htop in the article photo :)

If you are going to treat a Linux box like an old-school mainframe, the “at” command can be useful too, with its output sent via local mail. See man at for all of the details that make at and its brethren powerful.

Nowadays there are systemd timers.

I’ll just leave this here, https://devuan.org/

A bit of trivia, the ~ escape characters were in UUCP’s cu.

If you want to send ~ in SSH or cu, you double it, so to disconnect a nested session, type ~~. In cu, I find it wise to wait a bit before typing the last dot.

From a security standpoint, giving the program its own session is a good idea (with its own credentials and permissions to only what it needs), especially if it’s something that needs to run constantly like MySQL or Apache. Being able to log in when it borks up and have its own logs is nice too.

See also: setting up another user session is easy, from a raw beginners view.

If you’re running systemd which all recent distros run then the nohup or other mentioned methods won’t work if user logs out since logind kills the users processes on logout by default. The default behavior can be changed in logind configuration.

Well, maybe. It depends on how systemd is configured and that depends on the packager. The kill all user processes flag used to be off. Then at some point it was turned on by default. This broke tmux, screen, nohup, mosh, etc. They reverted it last year in Debian: https://bugs.debian.org/cgi-bin/bugreport.cgi?bug=825394

The unfortunate part is that systemd has become a religious war so everyone gets bent out for or against and quits listening to stuff.

Change for the sake of change is meaningless.

Most Systemd installs still rely on the old scripts when you dig into it.

Also, I didn’t like the fact Lenny leaves some firewalls disabled (read: completely open) until fully booted. The systemd script templates usually end up with a dozen manually patched overrides to fix stuff that was considered stable for over 10 years.

Attack surface is actually worse, but the process manager is easier to configure if there are no errors you need to dig for in your log files.

I can see how both groups have valid points, and debian should have never bundled it so soon given it is still beta quality 2 years later.

I may be wrong (and at the risk of offending the faithful), but my perception is that everyone flocked to systemd because it could reduce boot times, and that’s one of those stats that journalists (well, not HaD journalists) fixate on because on Windows rebooting is a common event. When you reboot your box every six or eight months, that extra 8 seconds on the boot process isn’t really a big deal.

I’m sure someone will chime in to tell me that isn’t the reason, and if they have a reasoned argument, I’d buy it. But that’s the only thing I could parse out of it other than “frill features” like connecting services to sockets, which is nothing new. Just drawing things into one big giant monster service.

I would suggest cron ;)

Agreed: cron and disown would be the most common ways of approaching this. Disown is a bash, ksh and zsh built-in and takes care of the SIGHUP handling, for example. Note that at would rely on the cron daemon running.

If you are not always connected, try http://anacron.sourceforge.net/

Little programs are often left out. dtach is the little screen or tmux, great for keeping a single program running after you log off. Great tool. I use it to run rtorrent or axel, although both can be daemonized. But learn tmux too, that’s a full-featured toolset.

Just an FYI, it looks like the IBM developerWorks forum is decommissioned, so the “IBM paper” link now sends you to an IBM search page.