[Ramin Hasani] and colleague [Mathias Lechner] have been working with a new type of artificial neural network called the liquid neural network, and presented some of the exciting results at a recent TEDxMIT event.

Liquid neural networks are inspired by biological neurons and implement algorithms that remain adaptable even after training. [Hasani] demonstrates a machine vision system that uses a liquid neural network to steer a car for lane keeping. The system performs quite well using only 19 neurons, far fewer than the very large models we’ve come to expect from such systems. Furthermore, an attention map suggests that the system attends to particular aspects of the visual field in much the same way a human driver does.

A liquid neural network can implement synaptic weights using nonlinear probabilities instead of simple scalar values. The synaptic connections and response times can adapt based on sensory inputs, letting the network react more flexibly to perturbations in the natural environment.
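To make the idea of an input-dependent response time more concrete, here is a minimal sketch of a single neuron, loosely following the liquid time-constant formulation published by Hasani and colleagues. It is not code from the talk: the function names, the weights (`w_in`, `w_rec`, `bias`), and the simple Euler integration are all assumptions chosen for illustration.

```python
import math


def sigmoid(z):
    """Smooth nonlinearity used here as the synaptic gate."""
    return 1.0 / (1.0 + math.exp(-z))


def gate(x, inp, w_in=2.0, w_rec=0.5, bias=-1.0):
    """Nonlinear synaptic activation; the weights are made-up illustrative values."""
    return sigmoid(w_in * inp + w_rec * x + bias)


def ltc_step(x, inp, dt, tau=1.0, A=1.0):
    """One explicit Euler step of dx/dt = -(1/tau + f) * x + f * A."""
    f = gate(x, inp)
    dxdt = -(1.0 / tau + f) * x + f * A
    return x + dt * dxdt


def effective_time_constant(x, inp, tau=1.0):
    """The leak rate is (1/tau + f), so the response time shrinks as the gate opens."""
    return tau / (1.0 + tau * gate(x, inp))


def simulate(inputs, dt=0.05, x0=0.0):
    """Run the neuron over a sequence of input samples and return its state trace."""
    x = x0
    trace = []
    for inp in inputs:
        x = ltc_step(x, inp, dt)
        trace.append(x)
    return trace


if __name__ == "__main__":
    trace = simulate([1.0] * 200)
    print("final state:", round(trace[-1], 3))
    print("time constant, weak input:", round(effective_time_constant(0.0, -5.0), 3))
    print("time constant, strong input:", round(effective_time_constant(0.0, 5.0), 3))
```

The point of the sketch is that the same gate term `f` appears in both the drive and the leak, so a strong input shortens the neuron's effective time constant while a weak one leaves it close to `tau`.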

We should probably expect to see the operational gap between biological neural networks and artificial neural networks continue to close and blur. We’ve previously covered wetware examples of neural networks built with actual neurons, as well as ever-advancing brain-computer interfaces.

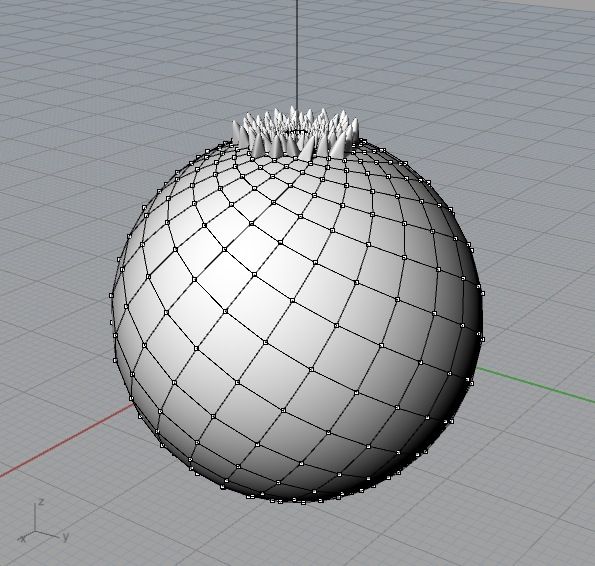

[John] modeled several 3D sculptures in Rhino with geometric properties similar to those found in pinecones and palm tree fronds. As the segments grow from those objects in nature, they do so at intervals of approximately 137.5 degrees. This spacing produces a particular spiral appearance which [John] was aiming to recreate. To do so, he used a Python script which calculated a web of quads stretched over the surface of a sphere. From each of the divisions, stalk-like protrusions extend from the top center outward. Once these figures were 3D printed, they were mounted one at a time to the center of a spinning base and set to rotate at 550 RPM. A camera then filmed each shape in motion with a 1/2000 sec shutter speed, capturing stills of the object in just the right set of positions to produce the illusion that the tendrils are blooming from the top and pouring down the sides. The same effect could also be achieved with a strobe light instead of a camera.
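The numbers above can be checked with a little arithmetic. The sketch below is not [John]'s script; the function names are hypothetical, and it assumes the goal is for the sculpture to turn by one 137.5 degree step between captures, and that the 1/2000 sec figure is the exposure time of each frame. It also shows one common way to place points on a sphere one golden angle apart, which may or may not match how the original quad mesh was built.

```python
import math

GOLDEN_RATIO = (1 + math.sqrt(5)) / 2
# The golden angle, roughly 137.5 degrees, is the spacing seen in pinecones.
GOLDEN_ANGLE_DEG = 360.0 * (1 - 1 / GOLDEN_RATIO)


def degrees_per_second(rpm):
    """Angular speed of the spinning base in degrees per second."""
    return rpm * 360.0 / 60.0


def captures_per_second(rpm, step_deg):
    """Frames (or strobe flashes) per second so the object turns step_deg between captures."""
    return degrees_per_second(rpm) / step_deg


def blur_degrees(rpm, exposure_s):
    """How far the object rotates while the shutter is open."""
    return degrees_per_second(rpm) * exposure_s


def phyllotaxis_points(n, radius=1.0):
    """Place n points on a sphere, each rotated one golden angle from the previous one."""
    points = []
    for i in range(n):
        z = 1 - (2 * i + 1) / n           # step from near the top to near the bottom
        r = math.sqrt(1 - z * z)          # radius of the horizontal circle at height z
        theta = math.radians(GOLDEN_ANGLE_DEG) * i
        points.append((radius * r * math.cos(theta),
                       radius * r * math.sin(theta),
                       radius * z))
    return points


if __name__ == "__main__":
    print("golden angle (deg):", GOLDEN_ANGLE_DEG)
    print("captures per second at 550 RPM:", captures_per_second(550, 137.5))
    print("rotation during a 1/2000 s exposure (deg):", blur_degrees(550, 1 / 2000))
    print("first three points:", phyllotaxis_points(100)[:3])
```

Under those assumptions, 550 RPM is 3,300 degrees per second, which divides into 137.5 degree steps 24 times per second, the standard film frame rate. A short exposure keeps each still sharp, since the sculpture turns less than two degrees while the shutter is open.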
