[Hamster] wanted to take a look at division operations when the chip you’re using doesn’t have a divide instruction. He makes the point that the divide instruction takes a lot of space on the die, and that’s why it’s sometimes excluded from a chip’s instruction set. For instance, he tells us the ARM processor used on the Raspberry Pi doesn’t have a divide instruction.

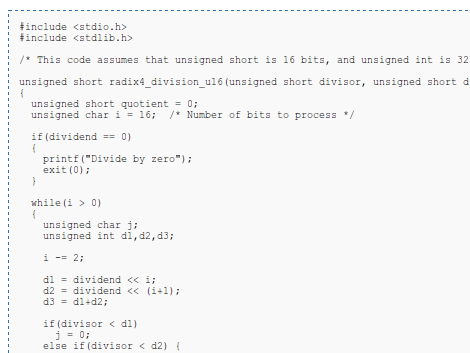

Without hardware division you’re left to implement a binary division algorithm. Eventually [Hamster] plans to do this in an FPGA, but started researching the project by comparing division algorithms in C on an AMD processor.

His test covers every combination of 16-bit dividend and divisor. He was shocked to find that binary division doesn’t take much longer than the hardware instruction on the same tests. After a bit of poking around in his code, he manages to beat the AMD hardware divide instruction by 175%. When testing on an Intel chip, the hardware beats his code by about 62%.
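For anyone who wants to try this at home, here is a minimal sketch of a textbook restoring (shift-and-subtract) divider for 16-bit unsigned operands. It is not necessarily [Hamster]’s code; the name `soft_div16` and the optional remainder pointer are our own assumptions.

```c
#include <stdint.h>

/* Textbook restoring (shift-and-subtract) division for 16-bit unsigned
 * operands. Always runs exactly 16 iterations, one quotient bit per step.
 * If rem is non-null, the remainder is stored there. */
uint16_t soft_div16(uint16_t n, uint16_t d, uint16_t *rem)
{
    uint32_t r = 0;   /* partial remainder; never needs more than 17 bits */
    uint16_t q = 0;

    for (int i = 15; i >= 0; i--) {
        r = (r << 1) | ((n >> i) & 1u);  /* bring down the next dividend bit */
        q <<= 1;
        if (r >= d) {                    /* divisor fits: subtract, set the bit */
            r -= d;
            q |= 1u;
        }
    }
    if (rem)
        *rem = (uint16_t)r;
    return q;
}
```

Because the loop count never depends on the operands, the latency is fixed. With a divisor of zero every trial subtraction succeeds, so the quotient comes out as 0xFFFF and the remainder equals the dividend. Reproducing the exhaustive test means looping over all 65536 × 65536 operand pairs and comparing against `n / d` (skipping d = 0).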

He’s got some theories on why he’s seeing these performance differences, which we’ll let you check out on your own.

Does his algorithm satisfy IEEE floating point spec?

No, integer only :-).

But the algorithm can divide by zero: it returns a value as close to positive infinity as possible (0xFFFF).

This may or may not be what you want :-)

Perhaps it’s worth adding that quite a few DSP processors feature a reciprocal seed instruction which, together with a division iteration instruction, can perform integer divisions even if the data path itself doesn’t contain a division unit.
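In software, the same idea looks roughly like this: normalize the divisor, take a cheap reciprocal estimate (the "seed"), refine it with Newton-Raphson iterations x = x(2 − Dx), multiply, and then correct the last bit or two. This only mimics what such DSP instructions do; the linear seed 48/17 − 32/17·D, the Q16 format, the iteration count and the name `recip_div16` are our assumptions, not any particular DSP’s behavior.

```c
#include <stdint.h>

/* Hypothetical sketch: unsigned 16-bit division using only shifts,
 * multiplies and adds, imitating a "reciprocal seed + iterate" scheme. */
uint16_t recip_div16(uint16_t n, uint16_t d)
{
    if (d == 0)
        return 0xFFFF;                 /* arbitrary choice for divide-by-zero */

    /* Normalize: shift d left until dn/65536 (call it D) lies in [0.5, 1). */
    uint32_t dn = d;
    int s = 0;
    while (dn < 0x8000u) {
        dn <<= 1;
        s++;
    }

    /* Seed x0 = 48/17 - 32/17 * D in Q16 fixed point (65536 == 1.0).
     * Its relative error is at most 1/17. */
    uint64_t x = 185043u - ((123362u * (uint64_t)dn) >> 16);

    /* Three Newton-Raphson steps: x = x * (2 - D * x). */
    for (int i = 0; i < 3; i++) {
        uint64_t dx = (dn * x) >> 16;        /* D * x in Q16, close to 1.0 */
        x = (x * (131072u - dx)) >> 16;      /* 131072 == 2.0 in Q16 */
    }

    /* n / d = n * 2^s / dn, and x approximates 2^32 / dn. */
    uint64_t q = ((uint64_t)n * x) >> (32 - s);

    /* The reciprocal is only approximate: nudge q to the exact quotient. */
    while (q * d > n)
        q--;
    while ((q + 1) * d <= n)
        q++;
    return (uint16_t)q;
}
```

The final correction loops make the result exact even though the reciprocal is truncated; a hardware seed instruction may instead take its starting value from a small lookup table.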

“This code assumes that unsigned short is 16 bits…”

This can be solved by including stdint.h!

Variables are defined far more explicitly:

uint8_t — unsigned 8-bit integer

int32_t — signed 32-bit int

size_t — the processor’s word size

It amazes me that this isn’t used as widely as it should be in embedded projects.

[edit]

size_t is used for representing array sizes, not necessarily the word size. My bad.
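As a small illustration, here is a hypothetical helper written with the fixed-width types: `uint16_t` is exactly 16 bits wherever it is provided (unlike `unsigned short`), `uint32_t` gives the accumulator guaranteed headroom, and `size_t` is used for the element count. The name `mean_u16` is ours.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: average of `count` 16-bit samples.
 * uint16_t is exactly 16 bits; unsigned short is only guaranteed to be
 * at least 16 bits. The uint32_t accumulator cannot overflow for up to
 * 65537 samples (65537 * 65535 == UINT32_MAX). */
uint16_t mean_u16(const uint16_t *samples, size_t count)
{
    uint32_t sum = 0;

    if (count == 0)
        return 0;                          /* avoid dividing by zero */
    for (size_t i = 0; i < count; i++)     /* size_t: the type for array sizes */
        sum += samples[i];
    return (uint16_t)(sum / count);
}
```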

This! Combined with

They are standard. Use them. Don’t define your own types, that’s just silly.

in my day we wrote division and multiplication subs in assembler grumble grumble

So perhaps AMD cheated and used crappy microcode, too?

Great article!

I have noticed on an older 32-bit AMD processor that 16-bit arithmetic was surprisingly much slower than 32-bit. I didn’t look into the cause of it, just changed datatypes and moved on. But it was a good lesson, and I’ve found similar cases on other processors since then.

On an x86_64 AMD CPU running in long mode, 32-bit arithmetic is slower too! Try comparing sinf() and sin().

Nope!

The default operand size in AMD64 is 32 bits (except for addressing and stack accesses), and I assure you processors are optimized for the default :)

16-bit operations are often slower on modern x86 processors. Multiplication and division algorithms are optimized for the common case, and using the 8- or 16-bit variants requires setup and fixup code.

Isn’t it pretty unfair to the hardware that his testbench runs divisions on the indexes of a simple nested loop? Of course turning on optimizations is going to dramatically increase the speed of his code relative to the hardware: he’s able to optimize the function along with the testbench, so things like unrolling and constant folding speed up his code significantly, whereas the hardware divide gets relatively little speedup. A fairer test would be to turn on optimizations only for his function and then link the optimized version into the testbench, or to run the calculations on random numbers. As it is, you are specifically optimizing for the data set.
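One way to follow that suggestion is sketched below: draw the operands from a pseudo-random generator so nothing can be folded at compile time, call the divider through a function pointer, and fold every quotient into a checksum so the work cannot be optimized away. This is an assumption-laden sketch, not [Hamster]’s testbench: `bench_div16`, `hw_div16` and the xorshift32 generator are our own choices, and a function pointer only makes inlining harder, it does not forbid it.

```c
#include <stdint.h>
#include <time.h>

typedef uint16_t (*div16_fn)(uint16_t, uint16_t);

/* Reference divider: lets the compiler emit its usual divide (DIV on x86). */
uint16_t hw_div16(uint16_t n, uint16_t d)
{
    return d ? (uint16_t)(n / d) : 0xFFFF;
}

/* Marsaglia xorshift32 generator; the state must never be zero. */
static uint32_t xorshift32(uint32_t *state)
{
    uint32_t x = *state;
    x ^= x << 13;
    x ^= x >> 17;
    x ^= x << 5;
    return *state = x;
}

/* Divide `count` pseudo-random operand pairs with `div` and return a
 * checksum of the quotients. If seconds is non-null, the elapsed CPU time
 * (including the generator's overhead) is stored there. */
uint32_t bench_div16(div16_fn div, uint32_t seed, uint32_t count, double *seconds)
{
    uint32_t state = seed ? seed : 1u;
    uint32_t checksum = 0;
    clock_t start = clock();

    for (uint32_t i = 0; i < count; i++) {
        uint32_t r = xorshift32(&state);
        uint16_t n = (uint16_t)(r >> 16);
        uint16_t d = (uint16_t)r;
        checksum = checksum * 31u + div(n, d);
    }
    if (seconds)
        *seconds = (double)(clock() - start) / CLOCKS_PER_SEC;
    return checksum;
}
```

Running it once with `hw_div16` and once with a software divider, using the same seed, should give matching checksums; the timings can then be compared, since both include the same generator overhead.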

Back in the days of core memory, I was assigned a task to fix a bug in a FORTRAN compiler. While digging in the assembler source code for that compiler, I discovered that it used a divide routine that COUNTED how many times it could subtract the divisor. It also had a square-root routine that counted how many times it could subtract sequential odd numbers (1,3,5,7,9,…) [ try it — it works!].
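Both "brain-dead" routines are easy to reconstruct. The sketch below is our own minimal version with hypothetical function names, not the compiler’s original assembler.

```c
#include <stdint.h>

/* Division by repeated subtraction: count how many times d fits into n.
 * The loop runs n / d times, which is why it is so slow. */
uint32_t div_by_subtraction(uint32_t n, uint32_t d, uint32_t *rem)
{
    uint32_t q = 0;

    if (d == 0) {                /* would otherwise loop forever */
        if (rem)
            *rem = n;
        return UINT32_MAX;
    }
    while (n >= d) {
        n -= d;
        q++;
    }
    if (rem)
        *rem = n;
    return q;
}

/* Integer square root by subtracting the odd numbers 1, 3, 5, ...
 * Since 1 + 3 + ... + (2k - 1) = k^2, the number of successful
 * subtractions is floor(sqrt(n)). */
uint32_t isqrt_odd(uint32_t n)
{
    uint32_t odd = 1, k = 0;

    while (n >= odd) {
        n -= odd;
        odd += 2;
        k++;
    }
    return k;
}
```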

Needless to say, while in that code, I replaced many of the “brain-dead” algorithms with “standard” versions that used some shift instructions…
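For the square root, one standard shift-based replacement is the binary digit-by-digit method, which settles one result bit per iteration instead of doing one subtraction per unit of the answer. This is a common textbook version, not necessarily what the commenter put into that compiler; `isqrt_shift` is a hypothetical name.

```c
#include <stdint.h>

/* Binary digit-by-digit integer square root: returns floor(sqrt(n)).
 * `bit` walks down through the even powers of two (4^k). */
uint32_t isqrt_shift(uint32_t n)
{
    uint32_t res = 0;
    uint32_t bit = 1u << 30;     /* highest power of four that fits in 32 bits */

    while (bit > n)              /* start at the highest 4^k <= n */
        bit >>= 2;
    while (bit != 0) {
        if (n >= res + bit) {    /* this result bit can be set */
            n -= res + bit;
            res = (res >> 1) + bit;
        } else {
            res >>= 1;
        }
        bit >>= 2;
    }
    return res;
}
```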

That’s pretty cool, I like that kind of math. For most real-world micros without a hardware divide function, though, won’t the C stdlib already provide a software division implementation? I’ve certainly written AVR C code that uses division, and I didn’t have to write any division algorithms.

Yes, it does, provided you built your toolchain properly.

Back in the day, most programming books showed you how to do efficient arithmetic.

Atmel have an application note and library for their 8-bit MCUs which can be used for division quite easily.

Link: (PDF warning)

http://www.atmel.com/dyn/resources/prod_documents/doc0936.pdf

Hi, when you use gcc for micros without a hardware divide, the compiler calls a routine that is usually in libgcc. The standard libgcc divide routine is pretty good: it checks the size of the divisor, and that limits the number of loops.
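The idea of letting the divisor’s size limit the loop count can be sketched as follows: shift the divisor up until it lines up with the dividend, then run only as many shift-and-subtract steps as that alignment requires. This illustrates the principle and is not libgcc’s actual code; `udiv32_aligned` and the all-ones result for a zero divisor are our assumptions.

```c
#include <stdint.h>

/* Shift-and-subtract division whose iteration count depends on how far the
 * divisor must be shifted to line up with the dividend. */
uint32_t udiv32_aligned(uint32_t n, uint32_t d, uint32_t *rem)
{
    uint32_t q = 0;
    int shift = 0;

    if (d == 0) {                          /* arbitrary convention */
        if (rem)
            *rem = n;
        return 0xFFFFFFFFu;
    }
    /* Align d under n without letting it overflow. */
    while (!(d & 0x80000000u) && (d << 1) <= n) {
        d <<= 1;
        shift++;
    }
    /* One quotient bit per alignment position: shift + 1 iterations. */
    for (; shift >= 0; shift--) {
        q <<= 1;
        if (n >= d) {
            n -= d;
            q |= 1u;
        }
        d >>= 1;
    }
    if (rem)
        *rem = n;
    return q;
}
```

The trade-off is variable latency: a small quotient finishes in a few iterations, which suits a CPU library routine but not a fixed-latency pipeline.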

I’m experimenting to eventually put the algorithm into an FPGA, and needed fixed latency so I can use it in a pipeline. I was just surprised how slow my laptop CPU is at divide.

I guess I answered my own question. As it is a CPU designed for low-power operation, the divide is most probably done in microcode, not hardware :-(

If you do a lot of division by constants: http://www.hackersdelight.org/divcMore.pdf
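As a quick taste of the technique, unsigned 16-bit division by 3 and by 10 can be replaced by a multiply and a shift. The constants below were computed for this sketch (ceil(2^17/3) = 0xAAAB and ceil(2^19/10) = 0xCCCD) rather than copied from the PDF, and the included brute-force check verifies them over every 16-bit input. The function names are hypothetical.

```c
#include <stdint.h>

/* Division by a constant as multiply + shift. For every 16-bit n the
 * rounding error of the magic number stays below one unit, so the
 * truncated result equals n / 3 (or n / 10) exactly. */
uint16_t div3_u16(uint16_t n)  { return (uint16_t)(((uint32_t)n * 0xAAABu) >> 17); }
uint16_t div10_u16(uint16_t n) { return (uint16_t)(((uint32_t)n * 0xCCCDu) >> 19); }

/* Exhaustive check against the / operator: returns 1 if every input matches. */
int check_magic_div(void)
{
    for (uint32_t n = 0; n <= UINT16_MAX; n++) {
        if ((uint32_t)div3_u16((uint16_t)n) != n / 3 ||
            (uint32_t)div10_u16((uint16_t)n) != n / 10)
            return 0;
    }
    return 1;
}
```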

Okay, if this thread is attracting “math heads” and “bit twiddlers”, perhaps these links are of interest:

The Aggregate Magic Algorithms:

http://aggregate.org/MAGIC/

Bit Twiddling Hacks:

http://graphics.stanford.edu/~seander/bithacks.html

And for the *really* hardcore CPU hackers, check out Agner Fog’s optimization manuals:

http://www.agner.org/optimize/#manuals

Nice links! I now know what I’m reading this weekend…

I keep copies of these web pages (converted to eBook format) on my Kindle!

On the Alpha architecture, there is an integer division opcode defined in the reference manual, but it was never implemented in any of the production hardware. Apparently DEC’s engineers found that they could get better performance by devoting more logic gates to the add/sub/multiply instructions and implementing the division operation in software.

There are several algorithms for implementing a division subroutine. The Linux kernel includes some fairly well optimized ones for architectures that don’t support integer division natively (like Alpha).

http://git.kernel.org/?p=linux/kernel/git/torvalds/linux.git;a=blob;f=arch/alpha/lib/divide.S;h=2d1a0484a99e009e3e198f47f77b06194b50006d;hb=HEAD

Jesus Christ, and people rant about Arduinos being overkill, but he’s a real man for implementing division on a chip?

I mean, it’s impressive, truly, but holy hell, I couldn’t get arsed doing the same thing.

Back in college we were implementing a DSP on a 4MHz 8088. Naturally, by the time you get a result from the ADC, do some computation on it, and output it to a DAC, it quickly becomes clear that you have precious few clock cycles for the actual computation, which includes a divide instruction.

DIV on that CPU takes 200 cycles, which was *far* too long. I went back to the basics of the design and reworked the math so I could divide by 2^n rather than some random decimal value. Dividing by 2^n is really a simple bit shift, and SHR on that CPU takes only 1 or 2 clock cycles! In the end I had CPU cycles to spare…
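The same trick in C: once the design is arranged so the divisor is a power of two, the division becomes a shift. The helper below is a hypothetical example that averages eight samples.

```c
#include <stdint.h>

/* Average of eight unsigned samples. Because the divisor is 2^3, the
 * division is a right shift by 3 instead of a DIV. */
uint16_t avg8_u16(const uint16_t s[8])
{
    uint32_t sum = 0;

    for (int i = 0; i < 8; i++)
        sum += s[i];
    return (uint16_t)(sum >> 3);   /* same as sum / 8 for unsigned values */
}
```

For unsigned values `x >> 3` and `x / 8` are identical. For negative signed values they are not: `/` truncates toward zero, while `>>` on a negative number is implementation-defined in C and is usually an arithmetic shift that rounds toward negative infinity.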

You can also use what I have been exposed to as “binary fractions.”

If I want to scale a 4-bit number (let’s say 8, 1000) by 1/2 (i.e. multiply it by 0.5), we can do this in the following way. Shift the 4-bit number some number of places to the left (I usually use 4 bits in this case) and you will have 8 bits (10000000, or 1000.0000).

Then convert your fraction to a “binary fraction”, which is easy: the weights continue the same way as in straight binary numbers (8 4 2 1 1/2 1/4 1/8 1/16). So in the case of 1/2, your multiplicand becomes 0000.1000. Then you multiply these two together and shift back to the right by 8 to get the appropriate answer (here 128 × 8 = 1024, and 1024 >> 8 = 4).
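The worked example above (8 × 0.5) can be written out in C with Q4.4 values (4 integer bits, 4 fractional bits). This is a minimal sketch assuming unsigned inputs in the range 0 to 15; `scale_q4_4` is a hypothetical name.

```c
#include <stdint.h>

/* Multiply a 4-bit unsigned integer (0..15) by a fraction in Q4.4 format,
 * e.g. 0x08 == 0000.1000 == 0.5. The integer is widened to Q4.4, the two
 * Q4.4 values are multiplied into a Q8.8 product, and shifting right by 8
 * keeps only the integer part (truncating). */
uint8_t scale_q4_4(uint8_t value, uint8_t frac_q4_4)
{
    uint16_t a = (uint16_t)(value << 4);              /* 8 -> 1000.0000 */
    uint16_t product = (uint16_t)(a * frac_q4_4);     /* Q8.8, at most 61200 */
    return (uint8_t)(product >> 8);
}
```

The final shift truncates, so scaling 5 by 0.5 gives 2 rather than 2.5.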

I know the Xilinx FPGAs from the Spartan-3 on can handle 18×18-bit multiplication and have a dedicated (and fast) module to handle it. If you have a static multiplicand, you are set and can do the conversions by hand. There is also an easier way of doing all of this: (1000 * 1000) >> 4 gets you the same result as ((1000 << 4) * 1000) >> 8. I have found in implementing this that you need to dump the result of the first multiplication into an appropriately sized wire. I used this to do enveloping and volume control for an FPGA piano (works like a charm).
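For volume control, the same multiply-then-shift pattern applies to wider samples. The sketch below assumes unsigned 16-bit samples and a Q8.8 gain (256 means 1.0). The 32-bit product plays the role of the appropriately sized wire, since a 16-bit product would overflow for most inputs. `apply_gain_q8_8` and the saturation for gains above 1.0 are our own additions.

```c
#include <stdint.h>

/* Apply a Q8.8 gain (256 == 1.0, 128 == 0.5) to an unsigned 16-bit sample.
 * The product is formed in 32 bits so it cannot overflow; results that
 * exceed 16 bits (gain > 1.0) are clamped to UINT16_MAX. */
uint16_t apply_gain_q8_8(uint16_t sample, uint16_t gain_q8_8)
{
    uint32_t product = (uint32_t)sample * gain_q8_8;  /* needs up to 32 bits */
    uint32_t scaled = product >> 8;                    /* drop 8 fractional bits */

    return scaled > UINT16_MAX ? UINT16_MAX : (uint16_t)scaled;
}
```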

(This procedure is for straight binary numbers, and I have not tested it for two’s complement, since I did not need two’s complement in the DSP project I was doing.)