[Gil] sent in an awesome paper from this year’s SIGGRAPH by [Hao-Yu Wu, et al.] at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and Quanta Research. It describes a way to detect subtle changes in a video feed. To get a feel for what this paper is about, check out the video and come back once you’ve picked your jaw up off the floor.

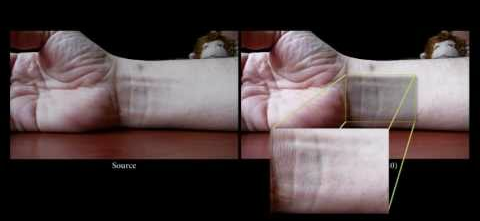

The project works by detecting and amplifying very small changes in color that occur across several frames of video. In the demo, the researchers were able to detect someone’s pulse from the minute changes in the color of their skin each time blood is pumped into their face.
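For a rough feel of how a pulse can hide in pixel colors, here is a minimal Python/NumPy sketch. It is *not* the paper’s method: as we understand it, the paper decomposes each frame spatially and band-pass filters every pixel over time to produce an amplified video. This sketch only averages the green channel over a patch of skin and picks the strongest frequency inside an assumed 45 to 180 bpm band. The function name `estimate_bpm`, the band limits, and the synthetic clip are all our own assumptions.

```python
import numpy as np

def estimate_bpm(frames, fps, low_hz=0.75, high_hz=3.0):
    """Estimate a pulse rate from RGB frames shaped (time, height, width, 3).

    Hypothetical helper: averages the green channel per frame, removes the
    mean, and returns the strongest frequency in an assumed heart-rate band.
    """
    green = frames[..., 1].reshape(frames.shape[0], -1).mean(axis=1)
    green = green - green.mean()
    spectrum = np.abs(np.fft.rfft(green))
    freqs = np.fft.rfftfreq(green.size, d=1.0 / fps)
    band = (freqs >= low_hz) & (freqs <= high_hz)
    peak_hz = freqs[band][np.argmax(spectrum[band])]
    return 60.0 * peak_hz  # Hz -> beats per minute

# Synthetic test clip: 10 s at 30 fps of a flat "skin" patch whose green value
# rises and falls by a fraction of a gray level at 1.2 Hz (72 bpm), plus noise.
fps = 30
t = np.arange(10 * fps) / fps
rng = np.random.default_rng(0)
base = np.full((t.size, 8, 8, 3), 120.0)
base[..., 1] += 0.3 * np.sin(2 * np.pi * 1.2 * t)[:, None, None]
clip = base + rng.normal(0.0, 1.0, base.shape)
print(round(estimate_bpm(clip, fps)))
```

The 0.3 gray-level swing is buried under per-pixel noise of about one gray level, yet averaging over the patch and looking in the frequency domain pulls it back out. Real footage adds lighting changes and motion, which is where the paper’s filtering earns its keep.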

A neat side effect of amplifying small changes in color is that it also reveals motion. In the video, there’s an example of detecting someone’s pulse by exaggerating the expansion of the artery in their wrist, and another showing how a shadow cast by the sun shifts over the course of 15 seconds. This is Batman-level tech, and we can’t wait to see an OpenCV library for this.
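Why does amplifying color changes also exaggerate motion? The first-order argument, as we read it, is that a tiny shift δ(t) of an image f(x) changes each pixel by roughly δ(t)·f′(x), so adding α times that temporal variation back into the video makes the content look as if it moved by about (1 + α)δ(t). The toy 1D sketch below checks this with a wobbling Gaussian blob. Subtracting each pixel’s temporal mean is a crude stand-in for a proper band-pass filter, and every parameter here is an assumption.

```python
import numpy as np

# Hypothetical 1D "video": a bright Gaussian blob that wobbles by a tiny delta(t).
x = np.linspace(-10.0, 10.0, 2001)               # pixel coordinates
t = np.arange(200) / 100.0                       # 2 s sampled at 100 fps
delta = 0.02 * np.sin(2 * np.pi * 1.0 * t)       # tiny 1 Hz motion (assumed)

def profile(shift):
    """Gaussian blob centred at `shift`."""
    return np.exp(-((x - shift) ** 2) / 2.0)

frames = np.stack([profile(d) for d in delta])   # shape (time, x)

# Crude temporal filter: remove each pixel's mean over time
# (a stand-in for a real band-pass filter).
filtered = frames - frames.mean(axis=0, keepdims=True)

alpha = 20.0                                     # amplification factor (assumed)
magnified = frames + alpha * filtered

def centroid(frame):
    """Intensity-weighted position of the blob in one frame."""
    return np.sum(x * frame) / np.sum(frame)

orig_motion = np.array([centroid(f) for f in frames])
new_motion = np.array([centroid(f) for f in magnified])
print(np.max(np.abs(orig_motion)), np.max(np.abs(new_motion)))
```

The blob’s apparent excursion grows by a factor of about 1 + α = 21. For large shifts or large α the first-order picture breaks down, which is why the technique is aimed at *subtle* motion.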

Even though the researchers have shown an extremely limited set of use cases (just pulses and breathing), we’re seeing a whole lot of potential applications. We’d love to see an open source version of this tech turned into a lie detector for the upcoming US presidential debates, and the motion exaggeration is perfect for showing why every sports referee is blind as a bat.

If you want to read the actual paper, here’s the PDF. As always, video after the break.

[Video on YouTube](http://www.youtube.com/watch?v=ONZcjs1Pjmk)

Absolutely Fascinating!

Something similar was simulated in the movie “The Recruit”.

Very cool, and slightly creepy, but my wife would love the one that clearly shows a baby is breathing.

Now, where’s the smartphone app? :)

Already found at least one such app:

http://itunes.apple.com/be/app/whats-my-heart-rate/id513840650

Huh, I wonder what the results would be if you applied these techniques to doctored videos, a bit like the programs that rate how much Photoshopping has been done to an image.

Next on the list, Voight-Kampff Empathy Test.

You’re in a desert, walking along in the sand, when all of a sudden you look down and see a tortoise. It’s crawling toward you. You reach down and you flip the tortoise over on its back. The tortoise lays on its back, its belly baking in the hot sun, beating its legs trying to turn itself over, but it can’t. Not without your help. But you’re not helping.

Bang bang!!!

Fail!!!

At last, I now have all the tools required to make my Vampire detector.

Holy 1984, Batman!

Thought-crime detector.

That really is jaw-dropping.

But I wonder how well it works with compressed video. Lossy compression works by preferentially dropping imperceptible details, which are exactly what this algorithm amplifies.

For example, would it work well enough with the average digital TV broadcast to tell if a politician’s heart rate goes up when answering a certain question, possibly indicating a lie? Makes me wish analog broadcasts were still around.

Uploading the video to YouTube alone would probably have made this unusable. A few times while watching the video I thought, “dude, the image on the left isn’t even moving.” And it really wasn’t: those frames had been compressed out, and my monitor was showing the same image again.
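A crude way to see the compression problem is to treat lossy coding as nothing more than coarse quantization of each pixel value. Real codecs quantize transform coefficients and use motion compensation, so this is only a cartoon, and the 0.8 gray-level pulse and the quantization step of 4 are assumed numbers. Still, it shows how a change smaller than the quantization step can vanish entirely.

```python
import numpy as np

# A pixel whose true brightness pulses by +/-0.8 gray levels around 100 (assumed).
t = np.arange(300) / 30.0                          # 10 s at 30 fps
true_signal = 100.0 + 0.8 * np.sin(2 * np.pi * 1.2 * t)

def quantize(signal, step):
    """Round to the nearest multiple of `step` (a crude stand-in for lossy coding)."""
    return np.round(signal / step) * step

fine = quantize(true_signal, 1.0)    # ordinary 8-bit integer rounding
coarse = quantize(true_signal, 4.0)  # aggressive quantization step (assumed)

# Peak-to-peak variation left in each version of the signal.
print(fine.max() - fine.min(), coarse.max() - coarse.min())
```

With plain integer rounding some of the pulse survives; with the coarser step every sample rounds to the same value and there is nothing left to amplify.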

I’d never have thought that a normal video has this much information hidden in it (heart rate, etc.).

Gotta love science.

“To keep your face expressionless was not difficult, and even your breathing could be controlled, with an effort: but you could not control the beating of your heart, and the telescreen was quite delicate enough to pick it up.”

Reality trumps fiction every day.

Quantitative imaging and lossy compression don’t mix. The compression could certainly be modeled and accounted for in a controlled environment, but in the application you describe, it’s futile.

Could the colour changes also be used to judge blood oxygenation?

I am sure it could be tuned that way. A pulse oximeter (the thing they clip on your finger) works by measuring how much light is absorbed at two wavelengths, red and infrared, where the two states of blood, oxygenated and deoxygenated, absorb differently. So in theory you could compare how strongly those colors change with each heartbeat and extrapolate a saturation percentage.
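The usual pulse-oximetry trick, sketched in Python under loud assumptions: compute the “ratio of ratios” R of the pulsatile (AC) to steady (DC) light at the red and infrared wavelengths, then map R to a saturation through a calibration curve. The linear curve `110 - 25 * R` used here is a rough approximation that shows up in many application notes and is purely illustrative; real devices are calibrated empirically. Ordinary RGB cameras also filter out infrared, so a camera-based version would need a different wavelength pair and its own calibration.

```python
import numpy as np

def ratio_of_ratios(red, infrared):
    """R = (AC_red / DC_red) / (AC_ir / DC_ir); AC = peak-to-peak, DC = mean."""
    red_ac = red.max() - red.min()
    ir_ac = infrared.max() - infrared.min()
    return (red_ac / red.mean()) / (ir_ac / infrared.mean())

def spo2_estimate(r):
    """Hypothetical linear calibration; real devices use empirically fitted curves."""
    return 110.0 - 25.0 * r

t = np.arange(1000) / 100.0                 # 10 s sampled at 100 Hz
pulse = np.sin(2 * np.pi * 1.2 * t)         # 72 bpm pulsatile component
red = 100.0 + 1.0 * pulse                   # synthetic red intensity (assumed)
infrared = 200.0 + 4.0 * pulse              # synthetic infrared intensity (assumed)

r = ratio_of_ratios(red, infrared)
print(round(r, 3), round(spo2_estimate(r), 1))
```

Taking a ratio of ratios cancels overall brightness, so the estimate depends only on how strongly each wavelength pulses relative to its own baseline.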

Damn. ’nuff said

The average digital broadcast certainly has too low a frame rate and resolution, and too much compression noise and loss, for this to be applicable.

On the other hand!

With the upcoming Hobbit being filmed at high resolution and 48 fps, we should be able to see which actors are most immersed in their roles!

Amazing stuff! Partly financed by DARPA. In the future, expect flying without beta blockers to be painful.

Pretty cool. I wonder if you could use this in astronomy to better notice or illustrate the wobble of stars caused by planets tugging on them, or other things like that.

Possibly, with insanely high gain. Most of that wobble is detected via Doppler shift, IIRC. So, yeah, perfect for this, because it’s a change in color.
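To put that “change in color” in perspective, here is a quick back-of-envelope calculation using the non-relativistic Doppler formula Δλ = λv/c. Green light at 550 nm and a reflex velocity of roughly 12.5 m/s (about what Jupiter induces in the Sun) are both illustrative assumptions. The result is a shift of a few hundred-thousandths of a nanometer, far too small for a camera’s RGB filters to register; radial-velocity searches measure it as a displacement of narrow spectral lines with a spectrograph instead.

```python
# Back-of-envelope Doppler shift for a star's reflex "wobble".
C = 299_792_458.0          # speed of light, m/s
wavelength_nm = 550.0      # green light (assumed example wavelength)
velocity = 12.5            # m/s, roughly the Sun's wobble due to Jupiter (approximate)

# Non-relativistic Doppler shift: delta_lambda = lambda * v / c
shift_nm = wavelength_nm * velocity / C
print(f"{shift_nm:.2e} nm")
```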

I’d love to see this run on the two photographic plates on which Pluto was discovered. It’s just a tiny dot moving a fraction of an inch between two exposures, and very, very hard to see even when it’s highlighted. This project could be awesome for that.
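Worth noting: with only two exposures, a temporal filter has almost nothing to work with. Clyde Tombaugh found Pluto by flipping rapidly between the two plates with a blink comparator, and the digital equivalent is a plain difference image: stars that stay put cancel out, and anything that moved leaves two spots. This is a different (and much older) technique than the paper’s. The minimal sketch below assumes the plates are already aligned and uses a synthetic star field; `difference_image` is a hypothetical helper name.

```python
import numpy as np

def difference_image(plate_a, plate_b):
    """Absolute difference of two aligned exposures: static stars cancel, movers remain."""
    return np.abs(plate_a.astype(float) - plate_b.astype(float))

rng = np.random.default_rng(1)
stars = (rng.random((64, 64)) > 0.98) * 200.0   # same synthetic star field on both plates
plate_a = stars.copy()
plate_b = stars.copy()
plate_a[20, 30] = 255.0                          # the moving object, first exposure
plate_b[20, 33] = 255.0                          # same object, a few pixels later

diff = difference_image(plate_a, plate_b)
print(np.argwhere(diff > 0).tolist())            # pixel coordinates that changed
```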

The more I think about this, the more uses it has.

Anyone have a guide to OpenCV programming?

Wow! That is some seriously impressive work.

Batman-level tech? More like Star Trek-level tech. Think of the convenience in an emergency room, where a setup like this could be pointed at a patient to get pulse rate and other vital signs without anything having to touch the person.

You could also monitor the waiting room to see if anyone has a sudden change in heart rate or blood pressure (blood pressure could probably be approximated by the *amount* of color change) and warn the doctors and nurses that someone is going into shock.

Courtroom reporters speculating on witness testimony, hospital waiting-room triage. They’ve really opened some doors with this one.

Dang… should have paid more attention in my signal processing class.

Am I the only one thinking poker?

The only problem I see is the limitation to small motions. As the technique currently stands, people have to be either asleep/unconscious or very, very still for it to monitor heart rate.

If only there were some simple technique to compensate for predictable movements. But even for one person walking, this could be very complex.

For example, take a person walking through the frame from left to right. Their average speed could be calculated and used to translate all the images to the same origin. But as people walk, their bodies move up and down in a roughly sinusoidal pattern, which would need to be compensated for as well. Also, as they shift from foot to foot, their body twists/waddles slightly from side to side. The only way I could think to compensate for this would be to capture their motion in 3D and correct for the rotation. And all that assumes they are not talking or breathing heavily.
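For the simplest part of that problem, a global up/down or left/right translation, a standard trick is phase correlation: the inverse FFT of the normalized cross-power spectrum of two frames peaks at their relative shift. Below is a minimal NumPy sketch that handles integer shifts only, assumes periodic wrap-around, and uses synthetic frames; the helper names are our own. It does nothing about the bobbing, twisting, or breathing described above, which would need proper tracking or a 3D model.

```python
import numpy as np

def estimate_shift(reference, moved):
    """Estimate the integer (dy, dx) translation of `moved` relative to `reference`."""
    cross = np.fft.fft2(moved) * np.conj(np.fft.fft2(reference))
    cross /= np.abs(cross) + 1e-12               # keep only the phase information
    corr = np.fft.ifft2(cross).real              # sharp peak at the relative shift
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    # Peaks past the halfway point correspond to negative shifts (FFT wrap-around).
    if dy > reference.shape[0] // 2:
        dy -= reference.shape[0]
    if dx > reference.shape[1] // 2:
        dx -= reference.shape[1]
    return int(dy), int(dx)

def stabilize(reference, moved):
    """Undo the estimated translation so `moved` lines up with `reference`."""
    dy, dx = estimate_shift(reference, moved)
    return np.roll(moved, shift=(-dy, -dx), axis=(0, 1))

rng = np.random.default_rng(2)
frame0 = rng.random((64, 64))
frame1 = np.roll(frame0, shift=(3, -5), axis=(0, 1))   # subject moved down 3, left 5
print(estimate_shift(frame0, frame1))
```

Once frames are registered like this, the per-pixel temporal filtering sees the same patch of skin in every frame, which is the precondition the original technique relies on.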

P.S. I do love the concept of heart rate monitoring from video.

Face, eye, and head-size tracking is common now, so it should be possible to estimate the movements from that.

Why only OpenCV? This should be doable with AviSynth, I think.