[Courtney] has been hard at work on OSkAR, an OpenCV-based speaking robot. OSkAR is [Courtney’s] capstone project (pdf link) at Shepherd University in West Virginia, USA. The goal is for OSkAR to be an assistive robot. OSkAR will navigate a typical home environment, reporting objects it finds through speech synthesis software.

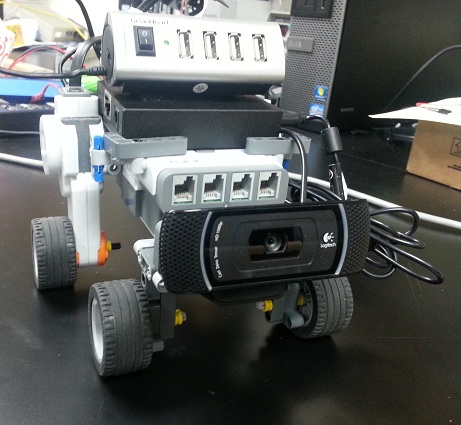

To accomplish this, [Courtney] started with a BeagleBone Black and a Logitech C920 webcam. The robot’s body was built using LEGO Mindstorms NXT parts. This means that when not operating autonomously, OSkAR can be controlled via Bluetooth from an Android phone. On the software side, [Courtney] began with the stock Angstrom Linux distribution for the BBB. After running into video problems, she switched her desktop environment to Xfce. OpenCV provides the machine vision system. [Courtney] created models for several objects for OSkAR to recognize.

Right now, OSkAR’s life consists of wandering around the room looking for pencils and door frames. When a pencil or door is found, OSkAR announces the object, and whether it is to his left or his right. It may sound like a rather boring life for a robot, but the semester isn’t over yet. [Courtney] is still hard at work creating more object models, which will expand OSkAR’s interests into new areas.
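For the curious, the left-or-right call can be sketched in a few lines of Python. This is only an illustration and not [Courtney]'s actual code: it assumes the detector returns a bounding box as an x offset and width in pixels, and the function names `describe_position` and `announcement` are made up for this example.

```python
def describe_position(box_x, box_width, frame_width):
    """Return 'left' or 'right' for a detected object.

    Assumption: box_x is the left edge of the bounding box in pixels,
    and the split point is simply the horizontal middle of the frame.
    """
    center_x = box_x + box_width / 2.0
    return "left" if center_x < frame_width / 2.0 else "right"


def announcement(label, box_x, box_width, frame_width):
    """Build the phrase that would be handed to a speech synthesizer."""
    side = describe_position(box_x, box_width, frame_width)
    return f"{label} to my {side}"


if __name__ == "__main__":
    # Hypothetical detection: a pencil whose box starts at x=100 in a 640-pixel-wide frame.
    print(announcement("pencil", 100, 50, 640))
```

A real robot would probably want a dead zone around the center so an object straight ahead isn't forced into one side, but the basic idea is just comparing the box center to the frame midpoint.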

[Thanks Emad!]

Awesome robot :D

Cool, I almost bought the same hub for a quasi-related project, but wasn’t sure how the hub and Linux would go about assigning multiple video inputs, so I went with one that has individual power switches per port. Still need to get around to modifying that thing :/

If there are any WV hackers reading this, I am looking for folks wanting to start up a hackerspace in the Greenbrier/Monroe County area.

She should combine this with the Jasper project listed yesterday, and a grasper. “Rover, fetch me a pencil.”

Three comments? I think OSkAR needs some more comment love so I’m throwing this comment out there. It looks like the person who put it together put a lot of work into the project.