VGA, DVI, and HDMI ports use Display Data Channel (DDC) to communicate with connected displays. This allows displays to be plug and play. However, DDC is based on I2C, which is used in all kinds of electronics. To take advantage of this I2C port on nearly every computer, [Josef] built a VGA to I2C breakout.

This breakout is based on an older article about building a $0.25 I2C adapter. This adapter hijacks specific lines from the video port, and convinces the kernel it’s a standard I2C device. Once this is done, applications such as i2c-tools can be used to interact with the port.
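Once the kernel exposes the adapter as an I2C bus, it appears as a `/dev/i2c-N` character device. As a rough sketch of what a tool like `i2cdetect` does, the Python below probes each address with a one-byte read. The helper names (`candidate_addresses`, `probe`, `scan`, `scan_bus`) and the bus number are assumptions for illustration, not part of [Josef]'s project. `i2cdetect -l` lists the buses actually present. Read-probing can upset some devices, so treat this as a sketch and not a safe default.

```python
import fcntl
import os

I2C_SLAVE = 0x0703  # ioctl request number from <linux/i2c-dev.h>


def candidate_addresses():
    """7-bit addresses outside the reserved ranges (0x03..0x77)."""
    return list(range(0x03, 0x78))


def probe(fd, addr):
    """Select addr on the open bus and try a one-byte read.

    Returns True if a device acknowledged, False on any I/O error.
    """
    try:
        fcntl.ioctl(fd, I2C_SLAVE, addr)
        os.read(fd, 1)
        return True
    except OSError:
        return False


def scan(fd, probe_fn=probe):
    """Return every candidate address for which probe_fn reports a device."""
    return [addr for addr in candidate_addresses() if probe_fn(fd, addr)]


def scan_bus(bus_number):
    """Open /dev/i2c-<bus_number> (hypothetical bus index) and scan it."""
    fd = os.open(f"/dev/i2c-{bus_number}", os.O_RDWR)
    try:
        return scan(fd)
    finally:
        os.close(fd)
```

With a monitor attached, a scan of the video port's bus would be expected to show the display's EDID EEPROM, which conventionally answers at address 0x50.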

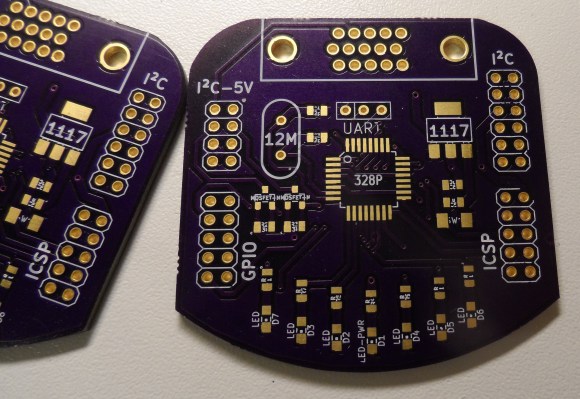

[Josef] decided to go for overkill with this project. By putting an ATmega328 on the board, control for GPIOs and LEDs could be added. Level shifters for I2C were added so it can be used with lower voltage devices. The end product is an I2C adapter, GPIOs, and LEDs that can be controlled directly from the Linux kernel through an unused video port.

Who has unused video ports?

Literally all of my servers do. The video port may have been used for all of 30 minutes to load up an OS, assuming I didn’t just boot it off an ISO over the network via the remote management card instead.

In fact, my servers use far more serial ports to talk to remote sensors, and RS-232 has plenty of limitations compared to I2C for low-bandwidth tasks such as sensors in another room.

Even my NVidia SLI gaming rig uses only one of its four DVI ports to drive a 4k monitor. While I would question whether the second port on the main card is usable this way, the second card is used purely for its GPU cores and memory; those two DVI ports have never had anything connected to them.

(Though I admit I personally can’t think of any uses for i2c on my gaming PC, but that’s my own problem)

How about something like this? https://www.youtube.com/watch?v=huj5bD-x-2c (ambient light behind monitor)

Tons of uses for I2C on a gaming rig.

Custom controllers, haptic feedback, those cool light things that light the room up based on monitor colors… The list goes on and on!

Laptop users…

Almost every laptop and most computers I own have an unused VGA port :-)

Not that you need an unused one. Even with a monitor hooked up, the bus still has 125 unused addresses.

And you could add features to the monitor with this. Put the interface inside a flat screen and maybe you could add, say, an RS-232 port to your monitor or ambient light effects.

If you used one of the VGA color wires as a clock and another one of the color wires as a data signal, you might be able to program a microcontroller that way. The color signals would be inherently in sync because they’re designed to be parts of the same pixel. Also, the VGA spec says the color wires are kept low during non-image parts of the signal, so you should be able to do everything you want using an image alone. You could even have a normal full-screen web-app produce the data. Some level-shifting might be necessary, but a few BJTs could handle that.

Not showboating, but you just gave me a brain-boner good sir/ma’am

Hate to burst your bubble but the color signals are analog on a VGA connector (this is why there’s usually a DAC chip or resistor ladder DAC coming from the digital side). You could accomplish what you’re looking to do by using an i2c GPIO expansion chip, however.

Hate to burst your bubble, but a digital signal is also analog, just with two predetermined levels.

I guess you could do that? You’d be counting on display gamma ramp to be predictable enough to get the right voltage levels.

The color signals top out at 0.7 V. Once you factor in the extra hardware needed to convert that to TTL voltage levels, you’ve basically built a 2-bit ADC. The expense and complexity of doing that is higher than just using an I2C GPIO breakout chip.
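To put rough numbers on that 0.7 V ceiling, here is a small sketch that maps a pixel value to the nominal voltage on one color line and slices it with a comparator threshold. It assumes a perfectly linear DAC with no gamma correction and a comparator set at half scale (0.35 V); both are illustrative assumptions, and the function names are made up for this example.

```python
VGA_FULL_SCALE_V = 0.7  # nominal peak of a VGA color signal, per the comment above


def channel_voltage(value, bits=8):
    """Nominal voltage for a pixel value, assuming a linear DAC (no gamma)."""
    full_scale_code = (1 << bits) - 1
    return VGA_FULL_SCALE_V * value / full_scale_code


def as_logic_bit(value, threshold_v=0.35):
    """Slice the analog level with a comparator at threshold_v (assumed)."""
    return channel_voltage(value) > threshold_v


if __name__ == "__main__":
    for code in (0, 127, 128, 255):
        print(code, round(channel_voltage(code), 4), as_logic_bit(code))
```

Even full white stays well below TTL input-high levels, which is why some comparator or level-shifting stage is needed before a microcontroller pin could read it.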

He might as well use his jtag, but that’s not the point, now is it? We’re talking about a *hack* here, not necessarily the best solution.

Doge, using the i2c bus off of VGA to talk to an i2c GPIO expander is a pretty cool hack. It’s simple and cheap. My personal definition of a hack is a feat of engineering where you’re doing something with hardware that no one expected it to do, and you get extra points for doing it in an awesome, solid way. I could ship a VGA to GPIO pass-through that used a chip and it’d be awesome. Doing something hacky that requires taking over a display’s output as well as sitting on the i2c bus doesn’t get as many brownie points for me because you can’t ship it. Maybe I shouldn’t have used the words “hate to burst your bubble.” My intent here was to call out what wouldn’t work, but I got a response from one person saying that digital is analog (sure) where they hadn’t actually done any research about what the analog color signal looks like, or the voltage range, and your response equating spending the couple dollars to hack together an i2c GPIO chip based programmer that sits off the VGA with buying an expensive JTAG programming cable. It’s great to see users defending hacks but I think you’re misreading my intention.

That tops out at a 0 to 0.7 V range. Plus, there is a nasty amount of analog components that will cause slew. It’s not designed for digital. Now pin 9 for EDID? That will work just fine.

I was going to make similar comments earlier, then I realized that analog VGA signals are 0 – 0.7V. So going from dark to full on each of the R/G/B channels could barely turn on a transistor and might be able to do something.

Ideally you want a comparator, or even to abuse an LVDS receiver, if you want any speed out of that, ’cause transistors aren’t all that great for switching speeds.

An op-amp makes a piss-poor one, and I would never recommend using it when a comparator is specifically designed for logic-type switching.

This… is actually a pretty sweet hack. Can you get multiple I2C buses running or just a single one?

This is a neat hack for sure.

This may be a stupid question, but if you’re going to go through that much trouble (putting a uC on the board) why not just mount it as a USB device and do normal USBI2C? Seems that would be more useful rather than hacking into the VGA port.

Umm that’s supposed to be USB I2C but apparently those get filtered out. If that also gets filtered out, try USB<->I2C :-)

This is actually what I have on my i-might-do-this-one-day list. Also, if you already have a uC with USB, then why stop at I2C? You can expose all the low-speed peripherals to the host computer relatively easily. USART, SPI, CAN bus, TFT controllers, DACs, ADCs, crypto accelerators, RTCs… everything which already has a kernel subsystem can just be plugged in (and the rest can be accessed via libusb from userspace). Think of the possibilities! TWILight was originally just a breakout board, but more as an afterthought, the AVR was added (I needed to use it somehow anyway).

What kind of circuit protections are necessary (or advised) for something like this? I could see possibly frying a VGA chip this way.

You could use an I2C isolator chip.

I have found aluminum foil (conical configuration) works well for isolation of neural networks. It might be possible to make a surface mount scaled version to prevent unwanted signals/transmissions to the chip in question.

I’ve been wondering for quite a while whether any video drivers (which have to parse the DDC information) have security vulnerabilities, such that a malicious projector in a conference room, for instance, could compromise laptops plugged into it.
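For context on what such a parser has to guard against: a base EDID block is 128 bytes, starts with a fixed 8-byte header, and its bytes must sum to zero modulo 256. A minimal defensive check, sketched under the assumption that the block has already been read from the display (the function names here are illustrative, not taken from any driver), could look like this:

```python
EDID_HEADER = bytes([0x00, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0x00])
EDID_BLOCK_SIZE = 128


def is_valid_edid_block(block):
    """Check length, fixed header, and checksum before trusting any field."""
    if len(block) != EDID_BLOCK_SIZE:
        return False
    if bytes(block[:8]) != EDID_HEADER:
        return False
    # The last byte is chosen so that all 128 bytes sum to 0 mod 256.
    return sum(block) % 256 == 0


def make_blank_block():
    """Build a header-only block with a correct checksum, for testing."""
    block = bytearray(EDID_BLOCK_SIZE)
    block[:8] = EDID_HEADER
    block[127] = (-sum(block[:127])) % 256
    return bytes(block)
```

A checksum only catches corruption, not malice: an attacker can compute a valid one, so every length and offset inside the block still needs its own bounds check.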

And this doesn’t stop with VGA, of course, HDMI and DisplayPort both have auxiliary data channels of their own.

Probably not going to work too well. Worst that can happen is that the OS would stop reading the EEPROM when the buffer is full.

Even if the driver is that badly written, how tiny can you make your code and still have it do something non-trivial enough to be a security risk in the first place? A few hundred bytes?

This is, by far, one of the coolest projects I’ve seen on HAD.

I already explored the possibility of DDC malware; the upshot is that although it might be possible in theory, the OS would have to be rooted already.

It is not possible to embed malicious code in a laptop’s LCD panel or other device without modifying the parameters so much that the screen wouldn’t work at all.

I did find a while back that some older laptops can be hacked using the memory chip on the RAM (24WC02) but this only works with some BIOS versions.

It’s an interesting mod though, as it means you can force users to only use certain RAM chips (cough aitch pea /cough) and WiFi adaptors.

Some netbooks can take 2GB of RAM if you disable the onboard 24C04/02, which is quite handy. This was done because they have 512MB onboard and often couldn’t take even a 1GB stick due to chipset limitations imposed by the manufacturers.

It essentially disables the RAM entirely so it becomes invisible so if this part has failed the compact motherboard can still be used for homebrew Beowulf clusters etc.

Re. programming micros using VGA is damned clever :-)

I had wondered if such a thing was possible, as there are three outputs available which can indeed be driven with resonant signals, and an LM567 used with an external clock could detect the roughly 20 MHz signals if the external clock is phase locked to the sync signal, utilizing a reverse-biased blue LED based tuning diode as the feedback element.

Also, the venerable LM1881, although running out of spec, can indeed detect and separate VGA speed clocks, so this might help folks who need to program a micro but have no dedicated programming hardware, or none that works.

One thing I always wondered…. why is it not a normal thing to be able to control the parameters of your monitor from the PC through this I2C?

Agreed mate
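For what it’s worth, a protocol for exactly this exists: DDC/CI, which carries monitor control commands over the same I2C lines. As a hedged sketch, the Python below only builds the bytes of a "Set VCP Feature" write for the display at 7-bit address 0x37; it does not send anything, and whether a given monitor honors it depends on its DDC/CI support. The function name is made up for illustration, and the brightness code 0x10 is the commonly used VCP code.

```python
DDC_CI_ADDR = 0x37        # 7-bit I2C address of the display's DDC/CI endpoint
HOST_SOURCE_ADDR = 0x51   # source address byte the host places in each message
SET_VCP_OPCODE = 0x03
VCP_BRIGHTNESS = 0x10     # commonly used VCP code for luminance


def build_set_vcp(vcp_code, value):
    """Return the payload bytes to write to DDC_CI_ADDR for a Set VCP command."""
    if not 0 <= value <= 0xFFFF:
        raise ValueError("value must fit in 16 bits")
    body = [SET_VCP_OPCODE, vcp_code, (value >> 8) & 0xFF, value & 0xFF]
    message = [HOST_SOURCE_ADDR, 0x80 | len(body)] + body
    # Checksum is the XOR of the 8-bit write address and every message byte.
    checksum = DDC_CI_ADDR << 1
    for byte in message:
        checksum ^= byte
    return bytes(message + [checksum])


if __name__ == "__main__":
    print(build_set_vcp(VCP_BRIGHTNESS, 50).hex(" "))
```

The resulting bytes would then be written to the bus in a single I2C transaction, for example through the `/dev/i2c-N` device on Linux.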

All of this seems silly. For the same or less money and effort you could just add a damn I2C interface to your USB port; the DDC trick is meant to be a quick cheap hack.

Maybe someone wants to add this to a monitor? You could add, say, an RS-232 port to your monitor, ambient lighting, or even control the volume if it has built-in speakers.

Or maybe you want to add an amp and external speakers to the monitor and control them.

If you read the specs… DDC was actually intended, at one point, to be used for things like touchscreens/digitizers… It never really caught-on, so some OS-level DDC implementations couldn’t possibly implement it, due to artificial speed-constraints… A shame, really… I2C is 400kb/s, *plenty* for anything RS-232…
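A back-of-the-envelope comparison of the two links, as a sketch: each I2C byte costs nine clock cycles (eight data bits plus an ACK), while RS-232 with 8N1 framing costs ten bit times per byte. The figures below ignore I2C address bytes and start/stop conditions, and they assume the bus really runs at the stated clock; many DDC implementations run at 100 kHz, not 400 kHz.

```python
def i2c_payload_rate(clock_hz):
    """Approximate payload bytes/s: 8 data bits + 1 ACK bit per byte."""
    return clock_hz // 9


def rs232_payload_rate(baud):
    """Payload bytes/s for 8N1 framing: start + 8 data + stop bits."""
    return baud // 10


if __name__ == "__main__":
    print("I2C @ 400 kHz :", i2c_payload_rate(400_000), "bytes/s")
    print("I2C @ 100 kHz :", i2c_payload_rate(100_000), "bytes/s")
    print("RS-232 @ 115200:", rs232_payload_rate(115_200), "bytes/s")
```

At 400 kHz the I2C link comfortably outruns a 115200-baud serial port; at 100 kHz the two are roughly on par.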

This is very exciting; I used it a lot during the last year.

Recently I built an I2C expander using a PIC, which has 11 ADC channels, 9 digital I/O, and 5 PWM outputs.

On the PIC side I used mikroPascal, and the master access is done through Python (Anaconda/Spyder).

It works great under Linux and with any capable I2C “master”, but I would really like to do the same thing under Windows (7?).

Do you guys have any suggestions?

Look for my blog: CR2875

i2c terminal over VGA: https://dave.cheney.net/2014/08/03/tinyterm-a-silly-terminal-emulator-written-in-go

Thanks, the example is under Linux though. I was looking for Windows 7… I guess W10 should work.