First person video – between Google Glass, GoPro, and other sports cameras, it seems like everyone has a camera on their head these days. If you’re a surfer or skydiver, that might make for some awesome footage. For the rest of us though, it means hours of boring video. The obvious way to fix this is time-lapse. Typically time-lapse throws frames away. Taking 1 of every 10 frames results in a 10x speed increase. Unfortunately, speeding up a head mounted camera often leads to a video so bouncy it can’t be watched without an air sickness bag handy. [Johannes Kopf], [Michael Cohen], and [Richard Szeliski] at Microsoft Research have come up with a novel solution to this problem with Hyperlapse.

First person video – between Google Glass, GoPro, and other sports cameras, it seems like everyone has a camera on their head these days. If you’re a surfer or skydiver, that might make for some awesome footage. For the rest of us though, it means hours of boring video. The obvious way to fix this is time-lapse. Typically time-lapse throws frames away. Taking 1 of every 10 frames results in a 10x speed increase. Unfortunately, speeding up a head mounted camera often leads to a video so bouncy it can’t be watched without an air sickness bag handy. [Johannes Kopf], [Michael Cohen], and [Richard Szeliski] at Microsoft Research have come up with a novel solution to this problem with Hyperlapse.

Hyperlapse photography is not a new term. Typically, hyperlapse films require careful planning, camera rigs, and labor-intensive post-production to achieve a usable video. [Johannes] and team have thrown computer vision and graphics algorithms at the problem. The results are nothing short of amazing.

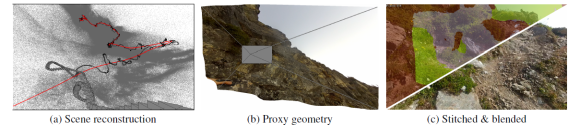

The full details are available in the team’s report (35MB PDF warning). To obtain usable data, the fisheye lenses often used on these cameras must be calibrated. The team accomplished that with the OCamCalib toolbox. Imported video is broken down frame by frame. Using structure from motion algorithms, hyperlapse creates a 3D models of the various scenes in the video. With the scenes in this virtual world, the camera can be moved and aimed at will. The team’s algorithms then pick a smooth path that follows the original cameras trajectory. Once the camera’s position is known, it’s simply a matter of rendering the final video.

The results aren’t perfect. The mountain climbing scenes show some artifacts caused by the camera frame rate and exposure changing due to the varied lighting conditions. People appear and disappear in the bicycling portion of the video.

One thing the team doesn’t mention is how long the process takes. We’re sure this kind of rendering must require some serious time and processing power. Still, the output video is stunning.

Overview Video

Technical details

Thanks [Gustav]!

That’s actually pretty awesome.

I remember poking around with some PC-only video editing software—Hitfilm, I think—that could automatically extract 6D camera paths by using similar techniques, but this was much further back, around 5 years ago.

It’s nice, the bucking video they made, feels almost fake but not quite, like watching a scripted camera fly through a video game world, or a drone fly through a real world.

*biking not bucking. Hmm.

Jack Crossfire’s experiments must feel pretty inconsequential after seeing this.

At least they didn’t call the POC GoProLapse \o/

Comment of the week.

Yay Siggraph – on this week in Vancouver… I miss it :(

“One thing the team doesn’t mention is how long the process takes.” Well, the paper (pdf linked above) does give stats for the processing of one of the videos: 13m11s (23K frames) processed in approximately 3h (1h of which is a parallel procedure operating over 24 batches — so add a day if you do it in serial). And they note that the code used is proof-of-concept and they expect substantial speed up to be possible.

Whops – you’re right. Updated the article to reflect that.

I imagine that if a cam had some motion/orientation sensor data it would be easier, since you’d avoid having to construct a path map using image data. And talking of which, they say they use a depthmap, but how do they get a depth map from 2D footage of a gopro? You would have to do a complex calculation over several frames, and it will just take hours and hours on a regular (but speedy) computer.

So does gopro or similar make, or plan to make, a gopro with accelerometer/gyro/magnetic sensor data logging? Or would i t just be easier if people used phones/tablets to record the footage.

It appears from the article that they calibrate their software with the fisheye lens on the camera. If you know the exact shape and distance of the lens you should be able to extrapolate some depth information based on the image distortion.

The results aren’t perfect? Come on, the results are fantastic. This is a remarkable process.

I’m with you. It’s probably not going to be much use in Hollywood productions, but for home and even professional videos, it’s amazing! I found the artefacts created by it to be acceptable, they were much less distracting than the jolting in the standard timelapse.

What happens when the background is dynamic, say at a football game or where paths cannot be easily calculated, such as the POV of a pilot moving at high speed? It seems like the MRF stitching requires the background data to be largely stagnant and reasonably close by. How would it handle clouds?

I would imagine not well.. GPS+GYRO+ACC+MAG combo would give the algorithm a hand to understand what could be happening to the camera (shouldn’t be relied on but would certainly help it understand if most of the scene was moving but the camera was not (clouds, passers by, etc)

You can already see artifacts in the video, most notably in the mountain climbing scene.

I still think it is amazing though.

Saw this on Reddit yesterday – the computation time for each small clip can be in the range of hours but it’s a neat project. MS labs does some great stuff – their ICE compositing program is superb.

From the “First Person Hyperlapse Videos” paper (pdf listed in posting above)

Table 2: Approximate computation times for various stages of the

algorithm, for one of the longer sequences, BIKE 3

Stage Computation time

Match graph (kd-tree) 10-20 minutes

Initial SfM reconstruction 1 hour (for a single batch)

Densification 1 hour (whole dataset)

Path optimization a few seconds

IBR term precomputation 1-2 minutes

Orientation optimization a few seconds

Source selection 1 min/frame (95% spent in GMM)

MRF stitching 1 hour

Poisson blending 15 minutes

Since this is a research paper demonstrating the general algorithm for the technique (as opposed to a paper demonstrating a performance enhancement for an existing technique), I’m assuming that there is probably a pretty massive amount of potential optimization that could be done if they were looking to implement it into an actual product. This is, of course, ignoring the significant hardware advancements that would be expected to occur before such a product would be ready to hit the market.

Yes, they mention working on a release version which I’m assuming will be a more useable program, and which would probably include a bit of optimization (or what are multicores for?). Personally I’d be happy with even these rendering times given the results – I hope they get the go-ahead.

There is a similar result of sorts be created with video orbits and mono slam methods used in robotics today. Their execution in the video above is very nice but they would have got a cleaner video by combining multiple exposures. You can read more about some of the methods used in the videoorbits project it can use fairly large amounts of memory to process large format pictures. However, I have not kept up with new techniques used in robotics but I hope this can help get one started. http://hi.eecg.toronto.edu/orbits/orbits.html and there is more information in Steve Mann’s book Intelligent Image Processing. Steve Mann has demonstrated methods and novel applications for wearable computers and implications in society at large for the last 34 years. I wish I could have taken his classes as his research has kept me dreaming for the last 13 years about wearable computers.

This is extremely impressive and works very well I’m wondering if two or more different cameras footage could be combined in this process.

Seems to be kind of the same thing like Photosynth..:

http://vimeo.com/80088893

Some of the tech is the same.

I really hope they actually do something useful with this. Microsoft’s miss-use of photosynth merely as a fancy social image gallery is criminal.

I never use this word, but this is truly awesome. I wonder how a sequence done on “Black Friday” along 5th Ave in NYC might look.

When can I use this for my videos? (In an idiot proof editing program of course.)

that is very very cool! I would say within a month, you will have some pretty big names offering to buy this tech.

Ausgezeichnet. Well done MS. Gives us hope that they will add hardware accelerators to PCs an games to enable this advanced technology.

waaait so it’s research by Microsoft that starts saying about google glass in the first seconds?

It’s Microsoft’s R&D arm, and it’s hard to argue that what is probably the most popular wearable face computer out right now is a potential candidate for this technology. I agree it feels funny though,

Would data from a Kinect be able to give better results, given a more accurate point cloud and, a wider FOV and more angle choices?

Maybe, but who goes rock climbing with a kinect strapped to their head?

Hackers. Hackers do.

Oddly enough when I watch the ‘naïve’ sample I can see it doesn’t look good but I’m OK, yet when I watch the hyperlapse sample fullscreen I can feel myself slowly going towards seasickness.

I think it’s the blending. But perhaps that can be tweaked? A little less blending when the blending becomes too noticeable maybe?

An app called HyperLapse is now available at Apple’s App Store . While not as sophisticated as the HyperLapse videos here, I think it’s still pretty impressive and it’s free. The only thing I would like is the ability to edit the separate chunks.

A new product (basicaly a sensor box) called SteadXP is using pretty much the same principle, but the the help of physical location recording.

http://steadxp.com/

It should greatly improve the compution time and not having a kinect strap to the head.