Smartphones are the most common expression of [Gene Roddenberry]’s dream of a small device packed with sensors, but so far, the suite of sensors in the latest and greatest smartphone is only used to tell Uber where to pick you up, or to upload pics to an Instagram account. It’s not an ideal situation, but keep in mind the Federation of the 24th century was still transitioning to a post-scarcity economy; we still have about 400 years until angel investors, startups, and accelerators are rendered obsolete.

Until then, [Peter Jansen] has dedicated a few years of his life to making the Tricorder of the Star Trek universe a reality. It’s his entry for The Hackaday Prize, and it made it to the finals selection, giving [Peter] a one in five chance of winning a trip to space.

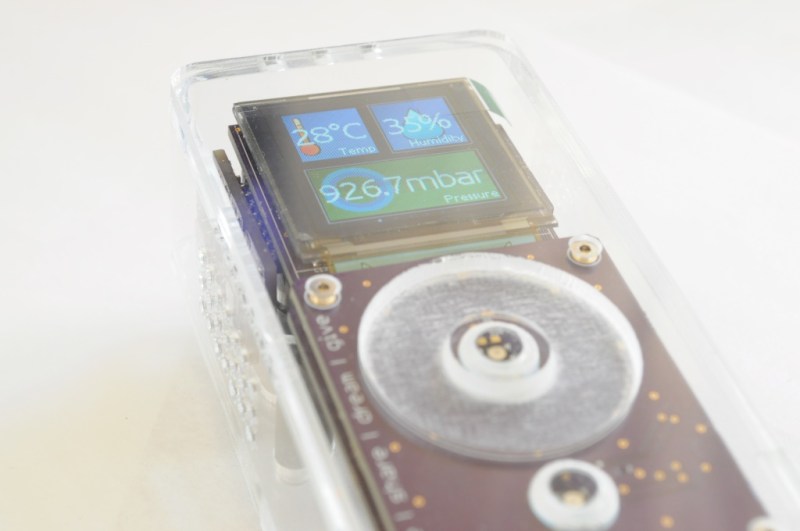

[Peter]’s entry, the Open Source Science Tricorder or the Arducorder Mini, is loaded down with sensors. With the right software, it’s able to tell [Peter] the health of leaves, how good the shielding is on [Peter]’s CT scanner, push all the data to the web, and provide a way to sense just about anything happening in the environment. You can check out [Peter]’s video for The Hackaday Prize finals below, and an interview after that.

This is your fourth or fifth revision in almost as many years, and the latest version has a lot of sensors that aren't found in the Mk.I version. Is this just a function of cost, or are device manufacturers really pushing out newer and more capable sensors?

You’re right — I think the Arducorder Mini is my seventh or eighth prototype (some of them don’t make the site), which is about one a year since I started designing them. For me it’s very exciting that this is the first “complete” design since the Mark 1, which was built nearly eight years ago — the others were experiments (or learning experiences) in different aspects of design, from designing handheld linux-powered systems, to incorporating different graphical requirements, to experimenting with sensor fusion.

I think the past few years have really been the start of a renaissance in off-the-shelf sensors. What excites me the most isn’t so much slightly smaller sensors or devices with higher resolution, but sensors that come completely out of left field and add embedded capability that simply wasn’t there before. The new microspectrometer that Hamamatsu released this year was a great example of this, as is the Radiation Watch Type 5 (a photodiode-based radiation sensor, which is MUCH smaller than tube-based systems). The AMS lightning sensor is also very cool, although because there are few storms where I live, I’ve mostly used it to detect when large electrical items (like the air conditioner) turn on. A few other parts have existed, but the price point or availability hasn’t been accessible: the single-chip inertial measurement units from Invensense that are an order of magnitude less expensive than they were only a few years ago, and the recent low-resolution thermal cameras, are great examples of this.

If you could describe what is missing from your tricorder, what would it be? What sensor is on your wishlist?

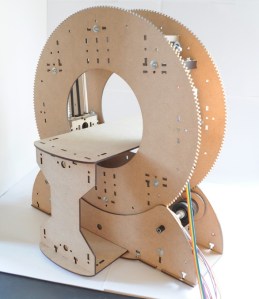

Before going into the sensors, after the first design (the Mark 1), each of my “tricorder projects” has examined a different aspect of the design of a pocket-sized handheld multisensor device that I thought was important. The Mark 2 examined beautiful graphics and visualization capabilities, and what it was like to SSH into your handheld instrument to develop code for it. The next iteration tried to be much less expensive and have modular sensor boards, but failed horribly — it was under designed, and far too limited in almost every way (including graphical capabilities). The Mark 4 came about right as smartphones became popular, and asked whether it would be better to pair a small keyfob with a smartphone or tablet instead of having a separate device. On the other end of the spectrum, the projects in the last few years have focused on much more complicated devices, one of them linux powered — but it became clear that I was basically trying to replicate making a smart phone with sensors, which I think isn’t the path to go for two reasons. The first is that I’m not sure the same user model that applies to talking, texting, and playing games applies to massively multimodal sensor data (as many other wonderful features as smartphones have, like incredible visualization and communication capabilities, as well as a large community of developers). The second reason is that even if it were the right user model, I’m a single human being, and smartphones are made by massive companies with hundreds of hardware and software developers. By making this mistake twice, I spent many months developing devices that barely got past showing their first “Hello World” before hitting some critical roadblock in WiFi drivers or graphics processors that would require months of redesign work. It’s terribly disheartening, and I decided to “take a break” and design the Open Source CT Scanner as a result. 
Humility is important, and I definitely was in over my head with an intractable design path, and not making my mistakes cheaply at all. That’s why I liked the development of the Arducorder — a set timeframe from start to finish (5 months) with major milestones along the way, to keep it tractable and exciting.

To the sensor wishlist — a spectrometer and high-energy particle detector have been big on the wish list since the Mark 1, so I’m very excited that those are incorporated. I would love to see a small embedded laser distance measurement tool, as well as a small CCD camera with an integrated framebuffer and an SPI interface. Even 640×480 resolution would be wonderful. There are modules that are inches in size, but it would have to be down to cellphone-camera sized to be small enough to incorporate into the Arducorder.

In the far future, I’d love to see a tiny nuclear magnetic resonance spectrometer, or miniature versions of other conventional lab tools. The community has also asked for a software defined radio module many times, but I’m afraid I don’t have much experience with RF — I hope that someone will design a sensor board with this, and incorporate it into the Arducorder.

You have a machine that can do an incredible number of measurements, but for any user, simply knowing what these measurements can tell you is the limiting factor. Do you see the tricorder as simply a lab you can put in your pocket, or as something more like a Star Trek tricorder that automatically tells you what you need to know? Is it a combination?

This is a good question. There really are three components: (1) the hardware and sensors themselves, which are capable of sensing a great many things (individually or in combination); (2) software written by folks with some understanding of those measurements (like scientists or engineers) that interprets them and gives you measures, like interpreting the spectrometer wavelengths that correspond with the photochemical reflectivity wavelengths as a measure of leaf health in the video; and (3) the users.

For people who already have some knowledge (or interest) in science, this is a fantastic tool, and it’s beautifully reconfigurable so that you can quickly add to the software (or hardware) to meet new applications. I’m excited to see some of the new tiles that folks come up with!

But there is this fundamental limitation in science, and in math, and statistics, and engineering, that we hammer into undergraduates, but if you’ve never been formally exposed to this material, then you may not know. And it’s that every test, every algorithm, every statistic, has certain assumptions, and if they’re not met, then the answer that comes out is meaningless. If you point a spectrometer at a television and ask it to calculate the photochemical reflectance index (PRI), it will still give you a number, but if it comes out positive, it doesn’t mean that there is any chlorophyll present: one of the assumptions of the PRI test is that you’re pointing it at a leaf. Similarly, if your data isn’t normally distributed (i.e. shaped like a bell curve), then you can’t use many statistics, because the numbers they give you will be invalid. We hear about this all the time: people doing bad science, bad statistics. It’s like trying to translate from English to French using Google: if you give it gibberish in, then what you get out may look like French, but it will be similarly meaningless. It’s easy for us to detect this because we use language all the time, but unless you use science (or math…), you might not know gibberish when you see it. And so one of our continual tasks in science education is to teach you to recognize when what you’re seeing is valid, and when it’s not, both so that you can do good science, but also so that you can help others when they’re not (or, detect when someone is trying to pull the wool over your eyes).
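To make the point about unmet assumptions concrete, here is a minimal sketch in Python. It assumes the commonly cited definition of the PRI as a normalized difference of reflectance at 531 nm and 570 nm (some authors flip the sign). The function name and the reflectance values are invented for illustration and are not taken from the Arducorder firmware.

```python
def photochemical_reflectance_index(r531: float, r570: float) -> float:
    """Return (R531 - R570) / (R531 + R570) for reflectances at 531 nm and 570 nm."""
    total = r531 + r570
    if total == 0:
        raise ValueError("reflectance is zero at both wavelengths")
    return (r531 - r570) / total


# Hypothetical reflectance values, invented purely for illustration.
leaf_pri = photochemical_reflectance_index(r531=0.12, r570=0.10)
tv_pri = photochemical_reflectance_index(r531=0.60, r570=0.50)

# Both calls succeed and give a positive number; nothing in the arithmetic
# checks the assumption that the target is actually a leaf.
print(f"leaf: {leaf_pri:.3f}  television: {tv_pri:.3f}")
```

The formula happily accepts any pair of readings, which is exactly why the "you are pointing it at a leaf" assumption has to be checked by the person holding the device.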

Believe it or not, I think this is a fantastic thing. I think the most important lesson in science is something incredible and cool that gets the student excited, but the SECOND most important lesson is how to actually do experiments and interpret data. I want people to walk around with these devices and learn more about their worlds, and get excited. And then I want them to point the thermal camera at a metal that’s reflective in the long-IR like aluminum, and ask why they’re seeing a thermal reflection instead of the temperature of the aluminum (and, in turn, learn about emissivity and reflection). And to point the spectrometer at their cat and wonder why it still gives a number (and learn about spectroscopy, and chemical identification). I especially want kids to do this, to learn about the /process/ of good science by doing it. I want this so that they can be good critical thinkers from a young age, and also learn a fluency with these basic processes that will let them be even better scientists than we can be today.

Any scientist will tell you that 90% of science is designing experiments that don’t work out as you’d first planned. I’m a postdoctoral research fellow, which is a fancy title for someone with a PhD who spends a few years doing research before finding a professorship. Most of what I do is design and perform experiments that don’t pan out at first. The difference between me and a novice scientist is that I make many more mistakes than them — but I’m extremely good at making my mistakes cheaply, testing more alternatives far quicker, and recognizing when something is likely a true result, or when a research path is unlikely to pan out. It’s all part of exploration, the scientific and critical thinking process, and it’s wonderful!

Hypothetical, and we're not going to hold you to whatever answer you give. You win the grand prize, a trip to space or about $200,000 USD. Which one do you take, and what is your reasoning for doing so?

What would make a better story, “scientist pays off student loans”, or “scientist releases open source science tricorder, travels to space”?

The Hackaday Prize has already allowed me to cross something off my bucket list — designing and completing a modern open source science tricorder that’s incredibly capable, manufacturable, and that lets folks share their science almost instantly with others. On top of that it’s modular, and through great effort, Arduino compatible, to help make it accessible and tinkerable to a much larger community. And all of this in only five months, from start to finish. I just turned 32, and it’s incredible to think that something I’ve tried to make happen for half my life is now sitting on the desk.

For even longer — as long as I can remember — I’ve wanted to go into space. If space adventurer were a career, I’d take it! (In Canada they select two astronauts only about once a decade, and there are strict age requirements so they can get enough missions out of you to make it worth all the training. I was born in the wrong year!). How often do you get to cross two things off your bucket list?

First you describe the device as used by the crews from Star Trek: The Next Generation. Then you show a publicity still from the original series. The simpler ones were from the original series.

The designs of [Peter]’s first builds were HEAVILY influenced by TNG. TOS tricorders had the same capabilities, though.

Also I’ve been rewatching TOS recently. Deal with it.

Only if the tricorders were in the hands of the SCE (Starfleet Corps of Engineers). The ones issued to the regular fleet were somewhat simpler in design. I mean someone could take one and easily scan for life in the room, and in our case discover he was surrounded by individuals like the EMH. And under some circumstances also use the same thing to check his surroundings. I grew up with the series and am completely familiar with it. I might be using a Doctor Who callsign here, but I’m a fan of the series, deeply, and of course Doctor Who and then Star Wars.

There’s something else: the user would need to be taught how to use it. It’s not like simply using an electronic gadget here in this timeline.

Ultimately though that’s a software issue. You could write software with a simple enough interface to give the user simple instructions (“point sensor at a leaf”) then a list of options for what information (which is data with context) he wants (“hydration level”, “nitrogen level”) as, say, a bar graph with “good” in the middle in green. If the spectrometer can identify elements, have a list of them show up, or a spectrograph with elements marked on it.
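As a rough illustration of the kind of interface described above, the following Python sketch maps a reading onto a text bar with the "good" band in the middle. The function names, the band limits, and the hydration value are all hypothetical and do not come from the Arducorder software.

```python
def verdict(value: float, low: float, high: float) -> str:
    """Classify a reading against a 'good' band [low, high]."""
    if value < low:
        return "low"
    if value > high:
        return "high"
    return "good"


def render_bar(value: float, low: float, high: float, width: int = 21) -> str:
    """Draw a text bar whose middle third represents the 'good' band."""
    if high <= low:
        raise ValueError("high must be greater than low")
    span = high - low
    # Extend the scale by one band width on each side so "good" sits in the middle.
    left_edge = low - span
    right_edge = high + span
    clamped = min(max(value, left_edge), right_edge)
    position = round((clamped - left_edge) / (right_edge - left_edge) * (width - 1))
    cells = ["-"] * width
    cells[position] = "|"
    return "".join(cells)


# Hypothetical "hydration level" reading and band, invented for illustration.
hydration = 47.0
print(render_bar(hydration, 40.0, 60.0), verdict(hydration, 40.0, 60.0))
```

Readings far outside the scale are clamped to the ends of the bar, so the display stays readable even when the underlying number is meaningless.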

Point is, it’s all software, and anyone can develop that. If a scientist has a purpose in mind he can write or commission software, himself.

Similarly with how you want the data represented, primary-school and middle-school toolkits would be possible.

And of course with open source, if it’s popular people will build on each other’s work and it could end up able to represent anything it can detect, in whatever way the user can understand.

And that’s what’s good about software.

“aboot” !!!!

I love it !!!!

One of the most useful features a tricorder could have would be a gas sensor. Detecting CO, CO2, O2 and cooking gasses would make it instantly useful in every household.

According to the hackaday.io project page, the device does include an SGX-Sensortech MICS-6814 multi-gas sensor.

From the data sheet:

Detectable gases

• Carbon monoxide CO: 1 – 1000ppm

• Nitrogen dioxide NO2: 0.05 – 10ppm

• Ethanol C2H5OH: 10 – 500ppm

• Hydrogen H2: 1 – 1000ppm

• Ammonia NH3: 1 – 500ppm

• Methane CH4: >1000ppm

• Propane C3H8: >1000ppm

• Iso-butane C4H10: >1000ppm

Neat device, I hope it is sold as a kit some day.

But it is not clear to me that it can actually DISTINGUISH between these gases. The device has 3 independent gas sensors, so if you know what you are measuring you can figure out how much there is, but it cannot tell you what is there in detail. It is NOT a mass spectrometer… (that would have been awesome)

There’s a lot of cool sensors on this, but the display and interface will always be mediocre compared to a smartphone screen. A sensor only tricorder with a data link to a smartphone would be ideal.

I’m sure I remember a few years ago reading about someone with such a setup in an alpha stage, a module with multiple sensors connected to a phone via Bluetooth.

The number of sensors in modern phones is quite something. I’ve played with seismic/decibel/lux etc. apps on my Android phone, but realistically I can’t be sure the readings they’re showing have any real accuracy beyond the phone telling me “yes I can feel movement, hear sound and see light”, so an external, properly calibrated sensor module would be ideal.

I’m in the process of developing just that. Most of the sensors are already talking to the µC. I wrote a small program in Delphi to test the serial connection to get and graph measurements. Now I am developing an Android App to connect to the µC via Bluetooth.

Additionally, the App will display data of all available built-in sensors of the phone.

The device will have most of the sensors the Arducorder has, and a few more. I began development on my project before I heard of the Arducorder, but I admit taking it as an inspiration along the way :)

The device will have its own battery and I plan on adding an SD-Card so it can log data independently of the phone.

At the moment, I use a development board for the µC and all the sensors attached via jumper cables as I want to make sure that the software is functional before I start designing a PCB. I hope to make the PCB the size of the average smartphone, so it can be attached to the back.

When the App is working and pretty enough to show, I will release more info on the sensors.

This is what I remembered, originally a successful Kickstarter in 2012 that’s grown into a company http://variableinc.com/

The NODE+ is a core module with a 3-axis gyroscope/magnetometer/accelerometer built in, with a port on each end to attach one or two extra (sold separately) sensor modules onto it depending on your needs. They look great but they sure ain’t cheap.

It does have that wifi chip, so that’s doable. It would make sense to just show mode / options data on the tricorder screen, and stream all the sensor data to a phone or tablet.

The interview tells the story of how I’d been exploring this alternative in recent years — build something like a smartphone (or, build something that /interfaces/ to a smartphone), because they have more computational and visualization capabilities, so “it must be better”. After trying this in previous experiments, I came to realize that (1) For a handheld wand device, Bluetooth is far too slow to stream data from all of the sensors at full speed, or (in many cases) even one of the sensors, like the spectrometer, so it would just be a novelty rather than designed for real use. (2) For a backpack device that physically connects to the back of the phone, there are far too many phone shapes and camera locations to make this elegant or tractable, and they’re constantly changing. You’d likely end up with 20 different enclosures (not sustainable), or one resizable one that looks really clunky. (3) As a scientist, I only ever use a desktop or laptop to do any /real/ in-depth analysis on data — it would take tens or hundreds of times as long on a phone or tablet. I decided that from a usability perspective, the primary function of the thing in your hand is to collect the data, and get that data to the user quickly and elegantly (as well as any preloaded measures, like the photochemical index in the video). Even with an oscilloscope that has a large screen and a million buttons, I find it’s pretty rare that I use any but the simplest of the internal math functions except maybe the FFT. More often if you’re not looking for something simple, you save the data, fire up MATLAB, and bang out your analysis much more quickly.

Still, I like to cover all the bases, so I designed the Arducorder Mini to have a simple, attractive, and intuitive interface to get an overview of all the data from its sensors in only a few seconds. For the students, teachers, or scientists like me, the data can be instantly transmitted to Plotly to allow folks to do what analysis they can in a web browser (on a smart device, tablet, or desktop), shared on the devices of all the folks around you, or easily imported into something like MATLAB for really playing with the numbers. For tinkerers (or people who really want the sensors physically connected to their smartphone or tablet), all of the sensor boards are modular, and one could design something new for them to plug into with ease. Whether that’s an ultra-simple tablet backpack with nothing but a microcontroller and a serial port to transfer the data from the sensors, or a motherboard with the new Intel Edison, with far more RAM and computational horsepower (and, power requirements…), is entirely up to the tinkerer. You could even make a holder for it and have it control your Open Source CT scanner, if you wanted. Part of my work with the Arducorder Mini is that I tried to design not just a device, but an /ecosystem/ centered around the device, that’s flexible enough that there’s something for everyone, whatever the use case. I really hope that ends up being the case, and I’m excited to see what happens.

With all due respect the fact that you think “real scientists will use matlab” will severely hamper this project. An interface to a mobile device (smartphone/tablet) is a must have.

You say Bluetooth is too slow and that may be true (although Bluetooth has some fast protocols too, for transmitting audio and controlling devices).

You can use a cable connection or wifi.

The interface you created is fine in the absence of another, and it is ABSOLUTELY necessary so the device can be used standalone, but a simple data connection to a smart device will allow tinkerers to build endless versions of data processing apps for your device. These apps will be able to create sensor fusion! Just imagine the possibilities: mobile internet connections, augmented reality, onsite processing, maps integration, etc.

The possibilities are endless. The killer app for this device will probably come from this smartphone connection.

-Since you can send data to plotly what exactly makes streaming data too slow?

-Why would you want to backpack this sensor to the phone?? The phone would just be another source of interference.

-Assuming budget is a constraint, you are sacrificing extra sensor capabilities for a blocky LCD screen, the data lines to said screen and your power budget.

-If you want an HMI portion of the tricorder, it should be built as an extension to the base tricorder.

-You did not mention how the user would calibrate this device for serious scientific work, or how a student would calibrate it before an experiment. Without calibration all you have made is a brick.

Without calibration, your sensors’ errors would be those quoted on the datasheets, assuming the part is within the electrical/environmental conditions for usage.

It would be very hard/expensive to calibrate all the different types of sensors on a production line, e.g. how do you expect a regular production line to have different gas mixtures for the MICS-6814 multi-gas sensor?

Without good, detailed documentation on this, this project might not score too well on the “how ready is it for production” criteria. This is certainly not going to be cheap because of this.

Furthermore, I add that science is hard, very hard, to do. Getting your equipment to work properly is a PITA. I am not convinced from your video that you have done the following:

-Created 100 of these and made sure they have matching sensor readings under ideal conditions.

-Tested these sensors in different humidities and temperatures (a Star Trek tricorder works perfectly on volcanic and ice worlds)

-Have a scaling production process to guarantee all these sensors in one box work effectively. The more sensors you add the more testing processes you have to run.

For real scientific work you have to address at least these 3 points and more. So at this point I would not see any scientist publishing papers using data from this tricorder and passing the peer review process.

How about you contribute meaningful feedback instead of bitching about what he has accomplished and acting like you are the resident expert on science.

Typically, you do corner cases for operating environment, temperature cycling, humidity, vibrations to make sure your device works.

Not sure if you need a population size of 100. There are different statistical distributions you can use, with a lower degree of certainty. Not sure why you are comparing the sensors with each other (instead of against a proper calibration standard or a calibrated sensor)? If they are off the same way because of the way the sensor operates, you might not know.
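The difference between comparing units with each other and calibrating against a reference can be sketched in a few lines of Python. This is a generic two-point linear calibration, not the Arducorder's procedure; the function names and all readings below are hypothetical.

```python
def two_point_calibration(raw_low: float, ref_low: float,
                          raw_high: float, ref_high: float) -> tuple[float, float]:
    """Return (gain, offset) so that gain * raw + offset matches the reference."""
    if raw_high == raw_low:
        raise ValueError("the two raw readings must differ")
    gain = (ref_high - ref_low) / (raw_high - raw_low)
    offset = ref_low - gain * raw_low
    return gain, offset


def correct(raw: float, gain: float, offset: float) -> float:
    """Apply the linear correction to a raw sensor reading."""
    return gain * raw + offset


# Hypothetical readings: two units agree with each other at 52.5,
# but both share the same systematic error.
unit_a_raw = 52.5
unit_b_raw = 52.5

# Hypothetical raw readings taken at two known reference points (0.0 and 100.0).
gain, offset = two_point_calibration(raw_low=1.5, ref_low=0.0,
                                     raw_high=103.5, ref_high=100.0)

print(unit_a_raw == unit_b_raw)          # the units match each other
print(correct(unit_a_raw, gain, offset)) # the corrected value differs from the raw one
```

Matching readings between units only show that they share the same behaviour; the shared error becomes visible only once a reference standard enters the picture.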

Dude, let him finish! Captain Kirk won’t be along for a little while yet, all that stuff can be done in time. He hasn’t even got a prototype yet, it’s still an experiment.

came to say same thing

cool pack of sensors combined with funny UI, and when I say funny I mean WTF??!?!

after recent news regarding space travel, I don’t think hackaday prize winner will be risking his/her life to go to space.

Xcor might be flying soon, and that’s only about $100,000 for the same trip as Virgin Galactic. Take the cash, get $100k and a ride to space.

I’d still ride SS2. I’m not going to speculate before the NTSB report comes out or Burt Rutan says something, but the feathering mechanism *did* deploy early, and a number of engineers have said it wasn’t the engine.

It’s not a real spaceship til it’s at least killed somebody. All the good spaceships did.

It’s like a christening.

It’s fine, unless the one that Hackaday sends a person up in crashes. That would be a PR nightmare.

but a good article on FAIL of the week…

I was under the impression they were using a balloon, so it’s “space”, technically. You’d see a nice bit of the Earth. But it’s certainly not orbit, so no weightlessness. Balloons are pretty foolproof, with just the one main failure mode, and that doesn’t seem to happen much.

The first one? http://www.tricorderproject.org/index.html

This is the same dude.

What are the chances, eh?

I’m gunna be completely honest: this is basically a LabQuest with wifi capabilities and fewer data collection and storage options. High school students use these every day and have been doing so for years.

Yeah, but LabQuest didn’t have nearly that many sensors built in and wasn’t open source. It was basically a handheld display for other Vernier sensors, which aren’t exactly cheap.

Because the tricorder design is open source, it’s actually possible for it to be used in more sophisticated applications than just Excel and LabVIEW…

My PC’s just an ENIAC with less tubes.

So now I had everything plugged in, connected to the internet, and ready to go. The Microsoft Kinect is a fantastic piece of hardware and one that should be a lot of fun for games, but for a large part of the computer world, when they see a piece of technology like the Microsoft Kinect, the question is not what will developers do with it, but what can I do with it.

S-Video will look better, but the audio cable is expensive ($80 – $100), so RCA is the most practical.

Toshiba made a mini low-cost CMOS camera: TCM8230MD, 640x480px, measures 6x6x4.5 mm.