With Samsung’s new Gear VR announced, developers and VR enthusiasts are awaiting the release of the smartphone-connected VR headset. A few people couldn’t wait to get their hands on the platform, so they created OpenGear, a Gear VR compatible headset.

The OpenGear starts off with a Samsung Galaxy Note 4, which is the target platform for the Gear VR headset. A cardboard enclosure, similar to the Google Cardboard headset, holds the lenses and straps the phone to your face.

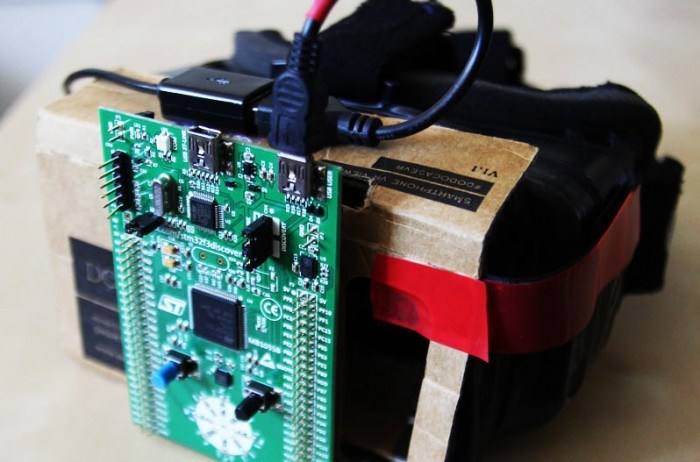

The only missing part is the motion tracking electronics. Fortunately, ST’s STM32F3 Discovery development board has everything needed: a microcontroller with USB device support, an L3GD20 3-axis gyro, and an LSM303DLHC accelerometer/magnetometer. Together, these components provide a USB inertial measurement unit for tracking your head.
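To give a feel for how a gyro and an accelerometer combine into a head-tracking estimate, here is a minimal sketch of a one-axis complementary filter. This is not the OpenGear firmware's algorithm, which the article does not describe. The function names, the axis sign convention, and the `alpha` blend factor are all assumptions chosen for illustration.

```python
import math

def accel_pitch(ax, ay, az):
    """Pitch (radians) implied by the direction of gravity in one accelerometer sample.
    The sign convention here is an assumption; a real board may differ."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))

def complementary_step(pitch, gyro_rate, accel, dt, alpha=0.98):
    """One update: trust the integrated gyro short-term, the accelerometer long-term."""
    gyro_estimate = pitch + gyro_rate * dt  # integrate angular rate (rad/s)
    return alpha * gyro_estimate + (1.0 - alpha) * accel_pitch(*accel)

def track(samples, dt, alpha=0.98):
    """Run the filter over (gyro_rate, (ax, ay, az)) samples, starting from zero pitch."""
    pitch = 0.0
    for gyro_rate, accel in samples:
        pitch = complementary_step(pitch, gyro_rate, accel, dt, alpha)
    return pitch

# Hypothetical data: a headset held still at a 30 degree tilt, with a small gyro bias.
g = 9.81
tilt = math.radians(30)
still = (0.01, (-g * math.sin(tilt), 0.0, g * math.cos(tilt)))
estimate = track([still] * 5000, dt=0.001)
print(f"estimated pitch: {math.degrees(estimate):.2f} degrees")
```

With these made-up samples the estimate settles close to 30 degrees even though the gyro alone would drift, which is the point of blending the two sensors.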

With the Discovery board strapped to the cardboard headset, an open-source firmware is flashed. This emulates the messages sent by a legitimate Oculus Rift motion tracker. The Galaxy Note 4 sees the device as a VR headset, and lets you run VR apps.

If you’re interested, the OpenGear team is offering a development kit. This is a great way for developers to get a head start on their apps before the Gear VR is actually released. The main downside is how you’ll look with this thing affixed to your face. There’s a head-to-head against the real Gear VR after the break.

[via Road To VR]

>The main downside is how you’ll look with this thing affixed to your face.

but you can’t see that when you are using it.

Underrated comment

Why couldn’t the sensors onboard the S4 be used?

Because Samsung has to justify the high price of its Gear VR. By the way, Google Cardboard is already doing head tracking with onboard sensors.

Because mobile phone sensors run at 100 or 200 Hz at best and are not calibrated, whereas the sensor in the Samsung Gear VR is calibrated with high precision robot arms at the factory and has a 1000 Hz refresh rate.

This gives very high accuracy/precision and low latency (< 20 ms end-to-end), which is required for VR. It is the reason why Google Cardboard is simply bad in this regard (~70 ms), among other problems (low precision/accuracy, high latency, bad optics, bad distortion and chromatic aberration correction, no time warp, no asynchronous time warp).
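As a rough illustration of why the sample rate matters against a 20 ms budget, the sketch below computes how stale a sensor reading can be at each rate mentioned above. It only covers the sampling interval and ignores the rest of the pipeline (fusion, rendering, display scan-out). The function names are made up for this example.

```python
def sample_period_ms(rate_hz):
    """Time between two consecutive sensor samples, in milliseconds."""
    return 1000.0 / rate_hz

def budget_share(rate_hz, budget_ms=20.0):
    """Fraction of the latency budget that one sample period can consume."""
    return sample_period_ms(rate_hz) / budget_ms

for rate in (100, 200, 1000):
    period = sample_period_ms(rate)
    share = budget_share(rate)
    print(f"{rate} Hz: a reading can be up to {period:.1f} ms old ({share:.0%} of 20 ms)")
```

At 100 Hz a single sample period can already take half of the budget, while at 1000 Hz it takes a twentieth.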

Some apps, such as those written for Durovis Dive or Google Cardboard, DO use the smartphone’s internal sensors, and they work quite well. However, most of those apps do not do lens warp correction, so it is harder to notice how accurate the head tracking really is.

I think after 25 years of Virtual Reality hardware (and honestly, it really hasn’t gotten much better in all that time) that yet ANOTHER iteration of the same thing is just useless.

What IS missing is actual, practical applications for it. The software. VR software sucks. Apparently the software guys are just not interested in VR, so we build yet another HMD the same as the last…

If I were cynical (wait… I am) I would say that this is why VR has suffered repeated failure to launch over 25 years.

Likely the new younger guys haven’t lived through or learnt the old lessons.

I was the proud owner of a VFX-1 HMD nearly 20 years ago. Had the modified powerglove, etc…

But I quickly lost interest in walking around a low res maze swinging my hands. And here we are 20 years later, and it really isn’t much different.

One technology that has so much potential, but just can’t seem to ‘get there’ for some reason. As is most often the case, especially nowadays, hardware is cheap and easy. It is always the software/firmware that matters. If I didn’t hate programming so much (and didn’t suck at it), I’d almost switch careers.

You should really check out the new games like Elite Dangerous and the UE4 demos coming out. Then say nothing has changed in 20 years.

You mean I can get motion sickness in high-definition now?

Regardless of the new quality of graphics, it is still just more of the same. You might not realize this, but those graphics were groundbreaking for us back then, too.

Yawn…

But high def motion sickness is so much more awesome! ;-)

Yes and no. Current VR “Platforms” are inadequate. Be it Unity or Unreal Engine with a bunch of modules, or some of the new “vr” engines, there are entire categories of problems that just aren’t being addressed. To this day, we still don’t have a simple/standard system that a) builds 3d stereoscopic UI, b) deals with all forms of tracking/prediction/timely sensor fusion, and c) handles prioritized rendering management.

And this is understandable. 20+ years ago when academics started looking at this, they discovered that there’s an entire laundry list of base concepts/problems you have to fix before you even start coding your “app”.

1) Tracking multiple points/orientations (head, joints, hands, etc) using multiple sensor systems, all with different levels of spatial resolution, ranges, “confidence”, delays. Some have absolute frames of reference, others are relative with compounding errors. All have to be combined to determine the user’s position. God help you if your head tracking is off by the tiniest amount.

2) Rendering and pre rendering. Be it with a vr “cave” or a HMD, you need the images to arrive at the user’s eye at exactly the right time. 20 years ago, it was possible to roll custom graphics rendering chips… overclocked and liquid cooled… but you had to pick between resolution, frame rate, and latency. 2x for stereo rendering (even if you did use some of the weird short-cut techniques to cut down on the work load.)

3) Want multiple users in the same sensor stage? In the same video game? Or multiple render heads? Good luck with 90’s networking! Same constraints as a multiplayer video game, but now with more timing constraints. Physical (as well as virtual) collisions. All this in the era where the best connection you can get is an ISDN line and pings are in the high 500s.
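To make point 1 a bit more concrete, here is a minimal sketch of one textbook way to combine readings that come with different “confidence”: inverse-variance weighting. It is an illustration only, not how Vrui or any particular tracker does it, and it ignores the delay and frame-of-reference problems listed above. The `fuse` helper and the example numbers are hypothetical.

```python
def fuse(estimates):
    """Combine (value, variance) pairs; lower variance means more trust.
    Returns the fused value and its (smaller) variance."""
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total

# Hypothetical head height readings in metres: an absolute tracker with
# steady noise, and a relative tracker whose error has compounded over time.
absolute_tracker = (1.70, 0.0004)
relative_tracker = (1.78, 0.0036)
height, variance = fuse([absolute_tracker, relative_tracker])
print(f"fused height: {height:.3f} m, variance: {variance:.5f}")
```

The fused value lands much closer to the more trusted sensor, and the result is less uncertain than either input on its own.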

Want an example of a vr platform done right? Look at “Vrui” http://idav.ucdavis.edu/~okreylos/ResDev/Vrui/ by the famous “Doc-ok” guy.

When you install it and get it working, it’s underwhelming. But when you actually check out the source code, you realize that these guys have thought of EVERYTHING. Every possible sensor arrangement, every distributed rendering scenario, multi user interaction in a client-server model, every possible joystick button arrangement, and all the base objects for “windows” style UI design in a 3d world.

So why doesn’t anyone use this fantastic platform? Because Vrui leaves the job of next gen graphics to the devs… Yea… you need to implement/port 10/15+ years of graphics research into Vrui to give it “next gen” level graphics.

It’ll happen eventually. Someone will merge a great game engine with a sane vr platform, and that’ll become the de facto standard for lack of any better choice…

But don’t hold your breath.

Once again… VR hardware is not really the problem. It already far outpaces the applications intended for it. Just because a crappy game has photorealistic graphics and full motion video does not make a crappy game awesome.

Games will indeed get the technology adopted for general use. I agree with that. But it becomes a fad and fads die out. Which is, once again, the reason VR fails to launch every time.

I don’t care if the graphics are 8 bit with a 16 color palette as long as the application is useful, intuitive or entertaining. THAT is what will motivate advances in hardware. Otherwise all we end up with is photorealistic crap like 90% of the games out today.

There once was a time when programmers abused the crap out of hardware to squeeze every last bit of juice out of it. Now, we just build the next gen game console before we barely even scraped the capabilities of the old gen hardware. And the result is crap on the old gen and crap on the new gen. Pretty crap, but still crap.

Let’s just admit it – this HMD is the same as before, with just a new phone to stick in it, promising a better screen. I repeat myself – the hardware is NOT the problem.

What we need to do is figure out what we really want to use the hardware FOR. What should virtual reality look like? What should it do? What can it do to augment our everyday lives? And then we can focus on advancing the hardware.

But, nevermind me, it’s not like I haven’t watched this happen over and over again. It’s hard to get excited about it.

> Let’s just admit it – this HMD is the same as before

No, it’s not the same as before and it’s the reason why it’s garnering attention today while other consumer HMDs failed to do so for the past 20 years. The key word here is “presence” and it starts with a ~80° field of view. Every consumer HMD in the past had a ~50° FOV at best.

You can read more about what is required to get presence in this presentation from Michael Abrash : http://media.steampowered.com/apps/abrashblog/Abrash%20Dev%20Days%202014.pdf

Basically, all the following are required :

– wide field of view (> 80°)

– adequate resolution (> 1080p)

– low pixel persistence ( 90 Hz)

– optical calibration (correct distortion and chromatic aberration compensation)

– rock-solid tracking (submillimeter and 1/4 degree accuracy)

– low latency (< 20 ms)

EDIT: replace

– low pixel persistence ( 90 Hz)

with

– low pixel persistence (< 3 ms)

– high refresh rate (90 Hz)
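A quick way to see why persistence is on that list: while a frame stays lit and your head keeps turning, the image smears across the retina by roughly head speed times lit time times pixel density. The sketch below works that out. The panel numbers and head speed are assumptions picked for illustration and are not taken from the presentation.

```python
def smear_pixels(head_speed_deg_s, persistence_ms, pixels_per_degree):
    """Approximate smear, in pixels, while one frame stays lit during a head turn."""
    return head_speed_deg_s * (persistence_ms / 1000.0) * pixels_per_degree

# Assumed, illustrative numbers:
PIXELS_PER_DEGREE = 960 / 80  # 960 px per eye spread over an 80 degree FOV
HEAD_SPEED = 120.0            # degrees per second, a moderate head turn

full = smear_pixels(HEAD_SPEED, 1000.0 / 90, PIXELS_PER_DEGREE)  # lit for a whole 90 Hz frame
low = smear_pixels(HEAD_SPEED, 3.0, PIXELS_PER_DEGREE)           # lit for only 3 ms
print(f"full persistence: {full:.1f} px, low persistence: {low:.1f} px")
```

Under these assumptions a pixel lit for the whole frame smears over about 16 pixels, against roughly 4 pixels with 3 ms persistence.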

Fredz… great. Improved hardware.

Something huge just flew right over your head, and you missed it.

I’m done making my point. In 15 years, perhaps I will come along again to say, ‘I told you so.’

*shrug* I’m out

Exactly.

This and we do have great applications coming out for VR. It’s slower than I like, but it’s happening.

Elite Dangerous is one of the best examples. It is a great use of the hardware, it’s a sitting experience, it enhances your awareness of what goes on around you in a dog fight and you actually look to the different elements of the UI which is super intuitive. The immersion factor is pretty huge being able to see your body and look around the cockpit too.

Then we have games like Windlands which are pretty breathtaking and as far as I can see don’t actually make you nauseous at all despite flying around the map attached to a hook.

Other examples are VR Arcade, Vox Machinae OH and Altspace VR. That blew my mind.

Altspace VR is a social experience with funny floating body entities which have full head tracking sent to all clients so you can make direct eye contact with people and fully directional audio which diminishes at a distance. It’s a subtle social experience designed to run on things as lowly as Macbook Airs. It’s hugely immersive and it feels so… human. When I talked to the developer I was looking into his eyes as we spoke, like we would in a real room… That really sold me on social VR.

The main thing in the way right now is input and standardisation. We need an input that incorporates the body and works in a small space along with an xinput like system to make sure that every game works with each individual system in the same way. That’s the next challenge for me. Right now it requires direct developer to developer co-operation which seems truly impractical to me.

But yeah, these naysayers are plain wrong. If you think this gen of VR is the same as the last, you clearly haven’t tried some of these experiences OR you’re in the unfortunate situation where you get motion sick easily, which a lot of us who have the Rift don’t. Only bad experiences like HL2 VR make me sick – and it’s not even really bad, it’s just too fast for VR and the screens don’t have the resolution for it yet.

@Justice_099

You’re actually the one missing the point of this new wave of HMDs. Like I said, the difference is that the hardware is now able to give presence, and that’s enough to make the software relevant. You’re no longer looking at a distant display, you actually feel like you’re in another place, even at a subconscious level.

I’m old enough to have experimented with HMDs in the first consumer VR wave in the 90’s, and I was unimpressed as well. This time it’s different because the people doing it actually understand what is required to deliver virtual reality. It’s no wonder that people like John Carmack and Michael Abrash are involved this time.

ok

“This is a great way for developers to get a head start on their apps before the Gear VR is actually released.”

Umm… What’s up with that? I ordered my Gear VR from the Samsung Website for $200. I have ordered 5 of them for various folks and we all have them now. That is a lot less than the $350 price at amazon.com and other places for a “preorder”. And Samsung offers free shipping (or $20 for overnight shipping).

However, an Open Gear VR device is cool too, though the cost of the phone to go with it (without a 2-year service contract commitment) is a bit pricey, with the Verizon version costing about $125 less than phones locked to other carriers (also with no service plan). Beware that the international version of the Note 4, while less expensive, uses a different processor and may not be up to the task of running some Gear VR apps. Samsung DOES plan to support other models of phones later. I am using StraightTalk wireless on my AT&T-locked Note 4 phone (about half the monthly price of other plans with no term commitment).

We need to support lots of phones, with interesting apps that do not need so much resolution and computing power.

For software that I want for my VR, I have little interest in games, which bore me quickly. I want VR environments to use as a tool, for doing things BEYOND what I can do in real life, using virtual tools and components that I do not have room to store, and being non-physical they can be reproduced quickly and easily and at little or no cost. I am a software developer, and I have plans (and a substantial non-proprietary code base to build on). All I need is time…

“Umm… What’s up with that? I ordered my Gear VR from the Samsung Website for $200. I have ordered 5 of them for various folks and we all have them now.”

If you are in Australia (like me when I started the project), there was no way to get a GearVR except expensive shipping companies. With the European launch this week, the situation has become better, but there are still many countries that will have to wait for quite a while for an official launch.

The F3 board on the other hand ships in most countries overnight :).

Ahh… I see. I just went to the Samsung order page and you cannot select a country, only a state. I also tried amazon, where they DO let me choose a non-US address but then apologize that they cannot ship to this address for reasons including this:

“The following types of items can’t be shipped to buyers outside the U.S.: video games, toy and baby items, electronics, cameras and photo items, tools and hardware, kitchenware and housewares, sporting goods and outdoor equipment, software, and computers.”

So perhaps Gear VR qualifies as one or more of those items that amazon refuses to ship outside the US. Bummer.

Exactly. So you either have to wait until it becomes available in your country, use a shipping company (which I did for my GearVR; shipping cost was $100 in total to Australia, for a $200 product, and it took 14 days to get here), or buy a $15 F3 board and reuse your Google Cardboard (or buy a new one for $5). So for $20 you can start toying around with it in a couple of days.

I am also currently designing and 3dprinting a proper case. It will still use Cardboard lenses, since they are cheap and easily available. But the design will have swap-able inserts for other lenses as well.

The hackaday subscription management system uses secure links into this website, like this one to here:

https://hackaday.com/2015/02/05/build-your-own-gear-vr/

However, clicking it causes a web browser warning — the hackaday secure cert is borked! I need to override the browser warning to view this page (almost) securely.

Mods: Y’all should fix your cert (or change the subscription management system to provide non-secure links?)…

The cert is coming from WordPress.Com. I’m guessing that means WordPress is actually hosting the site.

Hey, I’ve had an F3 Discovery board without a use for a long time (not into quadcopters and why else would you want motion sensors / compass?)

Now I might just cobble together my own headset.

Many of the Durovis Dive apps work great in Google Cardboard, and in the GearVR too. Though for Tuscany Dive the headset keeps causing touchscreen events that change display complexity (and FPS) even when the phone is placed UNDER the microUSB (i.e. 11-pin MHL) jack instead of plugged into it. I wonder if those rubber pads the phone screen rests on are conductive…

Thanks for picking up my Road to VR article! It’s nice to see the Open Gear project is finally getting more notice :)

Hi,

I am the initiator of the OpenGear project.

Thanks for reporting on this! Awesome!

There is a lot of confusion in the comments about what OpenGear/GearVR is. GearVR is not just a phone holder. It includes a special IMU for headtracking which updates at 1000 Hz, where most phone sensors only do 100 Hz or 200 Hz. On the software side, John Carmack is responsible for a low level kernel driver for the IMU. This means GearVR apps bypass the Android stack and have direct access to the IMU. Also, the screen has something called “low persistence”, which is only enabled in GearVR apps, effectively reducing motion blur by a huge factor. All of this is currently only available in either Note4+GearVR or Oculus Rift DK2. (As a side note: I have tried their newest prototype, Crescent Bay, and my concerns regarding motion sickness are completely gone. Zero latency, zero motion sickness.)

To summarize what makes GearVR different from phoneholders:

– 1000Hz tracker -> much smoother, more accurate tracking

– low latency kernel driver for tracking -> less delay between movement and updated eye image

– low persistence -> far less motion blur

– much better optics which are properly calibrated -> little to no distortion

– higher quality of apps which adhere to a set of health&safety guidelines

So what is OpenGear? It is meant for developers to jump on the GearVR train early. When I started the project, GearVR was only available in the US. Being in Australia and wanting to develop for it made me “create” (all the hard work was in fact done by yetifrisstlama way before the GearVR was even announced) the OpenGear, which is software compatible with GearVR.

OpenGear does not have low persistence, even though it could be enabled quite easily, I presume. It does not have properly calibrated optics. It is not meant for the consumer. But developers can use it to test their apps with headtracking on the actual phone, create apps and get a headstart on development.

I am currently designing and 3dprinting a proper headset that will fit a Note4 + F3 board. It will still use Cardboard lenses, since they are cheap and have very similar distortion.

Follow me on Twitter (@skyworxx) if you want more updates :).

Cheers,

Mark

is there any way to trigger the VR mode in a Samsung device (note4, S6) from a PC with usb connection? I don’t care about the sensors data, just want to make it think I have a gearVR connected and trigger the oculus mode…