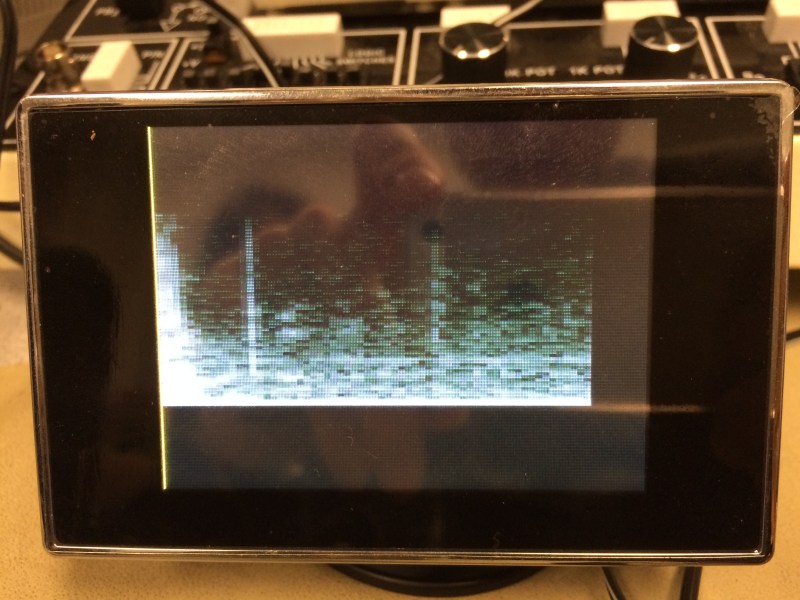

For their ECE 4760 final project at Cornell, [Varun, Hyun, and Madhuri] created a real-time sound spectrogram that displays the frequency content of audio, such as voice patterns and bird songs, as grayscale video on any NTSC television with no noticeable delay.

The system can take input from either the on-board microphone element or the 3.5 mm audio jack. One ATmega1284 microcontroller handles the audio processing and FFT stage, while a second ‘1284 converts the results to video for NTSC output. The mic and line inputs are amplified separately with LM358 op-amps. Since the audio is sampled at 8 kHz, a low-pass filter removes frequencies above 4 kHz (the Nyquist limit) so they don't alias into the spectrogram.
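The article doesn't show the firmware, so here is a floating-point sketch of what computing one spectrogram column involves, and why the 4 kHz filter matters. The 128-sample frame length, the Hann window, and the function names `fft`, `spectrum_column`, and `bin_to_hz` are our own assumptions for illustration; a microcontroller implementation would typically use fixed-point arithmetic in C rather than Python.

```python
import cmath
import math

FS = 8000  # sample rate from the article (Hz)
N = 128    # assumed frame length; must be a power of two

def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT of a list of (complex) samples."""
    n = len(x)
    if n == 1:
        return list(x)
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        # twiddle factor e^(-2*pi*i*k/n) applied to the odd half
        t = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out

def spectrum_column(frame):
    """Hann-window one frame and return magnitudes of bins 0..n/2-1 (0 Hz up to just below fs/2)."""
    n = len(frame)
    windowed = [s * 0.5 * (1 - math.cos(2 * math.pi * i / n)) for i, s in enumerate(frame)]
    spec = fft(windowed)
    return [abs(c) for c in spec[: n // 2]]

def bin_to_hz(k, n=N, fs=FS):
    """Center frequency of bin k; bins are fs/n = 62.5 Hz apart for N=128."""
    return k * fs / n
```

A 1 kHz tone peaks in bin 16 (1000 Hz). A 5 kHz tone, which the low-pass filter would normally remove, produces exactly the same magnitudes as a 3 kHz tone and peaks in bin 48, so without the filter it would show up as a false 3 kHz trace.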

After the break, you can see the team demonstrate their project by speaking and whistling bird calls into the microphone as well as feeding recorded bird calls through the line input. They built three controls into the project to freeze the video, slow it down by a factor of two, and convert between linear and logarithmic scales. There are also short clips of the recorded bird call visualization and an old-timey dial-up modem.
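The article doesn't say whether the linear/logarithmic control scales pixel brightness or the frequency axis. Assuming it scales brightness, the sketch below shows the difference: a log (decibel) mapping makes quiet components visible that a linear mapping would render nearly black. The function `to_gray`, the 16 gray levels, and the -40 dB floor are hypothetical choices, not details from the project.

```python
import math

def to_gray(mag, max_mag, levels=16, log_scale=False, floor_db=-40.0):
    """Map an FFT magnitude in [0, max_mag] to a gray level in [0, levels-1].

    Hypothetical parameters: `levels` is the number of gray shades the video
    output can show, and `floor_db` is the level drawn as black in log mode.
    """
    if max_mag <= 0 or mag <= 0:
        return 0
    if log_scale:
        db = 20.0 * math.log10(mag / max_mag)  # 0 dB at max_mag, negative below
        frac = (db - floor_db) / -floor_db     # floor_db -> 0.0, 0 dB -> 1.0
    else:
        frac = mag / max_mag
    frac = min(max(frac, 0.0), 1.0)            # clamp to the displayable range
    return min(int(frac * levels), levels - 1)
```

For example, a component at one tenth of the peak magnitude gets gray level 1 on the linear scale but level 8 (it sits at -20 dB) on the log scale.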

Bird Songs

Dial-up modem

They need to line-in some Aphex Twin and freak out their design committee.

It would just look like a spike, because the hidden pictures are mere fractions of a second long.

That’s the finest ATmega spectrum analyzer yet. Nicely done.

If you’re interested in doing the exact opposite of this, I made a lil Prezi about it a few years ago: https://prezi.com/bzylzhp3n484/image-based-spectrographic-processing/

Anybody with MATLAB or Python (or probably R, but I never tried that one) can make pictures in spectrograms like Aphex Twin. You can read the whole (three-page) report about it here: http://issuu.com/dissonance/docs/imagebasedspectroproc
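One common way to do this "opposite" direction is additive synthesis: each pixel row gets its own sine oscillator, with higher rows at higher frequencies, and each column sets the oscillators' amplitudes for a short slice of time. This is a minimal sketch of that approach, not the method from the linked report; the function name `image_to_audio`, the 50 ms column duration, and the 200 to 3800 Hz range (chosen to stay under the 4 kHz limit of an 8 kHz spectrogram) are assumptions.

```python
import math

def image_to_audio(image, fs=8000, col_seconds=0.05, f_lo=200.0, f_hi=3800.0):
    """Additive synthesis: row 0 (top) -> f_hi, last row -> f_lo.

    `image` is a list of rows of brightness values in [0, 1]; each column
    becomes `col_seconds` of audio. Returns samples in [-1, 1].
    """
    n_rows = len(image)
    n_cols = len(image[0])
    samples_per_col = round(fs * col_seconds)
    # evenly spaced oscillator frequencies, highest at the top of the picture
    freqs = [f_hi - (f_hi - f_lo) * r / max(n_rows - 1, 1) for r in range(n_rows)]
    phase = [0.0] * n_rows  # keep phase continuous across columns to avoid clicks
    out = []
    for c in range(n_cols):
        for _ in range(samples_per_col):
            s = 0.0
            for r in range(n_rows):
                amp = image[r][c]
                if amp:
                    s += amp * math.sin(phase[r])
                phase[r] += 2 * math.pi * freqs[r] / fs
            out.append(s / n_rows)  # normalize so the sum cannot exceed 1
    return out
```

Viewed on a spectrogram, a bright pixel in the top row appears as a band at `f_hi` lasting one column's duration, so the picture's width in seconds is simply the column count times `col_seconds`.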

Awesome.