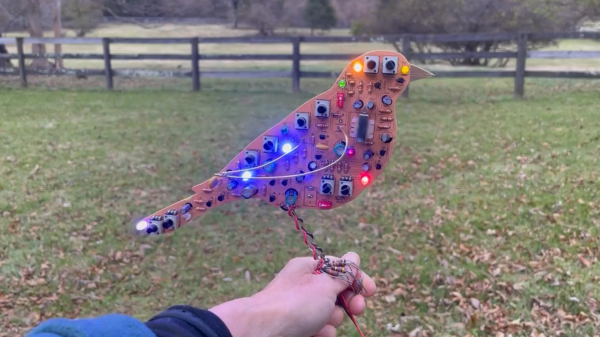

On our favourite low-attention-span content site, [Kelly Heaton] has recently started sharing a series of “Printed Circuit Birds”. These are PCBs shaped like birds, looking like birds and chirping like birds – and they are fully analog! The sound is produced by a network of oscillators feeding into each other, and, once tuned, is hardly distinguishable from the bird songs you might hear outside your window. Care and love were put into making this bird life-like – it perches on Kelly’s arm with legs woven out of single-strand wire and talons made out of THT resistors, in the exact same way you would expect a regular bird to sit on your arm – that is, if you ever get lucky enough. It’s not just one bird – there’s a family of circuit animals, including a goose, a crow and even a cricket.

Why did these animals come to life – metaphorically, but also, literally? There must be more to a non-ordinary project like this, and we asked Kelly about it. These birds are part of her project to explore models of consciousness in ways that we typically don’t employ. Our habit is to approach complex problems in digital domains, but we tend to miss out on the elegance and simplicity that analog circuits are capable of. After all, even our conventional understanding of a neural network is a matrix of analog coefficients that we then tune, a primitive imitation of how we assume human brains to work – and it’s this “analog” approach that has lately moved us ever closer to reproducing “intelligence” in a computer.

Kelly’s work takes a concept that would have many of us reach for the digital toolkit, and makes it wonderfully life-like using a small bouquet of simple parts. It’s a challenge to our beliefs and approaches, compelling in its grace, urging us to consider and respect analog circuits more when it comes to modelling consciousness and behaviours. If it’s this simple to model the sounds and behaviour of a biological organism, a task that’d have us writing DSP and math code to replicate on a microcontroller – what else are we missing from our models?

Kelly has more PCBs arriving soon in preparation for her NYC exhibit in February, and will surely be posting updates on her Twitter page! We’ve covered her work before, and if you haven’t seen it yet, her Supercon 2019 talk on Electronic Naturalism would be a great place to start! Such projects tend to inspire fellow hackers to build other non-conventional projects, and this chirping pendant follows closely in Kelly’s footsteps! The direction of this venture reminds us a lot of BEAM robotics, which we’ve recently reminisced upon as something that’s inspired generations of hackers to look at the electronics we create through an entirely different lens.

Continue reading “Printed Circuit Bird Family Calls For Us To Consider Analog” →

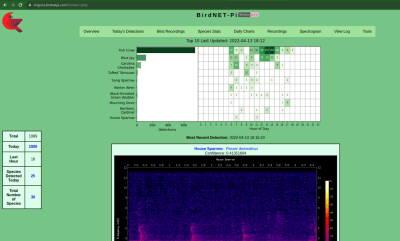

About that Raspberry Pi version! There’s a sister project called
