The Raspberry Pi is a great machine to learn the ins and outs of blinking pins, but for doing anything that requires blinking pins fast, you’re better off going with a BeagleBone. This has been the conventional wisdom for years now, and now that the updated Raspberry Pi 2 is out, there’s the expectation that you’ll be able to blink a pin faster. The data are here, and yes, you can.

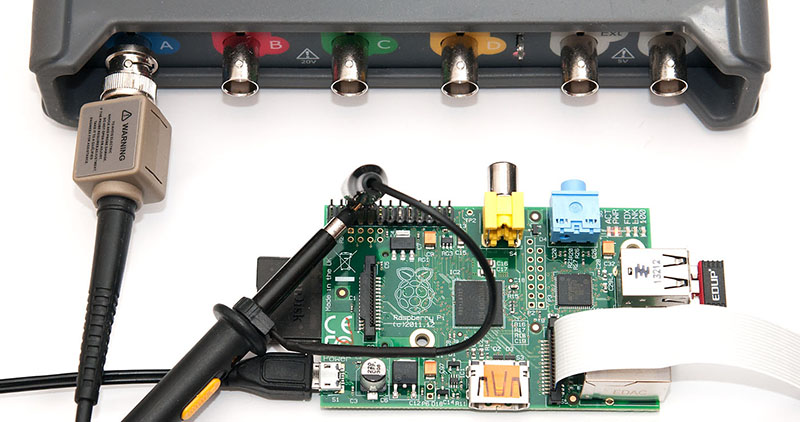

The testing method was to connect a PicoScope 5444B to a GPIO pin and toggle it between zero and one as fast as possible. The original test wasn’t very encouraging: Python maxed out at around 70 kHz, Ruby was terrible, and only C with the native library was fast enough for interesting stuff, at 22 MHz.

Using the same experimental setup, the Raspberry Pi 2 is about two to three times faster. The fastest is still the C native library, topping out at just under 42 MHz. Other languages and libraries are much slower, but the RPi.GPIO Python library still sees a 2.5x increase.
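For readers who want to try a software-side version of this test, here is a minimal Python sketch of the toggle loop. It is not the article’s test code: `measure_toggle_rate` and its `write_pin` parameter are hypothetical names, and the rate it reports is only the loop’s own timing estimate. A scope on the actual pin, as used above, remains the ground truth.

```python
import time
from typing import Callable


def measure_toggle_rate(write_pin: Callable[[int], None], cycles: int = 100_000) -> float:
    """Drive a pin high then low `cycles` times; return full periods per second (Hz).

    `write_pin` is any callable that takes 0 or 1. Passing it in keeps the
    harness hardware-independent; on a Pi it would wrap the GPIO library call.
    """
    start = time.perf_counter()
    for _ in range(cycles):
        write_pin(1)  # rising edge
        write_pin(0)  # falling edge
    elapsed = time.perf_counter() - start
    return cycles / elapsed


# Hypothetical use on a Pi with the RPi.GPIO library (BCM pin 4 is an
# arbitrary choice, not the pin used in the article):
#   import RPi.GPIO as GPIO
#   GPIO.setmode(GPIO.BCM)
#   GPIO.setup(4, GPIO.OUT)
#   print(measure_toggle_rate(lambda v: GPIO.output(4, v)))
```

Note that the `lambda` wrapper adds one more Python call per write, so on real hardware this sketch would likely come in somewhat below a loop that calls `GPIO.output` directly.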

Is it just me or is it disingenuous to say this is testing I/O speed? Isn’t it just testing… O? How fast can it sample?

From what I’ve read, I/O read and write commands take the same number of clock cycles on the CPU. But using the value you read, whether by comparing it or storing it, can affect the time your loop takes immensely. So the Pi 2 will probably sample much faster and therefore have more capability, but YMMV depending on how crafty you are when storing or using your information.
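To make that point concrete, here is a minimal Python sketch of a sampling loop. `sample_pin` and its `read_pin` callable are hypothetical; on a Pi, `read_pin` might wrap `RPi.GPIO.input(channel)`. The buffer is allocated before the timer starts, so the measured rate covers only reading and storing each sample.

```python
import time
from typing import Callable, Tuple


def sample_pin(read_pin: Callable[[], int], n: int) -> Tuple[bytearray, float]:
    """Read a pin `n` times into a preallocated buffer.

    Returns the samples and the achieved sample rate in Hz. Storing each value
    is deliberately inside the timed loop, since that is part of the real cost.
    """
    samples = bytearray(n)  # allocate once, before timing starts
    start = time.perf_counter()
    for i in range(n):
        samples[i] = read_pin()  # read_pin must return 0 or 1
    elapsed = time.perf_counter() - start
    return samples, n / elapsed
```

Appending to a growing list instead of writing into a preallocated buffer is one example of the kind of storage choice that changes the per-sample cost.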

Spotting the PicoScope there, I knew they’d have bunged one your way – checked your site: ‘sponsored by’!

I wish they’d kept their promise for me – nice bit of kit ;-)

Was the test done with OOTB settings? Does the speed scale with overclocking, or are the GPIO scaled down when overclocked?

“when overclocked” – (makes more sense)

Haha, yeah Picotech has been a long-time partner of my site, awesome scopes!

Yes, the Pi is a basically “out of the box” Raspbian setup. I would assume the speed scales with overclocking; it’s hard to imagine the ARM chip would have an independent GPIO clock.

Why would you even attempt a performance benchmark with Python? Python is not fast. It will never be fast. It wasn’t intended to be fast.

There seems to be a trending obsession with “do everything in Python, even if it is not a task it is well suited to”. It’s like the Arduino of the software world.

The Raspberry Pi is DESIGNED to teach programming with Python, so there is a good reason to be using it on the Pi: all sorts of libraries are already there. Python is used to teach intro programming at places like MIT and Stanford, so it’s no “obsession”. People starting to work with more demanding hardware projects just need to learn the correct tools.

Nope, LabVIEW is the Arduino of the software world… :p

I suppose Microsoft Excel is the parallel port bodge-wired to a breadboard of the software world

I am not a Microsoft minion in any shape or metric, but I’m surprised at what people can do with Excel, like starting their car or running complex electromechanical machinery.

After seeing mechanical simulation done in Access, nothing will amaze me.

It is benchmarked for exactly the same reason the faster languages are — to see how fast it is so that people can make informed decisions about whether it fits their needs.

It’s a useful comparison and it’s worth noting that it’s an order of magnitude faster than shell scripts. Discounting the language for speed doesn’t really make sense. Create or use time-critical components written in C or Fortran and call them with CPython’s ctypes. That’s how it’s intended to work and it’s trivial to implement.
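A minimal sketch of the ctypes route, assuming a Linux or macOS host. It calls the C library’s `labs()` purely to show the mechanics of loading a library and declaring a signature; for GPIO work you would instead compile your own time-critical code into a shared library (a hypothetical `./libtoggle.so`, say) and load it the same way.

```python
import ctypes
import ctypes.util

# Load the C standard library. find_library("c") resolves the platform's libc
# name (e.g. "libc.so.6" on Raspbian); if it returns None, CDLL(None) on
# Linux/macOS falls back to the symbols already loaded into the interpreter.
libc = ctypes.CDLL(ctypes.util.find_library("c"))

# Declare the C signature so ctypes converts arguments and results correctly.
libc.labs.argtypes = [ctypes.c_long]
libc.labs.restype = ctypes.c_long

# Your own library would be loaded the same way (hypothetical path):
#   toggle = ctypes.CDLL("./libtoggle.so")


def c_abs(x: int) -> int:
    """Call the C library's labs() through ctypes."""
    return libc.labs(x)
```

Each call from Python into C still pays the interpreter’s call overhead, so the speed-up comes from moving the whole tight loop into C, not from calling a C function once per toggle.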

CFFI instead of ctypes, other than that: +1

Actually, Python can be extremely fast. I’ve written near-real-time code with it that was used to monitor RFID.

Will it be faster than C? Probably not, but it’ll be easier to read, and faster to write.

I tried to do that with the Pi (1), and it worked for a while, but it was nowhere near perfect… it would miss reads.

I’ve had no issues with the RPi B+. My only complaint was that the RPi B+ was a little slow for the database work I was trying to do. That’s more of an issue with the framework than with the 700 MHz to 1 GHz processor in the RPi B+.

Have to admit, yeah. For a proper ultimate speed test, really it should be done in assembler, with no OS loaded. Just a simple few bytes. It can do that, right? Anything above that is software slowing it down.

I wonder how fast a Java program can toggle the IO? Maybe Java can achieve a toggle rate of, well, 1/10 Hz?

Nothing prevents someone from writing a bare-metal program on the SD card and booting that, as one wouldn’t have to deal with the IO overhead of a full operating system.

It is hysterical when people think a well-written Java program would be slower than a Python script….

Even JavaScript running in a decent browser can run at incredible speed with something like asm.js running.

What does JavaScript have to do with Java?

In speed references it’s generally even slower.

For maximum speed, the IO should be toggled in Java, running on a Java interpreter written in Java, running on a Java interpreter written in Java. Adding more recursion should make it even faster! Everyone KNOWS Java is FASTER than C!

Could you please explain how Java can be faster than C for an operation like setting a bit?

More on that here https://en.wikipedia.org/wiki/Sarcasm

The language differences are what you would expect. Other than C, nothing else mentioned is suitable for “real-time” control. Of course, how fast “real-time” control needs to be depends on the application. The other, equally important issue is how deterministic the system is. You need a kernel/OS made for real-time applications if you are going to run a machine tool or anything else with critical timing or motion control. There is work being done running LinuxCNC on a Pi with a suitable kernel, but it is not quite there yet….

I agree.

I personally don’t actually think LinuxCNC will ever be quite there, though. The Pis run a SoC designed for tablets and phones, aka not real-time, multi-purpose, and focused on working together with its GPU to make some nice multimedia magic happen. In my opinion, trying to make something like that run real-time is a fool’s errand.

It’s a chip that executes instructions. Tuning Raspbian the way they did for the usual LinuxCNC distro should result in usable speeds. Real-time and multipurpose are somewhat at odds with each other. Real-time simply means that timing-specific tasks are predictable. That is up to the OS, and generic Linux is not suited to that, just as Windows and OS X aren’t (out of the box). There are implementations of Mach3 running under Embedded Windows XP without issue on weaker CPUs.

The problem isn’t software so much as hardware, the Broadcom chip isn’t just a CPU, it’s a SoC that contains a CPU, GPU (and more). The CPU and GPU share a number of resources and are hard-wired together in a number of ways (For example, some of the timers in the ARM are linked to the GPU clock, so if the GPU decides to change clock (which it does depending on load, in order to save energy) your timers are suddenly running at an entirely different speed). Now, I’m sure that if you know exactly how the SoC is put together, you could figure out how to make everything run stable or at least know when it won’t, but Broadcom is unwilling to share all the necessary details so that is not going to be easy.

I want to say, you are joking, right? But that would be sarcastic. I have been around mainframes since 1965. What you describe is a computer system. It is about how you configure an OS to operate the combination of peripherals. Whether it is on a single chip or spread across 3 floors doesn’t make any difference. LinuxCNC runs under a limited version of Linux today. Mach3 runs on XP Embedded on low-end hardware. The Pi 2 is more than enough hardware to do the same. Someone needs to expend the effort to do the configuration work to remove . An ATmega328P is more than adequate to run > 30k steps per second running Grbl; the Pi 2 is up to the task with considerably more horsepower.

Yes, the Pi is not really suited for CNC- someone somewhere is going to get excited about a $35 computer, and take up the challenge of getting it to work with their favorite application. If it works or not, they still have learned plenty trying.

You’re right of course. I guess my wording was a bit harsh.

Running in a multitasking general purpose OS is not “suitable for real-time control”.

True, however there are tricks used with industrial control systems that let you use both. One example I have used is Beckhoff Automation’s “TwinCAT”: it wedges itself in alongside MS Windows (as poor an OS for “real-time” as it gets) and hands out time slices to the automation tasks and to MS Windows. It works.

Computers have interrupts.

And you can write code in assembler making it ten times as fast as any C type language.

So yes, it is possible even with a multitasking OS to do such things. In fact, it’s done all over the place 24/7. Even using Windows.

You’re completely missing the point. Multitasking means that your task can be preempted at any time – even if just by the kernel rather than another process – which means your ‘realtime’ task may go on hiatus for 20 milliseconds without notice.
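One way to see this on your own machine is to ask for a fixed sleep repeatedly and record how late each wake-up is. This is a rough Python sketch; the function name and default period are arbitrary choices. On a stock kernel the worst case is the number to watch, since a real-time task has to survive the worst case, not the average.

```python
import statistics
import time
from typing import Dict


def sleep_jitter(period_s: float = 0.001, n: int = 200) -> Dict[str, float]:
    """Request a fixed sleep `n` times and measure how late each wake-up is.

    Lateness is actual elapsed time minus the requested period. A preempted
    task shows up as an occasional large value in "max_s".
    """
    lateness = []
    for _ in range(n):
        start = time.perf_counter()
        time.sleep(period_s)
        lateness.append(time.perf_counter() - start - period_s)
    return {
        "mean_s": statistics.mean(lateness),
        "max_s": max(lateness),
        "stdev_s": statistics.pstdev(lateness),
    }
```

Running it while the system is busy (compiling something, say) usually makes the gap between `mean_s` and `max_s` easier to see.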

And no, assembler is not “ten times as fast as any C type language”.

How does bare-metal asm do?

Maximum speed is ~125 MHz @ 1.2 V, so asm should reach max!

asm is the lowest level for controlling the CPU; if it isn’t possible in asm, it’s impossible (assuming asm access is as fast as a DMA access).

Just to be particular: machine code is the lowest level; assembler is one small step above that!

Meh… There’s (normally) a 1-to-1 mapping between each instruction in assembly language and machine code, so they are on the same level. On the other hand you have programmable ‘micro operations’ on some cpus, and that is something that’s lower than both assembly and machine code.

Shouldn’t be any difference to the C code. Maybe you need to unroll the loop to save a cycle.
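Loop unrolling, as suggested here, does more work per iteration so the loop test and branch run less often. Below is a minimal Python sketch with a hypothetical `write_pin` callable. In Python it mainly trims interpreter loop overhead, while a C compiler can often unroll on its own (for example with GCC’s `-funroll-loops`). As the replies point out, a slow peripheral bus may hide the saving entirely.

```python
from typing import Callable


def toggle_unrolled(write_pin: Callable[[int], None], cycles: int) -> None:
    """Emit `cycles` high/low pairs, four pairs per loop iteration."""
    blocks, remainder = divmod(cycles, 4)
    for _ in range(blocks):
        # Unrolled body: four full periods per pass, one loop test per four.
        write_pin(1)
        write_pin(0)
        write_pin(1)
        write_pin(0)
        write_pin(1)
        write_pin(0)
        write_pin(1)
        write_pin(0)
    for _ in range(remainder):
        # Leftover periods when `cycles` is not a multiple of four.
        write_pin(1)
        write_pin(0)
```

The remainder loop keeps the output identical to the plain loop for any cycle count, which is the part hand-unrolled code most often gets wrong.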

On SoCs in that performance region the GPIO block and the bus connecting it to the CPU core are rarely clocked as fast as the CPU core. A loop executed from the 1st level cache therefore usually doesn’t add any overhead.

good point.

Yes, the peripheral bus is most likely a lot slower than the CPU, hence this 1 cycle for the loop shouldn’t make any difference.

Maybe someone should spend an hour or two profiling Python and some of these other lethargic interpreters to fix the bottlenecks? Speeding up the clock is not the solution.

Gosh, I’m sure nobody’s ever tried doing any performance improvements on Python before! Good idea!

At a 72 kHz benchmark, apparently nobody has. Believe it or not, high-level languages can achieve high performance if properly optimized in the compiler/JIT/runtime. It’s just hard to do.

Yeah but there’s a limit. And as ever, speed vs versatility is what you’d be adjusting, once you’ve optimised out the slack.

I still maintain that if you’re relying on anything timing-critical from inside a multitasking OS like Linux, you’re doing it wrong. Use an external MCU, or a coprocessor like the ones the BeagleBone’s CPU sports.

Or an Arduino. Everyone loves a Pi-duino combo :)

That is the most straightforward solution. Use Python or whatever you like for the higher-level tasks, and pass off time-critical tasks to a microcontroller or FPGA.

And why not use 3 cores for Linux and 1 core as MCU?

I am curious why we don’t hear about this being done more. Especially if the core dedicated to “MCU” tasks has top priority for IO and memory access, this should be able to do “real time” as well as any dedicated MCU?

Because the memory, IO, and other resources are shared. An MCU has exclusive, deterministic access to its own RAM. Since it’s SRAM, a read ALWAYS takes N cycles; there are no cache misses to account for and no refresh cycles, so it’s all 100% deterministic. I can run the same program on an MCU 1000 times, and each iteration will take exactly N clock cycles under all conditions, barring interrupts, etc.

When you add DRAM into the mix, and cache misses, and OS resource polling, you introduce non-determinism.

That’s why nothing “real time” actually runs on a computer, dedicated ASICs or MCUs handle it, they don’t have all the overhead.

Well… Theoretically speaking, even a dedicated ASIC or MCU is non-deterministic unless the external events are in perfect sync with the MCU’s clock. So you’ll have a response time with up to one clock cycle of jitter.
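Put as a formula: if an external event can arrive at any instant but is only sampled on clock edges, its detection can slip by up to one clock period. For example, assuming a 16 MHz clock (typical of ATmega328P boards, but an assumption here, not a figure from this thread):

$$
0 \le t_{\text{jitter}} < T_{\text{clk}} = \frac{1}{f_{\text{clk}}},
\qquad
T_{\text{clk}} = \frac{1}{16 \times 10^{6}\,\text{Hz}} = 62.5\,\text{ns}
$$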

1 clock cycle, 100 ns, 1 µs, 10 µs, 100 µs, 1 ms? How much jitter do you tolerate before it’s not a real-time system?

This is the right answer. It is trivial to assign a pthread to a CPU core.
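A minimal sketch of the idea from Python, which exposes Linux’s `sched_setaffinity(2)` as `os.sched_setaffinity` (Linux only); in C, the per-thread equivalent is `pthread_setaffinity_np`. Pinning alone does not keep other tasks off that core: reserving it usually also needs something like the `isolcpus=` kernel boot parameter, which this sketch does not cover. The function name is hypothetical.

```python
import os


def pin_to_last_core() -> int:
    """Restrict the caller to the highest-numbered CPU it is allowed to use.

    Linux-only: os.sched_getaffinity / os.sched_setaffinity wrap the
    sched_getaffinity(2) / sched_setaffinity(2) system calls.
    Returns the chosen core number.
    """
    allowed = os.sched_getaffinity(0)  # pid 0 means the calling process
    core = max(allowed)
    os.sched_setaffinity(0, {core})
    return core
```

On a quad-core Pi 2 with no prior affinity restrictions, this would select core 3, leaving cores 0 to 2 for everything else.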

Alternatively, you can use an RT kernel. It’s amazing what you can accomplish in a “lowly” multitasking OS.

There are a great many very important (as in even life-threatening or million-dollar-risk) time-critical systems running on Linux, I’m sure.

Maybe for the amateur or other low-resource outfit an MCU-based solution is indeed the better choice, but it’s silly to dismiss the alternative as impossible. As I said earlier in this thread: interrupts do exist.

Isn’t this a task better suited for a native hardware PWM pin from the CPU (if you want to toggle at a frequency / duty cycle) or with DMA output to a pin (if you want a particular sequence)? Software bit-banging is always going to be slower.

In this case, no, because this was a test to see how fast the pins can be switched. It’s not a test to see how fast you can get a PWM signal; it’s a test of ad-hoc access to the IO pins, i.e. how fast you can switch a pin on or off. Say you wanted to bit-bang HDMI or something (no idea if that would work). In this case a constant stream of pulses was used to make it easy to pick up on the scope, but in practice it might not be a constant stream.

Has anyone seriously considered what the true potential of the RPi is, if it weren’t hobbled in its ability to be programmed in Assembly language?

It doesn’t take a rocket scientist to determine that there is information regarding the RPi which is not being considered, to wit:

As I read Broadcom’s datasheet(s), I/O instructions are 1-cycle instructions.

At a clock frequency of 900 MHz, this works out to 1.11… ns.

To toggle a bit on, and then off, requires 2.22… ns.

This gives (big surprise) a maximum toggle frequency of 450 MHz.
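Spelled out, the arithmetic above is the following, under the comment’s own assumption that every GPIO write retires in a single CPU cycle with no loop overhead:

$$
T_{\text{clk}} = \frac{1}{f_{\text{clk}}} = \frac{1}{900\,\text{MHz}} \approx 1.11\,\text{ns},
\qquad
T_{\text{toggle}} = 2\,T_{\text{clk}} \approx 2.22\,\text{ns},
\qquad
f_{\text{toggle}} = \frac{1}{T_{\text{toggle}}} = \frac{f_{\text{clk}}}{2} = 450\,\text{MHz}
$$

Since it was noted earlier in the thread that the peripheral bus is usually clocked well below the CPU core, this is best read as an upper bound rather than an expected figure.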

Quite a bit faster than ONLY 42 MHz available using C, huh?

Why is no one REALLY programming the Raspberry Pi in native assembly language?

Personally, it’s cos I’ve no idea how to write ARM assembly, or how to get it working on my friend’s Pi. Why aren’t you doing it?

Perhaps if you worked as hard at learning assembly language as you do at jumping to conclusions, you’d be an assembly-language genius.

You should have stopped your response at one sentence, COS where’d you get the information that I’m not writing assembly language code?

From your preceding post. Or don’t you count as anybody? Don’t be so hard on yourself, guy.

If you’re trying to wiggle GPIO pins at deterministic speeds inside a non-deterministic, non-real-time operating system like Linux, you’re doing it wrong. These sorts of benchmarks/discussions are a good way to send newer programmers down a path to misery (“I’m trying to do software PWM on a raspberry pi using PHP, but it’s not working…”).

Put a 70-cent MCU on the I2C bus to offload the real-time tasks, and get on with your life.

It’s not “trying” anything. It’s just a curio. To show how fast a pin can be toggled. It’s not an application, it’s meant to inform other people of the possibilities.

Real-time, and even hard real-time: XMOS.