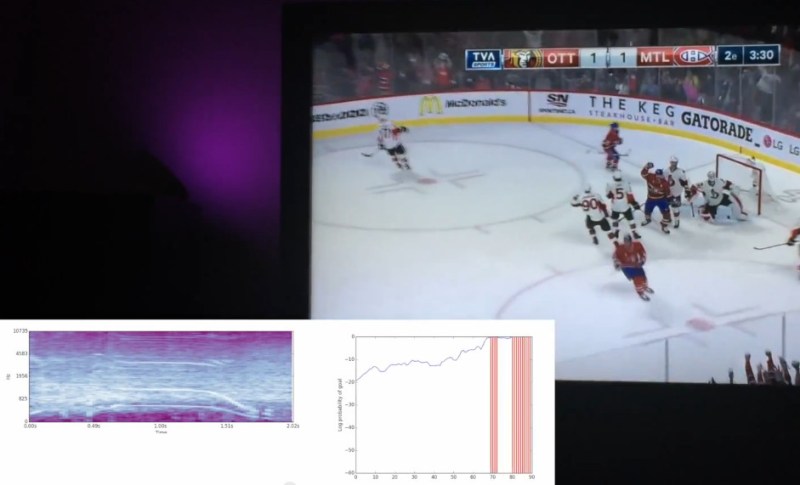

[François] lives in Canada, and as you might expect, he loves hockey. Since his local team (the Habs) is in the playoffs, he decided to make an awesome setup for his living room that puts on a light show whenever his team scores a goal. This would be simple if there was a nice API to notify him whenever a goal is scored, but he couldn’t find anything of the sort. Instead, he designed a machine-learning algorithm that detects when his home team scores by listening to his TV’s audio feed.

[François] started off by listening to the audio of some recorded games. Whenever a goal is scored, the commentator yells out and the goal horn is sounded. This makes it pretty obvious to the listener that a goal has been scored, but detecting it with a computer is a bit harder. [François] also wanted to detect when his home team scored a goal, but not when the opposing team scored, making the problem even more complicated!

Since the commentator’s yell and the goal horn don’t sound exactly the same for each goal, [François] decided to write an algorithm that identifies and learns from patterns in the audio. If a home team goal is detected, he sends commands to some Philips Hue bulbs that flash his team’s colors. His algorithm tries its best to avoid false positives when the opposing team scores, and in practice it successfully identified 75% of home team goals with 0 false positives. Not bad! Be sure to check out the setup in action after the break.
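For readers curious what "sending commands to the bulbs" might look like, here is a minimal sketch and not [François]'s actual code. It assumes the original Hue bridge REST interface, where a light is controlled with a PUT to its `state` resource. The bridge address, API username, and light number are hypothetical placeholders, and the color values are rough approximations of red, blue, and white. The sketch only builds the requests and does not send them.

```python
import json

# Hypothetical values: substitute your own bridge address and API username.
BRIDGE_IP = "192.168.1.2"
API_USER = "your-hue-username"

# Approximate colors on the Hue scale (hue 0-65535, sat 0-254).
# White is produced by dropping saturation to zero.
TEAM_COLORS = [
    {"hue": 0, "sat": 254},      # red
    {"hue": 46920, "sat": 254},  # blue
    {"hue": 0, "sat": 0},        # white
]


def build_flash_requests(light_id, bri=254):
    """Return (url, json_body) pairs that would step one light through the colors."""
    url = f"http://{BRIDGE_IP}/api/{API_USER}/lights/{light_id}/state"
    calls = []
    for color in TEAM_COLORS:
        # transitiontime=0 asks for an immediate change rather than a fade.
        body = {"on": True, "bri": bri, "transitiontime": 0, **color}
        calls.append((url, json.dumps(body)))
    return calls


# Print the requests instead of sending them, so this runs without a bridge.
for url, body in build_flash_requests(1):
    print("PUT", url, body)
```

Sending each pair with an HTTP PUT, with a short pause between them, would produce a simple color cycle on that one bulb.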

Goal! Eh?

Wouldn’t it have been easier to do some OCR on the counter at the top of the screen? That way one should be able to get a 100% detection rate.

Maybe the text is updated slower than the shout of the commentator, I am not sure on this. If not, it might have been easier. However, I still love this solution. Pretty cool home hack!

Yes, the counter is much slower. The commentator and goal horn are immediate!

25% error will still be too embarrassing when it cheers for the opponents scoring.

Hey Whatnot. I’m the guy who did this.

The 25% error rate is actually detecting 3 out of 4 goals FOR the Habs. The only consequence of that is the light show not starting when it should. I have a USB button hooked up to the system so I can start it manually when that happens.

The error rate for goals of the opposite team is much lower. Based on the simulations I ran, it should wrongfully go off only once per 4 games. But I’m working to improve that. Hopefully Carey Price will do his part too and get more shutouts :)

As for your other comment about the twitter feed, I have found no API, feed or any service that can give me reliable notifications within 2 seconds of a goal. If it takes 5 or 10, the moment kind of passed…

Cheers!

François, this is amazing. I tried to do something similar for my hometown Blackhawks by scraping a scores API and it was just way too delayed.

I feared as much about the delay with twitter, although I thought maybe there might be enthusiasts that are very dedicated to be the first, but I guess the twitter system itself has a delay too.

But hey, maybe it can at least be used to kill the cheering machinery if it’s wrong after 5+ seconds, if someone wants to try that.
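The "kill switch" idea in this comment could be sketched roughly as follows. This is only an illustration under stated assumptions: the class, its method names, the team codes, and the 30-second window are all hypothetical, and the slower feed is assumed to report which team scored.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Celebration:
    # Time the light show started, or None when nothing is running.
    started_at: Optional[float] = None

    def on_audio_detection(self, now: float) -> None:
        """The fast audio detector fired: start the show right away."""
        if self.started_at is None:
            self.started_at = now

    def on_feed_update(self, scoring_team: str, home_team: str,
                       now: float, window: float = 30.0) -> bool:
        """A slower feed reported a goal. Return True if the show was cancelled."""
        if self.started_at is None:
            return False
        recent = (now - self.started_at) <= window
        if recent and scoring_team != home_team:
            # The feed says the other team scored: stop the celebration.
            self.started_at = None
            return True
        return False


show = Celebration()
show.on_audio_detection(now=100.0)
cancelled = show.on_feed_update("OTT", "MTL", now=107.0)
print(cancelled)
```

The audio detector keeps its speed advantage, and the delayed feed is used only to cut a wrong celebration short.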

You could just watch the game on a 10-15 second delay and give the Twitter thing “prescience” (from your point of view), then sync the light show to the goal buzzer sound, but only for your team.

So sorry you didn’t have much opportunity to test it last night….

I lied… I’m not sorry. :)

quote:

successfully identified 75% of home team goals with 0 false positives

it’s a 25% error rate for undetected home goals (should detect but doesn’t)

and 0 false positives (no opposing-team goals mistaken for home goals)

in short: it misses about 1 in 4 home goals, and raises no false alarms
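The two numbers in this comment measure different things, which a few lines of arithmetic make explicit. The counts below are illustrative only, chosen to match the "3 out of 4" figure mentioned in the thread, and the function name is made up for this example.

```python
def detection_summary(home_goals, detected_home_goals, false_alarms):
    """Summarize misses and false alarms as separate quantities."""
    if home_goals <= 0:
        raise ValueError("need at least one home goal to compute a rate")
    detection_rate = detected_home_goals / home_goals
    return {
        "detection_rate": detection_rate,   # share of home goals caught
        "miss_rate": 1 - detection_rate,    # share of home goals missed
        "false_alarms": false_alarms,       # opposing goals that set off the show
    }


summary = detection_summary(home_goals=4, detected_home_goals=3, false_alarms=0)
print(summary)
# {'detection_rate': 0.75, 'miss_rate': 0.25, 'false_alarms': 0}
```

A miss only means the lights stay off. A false alarm means cheering for the other team, which is why the two are counted separately.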

Maybe there is some popular twitter feed which tells you which team scored to increase reliability? ‘If there is a feed and it says the opposing team scored then don’t run the celebration routine’ basically.

I love this hack but I still hate the Habs!

Wouldn’t the ESPN Channel on IFTTT be enough to automate this? I’ve set IFTTT recipes to do all sorts of things when teams score.

Never mind, I read his comments here–he wants something more immediate. Kudos for figuring it out!

Even the Budweiser goal light has a delay of about 10 seconds depending on which station you are watching and which medium (cable, satellite, etc.), so this concept is great because it syncs to what you are watching, so even on a recorded game it works :). Does it take into account both stations playing the game (RDS and TVA Sports)? Does it tell the difference between “goal”, “scores” and others? Did it trigger last night after our horrible 5 to 1 loss? Will we finally eliminate the Senators on Sunday?

Let’s hope so!

I only trained it on TVA games since they are the ones airing the playoffs. I tried it for fun on an RDS broadcast and it didn’t detect a single goal. It’s commentator-specific because of the different ways they yell, so I’ll need to put together an RDS dataset for next season.

I’ve added examples for both “goal” and “scores” so they both work as well as far as I can tell.

I was at the game last night. Hard to watch. I’ll run the recorded game through the system tomorrow if I have time to see if it would have gotten any false pos, and get more training examples!

Of course! This ends tomorrow. Go Habs Go! :)

Nice. Though I wish I understood what was actually being done here. I can at least follow through the MPS, but promptly got lost beyond that. But that’s not [François]’ fault. We hackers really need a robust method of keyword recognition, implemented and provided in a way that doesn’t require arcane knowledge to use. General speech recognition programs like Sphinx can be used for that, but false positives and negatives go through the roof with any background noise. What I’d most like to see is the neural-net based keyword recognizer for Google Now, pulled out so it can be used separately, along with a tool that when provided with training sets can teach the net a new keyword. Or at least something very similar.

Hi François–

Did you happen to take a look at the closed-captioning stream to see if it had useful data? It may not have been as immediate though. Back in the 1990s I co-invented an ad elimination technology for VCRs called Commercial>>Advance; one of the things we considered as an input to the heuristic expert system (such as it was back then) was closed captioning. It was possible because that system skipped ads on playback, so a delay didn’t matter. Ultimately we didn’t need the input, as our other detection means gave 99.4% accurate detection with 0 false positives by the time we shipped without it.

Now, 25 years later, perhaps the closed-caption stream or some of the data contained in it is immediate enough for your application…?

Hey

Didn’t actually look at that to be honest. It would be hard with my setup since I’m streaming the game. I’m not even sure cc is available with the service I’m using.

It would be super interesting to look at though. A bunch of people commented on other sites about different ideas with NLP. Even if the cc is delayed a bit, it could maybe still be used to determine which team has possession of the puck. I was thinking of doing it more with a simple image analysis but cc may be another avenue to explore. Would probably also be less computationally intense which is important since it’s got to run in real-time.

Knowing where the puck is would be extremely helpful as an extra feature to the classifier. Then it would probably be possible to increase the accuracy a fair bit. Currently, the goals I’m mis-classifying are because I’ve had to make tradeoffs in sensitivity when deciding whether it is a goal by the Habs or the bad guys. A couple are really hard to distinguish. Knowing where the puck is in the rink would essentially solve that problem completely and maybe then we’re at 99%?
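The sensitivity tradeoff described here can be illustrated with a toy example. This is not the classifier from the project: it simply assumes some detector outputs a confidence score per goal, and every score below is invented for illustration. The function picks the lowest cutoff that rejects every opposing-team goal, then reports how many home goals still get through.

```python
def zero_false_positive_threshold(home_scores, away_scores):
    """Return (threshold, recall) where only scores strictly above threshold fire."""
    if not home_scores:
        raise ValueError("need at least one home-goal score")
    # Anything scoring strictly above the best opposing-team score is safe.
    threshold = max(away_scores) if away_scores else float("-inf")
    caught = sum(1 for s in home_scores if s > threshold)
    return threshold, caught / len(home_scores)


# Invented confidence scores: higher means "more likely a home-team goal".
home = [0.95, 0.90, 0.80, 0.40]   # real home goals
away = [0.55, 0.30, 0.10]         # opposing-team goals

threshold, recall = zero_false_positive_threshold(home, away)
print(threshold, recall)
# 0.55 0.75
```

In this toy data the home goal scored at 0.40 is sacrificed to keep the opposing goal at 0.55 from triggering the lights. An extra feature such as puck position would help only if it pushed those two kinds of examples further apart.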