If you’ve ever been to a capture the flag hacking competition (CTF), you’ve probably seen some steganography challenges. Steganography is the art of concealing data in plain sight. Tools including secret inks that are only visible under certain light have been used for this purpose in the past. A modern steganography challenge will typically require you to find a “flag” hidden within an image or file.

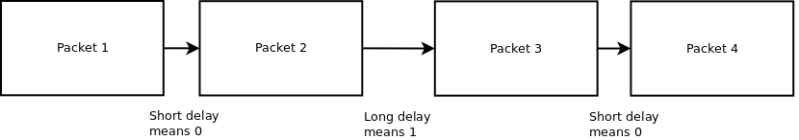

[Anfractuosus] came up with a method of hiding data within a stream of network traffic. ‘Timeshifter’ encodes data as delays between packets. Depending on the length of the delay, each packet is interpreted as a one or a zero.

To do this, a C program uses libnetfilter_queue to get access to packets. The user sets up a network rule using iptables, which forwards traffic to the Timeshifter program. This is then used to send and receive data.
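To make the encoding idea concrete, here is a minimal Python sketch of the threshold scheme described above. It only models the bit-to-delay mapping, not the packet handling, and the delay values and function names are assumptions for illustration rather than anything taken from the Timeshifter source.

```python
# Hypothetical delay values; the real tool's timings may differ.
SHORT_DELAY = 0.05  # seconds, stands for a 0 bit
LONG_DELAY = 0.20   # seconds, stands for a 1 bit
THRESHOLD = (SHORT_DELAY + LONG_DELAY) / 2


def bytes_to_bits(data):
    """Expand bytes into a list of bits, most significant bit first."""
    return [(byte >> shift) & 1 for byte in data for shift in range(7, -1, -1)]


def bits_to_bytes(bits):
    """Pack bits (MSB first) back into bytes, dropping any incomplete byte."""
    out = bytearray()
    for i in range(0, len(bits) - len(bits) % 8, 8):
        value = 0
        for bit in bits[i:i + 8]:
            value = (value << 1) | bit
        out.append(value)
    return bytes(out)


def encode_delays(data):
    """Sender side: one inter-packet delay per bit."""
    return [LONG_DELAY if bit else SHORT_DELAY for bit in bytes_to_bits(data)]


def decode_delays(delays):
    """Receiver side: compare each observed delay against the threshold."""
    return bits_to_bytes([1 if d > THRESHOLD else 0 for d in delays])
```

As long as network jitter stays well below the gap between the two delays, `decode_delays(encode_delays(b"flag"))` recovers the original bytes.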

All the code is provided, and it makes for a good example if you’ve ever wanted to play around with low-level networking on Linux. If you’re interested in steganography, or CTFs in general, check out this great resource.

Beautiful! Too bad the NSA will know to look for these.

Thing is with all the data that there snorting up will they also be capturing the delays in the packets? I doubt it. Even if they implemented a method of capturing the data today all the data that they have captured in the past would get away with this… Unless this was put out by them so that they know which data to flag for looking at!!! THERE EVERYWERE :P

They’re*

you forgot one, THEY’RE*

;)

It would be a big surprise if data logging weren’t done with a timestamp. That’s the first thing you would do if you were logging data, especially at different secret locations, so you can correlate them.

Yes, but how granular will the timestamp be? And will the men in the black suits know where to look (which is always the thing with any sort of steganography)?

What amuses me is that they probably feel forced to collect every bit of spam in the world, since you could easily disguise a message as spam.

So the US government is spending billions upon billions and using enormous power resources to store spam and ads.

I guess it’s a better use of resources than storing YouTube comments though, which is another billion of your American taxpayer money right there.

Seems only viable for very short messages. The userspace netfilter queue itself adds a ton of delay, but I guess that is predictable. The real problem is random delay along the path. Limiting yourself to binary is of course not optimal, but then again random delay limits your reasonable range of transmittable values. What you can also do is allow changes in ordering (if packets are ordered, like ICMP ping, TCP or DHCP requests), which would reduce overall message transmission time and give a wider range of possible values per single delay.

You can’t change the Shannon limit by using a different numeric base instead of binary. The delay threshold will be different, but the noise contamination will stay the same. This is true unless, of course, one can show, or it is reasonable to assume, that a higher packet transmission frequency (as with binary delays instead of a higher base) increases the noise contamination of the channel by making delays less deterministic.

What I wanted to say is that to get better throughput you have to adjust the numeric base in inverse proportion to the delay variance, as there is clearly room for improvement beyond binary (assuming you are not flooding the network with short messages at small intervals).
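The trade-off in this exchange can be explored with a small simulation. This is a rough sketch under stated assumptions: delay levels are evenly spaced by a hypothetical `step`, path jitter is modelled as Gaussian noise, and the receiver rounds to the nearest level. None of these choices come from the original tool, and the reported bit rate is the raw rate before any error correction.

```python
import math
import random


def simulate(base, step, jitter_sigma, n_symbols=2000, seed=1):
    """Return (symbol error rate, raw bits per second) for one configuration.

    base         -- number of distinct delay levels per symbol (2 = binary)
    step         -- spacing between adjacent delay levels, in seconds
    jitter_sigma -- standard deviation of the Gaussian jitter, in seconds
    """
    rng = random.Random(seed)
    errors = 0
    total_delay = 0.0
    for _ in range(n_symbols):
        symbol = rng.randrange(base)
        sent = (symbol + 1) * step            # levels: step, 2*step, ...
        received = sent + rng.gauss(0.0, jitter_sigma)
        decoded = round(received / step) - 1  # nearest level
        decoded = min(max(decoded, 0), base - 1)
        errors += decoded != symbol
        total_delay += sent
    error_rate = errors / n_symbols
    bits_per_second = n_symbols * math.log2(base) / total_delay
    return error_rate, bits_per_second
```

Calling `simulate(2, 0.05, 0.01)` and `simulate(8, 0.05, 0.01)` side by side shows the shape of the argument: a higher base carries more bits per symbol but needs longer delays, and once the jitter approaches the level spacing the error rate climbs regardless of base.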

I like the idea of masking the delay time: set a random delay and say anything below 30% is a zero and anything above 70% is a one, with other misc data added to obscure the signal unless you know what to look for.

Or give yourself an interval, and generate a shared pseudorandom value in that interval: anything within the half of the interval (wrapped at the ends) centered on that key value is a 1, anything else is a 0. No visible correlation beyond a bounded variance, assuming a good key sequence.
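One way to read this keyed-interval idea is sketched below. It assumes both ends share a seed for a pseudorandom generator, and it uses Python's `random` module purely for illustration (a real scheme would want a cryptographic keystream). The interval length and the `guard` margin are hypothetical parameters, and a real sender would add a base delay on top since a delay near zero is not practical.

```python
import random

INTERVAL = 0.2  # seconds; hypothetical length of the delay interval


def circular_distance(a, b, period=INTERVAL):
    """Distance between two points on an interval that wraps at its ends."""
    diff = abs(a - b) % period
    return min(diff, period - diff)


def encode(bits, shared_seed, guard=0.1):
    """Pick one delay per bit, placed relative to a shared key value."""
    keys = random.Random(shared_seed)  # shared with the receiver
    noise = random.Random()            # private to the sender
    half_window = INTERVAL / 4         # the "1" window spans half the interval
    delays = []
    for bit in bits:
        key = keys.uniform(0, INTERVAL)
        # A 1 lands near the key, a 0 lands near the opposite side.
        centre = key if bit else key + INTERVAL / 2
        offset = noise.uniform(-1, 1) * half_window * (1 - guard)
        delays.append((centre + offset) % INTERVAL)
    return delays


def decode(delays, shared_seed):
    """Regenerate the key sequence and test each delay against its window."""
    keys = random.Random(shared_seed)
    return [
        1 if circular_distance(d, keys.uniform(0, INTERVAL)) < INTERVAL / 4 else 0
        for d in delays
    ]
```

Without the seed, each delay looks like a value scattered across the whole interval; with it, `decode(encode(bits, seed), seed)` returns the original bits. The `guard` margin keeps delays away from the window edges so a little jitter does not flip a bit.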

Routers sometimes discard packets because of traffic rate shaping, full buffers, or corruption. So packets might be retransmitted, and thus the ordering might change. Sometimes individual packets take different routes with different delays and end up in a different order too. The big cloud is dynamic…

Let some drunken russian milkmaids do some math:

I want to transfer the passwords for encrypted containers, logins etc. Do some innocent NTP request every 60 seconds for short (0) and 65 seconds for long (1). This gives about 180 bytes per day. More than enough space for one password and error correction per day. Glue short/long to hour (odd 0/1, even 1/0) and voila.
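The arithmetic behind that estimate is easy to check. The sketch below assumes one bit per request and reads the hour rule as "invert the short/long meaning on even hours"; the function names are made up for illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def bytes_per_day(interval_seconds):
    """Bytes per day when every request carries one bit."""
    return SECONDS_PER_DAY / interval_seconds / 8


def interval_for_bit(bit, hour, short=60, long=65):
    """Request interval for a bit, with the mapping flipped on even hours."""
    if hour % 2 == 0:
        bit ^= 1
    return long if bit else short
```

`bytes_per_day(60)` gives exactly 180, which is the best case where every bit is a short interval. With all long intervals the figure drops to roughly 166 bytes, so "about 180 bytes per day" is a slightly optimistic upper bound.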

I like your idea, which once again is defeated by sharing it publicly. You should have kept your mouth shut, and given it to somebody like Snowden or something somehow instead.

I get the tendency though. I also thought up a scheme after reading the comments, and almost shared it, but decided to keep those that might be using it right now safe.

Inter-AS routing is based on BGP paths that are static for hours or days. Even within an AS, equal-weight multipath routing is uncommon.

People said the same thing about satellites, once upon a time :) You could come up with a scheme to implement QPSK, using for instance the length of the packet as your X axis, and the delay delta as your Y axis. Theoretically you could use these to modulate up to crazy rates like 256QAM, if the noise was low. Can’t stop the signal!

This reminds me of the method used by TI’s CC3000 wifi chip Smart Config. You are able to communicate network SSID/password information wirelessly from your phone to the CC3000. It works by having the phone transmit opaque packets of varying length to the router, using the packet length to encode the info (AES encrypted with a pre-set shared key). The CC3000 simply watches for those special packets, and decrypts the length-encoded data. Then it is able to set its own SSID/password, and join the network properly. Obviously, this technique only works for devices that are in close proximity, but it’s a clever way to communicate the information fairly securely.

Just post random kitten pictures on FB, with data hidden in some chosen pixel RGB values. There are many ways to do this, and a would-be decipherer will have to try all of them. Those in the know can change their coding scheme often.
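For reference, the simplest version of hiding data in pixel values is least-significant-bit embedding. The sketch below is one of the "many ways" and works on a plain list of `(r, g, b)` tuples rather than a real image file, so it needs no imaging library. It only survives if the pixels are stored losslessly; any recompression of the image would destroy the hidden bits.

```python
def embed(pixels, payload):
    """Hide payload bytes in the lowest bit of each colour channel."""
    bits = [(byte >> s) & 1 for byte in payload for s in range(7, -1, -1)]
    channels = [c for px in pixels for c in px]
    if len(bits) > len(channels):
        raise ValueError("payload too large for this image")
    for i, bit in enumerate(bits):
        channels[i] = (channels[i] & ~1) | bit  # overwrite the lowest bit
    return [tuple(channels[i:i + 3]) for i in range(0, len(channels), 3)]


def extract(pixels, n_bytes):
    """Read n_bytes back out of the lowest bits, MSB first."""
    channels = [c for px in pixels for c in px]
    out = bytearray()
    for i in range(n_bytes):
        value = 0
        for c in channels[8 * i:8 * i + 8]:
            value = (value << 1) | (c & 1)
        out.append(value)
    return bytes(out)
```

Each channel changes by at most one step out of 256, which is invisible to the eye. The receiver has to know the payload length and the channel order, which is the "coding scheme" that could be changed between messages.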

Facebook reencodes images using lossy JPG compression when you upload them. I’ve done some experiments, and I plan to write an article about the need for lossless image transfer. I’ve made some pretty pictures of checkerboard patterns that progressively decay when being transferred by Facebook or iPhone->Save To Camera Roll. Nag me, and I’ll write it up!

Almost all image hosting sites mess with the picture (even the Pirate Bay image hosting experiment changed PNG to JPG for some reason).

I wonder why that is? Maybe precisely because of defeating chances of private communication?

Years ago I wondered if it was possible to “modulate” the TTL (time to live) parameter into a normal data flow (http, etc) to let information slip under firewalls but have no idea if someone actually implemented it.

They mention using a threshold to determine zero. Guess the commenter that suggested that earlier didn’t read the source page. But did anyone notice the

“It is important to ensure in this case, that the only ICMP packets that are being sent, are those sent by the ping command we have just run.”

So one errant ICMP packet from the NSA and your secret channel is interrupted in this version.

The NSA or another in-between party would have to insert your source IP address though, and if you sit between the receiver and sender and have the ability to inject, then you could just as easily drop the whole stream completely to interrupt it.

And incidentally, many routers block pings, to ward off attacks, so that would make using ping a bit difficult. Other routers limit the stream instead and block it after letting through some of it.