We have PADDs, fusion power plants are less than 50 years away, and we’re working on impulse drives. We’re all working very hard to make the Star Trek galaxy a reality, but there’s one thing missing: medical tricorders. [M. Bindhammer] is working on such a device as his entry for the Hackaday Prize, and he’s doing this in a way that isn’t just a bunch of pulse oximeters and gas sensors. He’s putting intelligence in his medical tricorder to diagnose patients.

In addition to syringes, sensors, and electronics, a lot of [M. Bindhammer]’s work revolves around diagnosing illness according to symptoms. Despite how cool sensors and electronics are, the diagnostic capabilities of the Medical Tricorder are really the most interesting application of technology here. Back in the 60s and 70s, a lot of artificial intelligence work went into expert systems, and the medical applications of this very rudimentary form of AI. There’s a reason ER docs don’t use expert systems to diagnose illness; the computers were too good at it and MDs have egos. Dozens of studies have shown a well-designed expert system is more accurate at making a diagnosis than a doctor.

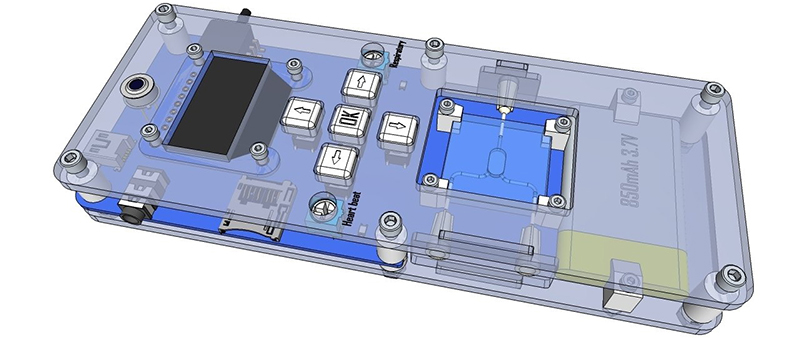

While the bulk of the diagnostic capabilities rely on math, stats, and other extraordinarily non-visual stuff, he’s also doing a lot of work on hardware. There’s a spectrophotometer and an impeccably well-designed micro reaction chamber. This is hardcore stuff, and we can’t wait to see the finished product.

As an aside, see how [M. Bindhammer]’s project has a lot of neat LaTeX equations? You’re welcome.

Does it make the sounds?

You have a problem with your security certificate on the HTTPS links. It states:

This organization’s certificate has been revoked.

Clear your cache. We just generated new certs and revoked the old ones.

I have a doctor in the family (and no not one of those ego-stroking rich doctors who spends more time on the golf course than making rounds or doing surgery, a proper doctor doing real work) and I can tell you that there is no way I would trust any machine to make a decision on my health over a decision by a proper doctor.

Do I think machines (whether it be a simple sphygmomanometer or a state-of-the-art MRI machine) can make it easier for doctors to see what’s wrong and improve outcomes for patients? Yes. Would I trust my health to a machine alone (even a super-sophisticated machine like the AI in Star Trek Voyager)? No way.

Suppose machines made a correct diagnosis statistically more often than human doctors – would you still want the human doctor?

Self-driving cars will be the same way. If they’re statistically less likely than human drivers to get into an accident, then insurance companies will demand them.

We’re at the beginning of this technology, but it wouldn’t surprise me in the least if a computer would eventually be more reliable than a human doctor. There are more than 30,000 human diseases, and no human can consider all of them.

We’ve heard stories of how people go from doctor to doctor, getting different opinions, trying this and that until they hit on the proper explanation. Treatment is usually straightforward, but only if you can get the right diagnosis.

Care to tell us why you would trust a person over a machine? Gregory House isn’t real, and no doctor will take the trouble to really figure out what’s wrong. If it’s not something they recognize, it’s up to the patient to follow through.

Depends — when the human doctor makes mistakes, do they tend to make large ones?

I’m likely to trust the computer, provided that when things do go wrong, the effect of their error tends to be low.

What I mean is I’d like a human to sanity check it. So I don’t go in with a flu and have it tell me I’m missing an arm because of a quirk in the machine learning.

The assumption of replacement is fallacious. This will become a diagnosis tool, supplemental to the doctor, perhaps in the same way a nurse practitioner or other healthcare professional will do an initial evaluation, with the doctor checking their conclusions and handling the statistically oddball cases. To assume the machine is better and therefore will replace the doctor is akin to expecting a 6-in-1 screwdriver to replace everything in your toolbox.

This post is wishful thinking at best.

Comparing A.I. to humans isn’t comparing a 6-in-1 screwdriver to a toolbox. It’s comparing a supercomputer to a desktop. There is NOTHING a human can do that A.I. and robots (in time) can’t do better. The end.

This is wrong on so many levels.

– Liability. A doctor has liability insurance. If he/she messes up, there are huge consequences, and as a victim you can expect to be able to sue for malpractice. A software/hardware developer hides behind a license agreement that voids all responsibility. It is even worse if it is an open source project from someone with no insurance and unknown contributors. Who would you trust here to take real responsibility?

– Training. Does the developer have a medical practice, and did they go through the same level of training as a doctor? A high school dropout can write a program or make a gadget. Does the person even have training in designing things where a life is on the line?

It is not just about making diagnostics, there are other human factors in the education – how do you tell a patient and/or the family that he/she has only 3 months to live?

Then there is the big question: how good is it anyway?

If anyone here is entirely happy with tech support over the phone from a script reader, please speak up. This is exactly what you would expect the current state of software scripts to do for diagnostics. There are no deviations from what’s on the screen. Do you RMA a patient or randomly send replacement parts?

Most of the doctors in my country don’t have the basic talent. They’re only in it for the money, and only know what they have been taught (and not much of that). The drive, the passion to cure people and help people, isn’t there for most of them; it’s just a big paycheck. As such, some of them can’t diagnose the most basic of problems (the first example that comes to mind is an infected scrape from a close call with concrete, in which a doctor couldn’t diagnose a simple infection that was blatantly obvious).

I’d rather have a machine give me the possible problems than leave it to idiots who are only interested in a paycheck.

All this is beside the point though; this is supposed to be a tool, and tools need someone to use them.

And that’s the problem with patients. They are idiots. They’d rather feel comfortable with their doctor and die from misdiagnosis than be healed by a superaccurate AI. I don’t care if my doctor is a grizzly bear… as long as he/she is right.

I’ve noticed that he’s using the naive Bayes assumption, which is that the attributes are conditionally independent. I don’t think this is valid for medical symptoms.

Different symptoms are not conditionally independent. If a fever indicates flu with 70% probability, and sore throat indicates flu with 40% probability, then having both fever and sore throat simultaneously doesn’t mean 110% probability. The probabilities overlap a little in terms of information content. Fever is not conditionally independent of sore throat – if you have both, you have to compensate for the overlap.

I suspect he needs to deduct the mutual information from the probabilities before doing the calculations. (For more info, see the Wikipedia entry on “mutual information”.)

Any researchers (or doctors) who can comment on this?

Are medical symptoms independent attributes?

Naive Bayes classifiers assume in general that attributes are independent given the class. Despite this unrealistic assumption, the resulting greatly simplified classifier is remarkably successful in practice, often competing with much more sophisticated techniques. Naive Bayes has proven effective in many practical applications including medical diagnosis.
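For anyone following the naive Bayes discussion above, here is a minimal sketch of how such a classifier combines symptoms. It is not taken from the project: the disease names, symptom names, and every probability below are made-up assumptions for illustration only. The point it shows is that the per-symptom likelihoods are multiplied under the independence assumption and then normalized, so the result can never exceed 100%, although correlated symptoms will still be double-counted.

```python
from math import prod


def naive_bayes_posterior(priors, likelihoods, observed):
    """Return P(disease | observed symptoms) under the naive Bayes assumption.

    priors:      {disease: P(disease)}
    likelihoods: {disease: {symptom: P(symptom | disease)}}
    observed:    list of symptoms that are present
    """
    # Multiply the prior by each symptom likelihood, treating the symptoms
    # as independent given the disease (the "naive" assumption).
    unnormalized = {
        disease: priors[disease]
        * prod(likelihoods[disease][symptom] for symptom in observed)
        for disease in priors
    }
    # Normalize so the posteriors over all candidate diseases sum to 1.
    total = sum(unnormalized.values())
    return {disease: value / total for disease, value in unnormalized.items()}


# Hypothetical numbers, chosen only to make the arithmetic easy to follow.
priors = {"flu": 0.1, "cold": 0.9}
likelihoods = {
    "flu": {"fever": 0.9, "sore_throat": 0.6},
    "cold": {"fever": 0.1, "sore_throat": 0.5},
}

posterior = naive_bayes_posterior(priors, likelihoods, ["fever", "sore_throat"])
for disease, probability in posterior.items():
    print(f"{disease}: {probability:.3f}")
```

With these invented numbers, the unnormalized scores are 0.1 × 0.9 × 0.6 = 0.054 for flu and 0.9 × 0.1 × 0.5 = 0.045 for cold, so the posteriors are each score divided by 0.099. If fever and sore throat tend to occur together within a disease, the product overstates the evidence, which is the concern raised in the comment above.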

This is an excellently made project, but if it wasn’t for the hype of Star Trek, nobody would care about it. Would I like to have a device like a real tricorder? Of course, yes. But this one, what is all the hype about?

It measures the 3 easiest things to measure, which are extremely incomplete for most medical examinations. More than that, heart rate and respiratory rate can be measured with a watch and you can buy a thermometer anywhere. You can even skip the watch; the smartphone can do heart rate and respiratory rate. Then put the intelligence in an app on the phone. And this is where it really lacks…so far.

As others have pointed out, machines are better at diagnosing a disease compared to a doctor, but only if they start with the same data. Doctors still have the huge advantage of observing and gathering extra data.

@Bogdan

“Doctors still have the huge advantage of observing and gathering extra data.”

This is the key, I think. Given a written list of symptoms and data points a computer system may be better, but a human doctor has a gigantic advantage when it comes to *acquiring* the data. Sometimes patients lack the vocabulary to describe symptoms accurately, have personalities that make them unable to admit certain symptoms, or have been dealing with the earliest symptoms so long before the others arose that they haven’t realized the connection and don’t think to mention it. A human’s social skills can “read” patients and steer/wheedle to get at the info they need.

I really wish the editor had included a link for the claim that expert systems can diagnose problems better than a doctor. As it is, that paragraph is the only one without a link, so we’re left to speculate on what’s wrong with the obviously-bullshit claim that it’s just doctors’ egos holding us back.

The one test that could have been included that can’t be done with the back of a hand and the palp of a finger is oximetry. Its absence continues to be a pity, because it can actually differentiate between influenza pneumonia and endocarditis (for example).

As a physician, I often consult diagnostic flowcharts, and some institutions (nurse/physician groups, hospitals, insurance companies) prefer them in order to promote a more uniform and cost-effective diagnostic/treatment process. I’d be interested in a well-vetted, specialty-cognizant expert system; however, it’s important to recognize it takes some medical education to avoid the “garbage-in, garbage-out” phenomenon. IMHO, an educated provider can better assess observations and turn those into appropriate “AI” input. Without too much imagination, it’s clear that automation will someday serve as an adjunct, rather than replacement, in the practice of medicine.

Perhaps we’ll see several levels of AI. One level appropriate for the general public (creates a large differential diagnosis list based on a general description of symptoms), another level for general practitioners (who, using the patient’s general symptoms and additional observed/measured signs, narrow the list of diagnoses), and finally a level for specialists.

Will there ever be a “tricorder” that aids with any of these levels and always arrives at a correct diagnosis? Does anyone ever use the word, “Never”?

This project has very little to do with Star Trek. No hype. I started this project because my father died of bowel cancer, my younger sister got terminally ill with breast cancer, and I got a thrombosis after my last 12 h flight. None of the 3 basic vital measurements are easy – especially respiratory rate under load and body temperature evaluated from skin temperature are anything but trivial.

The article is misleading. The reason we don’t use AI in medicine is it doesn’t work and it isn’t more effective than expert practice.

Ultimately the difficulties lie in either:

A) Difficulty in differentiating the common (musculoskeletal chest wall pain), the serious but uncommon (cardiac ischaemia) or the hideously rare (pulmonary hypertension)

Or

B) Creating an appropriate investigation and refining differential diagnoses

One older example basically just diagnosed everything as a heart attack.

So basically they behave like lay people who go to WebMD to see what their itchy flaky skin is, then come away under the impression it’s leprosy. Figured as much.

Or they ask the local grizzly bear, which confirms the diagnosis they are most biased towards (lacerations), and follow it up by writing blog articles about how “My doctor told me it was just contact dermatitis but I was sure they were wrong and now my vague symptoms have been diagnosed as Lacerations instead”.

I was wondering, is there a link, or a title maybe to these “dozens of studies”? No sarcasm, just being curious.

hackaday prize entry: webmd + smart phone

Wait, you don’t have to have a finished product? Then may I enter my time machine?

If you have a working prototype time machine, you wouldn’t disclose its existence nor make it open source. HaD prizes are too small for that. Just go back in time, buy lottery tickets and enjoy your rich life.

No time machines exist because of the butterfly principle. Go far enough back in time with a time machine and you change the conditions under which a time machine would develop. You’re left with just the one time machine… Hope it never breaks down.

As proof of your prototype go back in time and change your post from “could I” to “may I”.

Heh.

Tricorders again? Holy cow, people love that name, huh?

I think it’s mostly due to the fact that ‘Tricorder’ is effectively in the public domain. Roddenberry had a clause in the Paramount/ToS contract saying that anyone who could make one of these things would have the rights to the name ‘tricorder’.

That, and Trek is better than Wars.

Woah woah woah, Wars is better than Trek any day of the week. =P

Interesting idea, but is this not just a boxed version of the 20 questions idea with a medical slant?

Speaking of tricorders, whatever happened to that ‘science tricorder’ that had a Kickstarter campaign? SCIO, was it? Did it turn out to be over-sold in its capabilities?

An RGB LED and a light sensor do not make a spectrometer. It’s amazing to me that someone who seems to have this good of a grasp on math can also have such a poor understanding of spectroscopy. There are other projects here that use actual spectrometers. But this one is a joke in that respect.

It is amazing that I wrote spectrophotometer and you read spectrometer.