This morning I want you to join me in thinking a few paces into the future. This mechanism lets us discuss some hard questions about automation technology. I’m not talking about thermostats, porch lights, and coffee makers. The things that we really need to think about are the machines that can cause harm, like self-driving cars. Recently we looked at the ethics behind decisions made by those cars, but this is really just the tip of the iceberg.

A large chunk of technology is driven by military research (the Internet, the space race, bipedal robotics, even autonomous vehicles through the DARPA Grand Challenge). It’s easy to imagine that some of the first sticky ethical questions will come from military autonomy and unfortunate accidents.

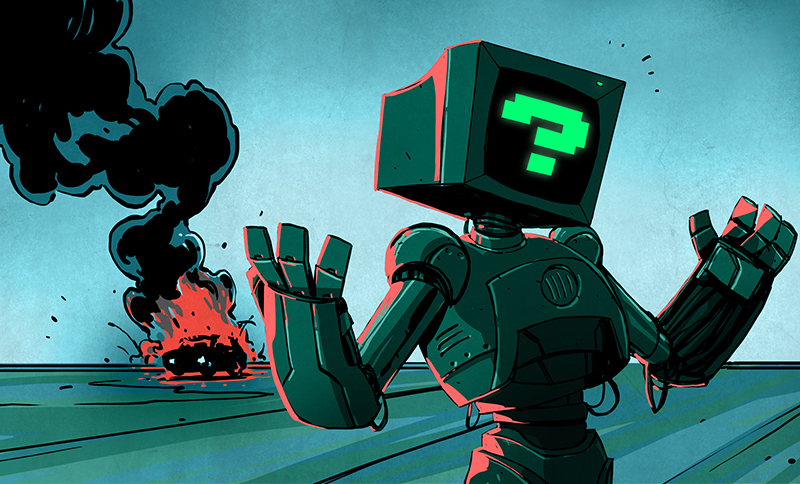

Our Fictional Drone Scenario

The Sundancer-3 is no ordinary drone. Based on the MQ-1 Predator UAV, our fictitious model performs many of the same functions. It has a few key differences, however, the main one being that it can operate without any pilot. Its primary mission is to use its onboard cameras and facial recognition software to identify enemy combatants and take them out, all without any human intervention. It has the ability to defend itself if fired upon, and can also identify its surroundings and will not fire if there is even a remote chance of civilian casualties. The Sundancer was hailed as a marvel of human ingenuity, and viewed as the future of military combat vehicles. Militaries all over the world began investing in their own truly autonomous robotic platforms. But this would all change on a fateful night in a remote village a world away.

Its critics say the accident was predictable. They said from the beginning that taking the human decision-making out of lethal action would result in the accidental killing of innocent people. And this is exactly what happened. A group of school kids were celebrating a local holiday with some illegally obtained fireworks. A Sundancer was patrolling the area, and mistook one of the high reaching fireworks as an attack, and launched one of its missiles in self defense. A few hours later, the rising sun laid bare for all the world to see – a senseless tragedy that never should have happened.

For the first time in history, an autonomous robot had made the decision, completely on its own, to take lethal action against an innocent target. The outrage was severe, and everyone wanted answers. How could this happen? And perhaps more importantly, who is responsible?

Though our story is not real, it is difficult to say that a similar scenario will not play out in the near future. We can surmise that the consequences will be similar, and the public will want someone to blame. Our job is to discuss who, if anyone, is to blame when a machine injures or takes the life of a human of its own accord. Not just legally, but morally as well. So just who is to blame?

The Machine

Although it is obvious that a machine cannot be punished for its actions, some interesting questions arise when we look at the deadly scenario from the machine’s point of view. In our story, the Sundancer mistook a firework for an attack and responded accordingly. From its viewpoint, it was being targeted by a shoulder-fired missile. It is programmed to stay alive and, as far as it’s concerned, did nothing wrong. It simply did not have the ability to differentiate between a missile and a harmless firework. And it is in this inability that the problem resides.

A similar issue can be seen in “suicide by cop” events. In a life and death situation, a police officer does not have the ability to tell the difference between a real gun and a fake gun. The officer will respond with deadly force every time, protecting themselves when threatened in the line of duty.

The Manufacturer

Does the manufacturer of the Sundancer have any fault in the deaths of the students? Indeed, it was their machine that made the mistake. They built it. One could argue that if they had not built the machine, the accident would have never happened. This argument is quickly put to rest by looking at similar cases. A person that drives a car into a crowd of people is at fault for the accident. Not the car, nor the manufacturer of the car.

The case of the Sundancer is a bit different, however. It made the decision to launch the missile. There is no human operator to place the blame upon. And this is the key. If the manufacturer had prior knowledge that it was building a machine that could take human life without human intervention, does it hold any responsibility for the deaths?

Let’s explore this concept a bit deeper. In the movie Congo, a group of researchers used motion activated machine gun turrets to protect themselves from dangerous apes. The guns basically shot anything that moved. In real life, this would be an extremely irresponsible machine to build. If such a device were built and it took an innocent life, you can rest assured that the manufacturer would be held partly to blame.

Now let us apply some sort of hypothetical sensor to our gun turret, such that it could detect the difference between an ape and a person. This would change everything. If a mistake happened and it took the life of an innocent person, the manufacturer could say with a clear conscience that it was not to blame. It could say there was an unknown flaw in the sensor that distinguishes between human and ape. The manufacturer of the Sundancer could make a similar argument. It’s not a manufacturing problem. It’s an engineering problem.

The Engineer

Several years ago, I built a custom alarm clock as a gag gift for a friend. The thing drew so much current that I was unable to find a DC ‘wall wart’ power supply that would run it. Long story short, I wound up making my own power supply and embedding it in the clock. I made it clear to my friend that she couldn’t leave the clock plugged in while unattended. I did this because I had no training in how to design power supplies safely. If something went wrong and it caught fire, it would have been my fault. I was the designer. I was the engineer. And I bear ultimate responsibility if my project hurts someone.

The same can be said of any engineer, including the ones that designed the Sundancer. They should have thought about how to handle a mistaken attack. There should have been protocols, checks and balances put into place to prevent such a tragedy. This of course is easier said than done. If you make it too safe, the machine becomes ineffective. It will never fire a missile because it will be constantly asking itself if it’s OK to fire. By then, it’s too late and it gets shot out of the sky. But this is still a better outcome than mistakenly shooting a missile at students.

I argue that it is the engineer who is to blame for the Sundancer accident.

This leaves us stuck between a high voltage transformer and a 1 farad capacitor. If the engineer holds ultimate responsibility for the mistakes of his or her machine, would they build a machine that could make such a mistake? Would you?

I’m convinced that the party employing the autonomous device (the end user) is wholly responsible when the device is operating properly, even when the outcome is undesirable.

In my mind, the manufacturer should develop settings which allow the end user to select the:

1. level of aggression (in this case)

2. Unsolvable dilemma strategy (kill me, or kill pedestrians) in autonomous driving

etc.

and then the end user bears complete responsibility for the non-defective operation of the device.

No piece of software is bug free. And it is essentially impossible to ensure that a piece of software is bug free. If we insist that the ‘designer’ is responsible for bugs we would have no software produced that could be used in a way that endangers life.

We accept bugs and live with them (or not). If you want perfection, you must find something other than a flawed human prone to mistakes to produce it.

Sorry, it is a cheap excuse to claim that “no software is bug free” – and plain false, if taken as an absolute:

10 print "going to 10"

20 goto 10

Where is the bug? This is a valid piece of software that continuously outputs information. It will even react to user input: if the user presses CTRL-C, it will stop outputting the information that it is going to 10, rightfully so.

Also, software running nuclear plants gets checked seriously (as opposed to cheap end user software) and can, in many cases, be called “bug free”. Just another (cheap?) example.

If a machine makes a false decision, the designer of the machine will always have to take PART of the responsibility. If not legally, then morally. This does not answer the questions raised in the article, but it should be obvious from a humanistic point of view.

Umm, I don’t actually think code like that is continually reviewed. Plus, the more complex the code’s output states, the harder and harder it is to actually check for every state. It becomes mathematically impossible once the code gets complex enough. You could have something absurd like 10^10^30 possible states it could possibly be in. There is no way to check all of those states and ensure with certainty that there was no “undesired operation” that could occur in every possible state. Then add in hardware issues too, which could further complicate things as hardware is expected to operate with only certain inputs and outputs but it isn’t always guaranteed that it will.
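To put rough numbers on the state-explosion point, here is a minimal back-of-the-envelope sketch. It assumes the whole system state fits in n bits and that a checker could visit one billion states per second; both figures are assumptions chosen for illustration, not measurements of any real system.

```python
# Rough arithmetic: how long would it take to visit every state of a
# system whose state fits in n bits? The checking rate is an assumption.

SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def state_count(bits: int) -> int:
    """Number of distinct values an n-bit state can take."""
    return 2 ** bits


def years_to_enumerate(bits: int, states_per_second: int = 10 ** 9) -> float:
    """Years needed to visit every state once at the assumed rate."""
    return state_count(bits) / states_per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    for bits in (16, 32, 64, 128):
        print(f"{bits:3d} bits: {state_count(bits):.3e} states, "
              f"{years_to_enumerate(bits):.3e} years at 1e9 states/s")
```

Even 64 bits of state, which is a single machine word, already takes centuries at that rate, so brute-force checking of every state stops being practical very quickly.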

What do you mean by “output state”?

Because if you have a program that has a mathematically precise definition (and they should have, if you take hardware out) and operates on a defined set of inputs (which you could make infinite, if you wanted to), it can very well be possible to mathematically prove that it will do the correct thing, even without looking at all possible program states. In fact, this is actually done for some pieces of software, even though it is a lot of work.

Clearly folks don’t understand the basics. Once upon a time universities taught how to mathematically prove a given piece of code (or algorithm) was correct. However, those work problems were always limited to very small problems (no more than a handful of lines), and even at that the process was labor intensive and time consuming.

Large complex pieces of software CAN NOT be ruled to be BUG FREE, yet we still routinely use them. If you want to start holding the programmer/designer liable for the loss of life, then don’t expect to have such software produced for you. IT IS THAT SIMPLE. Would YOU provide someone with a complex piece of software that MIGHT, given the right confluence of conditions, result in a death you can be found liable for?

Therac-25 had a nasty bug.

I can’t find resources right now, but there have been bugs in the nuclear industry as well.

The thing is, the engineer, programmer, and end-user need to be prepared like boy-scouts.

Test everything numerous times, do dry-runs, and if you are willing to send a machine to do a task instead of a human, then the fault of any erroneous behaviour rests square on the shoulders of the End-User who deployed such a thing.

You may notice that almost all software and hardware you use has disclaimers and terms of use that leave the user liable for any damages caused by the product.

I fear you are mistaken here.

I won’t argue about how incredibly difficult it is to prove anything but the most trivial of programs. You’ll be severely restricted in the kinds of programs you can write if you introduce more and more static checks (=proofs). Since you can not prove arbitrary programs, you’ll have to restrict the programs you can write to be able to prove them. Some of these restrictions might be easy to accept (e.g. typed variables), some might be a bit harder to cope with (e.g. lack of side effects in purely-functional programming), and some might be unacceptable (e.g. inability to have infinite loops, even by accident–this means that the number of iterations has to be fixed before the loop is entered. This makes programs much easier to prove (these programs will always terminate), but severely restricts the kinds of programs you can write.)
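The trade-off around loops can be sketched in a few lines. The example below uses the Collatz iteration purely as an illustration (it is my choice, not something from the discussion above): whether the unbounded version terminates for every positive integer is a famous open problem, while the bounded version terminates by construction because the iteration count is fixed before the loop is entered. The function names and the default budget of 1000 steps are assumptions.

```python
from typing import Optional


def collatz_steps_unbounded(n: int) -> int:
    """Count steps until n reaches 1.

    Whether this loop terminates for every positive n is the open
    Collatz conjecture, so termination cannot currently be proven.
    """
    steps = 0
    while n != 1:
        n = n // 2 if n % 2 == 0 else 3 * n + 1
        steps += 1
    return steps


def collatz_steps_bounded(n: int, max_steps: int = 1000) -> Optional[int]:
    """Same computation, but the loop body runs at most max_steps times.

    Termination is guaranteed; the price is that the function may give
    up and return None when the step budget is exhausted.
    """
    steps = 0
    for _ in range(max_steps):
        if n == 1:
            return steps
        n = n // 2 if n % 2 == 0 else 3 * n + 1
        steps += 1
    return steps if n == 1 else None
```

The bounded version is easier to reason about, but the caller now has to decide what to do when the answer is None, which is exactly the kind of restriction described above.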

But I digress, let’s just assume this isn’t a problem at all and look at something at least equally annoying:

Sure, the idea of a program, when run on the idea of a computer, might be mathematically proven to do what you intend. However, we’re talking about real-world systems here, so there is one very big problem in there: Physics.

(a) When you get power supply problems, your program will behave unexpectedly. These can be and are even used to circumvent “absolutely secure” chips. There is nothing you can do to build an “absolutely secure” power supply.

(b) Integrated circuits age. Their aging is dependent on many factors, such as operating conditions, but also on the physical location of the die on the wafer (the material nearer to the center is usually better quality).

(c) Memory corrupts. Flipped bits in memory, although rare, can be a problem. Especially if you have a lot of memory, as we are seeing today. Radiation from the materials around the chip (PCB, other components, also chip packages themselves) and cosmic radiation can easily flip a bit. What does your perfect program do when one flipped bit tells it to “GOTO 40”? You can prove this program correct, but if the memory that stores the program or data is damaged, strange things might happen.
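As a small illustration of point (c), the sketch below flips a single bit in a stored jump target and shows that a one-bit parity check notices it, while a second flip slips through. The helper names are hypothetical, and a single parity bit is only the simplest possible check; real systems typically use ECC memory or stronger checksums.

```python
def flip_bit(value: int, bit: int) -> int:
    """Return value with the given bit position inverted."""
    return value ^ (1 << bit)


def parity(value: int) -> int:
    """Even/odd parity of the set bits: 0 if even, 1 if odd."""
    return bin(value).count("1") % 2


if __name__ == "__main__":
    target = 10                      # the line number the program meant to jump to
    stored_parity = parity(target)   # recorded while the value was still good

    corrupted = flip_bit(target, 5)  # one flipped bit: 10 becomes 42
    print("GOTO", corrupted, "detected:", parity(corrupted) != stored_parity)

    twice = flip_bit(corrupted, 0)   # a second flip: 42 becomes 43
    print("GOTO", twice, "detected:", parity(twice) != stored_parity)
```

The program text is unchanged and still "proven correct" in both cases; only the memory holding the number changed.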

If you’ve ever written software for someone else, you might know that these problems are nothing in comparison to the real problem. By far the worst problem in software development is misunderstanding what the goal is. You can make a system according to spec and prove that it’s correct, but it still doesn’t work, because the specifications poorly reflected the real requirements. This, I fear, is a problem of human psychology and can not be solved by technology or mathematics. At all.

These are just some examples. There are probably many more. So in the end, you should try to cope with these problems. Just thinking that programs can be proven correct and that will magically fix all the problems is not going to cut it.

The bug is that it is going to 20, not 10.

The bug could be in the compiler or interpreter. Both are substantially more complicated than the program you included.

>No piece of *anything* is bug free.

Derp, keep the lights to a minimum and keep the windows and doors closed so moths don’t get in.

No, This was a deadline fuq up. Plain and simple.

A rogue power could easily use existing cell towers and use LTE and Microwave to detect drones, a separate location can receive co-ordinates, speed, trajectory info and blind fire a golf ball from a 20-40 foot cannon tube to hit the drone from a hole in the ground.

I agree it’s the end user. When you fire a bullet at a target, you are totally responsible for the damage it does. The drone in this example is just a highly complex bullet: you fired it, you’re responsible.

That recent “pedestrian” argument is idiotic. The car must never leave the road and must not kill through its actions; therefore, if a pedestrian walks in front of the car, it must only stop as fast as it safely can. The actions of other parties do not invalidate its initial decision regarding its vector; only the speed needs to be modified. Furthermore, any other programming solution would allow people to kill car occupants by throwing human-like objects onto the road in front of them. When you consider that last point, it does make the people who initially raised the issue look like complete idiots, doesn’t it?

” I was the designer. I was the engineer. And I bear ultimate responsibility if my project hurts someone.”

And yet you designed and built something for which you admit you weren’t qualified and gave it to a third party. Sounds like you should have your PE revoked for violating the ethics rules.

As to the designer/engineer being responsible. Pure hogwash. Is the designer/engineer responsible if the product is used in a way contrary to the instructions provided? I don’t believe so. So if your friend had left your clock plugged in and it caused a fire, the responsibility is solely hers.

The same is the case for the drone in your hypothetical. The responsibility is to the officer in charge of deploying that drone. The scenario is no different from the responsibility resting with the person who pulls the trigger on a normal gun, or more related to the person who leaves a loaded weapon where a child could reach it.

HOWEVER, there is a major flaw in your scenario, which I assume was a specification provided to the designer/engineer: the drone was programmed to respond with lethal force in self defence without first identifying the target. In other words, your ‘scenario’ lacks sufficient detail: “A Sundancer was patrolling the area, and mistook one of the high reaching fireworks as an attack, and launched one of its missiles in self defence”

This may come as a surprise to you, but we already deal with such incidents. They are endemic whenever an armed conflict ensues. And the use of autonomous drones doesn’t change the basic rules and responses.

I don’t have a PE. I got an 85% on the quiz at the back of the Arduino for Dummies book once.

Then perhaps you shouldn’t be making things that can kill people…

You can get killed walking your doggie.

https://en.wikipedia.org/wiki/Misleading_vividness

A minor disagreement…

“The responsibility is to the officer in charge of deploying that drone.”

I think responsibility goes a bit higher up the chain than just ‘the officer’. We cannot expect every officer to have the knowledge, ability and opportunity to fully test the device pre-deployment. The officer is really just a glorified combat troop and not a hardware or software engineer. At the very least, responsibility rests with someone who commissioned the project. To determine responsibility we need more information than what is contained within the hypothetical itself. The entire development process would need to be reviewed to determine where the fault occurred.

Responsibility would rest on someone involved with either the proposal (failure to include false-threat determination in the specification handed down to the manufacturer), the manufacturer (for a failed implementation of false-threat determination as outlined in the handed-down spec) or the top military operative (for releasing it to be available for deployment).

Isn’t this (drone example) the ‘Should Glock be sued for people shooting up a mall with their handguns…’ debate all over again? But with a different spin? (answer is no)

I agree.

If Flock made pistols that went out looking for targets, it might be. I agree, whomever sets the thing loose is responsible. Then comes the refinement of the ROE and the software that drives the machine.

ugh, *Glock. I meant Glock.

This almost illustrates the point. I enabled auto-complete but it’s ultimately my responsibility to proofread my comment.

The drones are not autonomous though.

USA drone strikes kill like 10 people per 1 actual target – and that is without even going into the validity of killing even that one target.

This ‘discussion’ is a farce.

This discussion explicitly started with “we don’t have these yet, but one day soon could, let’s talk hypothetical ethics”.

Read the article.

I agree. There is no “responsibility” now, when people launch the missiles. How do you expect there to be responsibility when so conveniently a machine can be made “responsible”? That question will only arise if a machine is killing domestic voters – if at all.

Who pulls? You could get nuts though AND build the WINCHESTER MANSION!

Visit it if you get the chance. Cray Cray widow “haunted” by the ghosts that the guns her husband made that “killed” people.

Believe it or lulz.

Boy this is an ugly mess, and lots of parties will have their opinion.

Manufacturers will want to blame the users.

The victims will want to blame the operator and the manufacturer.

The operator will want to blame the manufacturer, or their boss.

The trial lawyers will want to blame the manufacturers, the operators, the electric company (or battery manufacturer), airspace service provider, CPU manufacturer, and whoever has anything to do with it, that may have deep pockets.

It won’t be just killing. Any injuries will have people to blame.

In some ways, whoever is determined responsible is in a better position if the victim dies than if the victim suffers a severe, life-changing injury. With the latter, one can become responsible for the life-long medical care, and higher restitution.

This is a big part of why cops now shoot to kill.

Cops are trained not to kill, but to ‘stop the action’. That’s a wide definition on purpose.

I.E. Nothing stops the action like a lump of lead to the back of the head.

There was a video that’s evidence of one time cops didn’t shoot to kill. Granted, they had the time to plan out the operation and pick their position.

Let’s consider the customer who asked for the machine.

When the contract for the Sundancer-3 was under discussion, the scope of the project was discussed, including the rules of engagement. It would be a reasonable RoE for the Sundancer-3 to normally require command and control confirmation to fire, even if it autonomously flew and identified threats. After all, just because you’ve identified that caravan as carrying enemy hardware doesn’t mean you want to destroy it right now. It would be reasonable for the Sundancer-3 to be considered somewhat disposable, willing to be destroyed if it can’t 100% verify that the perceived threat is enemy action. And it would be reasonable for all these issues to be discussed in the planning and development stage, before customer sign-off.

If the contract specified RoE that allowed the “tragic accident” that we are discussing to take place, or allowed a field-programmable RoE (so different missions and different theaters could act differently), then the blame could be shifted from the engineer who designed the system to the customer/user who specified the RoE.
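To make the "field-programmable RoE" idea concrete, here is a minimal sketch of what such a configuration might look like. Every name, field and threshold below is hypothetical and invented for illustration; nothing here comes from a real system. The point is only that the policy is set by whoever fills in the fields, and that the defaults in this sketch require a human to confirm.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    HOLD = "hold"
    REQUEST_CONFIRMATION = "request_confirmation"
    ENGAGE = "engage"


@dataclass(frozen=True)
class RulesOfEngagement:
    """Hypothetical mission settings chosen by the customer, not the engineer."""
    require_human_confirmation: bool = True
    min_threat_confidence: float = 1.0   # 1.0 means "100% verified"
    authorised_by: str = "unspecified"   # who signed off on these settings


def decide(roe: RulesOfEngagement, threat_confidence: float,
           human_confirmed: bool = False) -> Action:
    """Apply the configured rules to one perceived threat."""
    if threat_confidence < roe.min_threat_confidence:
        return Action.HOLD               # not sure enough: accept the risk of loss
    if roe.require_human_confirmation and not human_confirmed:
        return Action.REQUEST_CONFIRMATION
    return Action.ENGAGE
```

With the defaults, a perceived threat at 0.9 confidence yields HOLD, and even a fully verified one only yields REQUEST_CONFIRMATION. Only a customer who deliberately sets `require_human_confirmation=False` and lowers the threshold gets the behaviour in the story, and the `authorised_by` field records who made that choice.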

That’s before you even consider that anything as complicated as an autonomous drone that can fire missiles is not going to be a one-person endeavour. At the very least there will be one person specialised in the propulsion system, one in the aeronautics, another in the weapon control system, someone else working with the sensor packages (maybe even one person specialised in handling each sensor package).

At this point it makes even less sense to blame the person who built the drone because you then have to get into the debate of who is more to blame for the accident: the sensor specialist, the weapons specialist, the control system specialist, or the people who built the sensor that couldn’t differentiate a large pyrotechnic from a surface to air missile?

As for the committee that prepared the formal RoE for the drone, they deal with “acceptable losses” all the time. We already know that about 10 times as many non-targets are killed by drones as targets. What if the committee decided that in this case the Sundancer-3 had an operational record of 1:10 (innocents:targets), a huge improvement in collateral damage over previous systems, and thus the loss of a dozen children was acceptable?

Once the committee has decided that the loss of a dozen children was acceptable, is there still anyone to blame?

The buck stops with the Commander in Chief.

Well I’ll go first I guess… in the theoretical scenario, you have people engaging in admittedly illegal actions. Illegal actions that were legitimately mistaken for another illegal action, trying to shoot down the drone.

Since the drone is deployed in a hostile area, I don’t think it is unreasonable to think that a near hit would be considered an accident so sending deadly force to combat it is completely reasonable to expect. Think about taunting a dog, and then getting bitten. Is it the dog’s fault you screwed with it? Is it the owner’s? Or is it yours?

I’d assume for the sake of these mental gymnastics that this WAS deployed in a hostile environment. If it was not in a hostile area, why in God’s name would you have it up there in the first place? IF it was in a non hostile environment and it blasted a bunch of kids to the next world then the ‘deployer’ is to blame, not the machine or its creators.

The expression ‘War is hell’ was coined for a reason. Some may call me a cold hearted bastard for saying this, but no war fought in the history of man has ever been without collateral damage. Yeah, it’s a nice idea to make it all sanitary and nice, such that only the bad guys get killed, but in reality, that’s not the case nor will it ever be.

One issue I take with your argument is comparing the drone to a dog. You can see the dog you are taunting. The drone will be much less visible. If the drone is meant to be seen, it best damn well be big enough to laugh off most artillery.

Seeing the dog is irrelevant. Make it a hornet’s nest.. you wandered by it, annoyed them, and you got stung.

In the case here, the author said they fired off illegally obtained fireworks. Well, the first mistake is on them. Oh they’re just children you say? Ok, where are the parents then? Not watching the children because they too, are out doing illegal things that the drone is probably looking for? I fail to see the problem.

This would be a hornet’s nest that an enemy of your locality’s leadership has placed in your area.

Not a natural, random phenomenon.

This is like saying a loaded gun left unsafely in public is not the responsibility of the gun’s owner.

As an engineer I would say that personally I would never want to work on a truly autonomous weapons system.

As to the point that the engineer would be responsible, I would say that it’s bovine excrement. The engineer will always do what he does to build the safest product possible. Design choices, programming, decision making matrices, etc. will all be worked on by many different people, all of whom will probably be working to get things as correct and right as they can. None of them will be intending to get someone killed; all of them will do their best to make sure it doesn’t happen.

So how can you hold the engineer responsible if the Sundancer encountered some sort of event far outside any scenario thought up by the engineers, a combination of events that works its way through a decision-making system in a way never anticipated or dreamt of by the engineers?

On top of that, no product is ever released when the engineers say it’s ready. It’s released when it’s good enough and the managers and administrators and salesmen say it’s ready. All products are built to a price and that means at some point the testing and development has to stop and someone must make the decision that it’s good enough to sell. That decision is usually not made by the engineers.

This is also true and I agree.

In the hypothetical case, the person who wrote the requirements and test plan is responsible. The engineer shares some responsibility depending on how they reacted to the given requirements.

In many software systems, coding errors can lead to deaths. In the hypothetical case though, the people that designed the requirements for the system determined that the drone should react with lethal force to any perceived hostile actions. It can be argued that the software was just responding to design and mission requirements.

I’m actually envisioning the drone firing at pictures of people it’s supposed to destroy. Such as those held up on banners during funerals and/or protests or even on big screens during newscasts. Of course one would hope that in such a system, facial recognition would be also tied to recognizing a human attached to that face, and the carrying of a weapon and/or hostile intent.

Well now this is getting further out into never never land…

“Of course one would hope that in such a system, facial recognition would be also tied to recognizing a human attached to that face, and the carrying of a weapon and/or hostile intent.”

My answer would be that this is years in the future, so the development of the skynet processor has been around for a couple of years and they have an arduino shield for it, so it really has a lot more computing power. We are getting to the point of ridiculous now.

The problem with hypothetical situations is they aren’t real, and are rarely thought out to the degree necessary to make any kind of a rational decision other than the one the original author intended, because he or she tailored the conditions to prove their point.

Autonomous weapons systems already exist. They are called land mines; also sea mines.

Legally or morally?

So you say that indiscriminate harm is wrong: “The guns basically shot anything that moved. In real life, this would be an extremely irresponsible machine to build.” Ever heard of land mines?

You are too quick to absolve the manufacturer. The car analogy is a poor choice. A land mine is a better comparison. The Sundancer manufacturers _chose_ to build and profit from a machine _designed_ to kill people. Morally, they must accept some responsibility. Legally? That’s up to the lawyers and politicians to hash out.

Ultimately though, the bulk of responsibility lies with the owner of the device. (Which brings up an interesting observation about license agreements: If the manufacturer chooses to only lease portions of the device, then they continue to retain some (moral) responsibility. I wonder if this might finally break open some of those stupid EULAs.)

The real problem is that for many of these machines their basic operation doesn’t differ that much from legitimate and peaceful use.

Take a hypothetical drone used for filming surfing or some other sport, with automatic trigger control of a still camera. Such a drone would (minus facial recognition) essentially be a weapons platform, designed to track and target a payload (usually a camera).

Current civil law in the United States would argue that the manufacturing company has responsibility but it’s not a privately held unit and it’s operating in a remote village, outside US territory. So it’s a slightly different argument than the autonomous car argument.

As noted, the same basic argument has been raging in the world of autonomous cars and will likely continue to do so. It’s not the driver who is at fault (they were not driving) but current US law would still hold the car’s owner responsible. Although of course legal action would also target the manufacturer. It’s unclear who would currently be responsible but the law doesn’t recognize machines as anything other than machines. Adding the ability to fire at something automatically? Without being able to also “understand” anything except pattern matches? That’s problematic.

It doesn’t know what it sees, it just sees a match. But that match would be a match that lacks internal representation of things that allows us to describe them. Computers know nothing of the context. And that’s invariably going to lead to all sorts of issues.

http://www.scientificamerican.com/article/teaching-machines-to-see/

“To their surprise, the resultant images were often completely unrecognizable, essentially garbage—colorful, noisy patterns, similar to television static. Although the ConvNet saw, with 99.99 percent confidence, a cheetah in the image, no human would recognize it as a big and very fast African cat. Note that the computer scientists did not modify the ConvNet itself—it still recognized pictures of cheetahs correctly, yet it strangely also insisted that these seemingly noisy images belonged to the same object category. Another way to generate these fooling images yielded pictures that contain bits and pieces of recognizable textures and geometrical structures that the network confidently yet erroneously believed to be a guitar. And these were no rare exceptions.”
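The effect described in that quote can be reproduced in miniature. The sketch below is not a ConvNet and has nothing to do with the code used in the cited research; it assumes a toy linear softmax classifier with random weights (all names here, such as `make_fooling_input`, are made up for illustration). It starts from random noise and nudges the "pixels" uphill on the classifier's confidence for one class, which is roughly how fooling inputs are generated.

```python
import math
import random


def softmax(logits):
    """Turn raw class scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def scores(weights, pixels):
    """Linear classifier: one dot product per class."""
    return [sum(w * p for w, p in zip(row, pixels)) for row in weights]


def make_fooling_input(weights, target, n_pixels, steps=200, lr=0.1, seed=0):
    """Start from noise and do gradient ascent on log p(target | pixels)."""
    rng = random.Random(seed)
    pixels = [rng.uniform(-1.0, 1.0) for _ in range(n_pixels)]
    n_classes = len(weights)
    for _ in range(steps):
        probs = softmax(scores(weights, pixels))
        for i in range(n_pixels):
            # For a linear softmax model the gradient of log p(target) with
            # respect to pixel i is w_target[i] - sum_k p_k * w_k[i].
            expected_w = sum(probs[k] * weights[k][i] for k in range(n_classes))
            pixels[i] += lr * (weights[target][i] - expected_w)
    return pixels


# Hypothetical toy "classifier": 3 classes, 64 input pixels, random weights.
rng = random.Random(42)
N_PIXELS, N_CLASSES = 64, 3
WEIGHTS = [[rng.gauss(0.0, 1.0) for _ in range(N_PIXELS)] for _ in range(N_CLASSES)]

fooling = make_fooling_input(WEIGHTS, target=0, n_pixels=N_PIXELS)
confidence = softmax(scores(WEIGHTS, fooling))[0]
print(f"confidence that the noise is class 0: {confidence:.4f}")
```

The input never resembled anything, yet the reported confidence ends up very close to 1. A confidence score only says how strongly the pattern matched, not whether the thing is actually there.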

If the manufacturer knew about this possibility and did not share this info with the party that employed this drone, the manufacturer will shoulder a large part of the legal burden. One would think Asimov’s laws would have been given higher priority and avoiding attacks would be higher on the list.

If Asimov’s laws had been given higher priority, it could never have been built.

I was thinking that it was a software problem rather than a hardware issue; we have no hardware-based AI (yet). Or ultimately it would be the fault of the person who signed off on the deployment.

In a nuclear reactor safety system failure caused by lightning, an earthquake, or a meteor strike (basically an unforeseen event), who is currently responsible?

The government who signed off on the construction?

The builders of the reactor?

The operators?

The owners?

The IAEA for failing in their safety audit?

The public for electing the government?

Mother Earth… she caused the condition, and provided all the materials for it to be built, and therefore was the sole creator and cause and arbiter of the entire string of events from cradle to grave.

Ok… too much caffeine…

Blame the universe!

You didn’t mention the culpability and responsibility of the operator. Even without a human pilot (in the vehicle or remotely operated), the vehicle itself did not decide to go fly around that day with deadly weapons and the programming to use them if it saw fit. I am an engineer, and I do believe that engineers should build in all the safety that is applicable to the device being designed, but the reality is that you cannot design it so that it will never cause harm under ANY circumstances. It is generally the operator that operates the device, and it is generally the responsibility of that operator not to operate it in a situation where the device could cause harm. If they do, they bear that risk and responsibility.

I think it’s important to separate the ethics of knowing involvement in a project whose intent is to do something terrible, and negligence.

Going back to Therac-25, the software developers, who didn’t consider proper test cases and integration testing and made some serious rookie mistakes along the way, were grossly negligent. The management, especially project managers, knowingly released a product that was not thoroughly tested and dismissed the resulting fatalities as user error.

Both are unethical and should be held responsible, but doesn’t some of the responsibility for putting a crappy developer in a position to make dangerous choices fall on his superiors?

We already have this playing out quite often in a pretty close story line in “smart” munitions and cruise missiles…

Sure, someone has to push a button somewhere at some point in the kill chain, but with a drone, you have to do the same thing. “Someone” has to push a button to tell it to go do its thing. Even if it goes and loiters and decides on a target by itself, someone has to push it out to a flight line and launch it with the expectation that it may kill something.

With smart bombs and cruise missiles a target is typically agreed upon before pushing a button. The result is the same, something is going to die.

Often the operator has no actual eyes on the target. Intelligence folks decide the targets and program them into cruise missiles or give pilots coordinates to drop on. They may or may not verify if the target is actual. Hopefully they’ll hold if they see something of concern, but even with the level of sensors we have now and the ability to read license plates from space, a pilot only has so much intel to go on, and they typically don’t dwell long enough to know to 100% certainty.

There are plenty of cases where bombs were dropped and they hit what they were supposed to and no bad guys were home and the target happened to be a hospital or school building and no-one goes to jail or is held responsible. Same thing when the munitions “miss” and hit the next building over. We don’t go hanging anyone at Northrop or Raytheon over it.

But the overriding responsibility for the kill chain would probably be the same as it is now…

If the requirement is to design an autonomous killing machine, then the person who came up with the requirement should be held responsible. Not the engineer who designed it. It did fit the requirement to kill someone autonomously. Judging whether the person killed is a civilian or not is up to the human who controls the deploy switch. Just like any weapon, don’t accuse the tool maker. The tool did its job.

Engineers do a feasibility study before they commit to any project. The feasibility study lets the higher-ups know what’s possible and at what rate of success. Engineers just give a matrix of functionality and the scores possible with current technology. In the end someone signs off. Some engineers drop out as a matter of conscience. Others are locked in because of the paycheck, family, and financial stuff. There is also a group that thinks they work for the country when the biggest beneficiaries are the stockholders. No one would willingly work on a project that can kill people accidentally. At least a human with average intelligence would not work on such requirements.

So let’s say you’re working somewhere and are asked to work on a project with some heinous element, perhaps adopting puppies under a fake identity to use their corneas for smartphone lenses. You’re living paycheck to paycheck with a family in tow, and will be on the street quickly if you leave your job. You also know that the pile of NDAs you signed when you started and the company’s legal resources would bury you if you leaked any of this. Your options are limited to reporting it to higher ups and hope not to be canned for dissenting, leave silently, or suck it up and keep working.

It’s a shitty place to be, but when the company’s on trial and the lawyers raid the project documentation, your name will still be all over the project.

Dat sarcasm tho… lol!

In an ideal world the company will go to trial. In the real world it’s called National Security, which means anything can be done and no one is accountable because safety is not worth risking, according to the people doing the stuff mentioned in the article. How many raids have been done on Lockheed or Boeing? Heck, even the NSA? Not going to happen, ever.

Look man, seen it and sick of it. Of course it’s fun to talk about it like a cafeteria chat. HaD, you, or me can’t change anything. Give it another decade and see: people will just agree and keep moving on, just like with phone tapping. Using the keyword National Security, anything is acceptable. So there we go. National Security: no one is accountable. We engineers design and give it to people who can’t make a decision. And now it’s the engineers’ fault? The author of the article talks about the company that designed it and not the person who called for the deployment.

Apart from the main comments:

I’m waiting for the F-35 story. It’s going to be like this: engineers design a beast of a machine (on paper) and park it in the hangar. Then comes the drunk teenager (the person who can command) and makes up a reason to take it out for a ride to show it to others. Let’s see how engineers are going to get blamed for that. Stay tuned for 5 years.

This type of accident should never happen and probably never would happen.

1. The drone would only have a self-defence mode active in a combat zone. One good thing about a drone is that they tend to be cheap and you do not have a pilot at risk. If you are working in an insurgency area you would not have a self defense auto fire mode.

2. All known schools, hospitals, and other safe havens would be marked on GPS and the drone would know not to fire on those in auto target mode.
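The two conditions above amount to a simple interlock, which can be sketched as follows. This is only an illustration of the commenter's idea, not how any real system works: `ProtectedSite`, `autofire_permitted`, the coordinates, and the radius are all invented for the example.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, good enough for a sketch


@dataclass(frozen=True)
class ProtectedSite:
    """A marked safe haven (school, hospital, ...) with a keep-out radius."""
    name: str
    lat: float
    lon: float
    radius_m: float


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two lat/lon points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def autofire_permitted(lat, lon, in_combat_zone, sites):
    """Point 1: never outside a combat zone. Point 2: never near a marked site."""
    if not in_combat_zone:
        return False
    return all(haversine_m(lat, lon, s.lat, s.lon) > s.radius_m for s in sites)


# Made-up example data.
school = ProtectedSite("village school", lat=10.0, lon=20.0, radius_m=500.0)
print(autofire_permitted(10.0, 20.0, in_combat_zone=True, sites=[school]))
print(autofire_permitted(10.1, 20.0, in_combat_zone=True, sites=[school]))
print(autofire_permitted(10.1, 20.0, in_combat_zone=False, sites=[school]))
```

Note that the check is only as good as the list it is given. With an empty or outdated `sites` list, a location inside a combat zone always passes, so an unmarked makeshift school would not be protected.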

As to who is responsible? If the drone worked as designed then it is the deploying forces for not marking the school as a no autofire zone. You could also blame the idiot that decided to shoot off fireworks in a war zone!

What everyone is missing is that a human crew could make the exact same error.

So you start out in a war zone and query somebody for a list of all schools, hospitals and such? That list is updated instantly when makeshift facilities crop up and contains no false positives too of course, right?

If a school or hospital is not marked then how are you supposed to know it is a school or hospital. I am not talking about a drone but just in regular warfare.

In a regular war you don’t hold school on a battlefield. The case of the Sundancer is more of an irregular or insurgency conflict. In that case, yes, the schools, religious buildings, and hospitals would all be marked. But the real key is that in an insurgency type of conflict the drone would not have a self defence auto fire mode. You don’t risk taking out a kindergarten to save a drone; it is that simple.

What people do not seem to get is that there is a clear order of responsibility in all military conflicts.

1. Your civilians. You protect them at all costs.

2. Your military.

3. Enemy non-combatants.

4. Enemy military.

Yes, if the enemy puts a SAM site in the middle of a school it is a valid target. It is the enemy’s fault, not only for failing to protect their civilians but for making them a target.

No it is not nice or pretty but it is war. You can not make war nice or pretty.

Totally agree. I guess that’s one reason why UAVs are made: to lessen human casualties. But if this drone kills more innocent civilians (as opposed to having a pilot killed) then a UAV would be useless. It should sacrifice itself if the only option left is to harm innocent civilians.

Actually no. Enemy civilians are below your military. If you have a SAM site in the middle of a school then taking it out and the school with it is legal. If you take it out without causing collateral damage then it is best to do that. For example hitting the school at 3am or on a day that it should be empty. The Enemy in this case is responsible for any deaths by putting the SAM site in that location.

A large benefit of a drone is that you are trading a cheap machine instead of an expensive machine and one or more human lives. Now if a drone was shot down or reported back that weapons were at that location then it would be up to the planners to decide to strike the threat or avoid the threat.

Most (serious) AI implementations follow Asimov’s rules of robotics. If these are still considered valid (and I don’t know of a single grown up who would disagree :-) ), the “drone” simply does not HAVE the choice of killing people to protect itself. In fact, the autonomous drone itself would be a monster, designed by monsters and contracted by monsters.

There just isn’t anything morally right to constructing autonomous killing machines. Period. It seems pointless to find a “responsible” for something so completely flawed from the beginning (except for legal fun, but killing people isn’t about attorneys having orgasms).

If you want to create artificial intelligence, you either have to live with such intelligence turning against its creators (and maybe killing innocents, as this is EXACTLY what you want by not implementing a 100% secure rule against it) OR you have to construct it following definite guidelines.

Like Asimov’s.

It’s not an accident, either. The purpose of that machine is to kill. That is the whole problem.

You state that AI follows Asimov’s rules of robotics as if it were fact, but those are purely fiction right now. We can’t actually implement them right now.

+1 Jason. We are not capable of making any current machine follow Asimov’s laws. Even if we COULD… those laws were flawed intentionally for the sake of good story writing, and his stories centered around the ways the laws fell apart, and led to death and destruction. People are always quick to point to those 3 fictional laws, which were even flawed in fiction.

Also, most of the stories I have read are about when the three laws break down and cannot cover the situation. So even the author questions whether they are sufficient. Why would anyone want to use them in real life?

Asimov’s rules were a plot device invented for an interesting (more or less) novel. They are formulated in very vague and human-centric terms, requiring the implementer to be able not only to foresee the consequences of actions in arbitrarily complex situations, but also to value them as a human would. That doesn’t just require human-level AI; it requires a much more advanced project that, if developed, would be much more useful put to solving other problems than this.

In short, there are exactly 0 AIs following Asimov’s rules, and there isn’t any practical chance that number will change in the foreseeable future.

The International Committee of the Red Cross (ICRC), which is the curator of some of the treaties that form the basis of international humanitarian law (IHL), for example the Geneva and Hague conventions, has some articles related to these issues.

These two links may be of interest to some:

https://www.icrc.org/en/document/lethal-autonomous-weapons-systems-LAWS

https://www.icrc.org/en/document/report-icrc-meeting-autonomous-weapon-systems-26-28-march-2014

Thanks for sharing, very good source on the topic.

Just read these papers on the current human-controlled drone war by the Snowden journalist Glenn Greenwald: https://theintercept.com/drone-papers – most of the decisions now involve humans, including the president of the United States, and even in this case civilian casualties still happen. Some are unwanted; some, like those that come from treating every male seen close to a target as an enemy, are tolerated, because only women and children will cause public trouble? If you leave more autonomy to the machine, the basic strategic questions, like who is an enemy and what is war, will not change at all.

We have had machines capable of killing without human intervention for hundreds of years already. Mines. And there is no doubt who is responsible for any deaths resulting from their deployment: whoever deployed them. And it doesn’t matter how “intelligent” they are, and that they were designed to only detonate when a tank triggers them, etc.

Today they are banned by a number of international agreements (which doesn’t stop *certain* peace-loving countries from using them).

Whoever puts deadly weapons, no matter how programmed, among civilian population, is wholly responsible for any casualties that result.

Dead on. Pun intended.

This also illustrates why the civilian population should be left out of war time activities, that is of course if you actually plan on winning said war.

Agreed dassheep. I’ve been bouncing back and forth on which side I’d stand on, but your post has swayed me. Well said.

If you have to ask about responsibility, then chances are that you are not a PE.

Most places (except one-man operations) have a PE in charge of products. The company may hire regular engineers, technicians, and programmers who are not PEs. Ultimately the PE is responsible for overseeing and signing off on the product(s) and for making sure that the individuals and projects follow best engineering practices and whatever standards apply. There is usually a person responsible for looking after the safety aspects of a product.

For regular consumer products, there are the usual safety compliance labs (UL, CSA, etc.) that make sure the product follows safety standards as part of the legal requirements. OP’s *alarming* alarm clock would, first off, be without safety approval, and OP would certainly be held liable for any accidents it caused. The usual homeowner’s insurance would not cover the resulting damages. You can get local hydro approval for low volume products that you plug into the wall.

For military contracts, there are usually very detailed specs as part of the contract that dictate what the system should do and how it should react. There is strict system-level requirement tracking in place, plus an acceptance test suite, to make sure that the final product does what it is supposed to do. If your product doesn’t follow the specs, then the company is at fault. The specs are usually very clear on life or death situations and what the default actions should be.

Ooh, I read a sci-fi book about this a while ago. It’s called “Kill Decision” by Daniel Suarez. He has some other cool ethics/technology themed books, too. I guess he’s an engineer or programmer or something because his books always feature some pretty good hacks.

The answer is simple: all parties involved should be held equally responsible in full! This is the ONLY way to ensure an autonomous machine with kill decision logic like this is not engineered, manufactured, tested and then used unless several layers of real people are willing to bet their freedom/lives on the machine’s performance.

The only entities that would ever be able to use such a machine are the government, its various agencies and the military and all of these are famous for rewarding failure. And when they are unable to reward failure due to public outcry then they find a scapegoat so that in the end those who are truly responsible always walk free. This is why autonomous machines with the power to kill CAN NEVER BE ALLOWED!

Blame won’t change a tragedy, and a lack of blame will prevent resolution to a device or protocol that causes tragedy. Software can never quite be perfected, but neither can human decision making, the difference being that most human beings have the desire to avoid killing, even when within protocol they are allowed to.

Conclusion: Don’t make machines that kill without a human in control.

Two examples from long ago: In Georgia (USA) were two guys with the same name and identical faces, although they were not related. One did a crime, the cops locked up the other. Fortunately it was not a death sentence.

In England, a cop decided to use his radar gun to find out how fast the fighter plane was flying. Again fortunately, the response was a radar jammer rather than an anti-radiation missile.

We get closer every day…

That RADAR gun thing is nonsense though, pretty much debunked, and even if a RADAR gun was detected, you bloody well won’t shoot onto English soil, nor likely have a HARM missile on board anyway.

And do they even use handheld RADAR guns in the UK? I don’t think they ever did actually.

Exactly. An RAF plane over the UK would never carry a HARM or an ALARM. They are expensive and dangerous and of no use over the UK. They are only carried in combat or a very rare live fire exercise. Most combat flights over home nations are completely unarmed and are for keeping up flight hours. Even when they go to a range they often carry practice rounds. Real live fire flights are super rare and nobody would ever launch any type of anti-radiation missile over a home country outside of a very carefully controlled range.

Honestly I doubt they would even have jammers active since it could cause problems with ATC radar and maybe even weather radar.

No it never happened.

http://www.snopes.com/horrors/techno/radar.asp

Wow, it is a sad day when I have to snopes someone on HAD.

It was reported in Monitoring Times when it was alleged to have happened, and I took their word for it. Sorry.

Wow that is really disappointing. I would expect better from the Monitoring Times. Just goes to show that you should always google it before you post it no matter the source.

The person responsible is the person who commissioned the project and instructed the manufacturers to build it.

It is ultimately their responsibility to have the design checked and double checked and it is their responsibility to make sure the manufacturers can build it to the design and quality specified.

I think my answer is simple and correct but please tell me what you think.

The “Ottawa treaty” still needs to be signed by the US, Russia, China and others.

https://en.wikipedia.org/wiki/Ottawa_Treaty

This is not a technical dilemma, the scope of this article and the following discussion is missing the point. Autonomous killing machines have existed since ancient times and they are banned by most countries. The point is not how to make a robot always make the right choice, it’s about making the US sign a piece of paper.

I think since as you say those things have existed for a long time, and the big countries never signed, that they obviously won’t likely sign a ban on killer robots either.

So facing that reality we are back to square one: who will be responsible.

And the answer is simple: ‘they’ are. When the West has a killer device do something nasty and they feel the public won’t like it to an extent that it becomes problematic, they will simply blame China or Russia. Case closed.

>they are banned by most countries.

Yeah, Uh-huh. So is a Dead-fall or a Spike Pit a robot since it is mechanical, obeys laws of physics and is triggered when some parasite life-form goes where it is not supposed to?

Those things are passive and not reactive.

And I doubt they are allowed according to the rules of war anyway.

The point was that the correlation is a non human-controlled murder apparatus that is unable to make a difference between good/bad/civilian/unarmed people. Or as I said wildlife or even pets.

In the described scenario, in which a 3rd country has likely invaded another country simply for ‘regime change’ and other economically driven goals, I think only the operator can be blamed.

Bombs explode, that is what they do – the person who throws the bomb is the bomber – no matter if their bomb malfunctions.

There are quite a few odd absolutes in this article.

For instance, “The officer will respond with deadly force every time, protecting themselves when threatened in the line of duty”. That might be the habit in the US, but it’s not an absolute: you can take cover, and shoot someone once or even in the leg, unless the attacker explicitly opens fire. In other countries that has been shown to work fine, even with people having real guns.

Another example: “If such a device were built and it took an innocent life, you can rest assured that the manufacturer would be held partly to blame.”

That’s obviously not true. The world is knee-deep in mines, people (civilians and post-conflict soldiers alike) lose legs or their lives in places like Vietnam all the time, and I don’t think the manufacturer of mines was ever asked to pay up.

Plus they already make automated gun turrets, employed among other places along the Korean DMZ.

And when designing these types of things they actually worry about wildlife setting it off more than about ramblers doing so I gather..

Conscience. You cannot “say with a clear conscious”. I don’t fault authors making spelling, grammar, and word use errors. But does HAD not have an Editor or Editor-In-Chief? Do you even edit, bro?

In the United States legal system I know the answer.

Whoever has the most money to take from is who gets sued.

Legally, it is a military operated device. That means if the attack happened in a country that the owner was allied or neutral with, and the attacked country was big enough to put up a serious fight, it would be a problem because it never should have been there to begin with…it could start a war just getting shot down, never mind the slaughter. If it happened in a country that is neutral but not big enough to fight, it wouldn’t matter at all in a legal sense (just look at Pakistan).

From a “political morality” sense, the president of the USA has knowingly killed US citizens who have not been tried or even accused of any crime in neutral countries by drone strike…a few protesters and such got mad…but they would happily vote him in for a third term, so not that mad. That basically clears all operators and the chain of command from political fault as well…unless they want a scapegoat, in which case they blame some low level tech for not doing a software update on that unit, in spite of the fact that the update wasn’t released until a month later…or something like that. Heck, they might even use that to wriggle out of a situation with a country that they didn’t want to fight. The manufacturer, for their part, would almost certainly have put in variables for the military to use…“self defense level” ranges from 0-99…it ships at 10 and the military is told to step it up slowly until it is using a reasonable amount of self defense. They would blame the military for stepping it up too much, too fast, without enough testing…they would, except that it would be classified and the military would protect them for that reason alone, if not for the fact that they are a military contractor and therefore almost untouchable anyway. Again, a scapegoat option is also possible here…if I had a small software firm and was offered a contract to write code for such a drone from a company that I knew had plenty of their own software engineers, I’d be more than a little concerned.
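To make the “self defense level” idea concrete, here is a minimal sketch of such a tunable setting. The 0-99 range and the factory value of 10 come from the comment; everything else (the `SelfDefenseSetting` class, the `MAX_STEP` limit, and the change history) is an assumption added for illustration, since "step it up slowly" only means something if the steps are bounded and recorded.

```python
from dataclasses import dataclass, field

MIN_LEVEL, MAX_LEVEL = 0, 99   # range given in the comment
FACTORY_LEVEL = 10             # "it ships at 10"
MAX_STEP = 5                   # hypothetical cap on a single increase


@dataclass
class SelfDefenseSetting:
    level: int = FACTORY_LEVEL
    history: list = field(default_factory=list)  # (who, old level, new level)

    def set_level(self, new_level, changed_by):
        """Change the level, refusing out-of-range values and big jumps."""
        if not MIN_LEVEL <= new_level <= MAX_LEVEL:
            raise ValueError(f"level must be between {MIN_LEVEL} and {MAX_LEVEL}")
        if new_level - self.level > MAX_STEP:
            raise ValueError(f"cannot raise the level by more than {MAX_STEP} at once")
        self.history.append((changed_by, self.level, new_level))
        self.level = new_level


setting = SelfDefenseSetting()
setting.set_level(15, changed_by="field unit")
print(setting.level, setting.history)
```

A record like `history` is what would settle the argument the comment imagines: whether the military stepped the value up too fast, or the manufacturer shipped something unsafe at the default.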

If we talk about actual blame, it can go to many at many levels.

The country in question will have a top leader or council controlling the military…the person who approved the device or the council members that voted in favor of it either without bothering to research the risks or in spite of them will be to blame. Most military organizations have some division dedicated to testing and researching new weapons. These people too would be to blame if they greenlit a software package that could not identify fireworks, especially since these things take years and some of the engineers would have seen fireworks in that time. If they greenlit only parts, asking for changes in the self defense mode that were not granted…and then someone higher up greenlit deployment anyway, then the testing division would not be to blame, but the person who greenlit it in spite of the problems most certainly would be.

The company making it, the engineers designing and programming it…this one goes back to the variables included in the thing. The engineers really would want to include such things because most software engineers have never been fighter pilots and thus really don’t know what a fighter should do. If they forgot to include fireworks as “safe” in spite of seeing fireworks shows, then you can probably blame them. If they didn’t do updates after the military testers requested a fireworks detection algorithm, then you can certainly blame them (or possibly management that wouldn’t give them authorization to write said updates).

Of course, the whole issue may just come down to how good they are at making decisions overall. The number and percentage of friendly and civilian deaths caused by Predator drones is extremely high and it is basically a non issue politically and legally. If the AI gets more bad guys and fewer good guys, the occasional school destruction is just the price of fewer cafe & wedding attacks. The person who designs it, markets it, tests it, and approves it is making a bad thing less bad. If that person is not in a position to simply stop the bad thing entirely, then that person is not to blame.

So, when it is all said and done, the person to blame is whoever started the war that caused the drone to be launched in war mode. If in peacetime mode, the thing would just let itself be shot down and it would then keep track of the attacker with satellite or wingman drones until ground forces could give them the chance to surrender…and that would go even if it actually was attacked with an RPG or something.

The kids are to blame because they used illegally obtained fireworks. So if they had played by the rules nothing would have happened.

I would have to say that the only person responsible is the one who looked at the whole system, designs and all, and decided to deploy it. You can argue that the person who approved the system for use may not have reviewed everything, or didn’t know that some things were built wrong and that the responsibility for that check rests with someone else, but it does not. If I were to design a system like the one described I would open up all of my designs, code, construction processes… everything. With everything laid bare for the customer to see the responsibility would rest with them to decide to use it. Get in all the experts you want to OK it, read up on coding practices, metal forming, aerodynamics, expert systems… but at some point a decision has to be made. I will attest to the intents of my design and how I went about implementing them, but if you decide to use my system then it’s on you if it fails. I hid nothing, I guarantee nothing except my best effort.

It may not be realistic to expect a company to protect every bit of IP with NDAs (most prefer security through obscurity for some reason) and tell everyone involved all the details, but I can’t think of a better way to put the responsibility where it belongs.

To me the owner has the responsibility.

He is the one who ordered the machine and who released it into the wild. Anyone who orders such a device knows that it is not perfect. Moreover it is the (moral) role of the engineer and the manufacturer to make sure that he is aware. Ultimately, the owner is (almost) the only one who can either start or stop the machine.

This kind of accident could have already happened. Hopefully (we’re lucky and) military officers think it would be too irresponsible to let a machine fire without confirmation. I hope this mentality won’t change.

It will surely change the day machines can think.

I’m convinced this is basically the problem that will stop the deployment of machines that operate in the real world and are that autonomous: they’re either going to be too dangerous to allow, or too safe to be useful. If and when machines can ride that line, we might as well give them the right to vote.

I blame the citizens of the country deploying the autonomous drone for allowing their country to become so corrupt that the politicians and military complex were allowed to realize such a terrible idea in the name of a fat government weapons contract.

When things go wrong the people having to live their lives under an autonomous drone would blame the country of origin and the citizens themselves comprise the majority of that country. What difference does it make if WE blame Lockheed, Boeing or a General when the indigenous people will be blaming the whole and not just the scapegoat?

Do you think the victims in the Middle East blame Blackwater or America?

Do you realise that in many cases less than 50% of citizens voted for the government that is in power at any given time? So how can you justify blaming all of them for anything?

“the government that is in power” is not the result of a one-time vote but is a cumulative result of decades of indifference, accepting the status-quo and not taking the effort to really understand what is going on.

I believe that deep down inside them everybody knows that waterboarding *IS* torture, that governments *DO* lie and that they lose the right to bitch about things not going right when they couldn’t be bothered to actually vote and hold elected representatives accountable.

Regardless, the point of my comment was that it would be wrong of us (I’m speaking as an American) to deploy such a device. I do not feel that the majority of Americans would be content knowing that such a device was patrolling the skies over our own heads, and logically speaking it would be wrong for us to deploy such a device to the airspace of another sovereign nation. How would Americans feel if, say, North Korea (or Iran, or Pakistan, or Canada, or ????) deployed such a device over an American territory? Pretty sure the Left, Right AND Center would be pissed and there would be a call to war from the general population. So what makes it right for the U.S. to deploy such a device over another country? It’s like the Bin Laden assassination: Americans would be fucking livid if another country invaded our sovereignty to kill someone they had a beef with. But America is special. We know best and there is no other country on the face of the planet more qualified to be the World Police. How many countries do we have military bases in vs. how many other countries have bases inside our borders?

Point was that it would be fundamentally wrong to actually deploy such a thing, yet we’ve reached a point where such a concept isn’t all that far outside of realistic consideration. Furthermore, I believe that such a day is inevitable and that it would already be done if such a thing were realistically possible with current technology.

I understand why some of our enemies are not our allies and I can’t say that I blame them. It comes down to the idea that ‘one man’s terrorist is another man’s freedom fighter,’ and I know for a fact that if there were a foreign force occupying my neighborhood then I’d either be dead already or I’d be blowing shit up. It’s called being a patriot and I understand why someone halfway around the world feels the same. I feel sorry for the troops that get deployed to those places and end up dead for some politician’s agenda and to justify why we need bigger and better so we can kick their asses even harder.

There is no way you can get away from the fact that your comment was effectively justifying collective punishment. So in terms of “moral high ground” you jumped into a very deep well of evil.

Okay. Then I will quote my initial comment…

“Do you think the victims in the Middle East blame Blackwater or America?”

Now. Do you think the Jihadists will be screaming “Death to Blackwater” or “Death to America”? Transpose that onto the hypothetical question at hand… autonomous drone kills kids with fireworks… will the hate backlash be focused on Lockheed or America? Will the condemnation of a single software developer be enough to quell the conflict that arises or will it take more than the blame placed on a single head?

Jihadists scream whatever they are told to scream by their masters.

lol. Okay Dan. 10/10 for dodging the point.

You are evil, get over it. https://en.wikipedia.org/wiki/Fourth_Geneva_Convention#Collective_punishments

And I live in the Evil Empire, so go figure.

I don’t need your link. I know what Collective Punishment is. You are speaking of a formal, legal responsibility. I am speaking of a moral responsibility. You are speaking of a scenario in which somebody has to be held accountable and punishment meted out. I am speaking of what would be in the hearts and minds of the people victimized.

Call me evil if you like, but I’m not the one blowing up innocent women and children at a rate of ten-to-one compared to intended targets in the name of cronyism and a bigger bank account. Evil, sure. But I have zero difficulty sleeping at night so I guess I’m okay with it.

::carries on doing the backstroke in my own private ‘well of evil’::

“Evil, sure. But I have zero difficulty sleeping at night so I guess I’m okay with it.” yeah, and that is why most evil people continue to be evil too.

Good people admit when they subscribe to concepts that are actually against the principles they claim to uphold.

Waving your finger at an entire nation is idiotic, it is the essence of discrimination and prejudice to see large groups of people as guilty, morally or legally, for anything without being able to provide proof against each and every individual. It is the sort of “convenient” thinking that leads to genocides.

::yawn::

I look forward to your next attempt to foist your moral superiority on the rest of us, LOL.

You do realize that the US is not the world, right? In some countries it’s not indifference, but the fact that there are more than two viewpoints and therefore a majority will not be represented.

You do realize that in most countries there is no vote, right? Actually being able to vote is a luxury held by a small minority.

If 100% can vote then not voting also is a choice, and it doesn’t matter if less than half do in regard to the country’s responsibility.

Why do you even bother to comment? Your logic is totally broken. Since when did a government get 100% of votes?

Targeted trolling now?

They seem to blame Blackwater more, but I’d blame both.

The Phalanx CIWS, in one form or another, is on a lot of naval ships, and the Centurion C-RAM is a land-based version of the same thing. They can fire completely autonomously under the right conditions, as they are meant for close-in, last-ditch defense. Yes, there have been accidents, but they are still in use. Change the problem to “some kids in a Zodiac speeding towards an aircraft carrier fire off some fireworks towards the R2-D2-looking thing on the deck and ...” and we could be talking about current events.

Of course it is not the engineer, at least not as long as the attack was consistent with the paradigm the machine was intended for.

There is a whole chain of possible responsibilities. Before the engineer it will always be the company designing the thing and before that it will be the user.

Pushing responsibility up the line, from army to engineer, depends on the specifications that were set and accepted.

Of course, if the army specified ‘must be able to distinguish between fireworks and missiles’ and it doesn’t, then yes, it may be the company’s fault. Even if it turns out the engineer conned his employer, it will still be on the company’s shoulders, though the engineer might get prosecuted too.

And even if nobody did anything wrong, other than using it, the question could be ‘was it foreseeable?’

Of course it is all unimportant, as e.g. the US army is known for mistaking Afghan weddings and Doctors Without Borders hospitals for terrorist training camps and blowing the hell out of them WITH human decision, and they have made 10,000s of victims as ‘acceptable collateral damage’ in the Iraq war, so it is a somewhat irrelevant question.

It does become relevant if, say, that drone suddenly and automatically started a mission from Lackland AFB and shot some hikers in Pearsall Park.

Well, the responsibility lies with the nation that deployed the weapon. However, if that nation is an economic and military superpower, don’t expect any agents of that nation to be held accountable.

Another comment brought up SAM sites being placed in civilian settings.

Firing on those sites with disregard for civilians is terrorism as defined by the US and many other nations: the use of violence to get another party to do your will. My nation, the USA, had two choices decades ago: live within our means as our resources allowed, or become the biggest thieves of other people’s resources. Our leaders chose to become thieves by supporting any tyrant, Muslim or otherwise, willing to oppress their own citizens for their own personal wealth. We gave a large population reason to hate us. Not “hate us for our freedoms,” as the propaganda goes, but hate us because we denied them the freedoms we think we are entitled to. That we did it by proxy doesn’t make us less responsible. The USA alone is not to blame; much of Europe shares the blame as well. I don’t see a way for the “West” to extricate itself from the mess it created any time soon. The powers that be of my parents’ and my generation will be the first to leave the following generation a world less well off. Oh well, in a world of limited resources and growing population it was bound to happen sometime. I wish we had the fortitude to attempt to give as many people as possible a comfortable life, but I’m old enough that I’ll be dead before the worst takes place. Sorry, future. I have had (to date, anyway) a comfortable life, though by no means an extravagant lifestyle. An interesting hypothetical that produced what it was intended to produce.