There’s a truism in security circles that says physical security is security. It doesn’t matter how many bits you’ve encrypted your password with, which elliptic curve you’ve used in your algorithm, or whether you use a fingerprint, retina scan, or face print as a second authentication factor. If someone has physical access to a device, all these protections are just speed bumps on the way to your data. Physical access to a machine means all that data is out in the open, and until now there’s been nothing you could do to stop it.

This week at Black Hat Europe, Design-Shift introduced ORWL, a computer that provides physical security for all the data stored on it.

The first line of protection for the data stuffed into the ORWL is a unique radio key fob. This electronic key fob is simply a means of authentication for the ORWL – without it, ORWL simply stays in sleep mode. If the user walks away from the computer, the USB ports are shut down and the HDMI output is disabled. While this isn’t a revolutionary feature – something like this can be installed on any computer – it isn’t the biggest trick ORWL has up its sleeve.

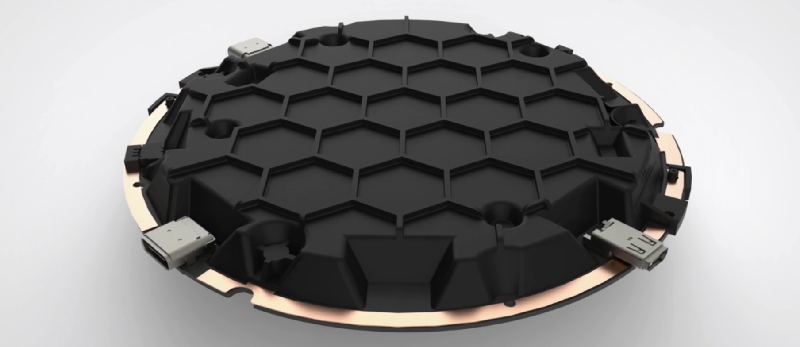

The big draw to the ORWL is a ‘honeycomb mesh’ that completely covers every square inch of the circuit board. This honeycomb mesh is simply a bit of plastic that screws on to the ORWL PCB and connects dozens of electronic traces embedded in this board to a secure microcontroller. If these traces are broken, either by taking the honeycomb shell off or by breaking it wide open, the digital keys that unlock the computer are erased.
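
The article doesn’t describe how the secure microcontroller actually polls the mesh. As a minimal sketch, assuming each trace can be read as a simple intact/open continuity bit, the core rule is: scan every trace, and on any break erase the keys and latch the tamper state so that reconnecting the trace afterwards does not restore access. `MeshMonitor` and its fields are hypothetical names, not part of any ORWL firmware.

```python
import os
from dataclasses import dataclass, field
from typing import List


@dataclass
class MeshMonitor:
    """Toy model of a tamper mesh polled by a secure microcontroller (hypothetical)."""
    trace_count: int
    # Stand-in for the key material the MCU protects.
    keys: bytes = field(default_factory=lambda: os.urandom(32))
    tampered: bool = False

    def scan(self, trace_intact: List[bool]) -> bool:
        """Check one continuity reading per trace; return True only if still untampered."""
        if len(trace_intact) != self.trace_count:
            raise ValueError("expected one reading per trace")
        if not all(trace_intact):
            self.keys = b""       # erase the key material
            self.tampered = True  # latch: a later 'all intact' scan does not undo this
        return not self.tampered
```

Because the tamper flag is latched, repairing or bridging a trace after it has opened does not bring the keys back; any drilling or bridging attack has to succeed before a single trace reads as open.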

The ORWL specs are what you would expect from a bare-bones desktop computer: Intel Skylake mobile processors, Intel graphics, a choice of 4 or 8GB of RAM, and a 64 to 512GB SSD. WiFi, two USB-C ports, and an HDMI port provide all the connections to the outside world.

While this isn’t a computer for everyone, and it may not even see a very large deployment, it is an interesting challenge. Physical security rules over all, and it would be very interesting to see what sort of attack could be performed on the ORWL to extract the data hidden away behind an electronic mesh. Short of breaking the digital key hidden on the key fob, the best attack might just be desoldering the SSD chips and transplanting them into a platform more amenable to reading them.

In any event, ORWL is an interesting device if only for being one of the few desktop computers to tackle the problem of physical security. As with any computer, if you have physical access to a device, you have access to all the data on the device; we just don’t know how to get the data off one of these tiny computers.

Or simply take the key fob from the user

Obligatory XKCD: https://xkcd.com/538/

Haha yeah, but if you travel with sensitive data it is a good way to know if your machine has been compromised, though!

Ever seen Demolition Man? Check out 1:20…

https://www.youtube.com/watch?v=CbM--4-z0cs

Yeah but if you keep the key with you while the device is at your home/hotel you can come back and verify the hardware integrity, and that is something you just can’t do with other computers.

So bridging the traces is not an option?

You could but as the article states, “the digital keys that unlock the computer are erased” upon breaking the traces in the first place.

I think Daniel was suggesting bridging them before breaking them, so the connection is not interrupted. A quick glance suggests it is a single continuous trace, which would only require a single bridge. Presumably it actually employs enough independent traces to make this a chore though.

I must be missing something. If the traces are encapsulated within the cover, how do you see or access the traces and components without cutting or altering the cover itself and thereby breaking traces? I’m not very good at visualizing things and usually have to have them right in front of me, so I apologize if I’m asking a simple question.

You could use x-ray or acoustic microscopy. You could also chemically remove the casing without removing the traces. A skilled tech can mechanically polish down the casing without disrupting the layer of the traces underneath. Etc.

So you’re saying that someone would have to steal it and work on it at their leisure and that said person would really have to know their stuff.

@Nick

Not necessarily. Anyone who wants to steal something like this and crack into it would do some research. Chemical removal is a good idea, but I was personally thinking of using a very small drill bit to drill into the middle of one of the traces and establish a connection that way.

@Sean exactly my idea, and it doesn’t even need to be in the middle of the trace. Drill until you hit copper, countersink enough to fit a wire dipped in conductive adhesive, then tap into one of the outer traces. That is, unless they are measuring resistance rather than just continuity.

It wouldn’t be difficult to build a simple circuit that detects changes in the trace length, assuming it is a continuous trace. The first one that pops into my head is a little resonant device that uses the trace as an inductor; when the resonance shifts sufficiently, a PLL-style detector trips. The whole thing could be put together dirt cheap if you are integrating it onto an existing board during the design phase.
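
The resonance idea rests on the LC tank formula `f0 = 1 / (2 * pi * sqrt(L * C))`: if the trace is the inductor, adding or rerouting length changes L and shifts f0. A minimal sketch of the arithmetic, assuming an enrolled reference frequency and a fixed relative-shift threshold (both hypothetical, as are the 1 µH / 100 pF values); it models only the comparison, not the PLL detector itself.

```python
import math


def resonant_frequency(inductance_h: float, capacitance_f: float) -> float:
    """Resonant frequency in Hz of an ideal LC tank: f0 = 1 / (2 * pi * sqrt(L * C))."""
    return 1.0 / (2.0 * math.pi * math.sqrt(inductance_h * capacitance_f))


def resonance_tripped(f_measured_hz: float, f_reference_hz: float, max_rel_shift: float) -> bool:
    """Trip when the measured resonance drifts further than max_rel_shift from the enrolled value."""
    return abs(f_measured_hz - f_reference_hz) / f_reference_hz > max_rel_shift


f_ref = resonant_frequency(1e-6, 100e-12)         # ~15.9 MHz with the hypothetical values
f_bridged = resonant_frequency(1.02e-6, 100e-12)  # same tank after a 2% change in trace inductance
print(resonance_tripped(f_bridged, f_ref, max_rel_shift=0.005))  # True
```

Since f0 scales with 1/sqrt(L), a 2% inductance change moves the resonance by roughly 1%, which a 0.5% threshold catches.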

Or you can measure the impedance or propagation delay.
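
For the propagation-delay variant, the interesting number is how much extra delay an added jumper introduces. A minimal sketch, assuming the MCU times a single pass along the trace and that any added path has the same velocity factor as the trace (a simplification); the 0.55 velocity factor is a hypothetical value.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def trace_length_m(one_way_delay_s: float, velocity_factor: float) -> float:
    """Length of a trace given the time a signal takes to travel once along it."""
    return one_way_delay_s * velocity_factor * SPEED_OF_LIGHT_M_S


def added_delay_s(extra_length_m: float, velocity_factor: float) -> float:
    """Extra one-way delay caused by lengthening the path by extra_length_m."""
    return extra_length_m / (velocity_factor * SPEED_OF_LIGHT_M_S)


# A 10 cm detour at a (hypothetical) velocity factor of 0.55 adds about 0.61 ns.
print(f"{added_delay_s(0.10, 0.55) * 1e9:.2f} ns")
```

Resolving a sub-nanosecond difference like this needs dedicated timing hardware; a typical microcontroller polling a GPIO pin cannot do it directly.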

Both of which are influenced by nearby objects.

Also, what happens when you put a cellphone next to this, and it starts inducing the familiar tah ta dah into the circuit?

But if the microcontroller is connected to the trace, surely +/-50v at 50Hz ought to produce an interesting result?

You might even be able to do this inductively, with less risk of getting the SSD fried.

Oh, and don’t forget those USB-C ports; you can’t wipe the SSD without your PCIe bus, nor your I2C bus, which is exposed via the HDMI port.

In short, you might wipe the 1st one, but the black hats would tear the second one to pieces.

So I just bridge the traces with a circuit that emulates the intact trace path?

Here is some logic for you: the people who may want your data are better resourced than the people making devices to protect your data, therefore this device’s protection is illusory.

As far as a circuit that emulates the trace path, how about a second copy of the trace path? Buy your own from whoever produces this solution, perfect!

As far as zapping it with 50V, you need the micro intact and happy to output the encryption keys. Killing it won’t help at all.

If I want your data, and I can’t compromise the system remotely (LOL), I can always buy a few of these units and reverse engineer them to learn how to get the drive out without it being destroyed. I then take your drive from your computer when you are not looking and drop your drive into an identical machine to which I have added a bios level root-kit. I then place the compromised machine back in your home/office and wait for you to log in with your key and send your unencrypted data to me over the web. Just as well I don’t work for the NSA eh?

Not worth it; the amount of time and money needed to get around the shield’s semi-random pulses is enormous. If anything gets close to it, it wipes the keys…

> I then take your drive from your computer when you are not looking and drop your drive into an identical machine to which I have added a bios level root-kit.

The cryptographic keys are in the keyfob smartcard and the secure microcontroller inside the machine, not only derived from the user password. The keyfob will not send anything unless it has authenticated the microcontroller. You are not getting keys out like that.

The MCU is not secure if you can get at it, if you are the sort of organisation that regularly needs to (lawfully) get into such devices.

The idea of objects that stay secure once you no longer have possession of them is a delusion. The only secure object is one that you never put down and can destroy before it may be taken from you. Leave the device in a home or office and go away and you can never be sure it was not messed with.

The problem with this product is conceptual: it tries to be “harder” than just an encrypted hard disk, but it can’t be if the person who takes it is more than just some random junkie who has broken into your house. Only something intelligent can guard and protect data completely, and even then it could still be outwitted by a superior intelligence.

to Aussie Lauren:

First I did not want to report, I just wanted to answer.

Second: This 50V will kill the CPU, yes, so the keys are lost. The SSD does not need to be wiped, it is already encrypted. If you tamper with the device, the keys get lost.

Commercially, things like cryptographic processors use a membrane with a labyrinth of conductive ion traces embedded in it, which is vastly harder to detect with X-rays or MRI. Of course, commercial devices also have scintillation detectors so you can’t X-ray them. See the IBM 4764 for an example of how this is done commercially. Some military devices have more positive wiping features (=bang!).

Anyway, if you aren’t running an OS built to resist concentrated attack, this is all just cuteness.

> Anyway, if you aren’t running an OS built to resist concentrated attack, this is all just cuteness.

Remote and physical vectors are vastly different. While physical compromise does not scale, it is trivial to do and very attractive in many scenarios. Engineering a robust operating system is a completely different job that nobody knows how to do. You can always compile your own grsec kernel, but we will not take on the responsibility of maintaining a “secure OS” (whatever the hell that means).

Aside from the purely defensive strategies, we also have in mind verifiability and compromise recovery. We want to allow anyone to easily and externally verify the integrity of embedded firmware, exfiltrate it for analysis, and reinstall it if it has been modified. This is completely impossible to do on other PCs, at least not without hours of disassembly, which makes frequent verification impractical.

So the thing to do is drill into the traces under a liquid metal like mercury or Galinstan.

Okay, so I have to attack the backup. And there will be one, because without one my data is definitely gone if there is a hardware problem :/

The user can buy 2 of these machines, 1 for working on and 1 for the backup.

Backup drives should always be encrypted.

I’d like to see some thermite or magnesium dust and an igniter. Far more interesting to watch when the computer or data drive self-destructs. But that may include the building it’s housed in too :/

In the US army, I worked with artillery targeting computers with 1.5lbs of thermite spread out inside of them. It was intended as a last resort self destruct device in case your position was about to be overrun by the enemy. All one needed to do was enter the right commands and you would have a smoking puddle of aluminum and plastic in about 30 seconds.

You could rig something yourself. I recommend putting a small charge on top of the HDD and housing both in a ceramic box to contain the resulting slag. You could rig up a USB-operated servo to set it off remotely, or a dead man switch tied to the case door. As a backup, just make the ignition system battery operated, so disconnecting the power won’t stop the self-destruct device if someone tries opening it unpowered. You could disable the whole thing with a keyed switch or, if you’re really crafty, a series of unmarked toggle switches that need to be set to the correct positions before you open the case. That way you can still do maintenance.

Maybe make something like this?

http://hackaday.com/2015/09/21/this-is-what-a-real-bomb-looks-like/

That’s exactly where my thoughts went as well after I wrote about toggle switches.

FWIW, this approach to physical security has been successfully used in the payment industry for the past 10 years for credit and debit card terminals.

There isn’t just one trace that goes across the board (at least in the payment terminals) there are dozens. If you bridge the wrong ones, poof. If it detects excessive vibration, poof. If it detects unusual electrical or thermal characteristics, poof.

Oh, there’s also a known resistance to each trace, so if you do somehow manage to bridge it, but the resistance changes, poof.
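
The per-trace resistance check is easy to sketch, and the sketch also shows why a jumper is so visible: bypassing part of a trace puts a near-zero resistance in parallel with that section. A minimal sketch, assuming each trace’s resistance is enrolled at the factory and compared against a fixed relative tolerance; the function names, the 2% tolerance, and the ohm values are hypothetical.

```python
from typing import List, Sequence


def bridged_resistance(total_ohms: float, bypassed_ohms: float, jumper_ohms: float) -> float:
    """Trace resistance after a jumper is placed across a section worth bypassed_ohms."""
    parallel = bypassed_ohms * jumper_ohms / (bypassed_ohms + jumper_ohms)
    return total_ohms - bypassed_ohms + parallel


def out_of_tolerance(measured: Sequence[float], enrolled: Sequence[float],
                     tolerance: float = 0.02) -> List[int]:
    """Indices of traces whose resistance left the +/- tolerance window around the enrolled value."""
    if len(measured) != len(enrolled):
        raise ValueError("one measurement per enrolled trace expected")
    return [i for i, (m, ref) in enumerate(zip(measured, enrolled))
            if abs(m - ref) > tolerance * ref]


enrolled = [50.0, 75.0, 60.0]
measured = [50.2, bridged_resistance(75.0, 10.0, 0.05), 60.0]  # jumper across a 10-ohm section of trace 1
print(out_of_tolerance(measured, enrolled))  # [1]
```

To stay inside the window, a bridge would have to reproduce the bypassed section’s resistance almost exactly, without disturbing any neighbouring trace.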

That likely explains how it would poof on thermal changes.

There is a dedicated temperature sensor inside of the secure microcontroller.

Yes, this protection exists in the gambling industry too… and was cracked a few times…

It’s difficult to crack, but you can crack meshes on chips, so why shouldn’t you be able to crack one on bigger devices?

Is that to protect from skimmers, protect IP, or protect some super secret encryption key?

Not applicable to the environments a typical PC user frequents. One mistake and poof, no more PC.

It is pretty robust, and specified to resist a 0.60 meter drop on marble. It is probably more shock resistant than a laptop with a traditional hard drive.

A tamper event will destroy the crypto keys, but hardware being fallible, you should always have backups anyway. Destroying the keys does not brick the machine forever; it just erases the data and temporarily makes the machine unbootable.

Verifone’s VX series of small credit card terminals has a tamper circuit. When a VX550, for example, is disassembled, the protection circuit between the two halves is broken and the NVRAM chip(s?) storing the security keys lose power. Even if reassembled, the unit will boot up with a “TAMPERED” warning flashing in the middle of the screen, and any terminal applications are lost. Such a unit gets sent back to Verifone for maintenance, where the security keys are transferred back in via the serial port.

An aging terminal can do this on its own, though, when the battery dies, so the maintenance would include resoldering a battery.

If it loses data without power, it’s probably not NVRAM! Just RAM.

Interesting description of the Verifone, though.

Literally, yes. It’s battery-backed SRAM. So as long as the device is not tampered with, it’s NVRAM; otherwise it’s EmptyRAM.

Look Bond, this is the most advanced secured computer case. It’s made out of glass, with a vacuum deposited metalized wire film on it. If the wire is broken, it will instantly erase all the data. Just don’t drop it, and it’ll be OK.

Oh, and by the way, you don’t plan to install Windows on it, right?

It is specified to resist a 0.60 meter drop on marble.

We never claimed to solve remote software vulnerabilities; we are working on different vectors. As much as I love free software, I would also argue that Windows is the most secure off-the-shelf operating system you can get. The cost of getting ring 0 execution on Windows from Chrome cannot be compared to the cost of compromising a machine in a completely undetectable way with physical access. It should not be this way.

Transparency and openness are also critical, and a prerequisite to security. We are working on it!

How small is the spacing? Would several dozen accurately placed 0.1 mm drill holes evade the protection and gain access to the traces on the PCB underneath?

^^This.

Unless the traces are randomized for each S/N, buy one, map out your drill points, cat’s whisker JTAG and Bob’s your uncle.

0.2mm, but the plastic is very brittle and designed to break if drilled. The traces are a bit randomized with probably 8 different models. JTAG ports can be deactivated, and should not lead to a compromise of the MCU secrets.

Oh, there is an EEVblog fan…

EEVblog fan, eh?

Just thinking that one solution would be to have a second wire on the other side in the space between the traces.

Or you could use a gun and shoot the security controller before it has time to delete the digital keys. This was a favourite at a security testing firm around here.

The keys are stored in the security controller in encrypted NVRAM. The encryption key for the NVRAM itself is stored in flip-flops, so the moment power is gone you will lose all the information, even if you can analyse the remains of the disintegrated die.
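
Keeping keys in NVRAM only in wrapped form, with the wrapping key held in volatile flip-flops, can be sketched in a few lines. In this minimal sketch, XOR with a random pad of equal length stands in for the wrapping cipher (a real part would more likely use a block cipher such as AES), and `SecureStore` is a hypothetical name. What it shows is that the persistent copy is useless on its own once power to the volatile key is gone.

```python
import secrets
from typing import Optional


def _xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


class SecureStore:
    """Toy key store: persistent 'NVRAM' holds only wrapped secrets; the wrapping key is volatile."""

    def __init__(self, secret: bytes) -> None:
        # Volatile wrapping key, modelling flip-flops that lose state without power.
        self._wrapping_key: Optional[bytes] = secrets.token_bytes(len(secret))
        # Persistent copy, wrapped with a one-time pad as a stand-in for a real cipher.
        self.nvram = _xor(secret, self._wrapping_key)

    def read_secret(self) -> bytes:
        if self._wrapping_key is None:
            raise RuntimeError("wrapping key lost; NVRAM contents cannot be unwrapped")
        return _xor(self.nvram, self._wrapping_key)

    def power_loss(self) -> None:
        """Cut power to the volatile domain: the wrapping key is gone for good."""
        self._wrapping_key = None
```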

If the keys are on a volatile storage media, you’ll have to super-cool it first. And then get the data off before the media decays… But really, I think any kind of gun is more likely to just obliterate the whole deal.

lol – Orwell!

Intel-based, eh? It’s only as secure as the SMM. This is a novelty, not a secure device.

Pretty much. There’s enough SMM/UEFI exploits out there to make pretty much all the fancy toys on this thing useless.

There is no known generic platform vulnerability on this chip that allows SMM takeover like The Memory Sinkhole (BH 2015). The SPI flash content is also entirely verified at boot by the microcontroller before it powers up the Intel processor, so it is not possible to drop a stealthy persistent backdoor in SMM or add a UEFI module. The only thing left is to exploit a vulnerability in SMM code to temporarily run high-privileged code, something you are probably more likely to use ring 0 for. We are in close contact with the UEFI vendor and will patch all public vulnerabilities.
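
The boot-time check described here (the MCU verifies the SPI flash contents before powering up the Intel processor) can be sketched as a digest comparison. A minimal sketch, assuming the MCU holds a single reference SHA-256 digest of the whole flash image; the comment does not say whether ORWL compares a hash, checks a signature, or verifies per region, so the scheme and the names are hypothetical.

```python
import hashlib
import hmac


def flash_image_ok(image: bytes, reference_sha256_hex: str) -> bool:
    """Compare the image's SHA-256 against the enrolled reference in constant time."""
    return hmac.compare_digest(hashlib.sha256(image).hexdigest(), reference_sha256_hex)


def boot_decision(image: bytes, reference_sha256_hex: str) -> str:
    """What the security MCU does before releasing the host CPU (hypothetical policy)."""
    if flash_image_ok(image, reference_sha256_hex):
        return "power up host CPU"
    return "halt: flash contents changed, host CPU stays off"


image = bytes(4096)                            # placeholder flash image
reference = hashlib.sha256(image).hexdigest()  # enrolled when the owner accepts this firmware
print(boot_decision(image, reference))
print(boot_decision(image[:-1] + b"\x01", reference))
```

Re-enrolling the reference is one way an owner could accept a BIOS image of their choosing, in line with the follow-up comment about controlling the verification mechanism; a signature check would make vendor updates easier to ship than a fixed digest.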

Of course, you have control of the SPI verification mechanism and can, if you choose, load what you want in SMM or use another BIOS image.

Freeze it. Then open at your leisure.

Detect the temperature drop and trigger. The RAM freeze trick works at negative temperatures, so all you need to do is trigger at set high and low temperatures and erase the keys.
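
A window-plus-rate rule along these lines is straightforward. A minimal sketch, assuming periodic temperature samples; the -20 °C / 70 °C window and the 2 °C/s drop limit are hypothetical thresholds chosen for illustration, not ORWL’s.

```python
from typing import Sequence


def thermal_tamper(samples_c: Sequence[float], interval_s: float,
                   low_c: float = -20.0, high_c: float = 70.0,
                   max_drop_c_per_s: float = 2.0) -> bool:
    """True if any sample leaves [low_c, high_c] or the temperature falls faster than allowed."""
    if any(t < low_c or t > high_c for t in samples_c):
        return True
    # A fast drop (freeze spray, liquid nitrogen) trips before the absolute limit is reached.
    for prev, cur in zip(samples_c, samples_c[1:]):
        if (prev - cur) / interval_s > max_drop_c_per_s:
            return True
    return False


print(thermal_tamper([25.0, 25.5, 26.0], interval_s=1.0))  # normal warm-up
print(thermal_tamper([25.0, 10.0, -5.0], interval_s=1.0))  # rapid cooling
```

The rate check lets the device react to rapid cooling before the absolute low limit is reached, which matters given that the freeze trick relies on negative temperatures.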

Stasis field!

So it has built-in WiFi. No external antenna.

And a faraday cage.

Yeah, I like that…. makes perfect sense.

https://media.giphy.com/media/65fiHpjKxyBgc/giphy.gif

Dude, the antenna is outside http://tinyurl.com/oou9ltg

It is not a Faraday cage. While we have steel and copper shields inside the mesh, the system as a whole was not built to be TEMPEST-proof. The security MCU has side-channel mitigations, so you should be able to run crypto operations on it without fear of power analysis or electromagnetic emanations.

If lots of people come along with their own unique key fobs does it think you’re trying to brute force the code?

I wonder what the delay is between tries.

I guess you could listen to the hum of the processor with an SDR, do a transform to triangulate the position of the signal, and gain some insight.

I wonder how it copes with a usb-be-gone style killer.

Perhaps the shell itself could be a weak point, if it’s connected to a data pin.

Better still, if it doesn’t trip the security, position several coils surrounding the device, then create a precise field that cancels itself out apart from a tiny point so you can toggle data lines wirelessly. Maybe just use it to cook the offending security chip.

Isn’t that part of what the wire cage helps protect against?

No, it is not identification by the keyfob simply sending a code to the microcontroller; it involves strong asymmetric cryptography, with the keyfob authenticating the MCU and vice versa. There is no need for delays or to limit the number of interactions.
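
The difference from a fob that just sends a code is that each side proves knowledge of a secret in response to a fresh challenge from the other. The comment says ORWL uses asymmetric cryptography; the minimal sketch below swaps in HMAC-SHA256 with a shared key purely because Python’s standard library has no public-key signatures, so it shows the message flow, not ORWL’s actual primitives. `Party` and `mutual_auth` are hypothetical names.

```python
import hashlib
import hmac
import secrets


class Party:
    """One side of a mutual challenge-response; HMAC with a shared key stands in for signatures."""

    def __init__(self, shared_key: bytes) -> None:
        self._key = shared_key

    def new_challenge(self) -> bytes:
        return secrets.token_bytes(32)  # fresh nonce, so old responses cannot be replayed

    def respond(self, challenge: bytes, role: bytes) -> bytes:
        # The role label stops a response made in one direction being reused in the other.
        return hmac.new(self._key, role + challenge, hashlib.sha256).digest()

    def verify(self, challenge: bytes, role: bytes, response: bytes) -> bool:
        return hmac.compare_digest(self.respond(challenge, role), response)


def mutual_auth(fob: Party, mcu: Party) -> bool:
    c_mcu = mcu.new_challenge()  # MCU challenges the fob
    c_fob = fob.new_challenge()  # fob challenges the MCU
    fob_proof = fob.respond(c_mcu, b"fob")
    mcu_proof = mcu.respond(c_fob, b"mcu")
    return mcu.verify(c_mcu, b"fob", fob_proof) and fob.verify(c_fob, b"mcu", mcu_proof)
```

With a 256-bit output and a fresh challenge every time, each guessed response succeeds with probability about 2^-256, which is why rate limiting adds little here.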

No idea about the usb-be-gone, but if your only intent is to break things you can use a hammer. You cannot “create a precise field that cancels itself out apart from a tiny point so you can toggle data lines wirelessly”. This is not how an electromagnetic field works. You can always disrupt things, but that will destroy the keys. The MCU is likely to detect errors in the mesh traces and attempts at power glitching if your interferences are strong enough.

Steal the keyfob. I’m sure if you can steal a car key you can steal a fob.

And beat the user for the passphrase.

I liked it !

If you are really this paranoid about security, just use one of those thumb stick PCs and carry it around with you. The only true physical security is to have it on your person, in sight, at all times.

+1 security is in the eye of the beholder.

Perhaps rectal-PC is going to be the secure format of the future.

Keister key store?

No the only secure system is to not have a need to protect the system. No data stored, no need for exploitable hardware.

Am I the only one thinking of a Sherlock episode right now?

Sounds like a fun obstacle. Would be interesting to attack it on its power source.

1. What if we wait until its battery goes down?

2. What if we pour liquid nitrogen over it, so the battery goes down?

And then cut and connect.

There is a backup battery to maintain the physical scanning for 6 months without power. We also have temperature range detection that will trigger an event if frozen or overheated.

So if I wanted to steal some information from a locked system, I’d have to leave it unplugged for many months before I could safely open it? Or can the battery trigger self destruct if it starts to get run down?

Why wait months when you can just do a very slow CT scan in a shielded room using radiation levels within the range of normal background radiation, then once you have a clear set of engineering data you use a very powerful and tightly focused laser to penetrate the device and disable it in a very selective way.

You can leave ORWL unpowered for 6 months and your information will still be accessible AND protected. You will need the key once you power it again.

If you do not plug it in for 9 months, then the keys are lost, the data are left encrypted, and the unit will not start.

You can arm the device with new keys again and restart like the first time you received the device.

If the battery wears down, the keys are lost. If you want, you can call this “trigger self destruct” :-)

1. The microcontroller with the keys has its own battery to keep scanning the mesh, with at least 6 months of autonomy. If this battery runs down, you lose the keys (which are stored in flip-flops).
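
Six months of autonomy is a budget question: battery capacity divided by the MCU’s average scanning current. A minimal sketch, ignoring self-discharge and temperature derating; the 220 mAh capacity is a hypothetical coin-cell-class figure, not ORWL’s actual battery.

```python
HOURS_PER_MONTH = 8760.0 / 12  # average month, 730 h


def autonomy_months(capacity_mah: float, avg_current_ua: float) -> float:
    """Months a battery lasts at a constant average draw (no self-discharge or derating)."""
    return capacity_mah * 1000.0 / avg_current_ua / HOURS_PER_MONTH


def current_budget_ua(capacity_mah: float, months: float) -> float:
    """Largest average current, in microamps, that still meets the target autonomy."""
    return capacity_mah * 1000.0 / (months * HOURS_PER_MONTH)


print(f"{current_budget_ua(220.0, 6.0):.1f} uA")     # 50.2 uA budget for 6 months
print(f"{autonomy_months(220.0, 50.0):.2f} months")  # 6.03 months at 50 uA
```

With this hypothetical cell, the whole tamper monitor, mesh scanning and temperature sensing included, would have to average about 50 µA.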

2. Thermal changes, up or down, will trigger a tamper event and key destruction.

Securing the physical device is missing the point. What use is a PC if it doesn’t communicate with the wider world? As soon as HDMI is enabled, I can intercept it. Ditto USB. Share information with a third party? Effectively game over. I genuinely don’t understand what problem this technology is trying to solve, even if it was contrived by much cleverer people than I.

It might be useful for minor criminals. You don’t need internet access to keep a spreadsheet of illegal goods/customer/source.

Probably useful for pedophiles too, as long as you aren’t high profile enough to have a legitimate state agency take interest in you.

I was thinking the same thing. Man in the middle attack. Keyboard and monitor are exposed. Analyze data during the fob key exchange.

The idea is that you cannot always be in physical control of your devices. They are usually trivial to covertly backdoor and if unencrypted, easy to read. When they are encrypted but sleeping, same thing.

ORWL will not make software security any better, or help you if someone intercepts your communications as you use the computer. It will, however, prevent people from backdooring and extracting information from your computer, even when you just go to S3 sleep or your keyfob leaves a 10 meter range.

I wonder if these guys did any market research before wasting time with this design? The people who actually “need” this kind of security already have their own proprietary implementations that outperform this.

This would likely be sufficient to stop most criminals from accessing the data. But it is not going to stop a State agency.

Who is this intended for?

Are they really relying on “people who don’t know any better” to be their primary market?

It works for apple.

But Apple users ALREADY think they have digital invincibility.

> The people who actually “need” this kind of security already have their own proprietary implementations that outperform this.

Everyone needs computers that are verifiable, encrypted and hard to backdoor in 60 seconds with physical access. This is not an NSA-proof paranoid project but should be the default design for personal computers.

I do not know of any general-purpose computing platform that uses this kind of technology. Just like with software, it is prohibitively expensive to develop your own solution. No government has a “better Linux”, or processors that are both faster and less expensive than consumer ones. Most state agencies around the world just use Lenovo or HP laptops running Windows. They rely on two things you do not have: serious physical security (SCIF manned 24/7), and heavy network/endpoint segmentation and monitoring.

> But it is not going to stop a State agency.

If you have motivated state agencies against you, I suggest you stop using computers.

“Everyone needs computers that are verifiable, encrypted and hard to backdoor in 60 seconds with physical access.”

I disagree. (see the last few paragraphs)

“…proprietary implementations that outperform this…”

I was referring to the people that actually DO need a reasonably secure physical presence somewhere that they have non-continuous control over. As you can see by the many posts above this one, the list includes banks (and ATMs), gambling establishments, and other similar entities.

“If you have motivated state agencies against you, I suggest you stop using computers.”

Unless you ARE a state agency.

The point of my first post was that the groups that DO need solid physical security of their machines, already have it. 2-man integrity systems, SCI controls, live camera feeds, and other procedures all provide much more security than this implementation would.

On the other end of the spectrum, it is FAR more important that my gaming PC is upgradeable and has well designed cooling, than it is for it to be ‘physically hardened’. Making this system ‘more secure’ would detract from its ability to do what it was BUILT to do.

And that is the point.

Security is always a balancing act. Every piece of a security system will use some resources that MIGHT be better used elsewhere. Threat and risk must be taken into account BEFORE you design your security.

I just don’t see the market for this product. There are so many other angles of attack that can be used, and so many other ways to add physical security to your computers (would the added cost of these machines be more, or less than hiring a security guard?).

NOTE: I’m being very critical of this project. That does not mean I don’t have respect for the people who made it. It is a novel implementation, and it is quite interesting for us to unravel. I just don’t think it has a good real world use.

Unless each unit has a unique trace pattern in the cover, and they have no bugs reachable from USB or HDMI (which are guaranteed to be ring 0, trumping FS and kernel policies), they had better hope that not too many people believe them and use this.

The USB ports should be physically disconnected by internal switches when the user keyfob is more than 10 meters away. There are multiple trace patterns (probably around 8), so you will need an X-ray to know which one you are attacking.

Which are controlled by software or firmware, which is susceptible to things like reverse engineering and memory corruption. It has to be a generic design on some level, so attackers can buy their own, RE it, and find attacks.

The switch is directly and only controlled by the MCU. If you have code execution on the security MCU, you do not need to open USB access to compromise the system, you already are at the deepest level. Chicken and egg.

RE is not meant to be hard on an open source platform, as we do not rely on obscurity. Simplicity is much better.

Considering that ALL ORWL devices possibly have the same trace length, one could map out the length and know exactly what RF frequency the traces resonate at, since they would act as a primitive antenna.

Mod an existing non-compatible keyfob for USB3/HDMI/DisplayPort speeds.

TEMPEST

Scan the ORWL via a laminate sticker receiver antenna, OR mod the HDMI-based display or the USB keyboard/mouse to collect data.

TX via SDR/NFC/RFID: begin radio fuzzing and interference via the modded keyfob.

That’s if it even bothers checking impedance. You could monitor across the traces with a meter set to microvolts or the like and see if a voltage is constantly applied; it’s probably just a DC voltage. Dunno if impedance checking would be easy to do on a micro, or reliable, especially in mass production.

TEMPEST is defeated by a Faraday cage, dunno if it has one, but all those traces could well act as one. And since we got rid of CRTs TEMPEST isn’t nearly as useful.

Not sure what modding a keyfob would achieve. Or the laminate sticker. Interfering with the keyfob signal is just going to get a “no” response from the receiver internally. The data you’re theoretically after is encrypted, and the keys are volatile, with the micro programmed to zap them. None of your suggestions would help with that.

As someone mentioned, bridging the traces would be hard if there’s traces on the other side too. But not impossible. Depends if there’s several sets of traces, not allowed to be connected to each other. You could theoretically X-ray that, though all the PCBs inside would make it annoying, you’d need a sensitive X-ray to not also pick up a load of unwanted PCB tracks.

If you knew where to drill, you could use a non-conducting drill bit to get to the other side, and bridge somewhere opportune. Though keeping the contact in place, since you can’t exactly solder to the other side, while you mess about cutting bits off, would be a challenge.

Yeah, set up an SDR-type logger on the monitor or keyboard/mouse.

Don’t restrict your thinking to only use a microcontroller as the priority is not trying to minimize parts counts.

http://www.analog.com/en/products/rf-microwave/direct-digital-synthesis-modulators/ad5933.html

>1 MSPS, 12 Bit Impedance Converter Network Analyzer

Most high-security MCUs already have this sort of mesh on the silicon or chip packaging level. Usually a bit safer, simply because it’s a lot easier to prevent tampering with tiny wires rather than with macroscale traces you could just kind of jumper away. Hard to get access to these MCU specs though, in part because some of the security comes from obscurity. I believe some of these might even have encrypted buses (if they don’t, that’d be a great project to work on).

Truth is, when it comes to physical security, obscurity helps a lot – I mean, if they publish the trace layout, it’s not difficult to design a custom mechanical jig that allows you to quickly bypass any broken wire detection and drill past the cover. Obscurity basically puts a fairly substantial cost barrier to a problem that could be solved with enough money, whenever some product is mass-produced. Or maybe have an open source design that is one-off manufacturable (expensive, unfortunately) with a custom anti-tamper layout / system known only to the end-user.

Besides that sort of obscurity, there’s the classic psychological kind. If you want to hide a man, put him in a crowd. Something like this, which looks like a carbon-fibre UFO with USB ports, is kind of attention-getting. Better security would be keeping your private stuff on a micro-SD card, properly encrypted, and just hiding it somewhere. Use whatever software you need to make sure stuff like virtual memory doesn’t hold any traces.

Then again I can’t think of a case where an ordinary user would NEED physical computer security. What’s worth stealing? Governments aren’t going to buy geek-made stuff like this.

You will need to connect your SD card to something at some point. What if that computer is backdoored while you are not looking? Not that we solve all software security problems, but for firmware integrity and key protection the benefit is significant.

It is quite small (about 0.84 metric bananas) and can fit in a pocket: https://imgur.com/ogaGG6D

> Then again I can’t think of a case where an ordinary user would NEED physical computer security.

Maybe most people put their data in the cloud and trust all these services. Maybe they do not even need computer security. They have nothing to hide, right?

Still, some people have to store information locally. Some even store other people’s information: journalists, lawyers, and physicians do not benefit from the kind of physical protection a big financial institution can provide. They are ordinary users in many respects.

Physical attacks are much harder to use to build a surveillance society because they do not scale the way software exploitation and traffic interception do. Still, in many scenarios physical access is too easy. For black-bag professionals, or for people you know or work with, it is a matter of minutes. Unlike software exploitation, hardware attacks leave no logs and trigger no IDS, so you will never know.

> Governments aren’t going to buy geek-made stuff like this.

Do not be fooled by the Kickstarter: this was designed over the course of two years by a team of 40 engineers at Design Shift who have a lot of experience building consumer devices and high-security banking terminals. It is manufactured by Quanta Computer, the largest notebook manufacturer in the world. https://en.wikipedia.org/wiki/Quanta_Computer

Not exactly my definition of a geek project. :)

It uses one of these high-security MCUs, the MAX32550. It also has a die mesh protecting the silicon. It has encrypted internal RAM but unencrypted buses; what threat do you think encrypted buses would protect against? The topology and interfaces of every bus are clearly documented, so you know which subsystem has access to what. It is extremely difficult to design a mechanical jig that bypasses die tamper protection, and the MCU raises the bar for attackers trying to extract keys from it by many orders of magnitude compared to standard PCs. It does not rely on obscurity as much as you think.

The MCU is a 108 MHz ARM microcontroller that you control, so you should be able to put GPG keys on it, for example, and have an embedded HSM in your computer.

The whole “physical security” part seems to be a red herring.

So what this basically does is:

1. Key fob transmits some code to authenticate to the computer.

2. Computer uses encryption to store the data and keeps encryption keys secure.

So how does this differ from:

1. Key fob contains encryption keys for data.

Consider: if the encryption algorithm is broken, erasing the keys does no good. If the key fob is compromised, the physical security does nothing. A piece of software that encrypts data and takes a key file from a USB drive accomplishes the same.

To read your USB key you are going to need an unencrypted BIOS and kernel that can be modified by an attacker. Your USB key is also not tamper-proof, so anyone with access to it can trivially extract the secrets. Even if you encrypt it, getting your password will allow an attacker to decrypt the USB key image.

The key fob has the same level of physical security as the rest of the computer. It has a smartcard.

The secret could be distributed between the key fob and the microcontroller, so authentication is not the only thing that happens. A password also needs to be entered, so part of the secret can be derived from that.
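To make the idea concrete, here is a minimal sketch, assuming the disk key is derived from three independent inputs: a share held by the key fob, a share held in the MCU’s secure memory, and the user’s password. The share sizes, the PBKDF2 iteration count, and the HMAC-based combining step are illustrative assumptions, not ORWL’s actual scheme.

```python
import hashlib
import hmac
import os

# Hypothetical parameters, not taken from any ORWL documentation.
PBKDF2_ITERATIONS = 100_000
SHARE_LEN = 32  # every share and the output are 256 bits


def password_share(password: str, salt: bytes) -> bytes:
    """Stretch the password with PBKDF2 so offline guessing is expensive."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt,
                               PBKDF2_ITERATIONS, dklen=SHARE_LEN)


def derive_disk_key(fob_share: bytes, mcu_share: bytes,
                    password: str, salt: bytes) -> bytes:
    """Combine fob, MCU and password shares; changing any one changes the key."""
    if len(fob_share) != SHARE_LEN or len(mcu_share) != SHARE_LEN:
        raise ValueError("shares must be exactly 32 bytes")
    pw_share = password_share(password, salt)
    # HMAC keyed by the MCU share over the fixed-length fob and password
    # shares (an HKDF-extract-style step); the label just separates contexts.
    return hmac.new(mcu_share, b"disk-key-v1" + fob_share + pw_share,
                    hashlib.sha256).digest()


if __name__ == "__main__":
    salt, fob, mcu = os.urandom(16), os.urandom(32), os.urandom(32)
    print(derive_disk_key(fob, mcu, "hunter2", salt).hex())
```

Under these assumptions an attacker needs all three inputs at once; the MCU share is the one the tamper mesh is there to protect.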

The code that authenticates and encrypts the communication between the key fob smartcard and the microcontroller comes from generic payment stacks; we did not write crypto code ourselves. It is based on asymmetric crypto, not simple identification. For the disk, AES-256-XTS is weird, but it is not broken yet. ;)

You have control of the secure microcontroller, so apart from storing SSD keys you can use it to perform crypto operations for you and act as an embedded HSM for GPG or other long-term keys. Wouldn’t it be nice if free software developers stopped signing code with keys stored in their laptop ~/.gnupg?

All that security and they’re still giving it built-in networking?

Computers are useless for today’s consumers without networking. It was not really an option.

Networking is not much of a problem in itself; wireless transceivers are, if you want to build an airgapped machine.

We agree that turning it into a proper airgapped computer will require opening it to disable the wireless transceivers and the keyfob-based boot. A detailed procedure for doing that will be published. Of course, people who want an airgapped computer will probably want to open it anyway, to verify the absence of physical implants and double-check all the firmware (which will be signed and published).

Of course, when I say the firmware will be signed, I do not mean that it will be locked in place by secure boot, simply that you will be able to verify that we published it.

The secure boot mechanism on the MCU should allow everyone to provision their own keys if they want to; we are still working out the architectural details with Maxim. In any case, we will not prevent people from running their own code on any chip in their own machine if they want to.

So this is a secure computer no one can verify is secure…

You can open it in order to verify it; you will simply lose the SSD keys and contents.

There will be a dedicated procedure to externally verify the integrity of the firmware (MCU, PMIC, UEFI SPI, etc.) without executing a single instruction from that firmware. The MCU boot ROM can load signed code directly into RAM from a UART port (exposed on the USB connectors), which lets you verify and access all the firmware as often as you want without disassembling the machine.
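The final comparison step of such a procedure might look like the sketch below, which hashes each dumped image with SHA-256 and checks it against a published manifest. The file names, the manifest format (image name to hex digest), and the assumption that the dumps are raw binary files already copied off the machine are all hypothetical; reading the flash through the UART is not shown.

```python
import hashlib
from pathlib import Path
from typing import Dict


def sha256_file(path: Path) -> str:
    """Hex SHA-256 of a file, read in chunks so large images are fine."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_dumps(manifest: Dict[str, str], dump_dir: Path) -> Dict[str, bool]:
    """Map each published image name to True if its dump matches the digest."""
    results = {}
    for name, expected in manifest.items():
        dump = dump_dir / name
        if not dump.is_file():
            results[name] = False  # a missing dump counts as a failure
            continue
        results[name] = sha256_file(dump) == expected.lower()
    return results
```

Any `False` entry means the dumped image differs from what was published (or was not dumped at all), which is exactly the case of an unpublished but validly signed firmware that this check is meant to catch.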

The gerber files are already on github, and the schematics will follow very soon:

https://github.com/orwlorg

Q-switched Nd:YAG laser ablation is used to expose molded chips for failure analysis. It’s an expensive undertaking, but I can imagine it. I wonder what countermeasures to less destructive attacks, like stripping the plastic, would look like.

There would also be the possibility of taking X-ray pictures (indistinguishable from TSA scanners from a tamper-detection standpoint) and then drilling microholes (by laser, where short-circuit detection through laminated layers isn’t possible, or with a conventional carbide drill, down to 0.1 mm) to insert insulated tungsten needle probes that contact relevant points on the PCB.

> It’s an expensive undertaking but I can imagine…

… one could open up a window without breaking the meandered interlock traces.

That seems like a credible attack scenario. Seriously expensive, of course. There are also steel and copper shields below the mesh, so your tungsten needle would need to penetrate them.

The thing is, accessing the PCB test points or traces should not allow a compromise of the MCU, which has internal flash and die protection. You could reprogram the other components (PMIC, UEFI SPI flash, probably the EC, etc.), but that would be detected at the next boot by the MCU’s verification.

The traces are only 0.2 mm, so at this scale even a 0.1 mm drill bit is large. The plastic is made to be extremely brittle, so any incipient crack will propagate if you apply pressure to it. The drill bit method has a very high chance of breaking the whole shell; that is exactly what the design is engineered to do. This is not your usual chip epoxy, by the way. Anyone got a big YAG to measure absorption? =)

The tamper mesh pictured is formed by Laser Direct Structuring – where the tracks are made by using a laser to carve grooves into the plastic surface such that a copper layer can be electroplated in.

This means that the conductive path is only microns thick, and bonded to the plastic shell. It makes a great tamper mesh as you are highly likely to destroy the trace trying to access it as you’re removing the support material.

Also, the tamper signals are dynamic and random, so you can’t just short them to a fixed reference point.
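To see why dynamic signals defeat a simple short, here is a toy simulation: each cycle the checker drives a fresh random bit onto every trace and expects the same bit at the far end. An intact trace always passes; a trace that was cut and tied to a fixed level passes only when the random bit happens to equal that level. The trace count, the cycle count, and the "stuck at a level" fault model are assumptions for illustration, not how ORWL’s MCU actually samples its mesh.

```python
import secrets
from typing import List, Optional


class MeshTrace:
    """Toy model of one mesh trace.

    stuck_at=None means the trace is intact; 0 or 1 means it was cut and
    tied to that fixed level by an attacker.
    """

    def __init__(self, stuck_at: Optional[int] = None) -> None:
        self.stuck_at = stuck_at

    def transmit(self, bit: int) -> int:
        """Return what the receiving end of the trace sees."""
        return bit if self.stuck_at is None else self.stuck_at


def mesh_intact(traces: List[MeshTrace], cycles: int = 64) -> bool:
    """Drive a fresh random bit onto every trace each cycle; any mismatch is tamper."""
    for _ in range(cycles):
        for trace in traces:
            bit = secrets.randbits(1)
            if trace.transmit(bit) != bit:
                return False
    return True


def static_check(traces: List[MeshTrace]) -> bool:
    """Naive alternative: always drive 1 and expect 1 back."""
    return all(trace.transmit(1) == 1 for trace in traces)
```

With one trace stuck at 1, `static_check` still passes, while `mesh_intact` fails except with probability 2^-64 over 64 cycles.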

This type of design is extremely common in modern payment terminals: a dynamic mesh enclosure around the secure processor to protect the cryptographic keys.

So, having said that I like the design from a physical security standpoint, I still have many concerns:

The MAX32550 uses digital signature verification to check its bootloader. It checks the bootloader’s signature using the “Customer Root Key”, which is intended to be the same for all chips. Now, who controls the Private Root Key? Do we all get our own? How are they protecting this key?

Additionally, the datasheet and associated information for this processor are heavily NDA’d and restricted (I know, I’ve signed it). This means that programming the secure processor to do what you want is likely out of the picture.

I was going to purchase one to play with, but after my interactions with the designers I have decided on a wait-and-see approach.

I don’t think a shared signing key on the bootloader is an issue.

The signing key is intended to prevent malicious modification of the boot software in transit. Eg, when you order this device, it has to be shipped. The key is preventing any malicious modification, and the system will, as Leo said, allow for external verification that the bootloader is legit.

A malicious party that has access to these keys, or is subdued by the government, can only modify new devices, not prior devices.

After you start using the computer, the bootloader will be effectively “frozen”. This is because opening the shell will of course erase the keys, and from what I understand, it will not be possible to load a bootloader in any way without erasing the keys. So an attacker might be able to compromise your computer and add a password-sniffing bootloader, but in doing so will also destroy the data they wanted to access; once the malicious bootloader is in, they will have no use for either the key fob or the password.

The Customer Root Key is not cleared on tamper; only the secure memory, where the SSD key is stored, is cleared.

This key pair is generated by the manufacturer and signed by Maxim, then loaded into the processor’s OTP memory.

The signing private key is held by the manufacturer and used to sign the bootloader.

The problem is one of trust: I want to be able to sign my own bootloader code with my own generated key, and not have to be concerned that the ORWL team have received an NSA letter, had the key extracted, etc.

(Yes this is extremely paranoid, but that’s what this device is trying to sell itself on)

Changing the key requires Maxim to sign the new key, in a process that does not lend itself to automation.

Those are the greatest and hardest questions! We still do not have definitive answers for all of them, but I will answer what I can. Updates will arrive over the coming days.

Indeed, the MAX32550 can only boot code signed with the Customer Root Key (CRK, NIST P-256). In order to program the CRK and get full control of the microcontroller, you need to get your public key signed by the Maxim Root Key (MRK). You can update the CRK, but of course the new CRK also needs to be signed by the MRK.
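The two-level chain of trust described here can be sketched as follows: a CRK is accepted only if the MRK vouches for it, and a firmware image only if the CRK vouches for it. The signature check is injected as a function because the real part uses ECDSA on NIST P-256, which is not in the Python standard library; the HMAC-based `toy_sign`/`toy_verify` pair below is a stand-in used purely so the sketch runs, and is NOT a public-key scheme. All names are hypothetical.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import Callable

# verify(key, message, signature) -> bool. On the real chip this would be an
# ECDSA P-256 check against a public key; here it is pluggable.
Verifier = Callable[[bytes, bytes, bytes], bool]


@dataclass
class SignedBlob:
    payload: bytes    # a CRK public key, or a firmware image
    signature: bytes  # signature over payload by the key one level up


def boot_allowed(mrk: bytes, crk: SignedBlob, firmware: SignedBlob,
                 verify: Verifier) -> bool:
    """MRK vouches for the CRK; the CRK vouches for the firmware."""
    if not verify(mrk, crk.payload, crk.signature):
        return False  # this CRK was never signed with the MRK
    return verify(crk.payload, firmware.payload, firmware.signature)


# --- Stand-in signature scheme (NOT ECDSA), for demonstration only ---
def toy_sign(key: bytes, msg: bytes) -> bytes:
    return hmac.new(key, msg, hashlib.sha256).digest()


def toy_verify(key: bytes, msg: bytes, sig: bytes) -> bool:
    return hmac.compare_digest(toy_sign(key, msg), sig)
```

The structure shows why a self-generated CRK is useless on its own: without the MRK signature, the first check fails before any firmware signature is even considered.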

> Now, who controls the Private Root Key? Do we all get our own? How are they protecting this key?

Design Shift; it is generated and stored in an HSM. Whether there will be one key per device, per batch, or for all devices is not clear yet. One key per device means we would have to sign releases with every single key ever generated. It does not really matter anyway, because everyone has to trust us in this case.

> A malicious party that has access to these keys, or is subdued by the government, can only modify new devices, not prior devices.

Secure boot verifies the firmware signature, it does not prevent reprogramming.

> The problem is one of trust: I want to be able to sign my own bootloader code with my own generated key, and not have to be concerned that the ORWL team have received an NSA letter, had the key extracted, etc. (Yes this is extremely paranoid, but that’s what this device is trying to sell itself on)

This is not paranoid; it is the central problem: how do we remove ourselves from the equation? You can never prove that you didn’t sign something malicious. Even if we provide a mechanism to externally verify the firmware, so you could notice if we signed an unpublished firmware, it is still too cumbersome to properly replace secure boot.

This should give us multiple scenarios:

- You use the original CRK:
  - You can boot the original signed firmware.
  - You can verify the firmware by loading a small signed applet directly into RAM, to check that you are not running a malicious firmware that we have signed but not published.
  - You can boot unsigned code using a small bootloader that wipes the SSD keys, displays “unsecure mode” on the OLED screen, and, after confirmation from the user, jumps to a fixed offset without verification.
  - We could write a minimal bootloader that implements secure boot without relying on the MRK, and let you lock it into the flash. This is obviously not ideal.
- You generate your own CRK, send it to us to get it signed (we’d love to work around that too), and burn it. We cannot make promises on this scenario yet.
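For the original-CRK scenario, the choice between those boot paths might be organized roughly as below. This is a hypothetical sketch of the decision logic only: the enum, the function names, and the order in which conditions are checked are invented for illustration, and the actual bootloader design was still being worked out. The one property it deliberately encodes is that the SSD keys are wiped before any unverified code runs.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional


class BootPath(Enum):
    VERIFY_APPLET = auto()  # signed applet loaded into RAM checks the flash
    SIGNED = auto()         # normal boot of the published, signed firmware
    UNSECURE = auto()       # unsigned code, after wiping keys and warning
    REFUSE = auto()         # unsigned code and no user confirmation


@dataclass
class Device:
    ssd_key: Optional[bytes]
    oled_text: str = ""


def choose_boot_path(verify_requested: bool, signature_ok: bool,
                     user_confirmed_unsigned: bool) -> BootPath:
    """Pick a boot path under the original-CRK scenario (hypothetical policy)."""
    if verify_requested:
        return BootPath.VERIFY_APPLET
    if signature_ok:
        return BootPath.SIGNED
    if user_confirmed_unsigned:
        return BootPath.UNSECURE
    return BootPath.REFUSE


def enter_unsecure_mode(dev: Device) -> None:
    """Wipe the SSD keys before any unverified code runs, then warn the user."""
    dev.ssd_key = None
    dev.oled_text = "unsecure mode"
```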

We are working on the datasheet too, and will provide updates as soon as we can.

If you have ideas about better architectures, please share! You can directly contact us or we can setup a public mailing list for longer exchanges if you want.

Question to Leo:

What will the computer tell the OS once the key moves more than 10 meters away? Will it emulate the “Sleep” button being pressed, or will it emulate the “Power” button being pressed?

Or will it just turn off the HDMI and USB ports, leaving the computer running “headless” in the background?

Is this configurable in any way? It would be nice to be able to configure it so that, instead of putting the computer into “sleep” or “power save” mode, it sends a power-button press, effectively forcing the computer to shut down completely when the key is more than 10 meters away. (But it would still shut the computer down gently, allowing the OS to update, save settings and such at shutdown.)

How is the password entry secured? E.g., it has to enable one or more USB ports to allow the keyboard to enter the password, and possibly also enable the HDMI output to ask for the password on-screen. Will the USB ports then be routed to some MCU that will not allow anything other than a keyboard to be present?

Wouldn’t it be better to design a dedicated PIN pad somewhere? Maybe even a touch PIN pad on the display on top.

Also, what will happen at tamper detection? Will it just erase the keys and, once tamper detection is reset (e.g., the mesh is restored), put itself in an “uninitialized” state, allowing you to enroll key fobs and create new SSD encryption keys to start using the computer again from scratch (of course, you would lose all data and have to reinstall everything)? Or will the security chip “brick” itself, making it useless?

And also about keyfobs: Will it be possible to enroll and/or delete keyfobs for the computer?

Actually, being able to back up the keys, so that if it does fail by accident you can get back to your data, would be an important feature, I’d think. Store your keys somewhere safe, maybe encrypted with PGP using a password you remember. Or whatever; key management is a separate issue.

Or you could at least have that as an option, the other option being “once it’s gone, it’s gone”, so even the owner can’t be forced to unlock all his child pr0n once the cops have opened it.

It just seems inconvenient to lose all your data when a fuckup happens. That’s PCs, they’re always going wrong.

It seems much easier and less dangerous to let people make encrypted backups with their favorite software than to allow exporting the key (you cannot easily memorize it, and storing it anywhere would make the secure MCU useless). Having to extract the M.2 SSD and decrypt it manually is a pain compared to traditional backups. PCs do go wrong, so you should already have such a backup strategy.

> What will the computer tell the OS once the key moves more than 10 meters away? Will it emulate the “Sleep” button being pressed, or will it emulate the “Power” button being pressed?

I think we will implement all three (run, sleep, shutdown); we need to manage S3 anyway, and some people want to leave their computer running or shut it down completely. I should mention that in all cases the accelerometer will cut the power if the computer is moved while it is locked, so you cannot move it even with a UPS.
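A hypothetical sketch of that policy: the owner picks what happens when the fob goes out of range, and, independently of that choice, motion seen by the accelerometer while locked cuts power. The event names, the motion threshold, and the simplification of "moved" to "acceleration magnitude deviates from 1 g" are all assumptions for illustration.

```python
from enum import Enum
from typing import List, Optional


class AwayAction(Enum):
    RUN = "run"            # keep running headless; USB and HDMI are disabled
    SLEEP = "sleep"        # ask the OS to suspend to RAM (ACPI S3)
    SHUTDOWN = "shutdown"  # emulate a power-button press for a clean power-off


# Hypothetical: deviation from 1 g (gravity at rest) that counts as "moved".
MOTION_THRESHOLD_G = 0.1


def on_fob_lost(action: AwayAction) -> List[str]:
    """Events the firmware would raise when the key fob leaves range."""
    events = ["disable_usb", "disable_hdmi", "lock"]
    if action is AwayAction.SLEEP:
        events.append("request_s3")
    elif action is AwayAction.SHUTDOWN:
        events.append("emulate_power_button")
    return events


def on_accel_sample(locked: bool, magnitude_g: float) -> Optional[str]:
    """In every mode, cut power if the machine is moved while it is locked."""
    if locked and abs(magnitude_g - 1.0) > MOTION_THRESHOLD_G:
        return "cut_power"
    return None
```

Keeping the motion check separate from `on_fob_lost` mirrors the point that it applies "in all cases", whichever away action the owner configured.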

> How is the password entry secured? E.g., it has to enable one or more USB ports to allow the keyboard to enter the password, and possibly also enable the HDMI output to ask for the password on-screen. Will the USB ports then be routed to some MCU that will not allow anything other than a keyboard to be present?

The MCU has a USB 2.0 controller, but I do not think it supports host mode, and it would expose too much attack surface anyway. Password entry is handled by the UEFI, whose integrity is checked before it is executed. Of course there will still be vulnerabilities, but a dedicated hardened keypad was too impractical to add. One fun solution that was proposed is to tilt or rotate ORWL, using the accelerometer and the screen to compose a short PIN.

> Also, what will happen at tamper detection?

The SNVRAM encryption key is wiped, and you will have to enroll the keys and generate a new SSD encryption key again. The machine is not bricked.
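The tamper response described here can be sketched as a small state machine: a tamper event sends the device back to an uninitialized state rather than bricking it, and the old SSD contents become unreadable because the key material is gone. State and method names are hypothetical, and wiping the SNVRAM encryption key is modeled simply as forgetting the SSD key and the enrolled fobs.

```python
import os
from enum import Enum, auto
from typing import Iterable, Optional, Set


class State(Enum):
    UNINITIALIZED = auto()  # no fobs, no SSD key: ready for enrollment
    ENROLLED = auto()       # normal operation


class SecureMcu:
    """Toy model of the tamper response; names are hypothetical."""

    def __init__(self) -> None:
        self.state = State.UNINITIALIZED
        self.ssd_key: Optional[bytes] = None
        self.fobs: Set[str] = set()

    def enroll(self, fob_ids: Iterable[str]) -> None:
        """Initial enrollment: register key fobs and generate a fresh SSD key."""
        if self.state is not State.UNINITIALIZED:
            raise RuntimeError("already enrolled")
        self.fobs = set(fob_ids)
        self.ssd_key = os.urandom(32)
        self.state = State.ENROLLED

    def on_tamper(self) -> None:
        """Mesh broken: forget every secret but remain usable, not bricked."""
        self.ssd_key = None
        self.fobs.clear()
        self.state = State.UNINITIALIZED
```

Because `enroll` only works from the uninitialized state, adding a fob later means going through enrollment again, which matches the answer about adding key fobs.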

> And also about keyfobs: Will it be possible to enroll and/or delete keyfobs for the computer?

Adding, not without going through the initial enrollment procedure. Revocation would be nice, but it needs to be authenticated to avoid DoS. I’m taking note. :)

Is the name supposed to recall ‘Orwell’ or is that just me?

I think it is.

The traces need to be an integral part of the glass shell, just like that self-destructing glass chip in another article.

Any attempt, and the stressed glass shatters, breaking all connections.

I enjoy all the comments along the lines of “well, I can’t think of how to break into it, so it must be secure!”

From what I see, it does not have an Ethernet port. For a security-focused device, a Wi-Fi-only option is incredibly stupid.