We had some incredible speakers at the Hackaday SuperConference. One of the final talks was given by [Kay Igwe], a graduate electrical engineering student at Columbia University. [Kay] has worked in nanotechnology as well as semiconductor manufacturing for Intel. These days, she’s spending her time playing games – but not with her hands.

Many of us love gaming, and probably spend way too much time on our computers, consoles, or phones playing games. But what about people who don’t have the use of their hands, such as ALS patients? Bringing gaming to the disabled is what prompted [Kay] to work on Control iT, a brain interface for controlling games. Brain-computer interfaces evoke images of Electroencephalography (EEG) machines. Usually that means tons of electrodes, gel in your hair, and data that is buried in the noise.

[Kay Igwe] is exploring a very interesting phenomenon that uses flashing lights to elicit very specific, easy-to-detect brain waves. This type of interface is very promising and is the topic of the talk she gave at this year’s Hackaday SuperConference. Check out the video of her presentation, then join us after the break as we dive into the details of her work.

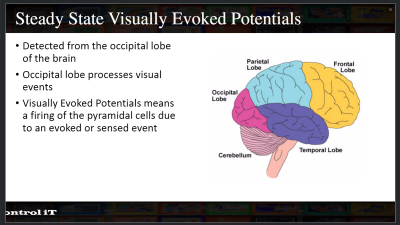

[Kay] is taking a slightly different approach from conventional EEG-based systems. She’s using Steady State Visually Evoked Potential (SSVEP). SSVEP is a long name for a simple concept. Visual data is processed in the occipital lobe, located at the back of the brain. It turns out that if a person looks at a light flashing at, say, 50 Hz, their occipital lobe will have a strong electrical signal at 50 Hz, or a multiple thereof. Signals as high as 75 Hz, faster than is consciously recognizable as flashing, still generate electrical “flashes” in the brain. The signal is generated by neurons firing in response to the visual stimulus. The great thing about SSVEP is that the signals are much easier to detect than standard EEG signals. Dry contacts work fine here – no gel required!

[Kay’s] circuit is a classic setup for amplifying low power signals generated by the human body. She uses an AD620 instrumentation amplifier to bring the signals up to a reasonable level. After that, a couple of active filter stages clean things up. Finally, the brainwave signals are sent into the ADC of an Arduino.
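
To get a feel for the numbers in the first stage, here is a small Python sketch of the AD620 gain arithmetic. It relies on the gain relation published for the part, G = 1 + 49.4 kΩ / R_G. The resistor values and function names are made up for illustration; the talk summary does not say what gain [Kay] actually chose.

```python
# Gain arithmetic for an AD620 instrumentation amplifier.
# Gain relation for the part: G = 1 + 49.4 kOhm / R_G.
# The example values below are assumptions, not figures from the talk.

AD620_INTERNAL_OHMS = 49_400.0


def ad620_gain(r_gain_ohms: float) -> float:
    """Gain set by the external resistor R_G (in ohms)."""
    if r_gain_ohms <= 0:
        raise ValueError("R_G must be positive")
    return 1.0 + AD620_INTERNAL_OHMS / r_gain_ohms


def ad620_resistor_for_gain(gain: float) -> float:
    """External resistor (in ohms) needed for a target gain above 1."""
    if gain <= 1.0:
        raise ValueError("gain must be greater than 1")
    return AD620_INTERNAL_OHMS / (gain - 1.0)


if __name__ == "__main__":
    r_g = 499.0  # hypothetical standard-value resistor
    gain = ad620_gain(r_g)
    print(f"R_G = {r_g:.0f} ohm gives a gain of about {gain:.1f}")
    # A hypothetical 10 microvolt scalp signal after this stage, in millivolts:
    print(f"10 uV in -> {10e-6 * gain * 1e3:.2f} mV out")
```

Even with a gain near 100, a microvolt-level input only reaches the millivolt range, which is one reason further gain and filtering stages typically follow the instrumentation amplifier.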

The Arduino digitizes the data and sends it on to a computer. [Kay] used Processing to analyze the signal and display output. In this case, she’s performing a Fast Fourier Transform (FFT), then analyzing the frequencies of the brain signal. Finally, the output is displayed in the form of a game.
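
As a rough illustration of the FFT step, here is a minimal Python sketch. It is not [Kay]’s Processing code. It assumes a 512 Hz sample rate, a 512-sample window (so each FFT bin is 1 Hz wide), and two stimulus lights at 50 Hz and 75 Hz; all of these values and every function name are hypothetical. The test signal is synthetic: a sine wave plus Gaussian noise, standing in for real electrode data.

```python
import cmath
import math
import random


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def dominant_stimulus(samples, sample_rate_hz, stimulus_hz):
    """Return the stimulus frequency whose nearest FFT bin holds the most power."""
    n = len(samples)
    mean = sum(samples) / n  # remove the DC offset before transforming
    spectrum = fft([s - mean for s in samples])

    def power_at(freq_hz):
        k = round(freq_hz * n / sample_rate_hz)  # nearest bin index
        return abs(spectrum[k]) ** 2

    return max(stimulus_hz, key=power_at)


def synthetic_ssvep(freq_hz, sample_rate_hz, n, noise_sigma=0.5, seed=0):
    """Fake 'brain' signal: a unit sine at freq_hz plus Gaussian noise."""
    rng = random.Random(seed)
    return [
        math.sin(2 * math.pi * freq_hz * i / sample_rate_hz)
        + rng.gauss(0.0, noise_sigma)
        for i in range(n)
    ]


if __name__ == "__main__":
    signal = synthetic_ssvep(50.0, 512.0, 512)
    print(dominant_stimulus(signal, 512.0, [50.0, 75.0]))
```

Comparing only the bins at the known flicker rates keeps the decision simple: the game only needs to know which light is being watched, not the full spectrum.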

The video game [Kay] designed allows the user to move a character around the screen. This is done by looking at one of two blinking lights. One light causes the player to run to the right, while the other causes the player to move upwards.

[Kay] has a lot planned for Control iT, everything from controlling wheelchairs to drones. We hope she has time to get it all done between her graduate classes at Columbia!

I wonder.. Put something like this at the back of a head mounted display strap.

Have the icons on the HMD flash at different rates.

Detect which one the user stares at for longer than trigger time.

You’d have something like Glass, but without the annoying “OK Google” or touchpadding or suchlike.
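
The "stare longer than a trigger time" idea above could be sketched roughly like this in Python. It assumes some upstream detector already reports which flashing icon (if any) is dominating the signal; the class name, icon labels, and the one-second trigger time are all hypothetical.

```python
from typing import Optional


class DwellTrigger:
    """Fire once when the same icon has been detected for trigger_s seconds."""

    def __init__(self, trigger_s: float) -> None:
        self.trigger_s = trigger_s
        self._current: Optional[str] = None  # icon currently being watched
        self._since = 0.0                    # time the current icon was first seen
        self._fired = False                  # avoid re-firing while the gaze stays put

    def update(self, detected: Optional[str], now_s: float) -> Optional[str]:
        """Feed one detection; return the icon name when it triggers, else None."""
        if detected != self._current:
            # Gaze moved to another icon (or away): restart the timer.
            self._current = detected
            self._since = now_s
            self._fired = False
            return None
        held_long_enough = now_s - self._since >= self.trigger_s
        if detected is not None and not self._fired and held_long_enough:
            self._fired = True
            return detected
        return None


if __name__ == "__main__":
    trigger = DwellTrigger(trigger_s=1.0)
    stream = [("mail", 0.0), ("mail", 0.5), ("map", 0.8), ("map", 1.5), ("map", 1.9)]
    for icon, t in stream:
        fired = trigger.update(icon, t)
        if fired:
            print(f"selected {fired} at t={t}")
```

Firing only once per continuous stare keeps a long look from registering as repeated presses.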

I feel like detecting which light someone is looking at should be simpler. I’d guess detecting which direction someone is looking is a lot easier than detecting brain signals. Or is this for people who can’t move their eyes at all?

Eye-tracking works if you’ve got a static frame of reference, but this works outside of those confines.

One of these with Wi-Fi & brains could remotely control items in your home. The rig wouldn’t need to calculate where you were, where your eyes are, and what you’re looking at. It’ll just be “Oh, you’re staring at the blinds controller. Better operate them.”

Imagine the applications with people with paralysis… they’d get so much control of their life back, instead of relying on a carer for everything.

Reading the wiki on EEG, I’m surprised to learn that all the analysis is done on very, very low frequencies, all < 100 Hz.

Has no one ever explored higher frequencies? I simply can't believe there is nothing above 100 Hz going on in our brains electrically.

Sure, an Arduino is fine for sampling signals < 100 Hz, but the dynamic range will still be terrible (10-bit ADC).

As for higher-frequency components: with cheap, high-bandwidth SDR devices available these days, I'm surprised no one has tried this yet. All of them have an LNA built in, and newer ones have a 12-bit or even 16-bit ADC.

I got my SDRPlay RSP recently but the lowest tune-able frequency is 100kHz, for the most common RTLSDR tuners it's ~20MHz (E4000), and a USRP is probably still not in the price range of the average tinkering hobbyist.

So, I wonder, what other electrical signals are there to explore in the human brain ?

Any doctors in the house ?

It seems the “Direct Sampling Mod” (i.e., bypass the tuner and feed the ADC directly) on some RTLSDRs is able to go all the way down to DC, and still have a 2MHz bandwidth.

I am a doctor (MD, MEng (Electronics)) but not an EEG expert :-)

That said, have a look at TI’s ADS129x range of chips. They offer a maximum of 32kHz of sampling at 24 bits/sample. Those are specs that far exceed what is necessary to detect well-known EEG features like the various types of ‘brain waves’ that are relatively high amplitude, low frequency signals reflecting some sort of synchronised firing of many neurons. Even fast firing neurons do not exceed a few hundreds of Hz in frequency. The temporal (and spatial) low pass filters formed by the skull and the electrodes will attenuate even these low amplitude, ‘high’ frequency events. So yes, it is possible to capture this kind of data, albeit attenuated and eroded by all sorts of noise. As far as I know, a fair bit of research is currently directed towards multichannel EEG combined with 3D/4D electrical models of the cortex and skull. Related work uses fMRI or FIR (both based on blood flow rather than electrical potentials).

Thanks for sharing those insights, especially about the low pass filter. And as you say it’s a very noisy medium. Maybe some phased-array technology can be used to determine direction and polarization so one can better isolate a signal by those properties.

Your ADC suggestion (32kHz, 24bit) also made me realize a reasonable soundcard is up to the job as well; most audio devices can sample up to 192kHz and some high-end ones also do 24 bit.

I have been pondering the soundcard route as well. Trouble is that you need very high quality DC reject since the electrodes and the skin on the head create a DC voltage that far exceeds the AC signals that you are looking for. We’re talking microvolts here.

And please keep in mind that some form of galvanic separation is required between your skull and mains power.

What about a number of lights, each at a different frequency, preferably prime numbers like 47, 53, 59, 61, 67, 71 and 73Hz? Depending on the occipital EEG response from flickering lights in the peripheral versus central visual fields, this could offer the opportunity of selecting one of a number of options by focusing visual attention on one of these lights.
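
One way to see why prime flicker rates might help: since the response can appear at multiples of the flicker rate, two lights become ambiguous wherever their harmonics coincide. The lowest shared harmonic of two integer frequencies is their least common multiple. The Python sketch below is a rough check under that assumption, with a hypothetical function name and integer-Hz rates only; whether harmonics that high matter in practice is not something the article covers.

```python
from itertools import combinations
from math import gcd


def lowest_shared_harmonic(freqs_hz):
    """Return (harmonic_hz, (f1, f2)) for the lowest harmonic any two lights share.

    freqs_hz must contain at least two distinct positive integers.
    """
    best = None
    for a, b in combinations(freqs_hz, 2):
        shared = a * b // gcd(a, b)  # least common multiple of the pair
        if best is None or shared < best[0]:
            best = (shared, (a, b))
    return best


if __name__ == "__main__":
    primes = [47, 53, 59, 61, 67, 71, 73]
    print(lowest_shared_harmonic(primes))    # pair of primes: LCM is their product
    print(lowest_shared_harmonic([50, 75]))  # 3 x 50 Hz and 2 x 75 Hz coincide
```

For the prime set, the first clash sits in the kilohertz range, far above anything the comments here discuss for EEG. Note also that 2 × 47 = 94 Hz is already above 73 Hz, so no light's second harmonic lands on another light's fundamental; that part holds for any set of rates spanning less than an octave, prime or not.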

How about gathering big data sets with color cards and then using them to treat thinking of a color as a button press? Eye tracking + multicolor button presses. Plenty to work with.

This is awesome,

thank you for sharing Kay.

Excellent work, and it’s the low-cost way, without throwing a ton of money into the project…