Security assumes there is something we can trust: the computer doing the encrypting is assumed to be trustworthy, and so is the computer doing the decrypting. This is the only logical mindset for anyone concerned about security; if both ends can be trusted, you don’t have to worry about all the routers handling your data on the Internet, eavesdroppers, or really anything else. Security breaks down when you can’t trust the computer doing the encryption. Such is the case today. We can’t trust our computers.

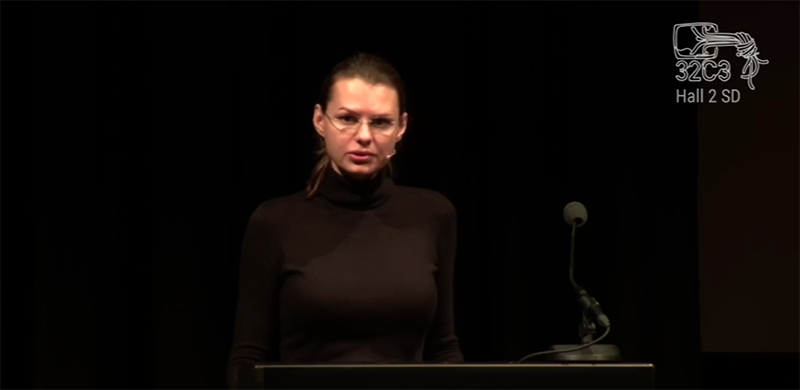

In a talk at this year’s Chaos Communication Congress, [Joanna Rutkowska] covered the last few decades of security on computers – Tor, OpenVPN, SSH, and the like. These are, by definition, meaningless if you cannot trust the operating system. Over the last few years, [Joanna] has been working on a solution to this in the Qubes OS project, but everything is built on silicon, and if you can’t trust the hardware, you can’t trust anything.

And so we come to an oft-forgotten aspect of computer security: the BIOS, UEFI, Intel’s Management Engine, VT-d, Boot Guard, and the mess of overly complex firmware found in a modern x86 system. This is what starts the chain of trust for the entire computer, and if a computer’s firmware is compromised, it is safe to assume the entire computer is compromised. Firmware is also devilishly hard to secure: attacks that bypass the write protection on the tiny Flash chip holding it have been demonstrated. A Trusted Platform Module could measure the firmware and unlock secrets only if the measurement matches a known-good value, but this has also been shown to be vulnerable to attack. Another method of securing a computer’s firmware is the Core Root of Trust for Measurement (CRTM), the first code to run at boot, which measures the firmware and is supposed to live in immutable, ROM-like memory. The specification for the CRTM doesn’t say where this memory is, though, and until recently it has been implemented in a tiny Flash chip soldered to the motherboard. We’re right back where we started, then, with an attacker simply swapping out the CRTM chip along with the chip containing the firmware.
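The measure-then-unlock idea behind the TPM and the CRTM can be sketched in a few lines. This is a simplified model, assuming SHA-256 and made-up stage contents; the function names are hypothetical, and a real TPM performs the extend and the unseal policy check inside the chip. Each boot stage is hashed and folded into a Platform Configuration Register (PCR), so the final value depends on every stage and on their order, and a secret sealed to the known-good value is released only if this boot matches it.

```python
import hashlib
from typing import List, Optional

PCR_SIZE = 32  # SHA-256 digest length in bytes


def extend(pcr: bytes, data: bytes) -> bytes:
    """Fold one measurement into a PCR: new = SHA256(old || SHA256(data))."""
    measurement = hashlib.sha256(data).digest()
    return hashlib.sha256(pcr + measurement).digest()


def measure_boot(stages: List[bytes]) -> bytes:
    """Start from an all-zero PCR (its value after reset) and extend it stage by stage."""
    pcr = bytes(PCR_SIZE)
    for stage in stages:
        pcr = extend(pcr, stage)
    return pcr


def unseal(secret: bytes, sealed_pcr: bytes, current_pcr: bytes) -> Optional[bytes]:
    """Release the secret only if this boot measured the same as the one it was sealed to.

    A real TPM enforces this policy internally; a plain comparison stands in for it here.
    """
    return secret if current_pcr == sealed_pcr else None


# Hypothetical stage contents standing in for real firmware and bootloader images.
good_chain = [b"bootblock v1", b"firmware v1", b"bootloader v1"]
tampered_chain = [b"bootblock v1", b"firmware v1 + implant", b"bootloader v1"]

sealed_pcr = measure_boot(good_chain)
print(unseal(b"disk key", sealed_pcr, measure_boot(good_chain)))      # b'disk key'
print(unseal(b"disk key", sealed_pcr, measure_boot(tampered_chain)))  # None
```

Note what the sketch takes for granted: the code that performs the first measurement. If an attacker can replace that code (the CRTM), it can simply report the measurements of the good chain, which is exactly the weakness described above.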

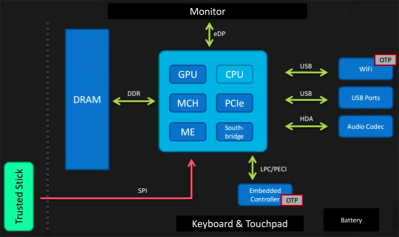

But Intel has an answer to everything, and for this house of cards of firmware security, Intel introduced its Management Engine. This is a small microcontroller running on every Intel CPU all the time that has access to RAM, WiFi, and everything else in a computer. It is security through obscurity, though. Although the ME can elevate privileges of components in the computer, nobody knows how it works. No one outside Intel has the source code for the operating system running on the Intel ME, and the ME is an ideal target for a rootkit.

Is there hope for a truly secure laptop? According to [Joanna], there is hope in simply not trusting the BIOS and other firmware. Trust would instead come from a ‘trusted stick’ – a small memory stick containing a Flash chip that verifies the computer’s firmware independently of the computer’s own hardware.
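One way a trusted stick’s check could look: the stick holds digests of firmware builds the owner trusts (a coreboot build, for instance) and compares them with a hash of the laptop’s flash contents. This is an illustrative sketch only; the allow-list, the placeholder image bytes, and the function name are hypothetical, and the genuinely hard part, reading the flash without relying on the laptop’s own (possibly compromised) hardware, is not shown.

```python
import hashlib

# Hypothetical allow-list: SHA-256 digests of firmware builds the owner has audited.
# The stick is assumed to keep this list in storage the laptop cannot write to.
AUDITED_IMAGE = b"coreboot build, audited"  # placeholder bytes, not a real image
KNOWN_GOOD_SHA256 = {hashlib.sha256(AUDITED_IMAGE).hexdigest()}


def firmware_is_trusted(flash_dump: bytes) -> bool:
    """Hash the raw flash contents read from the laptop and look the digest up."""
    return hashlib.sha256(flash_dump).hexdigest() in KNOWN_GOOD_SHA256


print(firmware_is_trusted(AUDITED_IMAGE))                # True
print(firmware_is_trusted(AUDITED_IMAGE + b"\x90\x90"))  # False
```

A single changed byte anywhere in the image changes the digest, so the comparison catches any modification, but only if the dump it is handed is genuine.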

This, together with open source firmware like coreboot, is the beginning of a computer that can be trusted. While the technology for a device like this could exist, it will be a while before something like it is found in the wild. There’s still a lot of work to do, but at least one thing is certain: secure hardware doesn’t exist, but it can be built. Whether secure hardware comes to pass is another thing entirely.

You can watch [Joanna]’s talk on the 32C3 streaming site.

Why bother with x86? It’s a legitimate question, because there is nothing about x86 that doesn’t exist in more open or fully open architectures. ARM chips are way more open, and ARM laptops (Chromebooks) actually exist. I would love to see RISC-V chips get made, but it is already possible to run it on a beefy FPGA.

Whatever the bad qualities are, they are all insignificant when you consider the amount of existing x86 hardware, the hardware in development, and software support for the architecture. Most of us are stuck with x86. That said, I’d donate to coreboot development if I had money to donate. It’s too important a project to be stopped.

The fact that Intel decided to start modifying hardware features on a per-user basis violates the very foundation of having a standard. Since mobile chips now outsell desktop chips 4:1, Intel has, out of desperation, done things to its user base that no sane CEO should allow.

Additionally, the whole BIOS-level “improvement” concept is still a ridiculous notion. Yet most of the industry turned a blind eye to the collusion between Microsoft and Intel to bring in a concept proven architecturally flawed by IBM & Dell in the 1990s. It makes sense that SoCs are taking over, as the ecosystem of buggy proprietary motherboard hardware becomes irrelevant to the design cycles.

As I write on a three-year-old computer that is still more powerful than anything I can now buy retail, I begin to wonder whether Moore’s law fell and no one else noticed. The age of good-enough computing power has diluted the market for high-end chips.

I sold my stock in MSFT years ago, and dumped INTC when they attempted to sell $120 mobile chips to compete with a several-year-old $5 ARM6 SoC. Now it’s MSFT’s turn to try selling yet another mobile OS to compete with a free OS that’s already running on 75% of mobile devices. I often wonder if these guys are shorting their own stock on a dare from peers.

Well said, sir!

I have a feeling that one of these days, people will realize how important lightweight software is, as Intel’s tick-tock slows and transistors approach the size of an atom. Moore’s Law does indeed only go so far, and if we want more productivity the best way to do it is not to get more power, but to clean up the crud that makes us need that power.

Of course, certain things do need more power: games, simulations, AI. But for these, parallel processing is much more effective, so we can just use GPUs or something like Knights Corner.

TOR sadly runs on x86 architecture only!

I’m not quite seeing how it is x86 only. Any more details as to why?

https://gitweb.torproject.org/tor.git/log/?qt=grep&q=arm

It’s not x86 only. They’re full of shit :).

On the contrary. It’s even available for Android.

Android isn’t a processor architecture. In fact, I believe an x86 port of Android exists, or will exist.

Yes it does; however, relatively few devices running it use Intel chips. Most of them are ARM-based, hence saying something runs on Android almost always means it can run on ARM.

Nothing prevents you from compiling TOR for different architectures; it’s all Linux. TOR’s excuse is that the architecture and kernel code have to be completely documented and tested, including the RNG. Preferably the x86 should not be too recent; think 286 or 486! Running Tor on Android on a phone is beyond facepalm!

But Tor is only so-so safe, even if you do everything right. The FBI and other agencies have total mapping capability on the exit nodes, so if you are starting a drug webshop with lots of traffic, don’t bother using TOR; traffic volume analysis is going to pinpoint you eventually. Once in a while, as in “I’m a reporter in a dictatorship (which is opposed by the US)”, you are home free!

Dedicated hardware exists that emulates the old x86 processors at modern speeds! Such a Tor node is available for $800 from some guys in Spain (open source).

You can download an image to turn your Raspberry Pi into a TOR server.

I’ve got a Samsung NP900X4D with an AES-NI-capable i5. Guess what? They locked it out in the BIOS. I now need to reverse-engineer it a little to rewrite the MSR value to the correct one, and I’m not even sure that is going to help.
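On Linux, a quick way to see whether the firmware has hidden AES-NI is to check the CPU feature flags the kernel reports. When the BIOS disables AES-NI, the CPU typically stops advertising it via CPUID, so the `aes` flag disappears from `/proc/cpuinfo`. This sketch only detects that symptom; it does not read or write the MSR mentioned above, and the sample text is a trimmed, made-up excerpt.

```python
import os


def cpu_flags(cpuinfo_text: str) -> set:
    """Return the feature flags from the first 'flags' line of /proc/cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def aes_ni_exposed(cpuinfo_text: str) -> bool:
    """True if the kernel lists the 'aes' flag, i.e. the CPU currently reports AES-NI."""
    return "aes" in cpu_flags(cpuinfo_text)


# Trimmed, made-up excerpt for illustration.
sample = "processor\t: 0\nflags\t\t: fpu sse2 ssse3 aes avx\n"
print(aes_ni_exposed(sample))  # True

# On a Linux machine, check the real file as well.
if os.path.exists("/proc/cpuinfo"):
    with open("/proc/cpuinfo") as f:
        print("AES-NI exposed:", aes_ni_exposed(f.read()))
```

If the flag is missing on a CPU whose datasheet lists AES-NI, the firmware is the likely culprit.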

The question is – if I cannot trust manufacturers not to disable features I need when configuring the BIOS, how can I trust them to keep my privacy interests in mind in that same BIOS?

Oh, and I’ve got an Asus EEE 900, which disables the PCI-E slot if the card in it is not a wireless card. I was planning to watch videos with a Broadcom HD decoder installed, but after a week of tinkering I understood it’s not going to happen, and I’m not the only one. Hey, is anybody here capable of reverse-engineering an AMI BIOS? I’d need some pointers on which tools to use to disassemble the code, what the best practices would be, where to search for that code, etc…

I think you will struggle to get a BIOS modded here. Try BIOS modding forums.

This “trusted stick”… what if someone hacks it?

A tamper-proof security coprocessor must be bug-free, unhackable, and unmodifiable. It is a MUST. The idea is that you keep it small and easy to audit, then go through every possible exploit in it, from typos in the sources to reading out each bit from sliced chips using an SEM, and hope you have covered every case it will ever encounter.

Exactly. Nothing is impossible, but some things are harder than buying a rubber hose.

What if we make our own? Mooltipass 2?

And so it begins. The future, a point of fundamental trust. Not governed or controlled but honest.

It doesn’t matter how good the software is, if the hardware is acting as a double agent.

It has to be from scratch because the hardware is created by untrusted software.

The hacker community will have to create its own network of independent communication.

It’s the only way to regain what was signed away in unread licence agreements and the privacy that has been taken without consent or justification.

It is indeed a huge task. The government will fight all the way but we cannot give up and accept ignorance.

“Although the ME can elevate privileges of components in the computer, nobody knows how it works.” – well, “nobody” is too strong an expression. Ideal place for some “government approved security software”, for the greater good.

Indeed. My trust just hit zero at “small microcontroller running on every Intel CPU all the time that has access to RAM, WiFi, and everything else in a computer” – they managed to ring just about all my alarm bells without providing one single reason I should trust this hyper-hypervisor to work for me and not against me. “Just trust me” is the quickest way to have me “NOPE!” the hell out of there…

+1

I thought they were describing a backdoor at first, not a security feature

First of all… thank you, Joanna Rutkowska; it was a very, very clear explanation of how it could be done.

But… the future? It’s the present! I have a Teclast X98 Air II and I’ve seen this problem in action. The tablet bricks its own BIOS if you try to install Windows x86, and you need to manually re-flash the chip to bring it back to life.

How does it work?

This tablet was built on an Intel x64 architecture with a BIOS (that was the reason I bought it), and this BIOS makes sure nobody uses Windows x64. How?

Inside this BIOS there is a Windows BOM (hahaha, but that really is its name, it’s not a joke) provided by American Megatrends. How did I discover this “issue”? By dumping the flash contents with a Raspberry Pi (SPI is used for other things too…), inspecting them with a hex editor, noticing a hard-coded path, searching Google (I appreciate the hard work of searching pan.baidu.com), and downloading the partially leaked source code for this BIOS. In that package you will find an American Megatrends help file explaining to partners how to enable it.

You can download the leaked code here: http://www.bdyunso.com/fileview-3jro8r/ . The code is for its sibling, the Teclast x80HD, but it uses the same tPAD core and the same certificate to sign the BIOS.

So… it’s not a joke, it’s a reality, and it’s a big problem today.

>Joanna

Hahaha oh wow.

Joanna Rutkowska has done technically the most hardcore RE work ever done on x86, but you’ve probably never heard of her because she’s not pop-culture friendly like all the mobile and gaming console developers, or the lazy but critical crypto and ring zero entities around x86 who talk about how crap the industry is but are lost when asked about progressive design.

I’d like to see her do more around TXT, TPM, and virtualization. Some researchers will handle all the overflow protections, like NX and that new Skylake integer overflow protection, with quick hacks. And who really cares about memory protection when there is a debug API and extremely bad ACL and MAC design, even in restricted mode?

Please read Ken Thompson’s Turing Award address, “Reflections on Trusting Trust”:

https://www.ece.cmu.edu/~ganger/712.fall02/papers/p761-thompson.pdf

Then consider what would happen if someone executed the hack on a copy of gcc and got it into circulation in a major Linux distro.

Trapdoor compilers are theoretically possible, yet most compiler code generation is developed against several regression-based test environments. Thus, some attack vectors might be inserted, but the countless binary optimization options and the performance-tracking and fuzz-testing utilities make it highly unlikely they would go unnoticed. Multiple public-key signing schemes also make modifying the existing binaries problematic.

Such a technique would only really be possible if the entire existing open-source code base came into existence after the exploit was deployed. Therefore, this unlikely event could only really succeed on a “new” architecture porting project where no software had been deployed yet and the platform cross-compiler’s binary output was never checked by the compiler developers.

Note that most modern laptops do come with Computrace enabled in the BIOS, and users can’t truly get rid of it…

This allows a remote user to do pretty much anything at the hardware level (it silently re-infects the system and can wipe a hard drive), and because it is a “slow” RAT, it generates minimal network traffic spread over several days. Anyone who buys a used laptop or an infected hard drive is vulnerable to this simple issue. Note that 3 out of 4 Dell laptops our IT staff got from Newegg had it pre-activated… while Acer and Toshiba enable this feature at the factory… Indeed, there are known payloads that target this hardware, and they have been shown to exist in the wild.

iPad users are probably the most vulnerable to this type of issue, as the OS won’t even boot unless the cloud instance is enabled by the owner. It does cut down the theft problem by bricking stolen hardware, but it also makes the device nearly impossible to use without Apple’s authorization. So thieves will usually try to return bricked hardware to stores and appropriate the account of an unused unit. Eventually, this hardware will cease to function too when the servers go offline, and most people incorrectly assume only Apple can sign software for the device.

People also mistakenly assume that a RAT needs persistent flash storage to be effective. There is proof-of-concept code showing that GPUs can run a RAT completely independently of the main processor, and can randomly re-infect from a local attack vector like a compromised router.

RAT persistence reminds me of a somewhat recent retailer hack. It was something big like Walmart or K-Mart. All the registers/point-of-sale systems would reset on every boot like Deep Freeze, but the attackers used a remote exploit and simply re-infected the machines on every boot.

I would settle for a secure OS. I guess I’m a dreamer.

It is quite easy to have a fairly secure OS. Disconnect all Ethernet cables, and pull out all wireless cards. Security through disconnectivity :)

That didn’t work out too well for the Iranians and their uranium separation centrifuges. Stuxnet bridged that gap with a 0day on a USB stick.

At least you said “fairly secure” and not “completely secure.”

Trust intel? Really?

Why don’t you also ‘secure’ your data on Dropbox? :)

By the way, you use a small piece of code to secure an unlimited amount of code, like 240KB of signature verification in hardware isolation. You don’t try to make all code secure.
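The idea of a small verifier guarding an arbitrarily large payload can be sketched as follows. Real verified-boot designs check a public-key signature, so the verifier holds no secret; this sketch swaps in an HMAC only because Python’s standard library has no signature scheme. The key, the function names, and the payload are hypothetical.

```python
import hashlib
import hmac
from typing import Callable

# Hypothetical device key held inside the isolated verifier. A real design would hold a
# vendor public key instead and verify signatures; HMAC is a stdlib stand-in here.
DEVICE_KEY = b"hypothetical-device-key"


def tag_image(image: bytes) -> bytes:
    """Done once, offline, when the image is built: compute its authentication tag."""
    return hmac.new(DEVICE_KEY, image, hashlib.sha256).digest()


def verify_and_run(image: bytes, tag: bytes, run: Callable[[bytes], None]) -> bool:
    """The whole trusted core: authenticate the image, and only then hand it control."""
    if not hmac.compare_digest(tag_image(image), tag):
        return False  # refuse to boot; nothing from the unverified image executes
    run(image)
    return True


payload = b"arbitrarily large OS image ..."  # placeholder bytes
tag = tag_image(payload)
print(verify_and_run(payload, tag, lambda img: print("booting", len(img), "bytes")))
print(verify_and_run(payload + b"patch", tag, lambda img: print("never printed")))  # False
```

The trusted part is `verify_and_run`, a few lines whose size does not grow with the image it guards, which is what makes it practical to audit.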

The tech for that already exists and is used in embedded systems, just typically poorly, except in iOS and Microsoft hardware, which are undefeated in that regard, just poorly designed in the rest.

The tech also exists to kill memory corruption, but it’s not profitable to sell chips that can do high-bandwidth memory hashing. This would kill every type of ROP, stack, format-string, heap, and integer vulnerability, and nothing malicious, not even SQL injection way up the OSI stack, could even begin.

Does anyone know anything about AMD chips in this regard?

I have not watched her talk yet. But what if, instead of “hoping for a truly secure laptop” (article) and “hoping you have covered every case it will ever encounter” (@numpad0’s comment), we just admitted that there is not even a battle to fight, as no victory is possible? I do not mean giving up research, tools, or practices of computer security. I mean that we could instead embrace that idea and change how we use, plan to use, or dream of using computers. Because to me, hoping is really not the approach I like when I think about science or even just technology. And yes, that is mostly an open question; I will not give you THE answer of why 42 just yet.

I must admit I’m both fascinated and annoyed. Fascinated by Joanna’s work and frustrated by the absolutist and abstract stance on trust being projected onto hackers.

A political problem has never been solved by a technological solution. A solution must be adopted to the point of being mainstream, of being cultural, before it influences not policy but political direction, terminology, and the practical-theoretical framework.

Fascism isn’t crushed by any turnkey technology. Any working, completely trustworthy computing scheme can be made illegal, immoral, or subject to peer-to-peer snooping by the mainstream culture (the DDR, East Germany, is a good example). Liberalism is the unceasing work against fascism, against tyranny, through grassroots culture.

We don’t need to trust our computers. We need to create an environment in which the entire concept of trusted computing is obsolete. And we won’t get there with technology alone. We have already seen how encryption, bitcoin, and wikileaks are systematically associated with criminality, terrorism, and fascism (which is weird; a technology is politically, legally and morally neutral – it’s a tool). Unless this challenge is met by a change in culture, any technological advances in trusted computing are moot, ad hoc, peripheral, and headed for extinction.