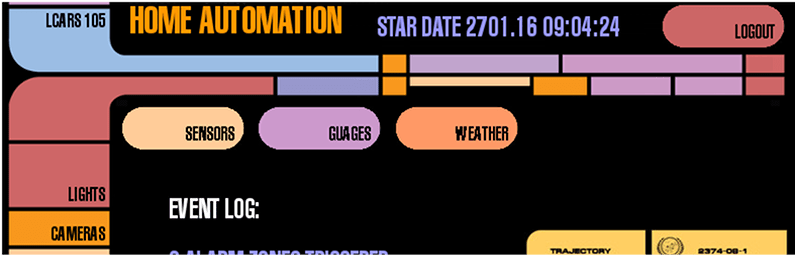

Every time we yell out, “OK Google… navigate to Velvet Melvin’s,” we feel like a Star Trek character. After all, you’ve never seen Captain Kirk (or Picard) using a keyboard. If you get that same feeling, and you have a Raspberry Pi project in mind, you might enjoy the Raspberry Pi LCARS interface.

You can see the results in the video below. The interface uses PyGame, and you can customize it with different skins if you don’t want a Star Trek look.

Obviously, you’ll need a touchscreen to make the system work effectively. The library has a few configuration variables that allow you to move widgets around by touch or show a cursor for debugging.

If you crave more Star Trek projects, we had quite a few entries in our Sci-Fi contest. If none of those are hardcore enough for you, you might enjoy the Enterprise as a submarine (which predates the opening scene of Star Trek: Into Darkness by quite a bit).

It’s cold out, reroute all power to climate control!

Did anyone else notice “Gauges” written as “Guages” in the video?

Ha. Oops.

Caught it on the post pic ;p

Awesome!

I can see this working oh so well with one of those mirror setups, just with a touchscreen overlay so you can still interact with it.

I was thinking of an LCARS interface for a Pi-based carputer but was going to change the name to PiCARs or maybe PiCAR(D)s

Something isn’t right with the fonts, and the curves on the buttons are pixelated (but seem to be okay on other curved items). They are small things, but can be quite irritating.

Well spotted – issue is lack of anti-aliasing. For the buttons, the simple colour-substitution algorithm causes the pixellation.
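To illustrate the point about colour substitution, here is a minimal sketch in plain Python. It is not the project's code: the function names, the list-of-tuples image format, and the assumption of a single button colour on a known background are all hypothetical. An exact-match swap leaves the blended edge pixels in their old tint, which shows up as a jagged fringe, while estimating each pixel's coverage and re-blending keeps the soft edge.

```python
def substitute_exact(pixels, old, new):
    """Replace only the pixels that exactly equal `old` (hypothetical helper)."""
    return [[new if p == old else p for p in row] for row in pixels]


def substitute_blended(pixels, old, new, background=(0, 0, 0)):
    """Re-tint an image assumed to contain only `old` blended over `background`.

    Each pixel's coverage (0.0 to 1.0) is estimated from the channel where
    `old` and `background` differ most, then `new` is blended in with the
    same coverage, so anti-aliased edges stay smooth.
    """
    if old == background:
        # Nothing to estimate coverage from; return an unchanged copy.
        return [list(row) for row in pixels]

    # Pick the channel with the largest old/background difference.
    c = max(range(3), key=lambda i: abs(old[i] - background[i]))
    span = old[c] - background[c]

    result = []
    for row in pixels:
        new_row = []
        for p in row:
            alpha = (p[c] - background[c]) / span
            alpha = min(1.0, max(0.0, alpha))  # clamp to a valid coverage
            new_row.append(tuple(
                round(background[i] + alpha * (new[i] - background[i]))
                for i in range(3)
            ))
        result.append(new_row)
    return result


# One row crossing a button edge: background, half-covered edge, solid colour.
ORANGE = (255, 153, 0)
LILAC = (153, 153, 255)
row = [[(0, 0, 0), (128, 77, 0), ORANGE]]

exact = substitute_exact(row, ORANGE, LILAC)
blended = substitute_blended(row, ORANGE, LILAC)
```

In `exact`, the middle pixel is still dark orange next to a lilac button, which is the fringe described above. In `blended`, it becomes a half-strength lilac instead.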

Takedown issued in 3…2….1….

https://en.wikipedia.org/wiki/LCARS#Legal

Good point, downloaded!

Credits and Notes

LCARS graphical user interface is copyright CBS Studios Inc. and is subject to the fair use statute

LCARS images and audio assets from lcarscom.net

Sample weather image from the SABC

The Android Tricorder app specified was taken down, despite not using any assets.

Unfortunately “fair use” doesn’t enter into these things either

(and in case you think the name did it – no, they specifically cited the interface)

Yeah, I miss that tricorder app.

Ok. This is awesome! But… (yeah, the buts) this is a potential “copyright violation” because of the use of LCARS. It’s stupid that we have a system like this, where creative derivative works are forbidden. So, it’s now mirrored on the InterPlanetary File System (IPFS):

https://ipfs.io/ipfs/QmPuF4iP6Yp7YomXpLhNosuxV7hS9tLxttB6x1GLR3h52B/rpi_lcars.git

This works whether you are running IPFS locally or not. If you are, it redirects to your local client and swarms from there. Else, your computer will use the ipfs.io gateway. The coolest part is that you can do this from your command line:

git clone https://ipfs.io/ipfs/QmPuF4iP6Yp7YomXpLhNosuxV7hS9tLxttB6x1GLR3h52B/rpi_lcars.git
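For anyone scripting against these links, the URL pattern can be sketched in a few lines of Python. This is only an illustration: the `gateway_url` helper is hypothetical, and the local address assumes an IPFS daemon with its gateway on the default `127.0.0.1:8080`, which may differ on your setup.

```python
PUBLIC_GATEWAY = "https://ipfs.io"
# Assumption: a local IPFS daemon exposing its gateway on the default port.
LOCAL_GATEWAY = "http://127.0.0.1:8080"


def gateway_url(cid, path="", use_local=False):
    """Build a path-style gateway URL of the form <gateway>/ipfs/<cid>/<path>."""
    base = LOCAL_GATEWAY if use_local else PUBLIC_GATEWAY
    url = f"{base}/ipfs/{cid}"
    if path:
        url += "/" + path.lstrip("/")
    return url


REPO_CID = "QmPuF4iP6Yp7YomXpLhNosuxV7hS9tLxttB6x1GLR3h52B"
print(gateway_url(REPO_CID, "rpi_lcars.git"))
print(gateway_url(REPO_CID, "rpi_lcars.git", use_local=True))
```

The first URL is the one used in the clone command above; the second points the same content hash at a local node instead of the public gateway.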

Have at!

Thank You. For some reason my mind black-holed any knowledge about the InterPlanetary File System. It’s very interesting and a perfect fit in this case.

I thought it was very fitting to play a video via IPFS. I was provided a link to the newest Star Trek movie: Star Trek Horizon.

It was released 5 days ago on YouTube. It’s a fan-made film on a budget of $25k. You’ll need VLC (but really, you should be using VLC anyway :) to play this as a network stream. And damn, is it awesome! And how fitting that a fan-made Star Trek film is transmitted via an interplanetary file system :-D

https://ipfs.io/ipfs/QmYoGSNh2vnSkuq41NmcnnEhN553tzKKRihBx3Dygzbdrd

It cut off my text…

What sold me on the “Alpha” project of IPFS was that it could seamlessly handle large objects, and played on multiple endpoints at any time… and just work! I’ve never seen an alpha anything that wasn’t crashy and buggy. And yet, after I watched this video locally on one machine, I tried to watch it on another; lo and behold, the DHT kicked in, and the first computer gave the data to the one requesting it. The result was that the second copy cost 0 bandwidth!

I also have IPFS mounted as a filesystem object, and can interact with it easily as unix objects. It just works, and works well. What is missing is any sense of ‘users’ on a computer, but that is being argued right now. The implementors don’t want to screw this up.

My next project is to port the jor1k Linux emulator into an IPFS share. That allows computation in the browser, at a good ol’ Linux prompt. It even includes networking. Now, make that IPFS-aware, and you have something very interesting indeed. Add blockchains for shared data and Zookeepers for assigning data to ‘jobs’ (read: instances of jor1k running) and you have a hyper-distributed computer running in people’s browsers.

Hmm, I’m a little disappointed that it is done with static images. I’ll spend a little time on a programmatic LCARS interface. What I would like is to have a computer voice that sounds somewhat like Majel’s.

The reason for the static/gif images is so that you can replace it with, for example, a JARVIS interface, or that interface from The Arrow or Jurassic Park, etc. LCARS was just meant to be a simple example.

Programmatic generation of the interface doesn’t preclude other interfaces.

It is always assumed that the LCARS interface is meant to be a system that arranges and re-arranges itself automatically depending on the needs of the user. This is often omitted in builds because nobody knows how it should actually work or look.

I’ve been experimenting with okudagrams for some time, and getting them to do something that is both cool and logical is pretty hard: https://hackaday.io/project/7128-experimental-okudagram-interfaces

The best example I came up with is the date-time sequence: https://www.youtube.com/watch?v=wgSnokdyVJI

The main screen shows a bit of everything (ideally on the basis of relevance at that time). The date-time section “morphs” into a more “advanced” interface whenever a function is called that depends on this feature.

Doesn’t Data have WiFi? Why does it ever have to look at a screen?

That is cool. Nice job! :)

This is sooooo awesome! Love the work you’ve put into it.

This looks awesome.

Would it be possible to run this on Android instead of an RPi? I am working on my own home automation setup, and was planning to use a couple old/cheap Android tablets as interface consoles.