Dr. Claude E. Shannon was born 100 years ago tomorrow. He contributed greatly to the fields of engineering, communications, and computer science, but he is not a well-known figure, even to those in the field. However, his work touches us all many times each day. The network that delivered this article to your computer or smartphone was built on important theories developed by Dr. Shannon.

Shannon was born and raised in Michigan. He graduated from the University of Michigan with degrees in Mathematics and Electrical Engineering. He continued his graduate studies at the Massachusetts Institute of Technology (MIT), where he obtained his MS and PhD. He worked for Bell Laboratories on fire-control systems and cryptography during World War II, and in 1956 he returned to MIT as a professor.

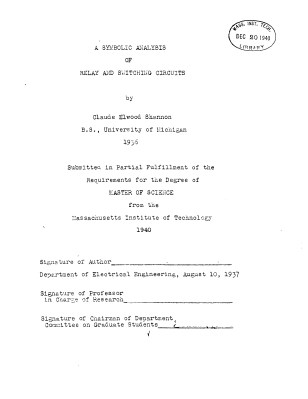

Shannon’s first impactful contribution was his master’s thesis, which took the Boolean algebra of George Boole and applied it to switching circuits (then made up of relays). Before his work there was no formal basis for the analysis of switching systems such as telephone networks or elevator control systems. Shannon’s thesis introduced symbolic notation to represent these networks and applied simplifying rules to optimize them. The same rules later carried over to vacuum-tube and transistor logic, aiding the development of today’s computer systems. The thesis, *A Symbolic Analysis of Relay and Switching Circuits*, was completed in 1937 and published in 1938 in the Transactions of the American Institute of Electrical Engineers.
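
To make the idea concrete, here is a minimal Python sketch using the modern convention that a closed contact is `True`, contacts in series act as AND, and contacts in parallel act as OR. (Shannon’s own paper used a “hindrance” notation in which those roles are swapped.) The helper names `series`, `parallel`, and `equivalent` are hypothetical, and the two-variable circuit is an invented example: an algebraic identity lets a four-contact network be replaced by a single contact, and a truth table confirms the two behave identically.

```python
from itertools import product

# Hypothetical helpers: relay contacts modeled as Booleans (True = closed).

def series(*contacts: bool) -> bool:
    """Current flows through a series chain only if every contact is closed."""
    return all(contacts)

def parallel(*contacts: bool) -> bool:
    """Current flows through parallel branches if any branch is closed."""
    return any(contacts)

def original_circuit(x: bool, y: bool) -> bool:
    # Two parallel branches: (x in series with y) OR (x in series with NOT y).
    return parallel(series(x, y), series(x, not y))

def simplified_circuit(x: bool, y: bool) -> bool:
    # Boolean algebra: x*y + x*y' = x*(y + y') = x, so one contact suffices.
    return x

def equivalent(f, g, n_inputs: int) -> bool:
    """Check that two circuits agree on every input combination (truth table)."""
    return all(f(*bits) == g(*bits) for bits in product([False, True], repeat=n_inputs))

print(equivalent(original_circuit, simplified_circuit, 2))  # True
```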

Shannon’s doctoral work continued in the same vein of applying mathematics somewhere new, this time to genetics. Vannevar Bush, his mentor, commented, “It occurred to me that, just as a special algebra had worked well in his hands on the theory of relays, another special algebra might conceivably handle some of the aspects of Mendelian heredity”. Shannon’s work was again revolutionary, providing a mathematical basis for population genetics. Unfortunately, it was a step further than the geneticists of the time could take, and his work languished, although interest in it grew over time.

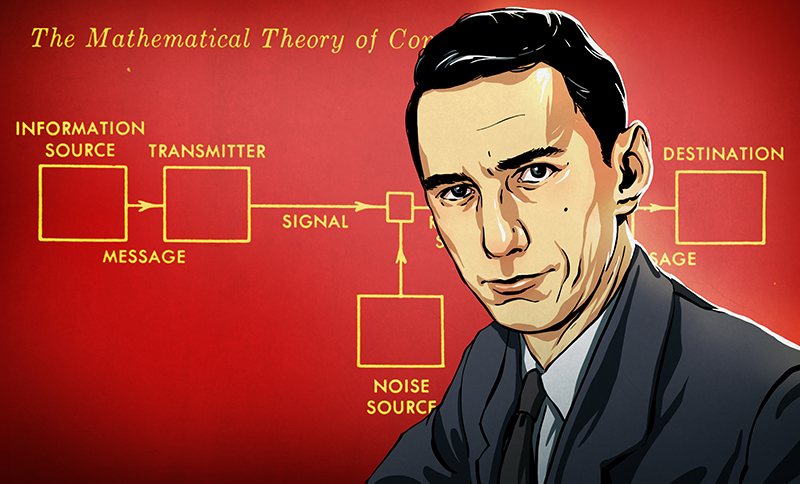

Shannon’s best-known work is the 1948 article *A Mathematical Theory of Communication*, published while he was working at Bell Laboratories. We’ve written about this work previously here on Hackaday, since it is so fundamental to many of our activities. The first aspect of the work determines the theoretical limit on how much information, how many bits per second, can be transferred over a communications channel. The second is that error-correcting codes can be used to approach that limit. Telephone circuits, radio communications, disk read-head data transfers, and the Internet are all affected by Shannon’s work.
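
For the simplest case of a band-limited channel with Gaussian noise, the limit from the 1948 paper is usually quoted as the Shannon-Hartley formula C = B log2(1 + S/N). The sketch below just evaluates it; the 3 kHz bandwidth and 30 dB signal-to-noise ratio are illustrative assumptions, roughly in the range of a voice-grade telephone line, not figures from this article.

```python
import math

def shannon_capacity(bandwidth_hz: float, snr_db: float) -> float:
    """Shannon-Hartley limit C = B * log2(1 + S/N), in bits per second."""
    snr_linear = 10 ** (snr_db / 10)  # convert dB to a power ratio
    return bandwidth_hz * math.log2(1 + snr_linear)

# Illustrative numbers only: a ~3 kHz voice channel with a 30 dB SNR.
c = shannon_capacity(3000, 30)
print(f"{c:.0f} bit/s")  # roughly 29,900 bit/s
```

The formula only says how much room the channel has; it does not say how to build codes that get close to it, which is where the error-correction work comes in.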

The final contribution I’ll mention is the Nyquist-Shannon Sampling Theorem. This is important because it specifies how to sample an analog signal so that it can be reproduced accurately without creating aliases. If you are using an Arduino to sample a 1,000 Hz signal the samples must be at least twice the signal rate, in this case 2,000 Hz. An interesting implication of the theorem applies when the signal of interest does not extend down to zero frequency. For example, a 2 MHz-wide slice of the FM broadcast band, 100-102 MHz, can be sampled at 4 MHz, twice the width of the band rather than twice its highest frequency, to extract that signal for decoding.
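
A short Python sketch shows where a sampled tone ends up. The hypothetical helper `apparent_frequency` assumes an ideal sampler with no anti-aliasing filter and folds any input frequency into the 0 to fs/2 range, which is exactly the ambiguity the theorem warns about. The same folding explains the FM example: every frequency in the 100-102 MHz band lands, in order, somewhere in 0-2 MHz.

```python
def apparent_frequency(f_hz: float, fs_hz: float) -> float:
    """Frequency at which a real tone at f_hz appears after sampling at fs_hz.

    Hypothetical helper, assuming an ideal sampler: sampling cannot tell f
    apart from f + k*fs or k*fs - f, so the tone folds into 0 .. fs/2.
    """
    r = f_hz % fs_hz                              # drop whole multiples of fs
    return fs_hz - r if r > fs_hz / 2 else r      # fold the upper half back down

# A 1,000 Hz tone sampled at 3,000 Hz stays at 1,000 Hz...
print(apparent_frequency(1_000, 3_000))   # 1000
# ...but sampled at 1,500 Hz (less than twice) it masquerades as 500 Hz.
print(apparent_frequency(1_000, 1_500))   # 500

# Bandpass example: 100-102 MHz sampled at 4 MHz maps onto 0-2 MHz, in order.
for f_mhz in (100.0, 100.5, 101.0, 101.5, 102.0):
    print(f_mhz, "->", apparent_frequency(f_mhz * 1e6, 4e6) / 1e6)
```

The band edges land exactly on 0 and fs/2 here, so a real receiver would want some margin and a sharp band-pass filter ahead of the sampler.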

The Hacker

In addition to his more academic achievements, Shannon tinkered, or hacked, in other areas. There is some confusion over whether he or Marvin Minsky, the artificial intelligence guru, created what we now call the “Useless Machine”, which Shannon named “The Ultimate Machine”. It’s clear that Shannon built a nicely polished version of the machine that turns itself off.

Shannon also built a maze-solving mouse. Again, the build is as nicely done as the concept. Like today’s micromouse competitors, Shannon’s mouse would work through the maze, learning its layout. It could then be placed anywhere in the maze and find its way to the end. The mouse was driven from beneath the ‘floor’ of the maze by a moving magnet. Fittingly, given his MS thesis, the computing mechanism is a large bank of relays. The mouse, Theseus, received attention from the press in its day.

Chess and juggling were favorite activities of Shannon’s, so he turned his genius to creating machine versions of them. He created a W. C. Fields robot that could juggle and a relay-based chess player. His analysis of automated chess playing was one of the first to address the problem.

Shannon battled Alzheimer’s disease late in life and passed away in 2001. Unfortunately, he did not live to see, or understand, all the results of his achievements, especially how the impact of his work was magnified by the birth of the information age.

The last half of James Gleick’s “The Information: A History, a Theory, a Flood” outlines just how much of our current understanding of just about everything is rooted in Shannon’s work. Ironic indeed that it was the failure of his own information processor that did him in.

It is incredible to think of how foundational some of his ideas are.

Dementia is the worst. Your body can continue to function well for years as the RAM in your head becomes more and more unreliable. I just heard an NPR interview that quoted a figure of 28 million out of 80 million baby boomers being affected.

It’s heartbreaking. I imagine Alzheimer’s might be even more horrific to frequenters of this site, as we cherish the abilities of the brain.

Watch the video that pops up after the one above finishes. It’s a great summation of Shannon’s life. Watching it, I realized it was no wonder that Shannon was one of the people Turing looked up when he came to the United States.

https://www.youtube.com/watch?v=z2Whj_nL-x8

“If you are using an Arduino to sample a 1,000 Hz signal the samples must be at least twice the signal rate”: that should be “greater than twice”. Sampling at exactly twice produces a constant whose value only represents the phase difference between source and sampler. It’s a very common error in textbooks and all over the Interwebs. Try it on paper with a drawing, or calculate sin(2πft) every time it passes through zero, which happens at twice its frequency.
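
The commenter’s suggestion is easy to try numerically. This sketch, with values assumed for illustration, samples a unit-amplitude sine at exactly twice its frequency, once with the sampler aligned to the zero crossings and once with a 30 degree phase offset.

```python
import math

f = 1_000.0   # signal frequency in Hz (illustrative)
fs = 2 * f    # sample at exactly twice the signal frequency

def sample(phase_rad: float, n_samples: int = 8) -> list[float]:
    """Samples of sin(2*pi*f*t + phase) taken at t = n / fs."""
    return [math.sin(2 * math.pi * f * n / fs + phase_rad) for n in range(n_samples)]

# Sampler aligned with the zero crossings: every sample is (numerically) zero,
# even though the tone has an amplitude of 1.
print(max(abs(x) for x in sample(0.0)) < 1e-9)   # True

# Shift the phase by 30 degrees: the samples alternate +/- sin(30 deg) = 0.5,
# so the apparent amplitude depends on phase, not on the true amplitude.
print([round(x, 3) for x in sample(math.pi / 6)])  # [0.5, -0.5, 0.5, -0.5, ...]
```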

Another error in thinking is that you can perfectly sample any frequency below half your sampling rate.

Suppose you have a signal arbitrarily close to, but below, half your sampling rate. That can be restated as sampling a signal at exactly half your sampling rate that is being continuously phase-shifted by a slight amount. Your samples of the signal get amplitude-modulated by that small difference.

The lower you go in frequency, the smaller the error. It takes until about a quarter of your sampling frequency before it’s virtually all gone, so if you want to resolve a 1000 Hz signal and preserve its amplitude, a 2001 Hz sampling frequency won’t do: your samples would be amplitude-modulated at about 0.5 Hz.

Actually, Shannon/Nyquist is right. The amplitude modulation you mention is the sum of two frequencies that differ by the frequency of the modulation. One sits half that difference below half the sampling rate; the other sits half that difference above it. If you have an antialiasing filter that eliminates frequencies above half the sampling rate, the upper frequency goes away and so does the amplitude modulation. The signal gets through **exactly** intact.

The trick, of course, is to have a low pass filter that will pass the lower frequency and not the upper one. So in real life circuits that’s where the limit lies, not in the sampling theory.
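
The effect discussed in this exchange shows up directly in the raw samples. This sketch uses the 1,000 Hz and 2,001 Hz figures from the comments above and simply measures the peak sample magnitude in short windows; no reconstruction filter is applied, which is the step the reply says removes the modulation. The 20 ms window and the helper name `peak` are assumptions for illustration.

```python
import math

# Figures from the comment above: a 1,000 Hz tone sampled at 2,001 Hz.
f = 1_000.0
fs = 2_001.0

# Two seconds of raw samples, with no reconstruction filter applied.
samples = [math.sin(2 * math.pi * f * n / fs) for n in range(int(2 * fs))]

def peak(start_s: float, width_s: float = 0.02) -> float:
    """Largest |sample| in a short window beginning at start_s seconds."""
    lo, hi = int(start_s * fs), int((start_s + width_s) * fs)
    return max(abs(x) for x in samples[lo:hi])

# The sample magnitudes swell and shrink: small near 0 s and 1 s,
# close to full scale near 0.5 s.
for t in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"t = {t:.2f} s   peak |x[n]| = {peak(t):.3f}")
```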

Sorry Dax but this is wrong. Nyquist-Shannon is exactly correct. Discretising the signal in time copies the frequency spectrum to multiples of the sampling frequency. As long as your original signal is band-limited to less than half that frequency, the copies (aliases) will not overlap, and low-passing them out will reconstruct the original signal (can do this in time domain by convolving with a sinc).

The only fly in the ointment is that a perfect low pass filter is hard to construct electrically, so common practice leaves 10% spare bandwidth for the reconstruction filter to ramp to 0. However, the information still exists, and a perfect reconstruction is possible at 100% of the limit.
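
The time-domain reconstruction mentioned above, convolving the samples with a sinc, is the Whittaker-Shannon interpolation formula. Here is a minimal sketch; the tone, sample rate, and record length are assumed values, the helper `reconstruct` is hypothetical, and because a real record is finite the sum is truncated, so the result is close to, not exactly, the original signal.

```python
import math

def sinc(x: float) -> float:
    """Normalized sinc: sin(pi x) / (pi x), with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)

def reconstruct(samples: list[float], fs: float, t: float) -> float:
    """Whittaker-Shannon interpolation: x(t) = sum_n x[n] * sinc(fs*t - n).

    The ideal formula sums over infinitely many samples; this sketch
    truncates to the samples it is given, so it is only approximate.
    """
    return sum(x * sinc(fs * t - n) for n, x in enumerate(samples))

f, fs = 1_000.0, 8_000.0   # assumed: 1 kHz tone, well below fs/2 = 4 kHz
N = 4_001                  # about half a second of samples
samples = [math.sin(2 * math.pi * f * n / fs) for n in range(N)]

# Evaluate halfway between two samples in the middle of the record,
# where truncation of the sum matters least.
t = (N // 2 + 0.5) / fs
estimate = reconstruct(samples, fs, t)
exact = math.sin(2 * math.pi * f * t)
print(f"reconstructed {estimate:.4f}, true {exact:.4f}")
```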

Years ago I worked at IBM on a digital signal processor chip that was used in IBM computers and ThinkPads. It could do many things, including acting as a dial-up modem at 48K baud. We were working on a 52K version with Gerhard Ulbricht, the inventor of trellis coding. When we finally got it to work under good line conditions, we had a meeting about the future of modems. As I recall, Ulbricht said, in his perfect Jarfalla Laboratory accent:

“Dis is the Lasstt Modem on the Fone Line! Shannon ist already turning offeer in his Graff!”

Regards, Terry King

…In The Woods in Vermont, USA

terry@yourduino.com

If you’ve never read it, The Idea Factory covers early Bell Labs and spends quite a bit of time on Claude Shannon. Really good book.

Fantastic book, I strongly recommend it!

That the equations derived by Shannon (with Norbert Wiener and others) to calculate the entropy of a signal could be used in population biology to quantify the diversity of species in a community blew my mind as a student, and forever changed how I approached many phenomena. Before Gleick, science writer Jeremy Campbell’s “Grammatical Man” attempted, with mixed success, to weave Shannon’s contributions to information theory together with linguistics and genetics. In it he wrote how Shannon was wary of extrapolating his research too far beyond its narrow focus into grand unifying theories.
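
The diversity measure referred to here is usually called the Shannon (or Shannon-Wiener) index, H = -Σ p_i ln p_i, where p_i is the fraction of individuals belonging to species i. A minimal sketch with made-up survey counts: an even community scores higher than one dominated by a single species. Ecologists commonly use the natural log; Shannon’s communication paper used base 2, which gives the answer in bits.

```python
import math

def shannon_diversity(counts: list[int]) -> float:
    """Shannon (Shannon-Wiener) index H = -sum(p_i * ln p_i) over species.

    counts: number of individuals observed for each species (zeros ignored).
    """
    total = sum(counts)
    return -sum((c / total) * math.log(c / total) for c in counts if c > 0)

# Hypothetical survey counts, for illustration only.
even = [25, 25, 25, 25]   # four equally common species
skewed = [97, 1, 1, 1]    # the same four species, one dominating
print(f"even:   H = {shannon_diversity(even):.3f}")    # 1.386, i.e. ln 4
print(f"skewed: H = {shannon_diversity(skewed):.3f}")
```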

This I was unaware of; thanks for bringing it up. Because of my involvement in ham radio I knew an engineer who worked for Bell Labs and another at AT&T. Now I wish I had known to pick their brains for some of the things they probably had to teach. Unfortunately, we don’t live forever.

If you like the work of Wiener-Shannon-Nyquist et al., check out the work of David Donoho and Emmanuel Candes at Stanford. To the naive it looks as if sparsity (aka wavelet transforms) voids Shannon, but that’s not the case. You can sample at far less than the Nyquist rate if and only if the *information* is band-limited. This is the notion behind compressive sensing. The work of Donoho and his students, especially Candes, is as important as the work of Wiener-Shannon-Nyquist; especially read the papers from 2004. For someone who spent many years doing DSP in the oil industry and worshiping at the shrine of Wiener-Shannon-Nyquist, Donoho’s work is mind-blowing.