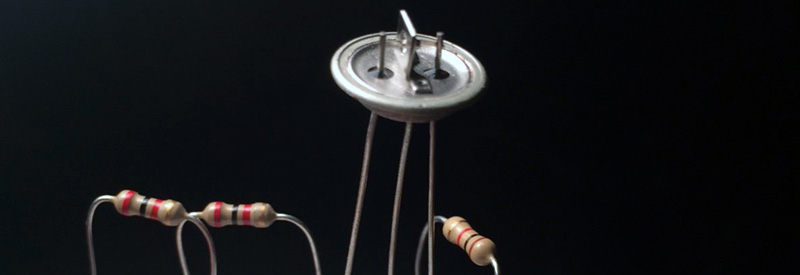

The first digital cameras didn’t come out of a Kodak laboratory or from deep inside the R&D department of the CIA or National Reconnaissance Office. The digital camera first appeared in the pages of Popular Electronics in 1975, using a decapsulated DRAM module to create fuzzy grayscale images on an oscilloscope. For his Hackaday Prize project, [Alexander] is recreating this digital camera not with an easy-to-use decapsulated DRAM, but with individual germanium transistors.

Phototransistors are just ordinary transistors with a window onto the semiconductor. After finding an obscene number of old Soviet metal-can transistors, [Alex] had either a phototransistor or a terrible solar cell in a miniaturized package.

The ultimate goal of this project is a low-resolution camera built from a matrix of these germanium transistors. [Alex] can already detect light with them by watching a multimeter. Reaching the end goal, an analog NTSC or PAL video signal, will “just” require duplicating a single circuit hundreds of times.
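To make the “one circuit, hundreds of times” idea a little more concrete, here is a minimal Python sketch of the last step. It reads a small matrix of pixel brightnesses out row by row and scales each value to a composite-video level. It assumes brightness has already been normalized to the range 0 to 1. It uses nominal 1 V peak-to-peak PAL-style levels: black at about 0.3 V and peak white at 1.0 V above the sync tip. The function names are hypothetical, not taken from [Alex]’s project, and sync pulses are left out entirely.

```python
from __future__ import annotations

# Nominal composite-video levels in volts above the sync tip (0 V) for a
# 1 V peak-to-peak signal. Assumed PAL-style values; NTSC uses a slightly
# different black "setup" level.
BLACK_V = 0.3
WHITE_V = 1.0


def brightness_to_volts(b: float) -> float:
    """Clamp a normalized brightness to [0, 1] and scale it from black to white."""
    b = min(max(b, 0.0), 1.0)
    return BLACK_V + b * (WHITE_V - BLACK_V)


def raster_scan(matrix: list[list[float]]) -> list[list[float]]:
    """Read the sensor matrix top row first, left to right within each row,
    returning one list of pixel voltages per video line."""
    return [[brightness_to_volts(b) for b in row] for row in matrix]


if __name__ == "__main__":
    sensor = [[0.0, 0.5, 1.0],
              [1.2, -0.1, 0.25]]  # out-of-range readings get clamped
    for line in raster_scan(sensor):
        print([round(v, 3) for v in line])
```

A real build would do this with analog multiplexers rather than software. The sketch only shows the ordering and the level mapping.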

Digital cameras, even the earliest ones built out of DRAM chips, have relatively small sensors. A discrete image sensor, like the one [Alex] is building for his Hackaday Prize entry, poses some very interesting engineering challenges. Obviously the sensor will need some sort of lens, so if anyone has a large Fresnel lens lying around, you might want to drop [Alex] a line.

I decapped some ceramic DRAMs, added a crude cover and played with refreshing them not quite often enough. From memory this was 1977/78, and, again from memory, I wrote a zero and light made it bleed off to a one. It didn’t really work very well: crude shapes, yes; images, no.

Did you use a lens? You might’ve been able to get greyscale by measuring how long it took each pixel to charge, or perhaps by using a sensitive analogue amp to read the bits.

I remember doing the same. One issue is that the RAM cells were not laid out evenly. Also, sensitivity varied quite a bit, and the readout was ‘1 or 0’, so no grey scale.

This was covered in a Hackaday article a few years ago: http://hackaday.com/2014/04/05/taking-pictures-with-a-dram-chip/

What’s different about the featured webpage is that you write ones into the array, and they decay into zeroes more or less quickly depending on how much light falls on each pixel. It also shows that focusing the light with a lens is necessary.

http://www.kurzschluss.com/kuckuck/kuckuck.html (in German)
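The write-ones-and-wait trick also answers the “no grey scale” complaint above. Each read is still just a 1 or a 0, but the time each cell takes to flip carries the brightness. Here is a minimal Python sketch of that idea. It assumes a hypothetical `read_array(poll)` callback that returns every cell’s bit after a given number of polling intervals. The simulated chip uses a deliberately simple leak model: charge drains linearly at a rate set by dark current plus light. Real cells will not behave this neatly.

```python
from __future__ import annotations

from typing import Callable, Optional


def flip_times(read_array: Callable[[int], list[int]], n_cells: int,
               max_polls: int) -> list[int]:
    """Poll the array (already filled with ones) and record the first poll
    at which each cell reads 0. Cells that never flip get max_polls + 1."""
    times: list[Optional[int]] = [None] * n_cells
    for poll in range(1, max_polls + 1):
        for i, bit in enumerate(read_array(poll)):
            if bit == 0 and times[i] is None:
                times[i] = poll
        if all(t is not None for t in times):
            break  # every cell has flipped; no need to keep waiting
    return [t if t is not None else max_polls + 1 for t in times]


def times_to_gray(times: list[int]) -> list[int]:
    """Treat leak rate as proportional to 1/t and scale 1/t linearly to
    0..255 (fastest flip = brightest = 255). A dark-current offset shared by
    all cells cancels out in the min/max scaling."""
    inv = [1.0 / t for t in times]
    lo, hi = min(inv), max(inv)
    if hi == lo:
        return [0] * len(inv)
    return [round(255 * (v - lo) / (hi - lo)) for v in inv]


def simulated_array(leak_rates: list[float], threshold: float = 0.5):
    """Toy stand-in for a decapped DRAM: a cell reads 1 while its remaining
    charge (1 - rate * poll) stays above the sense threshold."""
    def read_array(poll: int) -> list[int]:
        return [1 if 1.0 - r * poll > threshold else 0 for r in leak_rates]
    return read_array


if __name__ == "__main__":
    rates = [0.11, 0.052, 0.013]  # bright, medium, dark pixel (made-up values)
    t = flip_times(simulated_array(rates), len(rates), max_polls=100)
    print(t, times_to_gray(t))
```

The same approach works with the polarity reversed (write zeroes, watch for ones), as in the 1977/78 experiment described above.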

How large a lens? I have two from a DLP TV.

I make my own alpha detectors by uncapping transistors and exposing the base-emitter junction directly to the particles. This works for older power transistors (2N3055) as well as the small-signal 2N2222. With an appropriate amplifier you can detect individual particles, including heavier particles from cosmic rays (muons?).

I’ve found that the best way to decap the smaller 2N2222 transistors is to clamp them by their heads (wires hanging down) in a drill press and apply a file to the outside while they spin. Eventually the file breaks through and the uncapped transistor falls into your hand.

Alpha detection sounds interesting, how do you shield the die from light without blocking the particles?


You’ve got a good point there. I think the entire assembly would have to be enclosed around the source. Alpha particles can be stopped by almost anything.

http://nuclearconnect.org/know-nuclear/science/protecting

If a particle ‘hit’ produces a bigger pulse than the ambient light does, one could just record the spikes. But I really don’t know for sure.

It would make for one cool Geiger Counter, IMO.
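If the hits do stand well clear of the ambient-light signal, picking them out is straightforward. Here is a minimal Python sketch that assumes the amplifier output has already been digitized into a list of samples. The median/MAD threshold and the factor k = 6 are arbitrary choices for illustration, not something proposed in the thread.

```python
from __future__ import annotations

import statistics


def find_hits(samples: list[float], k: float = 6.0) -> list[int]:
    """Return the index where each spike starts.

    The ambient baseline is the median of all samples, and the noise spread
    is the median absolute deviation (MAD). A handful of large spikes barely
    moves either value. A run of consecutive samples above the limit counts
    as one hit, so a pulse spanning several samples is not double-counted.
    """
    baseline = statistics.median(samples)
    mad = statistics.median([abs(s - baseline) for s in samples])
    limit = baseline + k * mad
    hits = []
    above = False
    for i, s in enumerate(samples):
        if s > limit and not above:
            hits.append(i)  # rising edge: a new hit starts here
        above = s > limit
    return hits


if __name__ == "__main__":
    trace = [10, 11, 10, 9, 10, 50, 48, 10, 11, 10, 60, 10]  # made-up ADC counts
    print(find_hits(trace))
```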

He could try a zone plate.

https://en.wikipedia.org/wiki/Zone_plate

http://glsmyth.com/pinhole/zone-plate-patton.asp

I’ve only ever tried them with photographic film, shoebox cameras and an extension tube I modded.

The Wikipedia article links to “photon sieve”, which looks like a more sophisticated version. It’s the same sort of thing, only it uses lots of holes of different sizes, grouped by size into rings like the zones of a zone plate. It’s interesting what computer simulation of physics has brought into the real world.
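For anyone who wants to try the zone plate route, the ring sizes follow from one rule: each zone boundary sits half a wavelength farther from the focus than the one before it. That gives r_n = sqrt(n·λ·f + (n·λ/2)²). Here is a short Python sketch. The 900 nm wavelength and 100 mm focal length are hypothetical example values, not numbers from the project.

```python
from __future__ import annotations

import math


def zone_radii(wavelength_m: float, focal_m: float, n_zones: int) -> list[float]:
    """Radii of the first n_zones Fresnel zone boundaries, in metres.

    Boundary n sits where the path to the focus is n half-wavelengths longer
    than the on-axis path: sqrt(f**2 + r**2) = f + n * wavelength / 2.
    """
    return [math.sqrt(n * wavelength_m * focal_m + (n * wavelength_m / 2) ** 2)
            for n in range(1, n_zones + 1)]


if __name__ == "__main__":
    # Hypothetical design point: near-infrared light, 100 mm focal length.
    # Alternate zones are made opaque; either set can be the blocked one.
    for n, r in enumerate(zone_radii(900e-9, 0.100, 6), start=1):
        print(f"zone {n}: r = {r * 1e3:.3f} mm")
```

When the focal length is much larger than the wavelength, the second term is negligible. The radii then grow roughly as the square root of n, which is why the rings get thinner toward the edge.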

I use a large lens and a Beowulf cluster of Raspberry Pis; the ones that detect light reset themselves, and then I log the reboot times. As you can imagine, the frame rate sux.

+1

++1 Yeah :)

What is the spectral sensitivity of a Ge junction?

http://chemwiki.ucdavis.edu/Core/Analytical_Chemistry/Instrumental_Analysis/Spectrometer/Detectors/Detectors

http://chemwiki.ucdavis.edu/@api/deki/files/319/=Spectral_Response_curves.gif?revision=1

Germanium: 400–1700 nm, I think.

Look up ‘Spectral responsivity germanium’.

The one that says:

Improved Near-Infrared Spectral Responsivity Scale – National Institute of Standards and Technology

It’s a PDF. Page 8.

Good luck!

Last post for a while, going on a ‘vacation’!

There are also four or more big bare transistors under the plastic cover (not potted) of those STKxxxx audio power amp modules, and of the TV-related ones too. 2N3055s are silicon.

Depends on the STK. Some have silicone over the larger bare dies (I popped quite a few open back in the day as a TV repair tech).

Cool article Brian! I’ll be watching this.

Will this work with standard germanium diodes in glass? I have several dozen still on old boards. It would be ultra-low resolution, but still cool.

https://upload.wikimedia.org/wikipedia/commons/a/a6/Soda-lime_glass%2C_typical_transmission_spectrum_%282_mm_thickness%29.svg

http://chemwiki.ucdavis.edu/Core/Analytical_Chemistry/Instrumental_Analysis/Spectrometer/Detectors/Detectors (thanks Dan#9445376854)

The overlap between the two spectra looks good. Ge response is about 800 nm to 1800 nm, and soda-lime glass does not attenuate much from about 350 nm to 2750 nm. The common region would be the near-infrared and part of the short-wavelength infrared.

(750nm to 1400nm) – near-infrared

(1400nm to 3000nm) – short-wavelength infrared
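The overlap reasoning above is just an interval intersection. Here is a small Python sketch using the approximate ranges quoted in the comment. These are rough figures from the thread, not measured data.

```python
from typing import Dict, Optional, Tuple

Range = Tuple[float, float]  # (low, high) wavelength in nm


def overlap(a: Range, b: Range) -> Optional[Range]:
    """Intersection of two wavelength ranges, or None if they don't overlap."""
    lo, hi = max(a[0], b[0]), min(a[1], b[1])
    return (lo, hi) if lo < hi else None


GE_RESPONSE: Range = (800, 1800)  # approximate, as quoted in the comment
SODA_LIME: Range = (350, 2750)    # low-attenuation window of the glass
BANDS: Dict[str, Range] = {
    "near-infrared": (750, 1400),
    "short-wavelength infrared": (1400, 3000),
}

# Window where the junction responds AND the glass lets light through.
usable = overlap(GE_RESPONSE, SODA_LIME)
per_band = {name: overlap(usable, band) if usable else None
            for name, band in BANDS.items()}

if __name__ == "__main__":
    print("usable window:", usable)
    for name, window in per_band.items():
        print(f"{name}: {window}")
```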

In the olden days of using decapped DRAMs as “robot eyes”, it was fairly standard to bias the chip with an LED (red, at that time). The bias light not only makes the chip cross the logic threshold faster, for a much higher frame rate, but also makes the robot eyes glow red, just like in the movies. I think Carl Helmers wrote about that in the pre-Byte days…

A bias light might help here too, though the logic threshold of germanium should be lower than silicon so it should already be faster.
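A toy model shows why the bias light speeds things up. Suppose a cell flips once it has leaked a fixed amount of charge. Its flip time is then that charge divided by the total leak current (dark current plus bias light plus image light), and a frame is only finished when the darkest cell has flipped. The Python sketch below uses made-up, unitless numbers to illustrate the argument; it does not model any particular chip. In the same toy model, the bias also narrows the spread between the fastest and slowest cells, so some contrast is traded for speed.

```python
from __future__ import annotations


def flip_time(charge_margin: float, dark: float, bias: float, signal: float) -> float:
    """Time for one cell to leak past the read threshold (arbitrary units)."""
    return charge_margin / (dark + bias + signal)


def frame_time(charge_margin: float, dark: float, bias: float,
               signals: list[float]) -> float:
    """A frame is complete once the slowest (darkest) cell has flipped."""
    return max(flip_time(charge_margin, dark, bias, s) for s in signals)


if __name__ == "__main__":
    scene = [0.0, 0.5, 1.0]  # image light on three cells, made-up values
    for bias in (0.0, 0.2):
        t = frame_time(1.0, 0.01, bias, scene)
        print(f"bias={bias}: frame time {t:.2f}, frame rate {1 / t:.3f}")
```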

We had the Apple II kit that Steve Ciarcia designed for that Byte article.

It worked pretty well. Being able to take a photo and then print it out on an Epson MX-80 was a real novelty.

Once he has all the sensor circuits, he will still need to serialize the analog voltages and generate the video timing, including the messy vertical sync signal. I hope he at least considers using chips for those parts of the project.

Given that the project has only two logs at this stage, it doesn’t quite qualify for the “Anything Goes” challenge. Not sure whether the other themes apply.
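For a sense of how much time the serializer gets per pixel, here is a rough Python calculation using nominal broadcast figures. An NTSC line lasts about 63.6 µs, with roughly 52.6 µs of active picture and about 240 visible lines per field. A PAL line lasts 64 µs, with about 52 µs active and about 288 visible lines per field. The 16 × 16 sensor is a hypothetical size, and all figures are approximations rather than a timing spec.

```python
from __future__ import annotations

# Approximate nominal figures for interlaced broadcast video.
STANDARDS = {
    "NTSC": {"line_us": 63.556, "active_us": 52.6, "visible_lines_per_field": 240},
    "PAL": {"line_us": 64.0, "active_us": 52.0, "visible_lines_per_field": 288},
}


def pixel_dwell_us(standard: str, columns: int) -> float:
    """How long each sensor column is held on the video output, in µs."""
    return STANDARDS[standard]["active_us"] / columns


def lines_per_row(standard: str, rows: int) -> int:
    """How many consecutive scan lines repeat each sensor row in one field."""
    return STANDARDS[standard]["visible_lines_per_field"] // rows


if __name__ == "__main__":
    for std, spec in STANDARDS.items():
        blanking = spec["line_us"] - spec["active_us"]  # time left for sync and porches
        print(f"{std}: {pixel_dwell_us(std, 16):.2f} us per pixel, "
              f"{lines_per_row(std, 16)} lines per sensor row, "
              f"{blanking:.1f} us horizontal blanking")
```

Microsecond-scale pixel times are easy for a multiplexer or sample-and-hold chip. The vertical sync sequence is the fiddly part, which is a fair argument for using chips there.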

Old overhead projectors have good-sized Fresnel lenses.


While a lens could be nice, I like the idea of putting a bunch of discrete phototransistors on a ball to make a giant model of a fly’s eye.

They could even be wired up with a neural network based on the nerves that connect a fly’s vision receptors to its brain.

Please, please someone do that!

It may need lenses, though. A while ago some scientists made a camera with a ‘bug eye’ lens. It can take a picture and then select anything as a virtual focal point. I won’t post the link; I do that waaayyyyy too often! ;)

And if it is an utter failure, it would be a piece of art I would consider buying!