Many stop lights at street intersections display a countdown of the remaining seconds before the light changes. If you’re like me, you count this time in your head and then check how in sync you are. But did you know that if the French had their way back in the 1890s when they tried to introduce decimal time, you’d be counting to a different beat? Did you know the Chinese have used decimal time for millennia? And did you know that you may have unknowingly used it already if you’ve programmed in Linux? Read on to see what decimal time is along with the answers to these questions.

How We Got Where We Are

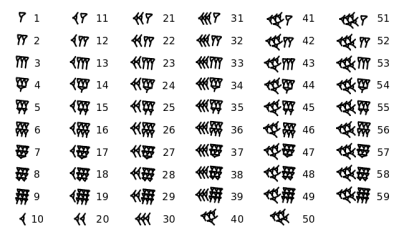

Dividing the hour into 60 minutes and the minute into 60 seconds didn’t come into everyday use until the 16th century, when mechanical clocks became able to display such fine divisions; before that, they just weren’t practical. The choice of 60 stems ultimately from the Sumerians and their sexagesimal (base-60) number system, though it’s difficult to find just when it was adopted for the units of time. The words minute and second come from the Latin pars minuta prima, meaning “first small part”, and pars minuta secunda, or “second small part”.

For a long time the second was defined as 1/86,400 of a mean solar day (60 × 60 × 24 = 86,400). In 1967 it was redefined more precisely as “the duration of 9,192,631,770 periods of the radiation corresponding to the transition between two hyperfine levels of the ground state of the cesium 133 atom”.

So, though necessary and useful, our units of time derive from fairly arbitrary sources and have arbitrarily chosen lengths.

Metric Time Vs Decimal Time

Before getting into decimal time, we should clear up what we mean by metric time, since the two terms are often confused. Metric time is for measuring time intervals. We’re all familiar with these units and use them frequently: milliseconds, microseconds, nanoseconds, and so on. While the base unit, the second, has its origin in the Sumerian base-60 number system, it is a metric unit.

Decimal time, by contrast, refers to the time of day: dividing the day using base 10 instead of into 24 hours of 60 minutes of 60 seconds.

French Decimal Time

The French system divided the day into 10 decimal hours, each hour into 100 decimal minutes, and each minute into 100 decimal seconds. This allowed time to be written the way we already do, 2:34 for 2 hours and 34 minutes, but also as the decimal number 2.34 or even 234. For timestamping we’d write 2016-12-08.234. We could also write it as the fraction 0.234 of the day, or as a percentage, 23.4% of the day. The seconds can simply be appended as two more digits.
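A minimal sketch of how those representations line up, assuming the 10 × 100 × 100 split (100,000 decimal seconds per day). The helper names `french_to_day_fraction` and `decimal_timestamp` are hypothetical, and the `date.HMM` timestamp layout simply follows the 2016-12-08.234 example above.

```python
from fractions import Fraction

def french_to_day_fraction(hours: int, minutes: int, seconds: int = 0) -> Fraction:
    """Fraction of the day elapsed at a French decimal time (10 h x 100 min x 100 s)."""
    if not (0 <= hours < 10 and 0 <= minutes < 100 and 0 <= seconds < 100):
        raise ValueError("decimal time out of range")
    total_seconds = hours * 10_000 + minutes * 100 + seconds  # 100,000 per day
    return Fraction(total_seconds, 100_000)

def decimal_timestamp(date_iso: str, hours: int, minutes: int) -> str:
    """Hypothetical 'date.HMM' stamp: one decimal-hour digit, then two minute digits."""
    return f"{date_iso}.{hours}{minutes:02d}"

t = french_to_day_fraction(2, 34)
print(float(t), f"{float(t * 100)}%")           # 0.234 23.4%
print(decimal_timestamp("2016-12-08", 2, 34))  # 2016-12-08.234
```

Using `Fraction` keeps the arithmetic exact; the digits of the clock reading, the day fraction and the percentage are the same digits with the decimal point moved.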

That’s certainly simpler than what we have to do with our standard system. To convert 2:34 AM to a single number, the minutes elapsed since midnight, we have to do:

(2 hours*60 min/hour)+34 min=154 minutes

As a fraction of the day it’s:

154 min/(60 min/hour * 24 hours)=0.1069

And finally, 0.1069 as a percentage is 10.69%. Summarizing, that’s the time 2:34 AM represented as 154 minutes, 0.1069, and 10.69%. You can hardly blame the French for trying. Vive la revolution!
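The same three-step conversion for conventional time, written as a small Python function (the name `conventional_breakdown` is just for illustration):

```python
def conventional_breakdown(hours: int, minutes: int) -> tuple[int, float, float]:
    """Return (minutes since midnight, fraction of day, percent of day)."""
    elapsed = hours * 60 + minutes   # (2 h * 60 min/h) + 34 min
    fraction = elapsed / (60 * 24)   # 1440 minutes per day
    return elapsed, round(fraction, 4), round(fraction * 100, 2)

print(conventional_breakdown(2, 34))  # (154, 0.1069, 10.69)
```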

Decimal time went into official use in France on September 22, 1794, but its mandatory use ended on April 7, 1795, giving it a very short life. Further attempts were made in the late 1800s, but all failed.

If you do the math, each hour in the French decimal system was 144 conventional minutes long, each minute was 86.4 conventional seconds, and each second was 0.864 conventional seconds. If you can get used to an hour that’s 2.4 times as long, probably not too difficult a feat, the minutes and seconds are reasonably close to what we’re used to. However, science uses the conventional second as its base unit of time, and that’s a huge amount of momentum to overcome.
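The math is short enough to check directly. This sketch derives each French unit from the 86,400-second day, using exact fractions so no floating-point noise creeps in:

```python
from fractions import Fraction

SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 conventional seconds

# French decimal units, in conventional seconds (exact)
french_hour = Fraction(SECONDS_PER_DAY, 10)          # 1/10 of a day
french_minute = Fraction(SECONDS_PER_DAY, 1_000)     # 1/1,000 of a day
french_second = Fraction(SECONDS_PER_DAY, 100_000)   # 1/100,000 of a day

print(float(french_hour / 60))    # 144.0 conventional minutes
print(float(french_minute))       # 86.4 conventional seconds
print(float(french_second))       # 0.864 conventional seconds
print(float(french_hour / 3600))  # 2.4 conventional hours
```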

Incidentally, in 1998, as part of a marketing campaign, the Swatch corporation, a Swiss watchmaker, borrowed from French decimal time by breaking the day into 1000 ‘.beats’. Each .beat is therefore 86.4 seconds long, the same as a French decimal minute. For many years they manufactured watches that displayed both standard time and .beat time, which they also called Internet Time.
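A sketch of a .beat calculation. One assumption not stated above: Swatch reckons Internet Time on “Biel Mean Time” (BMT), a fixed UTC+1 offset with no daylight saving, so .beat 000 is midnight in Biel. The function name `swatch_beats` is hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Assumption: Internet Time uses Biel Mean Time, fixed UTC+1, no daylight saving.
BMT = timezone(timedelta(hours=1))

def swatch_beats(moment: datetime) -> float:
    """.beat value (0 <= beats < 1000) for a timezone-aware datetime."""
    local = moment.astimezone(BMT)
    seconds = (local.hour * 3600 + local.minute * 60 + local.second
               + local.microsecond / 1_000_000)
    return seconds * 1000 / 86_400  # one .beat = 86.4 s

noon_bmt = datetime(2016, 12, 8, 11, 0, tzinfo=timezone.utc)  # 12:00 in Biel
print(f"@{int(swatch_beats(noon_bmt)):03d}")  # @500
```

Multiplying by 1000 before dividing by 86,400 avoids dividing by the inexact float 86.4.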

Chinese Decimal Time

China has as long and varied a history as that of the West, and for over 2000 years, China used decimal time for at least one unit of its time system. They had a system where the day was divided into 12 double hours, but also a system dividing it into 100 ke. Each ke was further divided into either 60 or 100 fen at different times in its history.
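With 100 ke per day, each ke lasts 864 conventional seconds (14.4 minutes). The sketch below converts a modern clock reading to ke and fen, assuming the count starts at midnight; `fen_per_ke` covers the 60- and 100-fen variants mentioned above. The function name `to_ke` is hypothetical.

```python
def to_ke(hours: int, minutes: int, fen_per_ke: int = 100) -> tuple[int, int]:
    """Express a conventional clock time as (ke, fen) elapsed since midnight.

    Assumes 100 ke per day (864 s each) and a midnight starting point.
    """
    seconds = hours * 3600 + minutes * 60
    ke, remainder = divmod(seconds, 864)     # whole ke elapsed
    fen = remainder * fen_per_ke // 864      # whole fen into the current ke
    return ke, fen

print(to_ke(12, 0))                 # (50, 0)
print(to_ke(2, 30))                 # (10, 41)
print(to_ke(2, 30, fen_per_ke=60))  # (10, 25)
```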

Fractional Days

Decimal time is still in use today, though. The fractional day is also a form of decimal time and is used in science and in computing. The time of day is expressed in conventional 24-hour time and then converted to a fraction of the day. For example, if time 0 is 12:00 midnight, 2:30 AM is:

((2 hours × 3600 sec/hour) + (30 min × 60 sec/min)) / 86400 sec/day = 0.10417
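The same calculation as a small function (the name `fractional_day` is illustrative), counting seconds since midnight and dividing by the 86,400 seconds in a day:

```python
from datetime import time

def fractional_day(t: time) -> float:
    """Fraction of a day elapsed at clock time t, counting from midnight."""
    seconds = t.hour * 3600 + t.minute * 60 + t.second + t.microsecond / 1_000_000
    return seconds / 86_400

print(round(fractional_day(time(2, 30)), 5))  # 0.10417
```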

As many decimal places as needed can be used.

One example where fractional days are used is the Julian date, used by astronomers. Julian dates count days and fractions of a day continuously from day 0, which began at noon Universal Time (UT) on November 24, 4714 BC in the proleptic Gregorian calendar (January 1, 4713 BC in the proleptic Julian calendar). For example, 00:30:00 UT on January 1, 2013 as a Julian date is 2,456,293.520833.
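A minimal sketch that reproduces the example, assuming the well-known fact that the Unix epoch (1970-01-01 00:00 UT) is Julian date 2,440,587.5. It works from Unix timestamps, which ignore leap seconds, so it is only accurate to about a second; the function name `julian_date` is hypothetical.

```python
from datetime import datetime, timezone

# Julian date of the Unix epoch, 1970-01-01 00:00:00 UT (midnight, hence the .5).
UNIX_EPOCH_JD = 2_440_587.5

def julian_date(moment: datetime) -> float:
    """Julian date for a timezone-aware datetime (leap seconds ignored)."""
    return moment.timestamp() / 86_400 + UNIX_EPOCH_JD

jd = julian_date(datetime(2013, 1, 1, 0, 30, tzinfo=timezone.utc))
print(f"{jd:,.6f}")  # 2,456,293.520833
```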

Microsoft Excel also uses fractional days, in dates similar to Julian dates but called serial dates. The time of day is stored as a decimal fraction of the 24-hour day, starting from midnight.
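A sketch of an Excel-style serial date, assuming Excel’s default 1900 date system. Because that system treats 1900 as a leap year, serial numbers for dates from March 1, 1900 onward behave as if counted from December 30, 1899; the Mac 1904 date system uses a different origin. The function name `excel_serial` is hypothetical.

```python
from datetime import datetime

# Effective day 0 of Excel's 1900 date system, valid for dates from 1900-03-01 on.
EXCEL_EPOCH = datetime(1899, 12, 30)

def excel_serial(moment: datetime) -> float:
    """Whole days since the epoch plus the fraction of the day since midnight."""
    delta = moment - EXCEL_EPOCH
    return delta.days + delta.seconds / 86_400 + delta.microseconds / 86_400e6

print(excel_serial(datetime(2013, 1, 1)))      # 41275.0
print(excel_serial(datetime(2013, 1, 1, 12)))  # 41275.5
```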

Unix/Linux Time

We may be repulsed by the idea of switching to an unfamiliar decimal time in our daily lives, but many of us have already used one when calling the time() function in Unix variants such as Linux. This function returns the current time as the number of seconds since an epoch; the Unix epoch began at 00:00:00 UTC on January 1, 1970, a Thursday. At least those seconds are the length we’re used to: no need to resynchronize our internal counter there.

Vive La Revolution!

But while the French Revolution is in the past, rebels do exist here at Hackaday. [Knivd] is one such rebel, who has devised a decimal time called C10 that’s slightly different from the French system. And he already has at least one fellow conspirator, [Danjovic], who has made a decimal clock called DC-10. How long before we’re all counting to the beat of a different drum, and crossing those intersections before the light has changed?

The imperviousness of the standard method of dividing the day stands as more of a condemnation of base-10 than anything else. Any quantity that needs to be divided and subdivided as often as time does needs to be expressed in a base with as many submultiples as possible, and that more than anything is the reason the 12/60 system persists. That is why decimal time has failed, and why the ecclesiastical system of seven hours in the day failed to catch on despite being backed by the Church.

I don’t get what you mean by “any quantity that needs to be divided and subdivided as often as time does needs to be expressed in a base with as many submultiples as possible”.

What are you saying and why is this not possible in base10?

Base-10 only has two and five as divisors; base-12 has two, three, four, and six. Sexagesimal (base 60) has twelve factors, namely 1, 2, 3, 4, 5, 6, 10, 12, 15, 20, 30, and 60, of which 2, 3, and 5 are prime numbers. With so many factors, many fractions involving sexagesimal numbers are simplified. For example, one hour can be divided evenly into sections of 30 minutes, 20 minutes, 15 minutes, 12 minutes, 10 minutes, 6 minutes, 5 minutes, 4 minutes, 3 minutes, 2 minutes, and 1 minute. 60 is the smallest number that is divisible by every number from 1 to 6; that is, it is the lowest common multiple of 1, 2, 3, 4, 5, and 6.
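The divisor counts above are easy to check. Base 100 is included here for comparison with a 100-minute decimal hour; that comparison is an addition, not the commenter’s. `math.lcm` with several arguments needs Python 3.9 or later.

```python
from math import lcm  # Python 3.9+

def divisors(n: int) -> list[int]:
    """All positive divisors of n, in ascending order."""
    return [d for d in range(1, n + 1) if n % d == 0]

for base in (10, 12, 60, 100):
    print(base, len(divisors(base)), divisors(base))

print(lcm(1, 2, 3, 4, 5, 6))  # 60
```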

Time is about the only quantity that we routinely need to do mental calculations with, and this richness of common divisors is the major reason the current system has resisted attempts to change it.

Thanks, I had not thought of that.

It’s a dumb argument. You can easily divide 10 – that’s where percentages and decimals come from.

Oh boy, I can’t wait to specify time in non-terminating repeating decimals

Why would you bother with all those awkward divisions? If the equivalent of an hour is divided into 100 units, then why the heck wouldn’t you simply (very simply) talk about the actual number of units? Why is it even necessary to break stuff down into awkward discrete ‘blocks’ of time? A slight aside: one hour divided by 12 is 5 minutes. Who (even amongst those wedded to speaking in vulgar fractions) talks about a fifth of an hour?

Again, then you have to come up with some reason why there has been such resistance to decimal time, and why, in places where it was tried in the distant past, like China, it did not survive. The theory that it was the utility of many submultiples is not mine; it has been advanced by others on many occasions to explain this.

I would say that the reason is very simple. People don’t like change. And with time so embedded in all aspects of how our society works, the change would be colossal and the reasons for change would need to be profoundly compelling. I don’t think they are. Would decimal time be better? I think so. Would it be better to the extent that it would justify changing just about everything? Nope – it would barely scratch the surface. It will remain a thought exercise for any rationally foreseeable future of our supposed civilisation.

You’re avoiding the question. Decimal time was tried by several cultures that were not wedded to the 12/60 system yet it never took hold. Furthermore the broad argument that decimal measures are so superior to any other should apply across all domains, yet there is resistance to conversion even in those places where the metric system has been in use for generations. You can’t simply claim that they are being reactionary.

I can’t comment on why decimal time did not take hold in earlier cultures; I simply don’t know. I don’t believe I am saying that this particular change is primarily reactionary – it would be unimaginably expensive and dangerous. In general, though, I reiterate my belief that resistance to change is generally not based on logic or reason, but is an emotional response to disruption of an established way of life.

I have answered you elsewhere in this thread and addressed this notion. I need not repeat it here.

On reflection, I think I’m saying that resistance to change is very much a visceral reaction, but there are also financial costs and other negative impacts on a society to consider. So yes, it’s not simply people being reactionary.

I also like the idea of a decimal time, but the argument is absolutely valid. Think of anything with schedules, like trains. You very often want an integer number of events per hour, spaced an integer number of minutes apart for convenience in calculation: 3 trains per hour, or 4 or 5 or 6. Here it is definitely an advantage to have many divisors. Although e.g. 8 per hour (the Vienna subway late at night, before the even later 10- or 15-minute intervals) leads to 7.5-minute or “7-8 min” intervals.
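The scheduling point can be made concrete by comparing headways in a 60-minute hour with a hypothetical 100-decimal-minute hour (the 100-minute case is an illustration, not something the commenter computed). `Fraction` shows exactly which intervals come out as whole minutes; `headway` is a hypothetical name.

```python
from fractions import Fraction

def headway(trains_per_hour: int, minutes_per_hour: int) -> Fraction:
    """Exact minutes between evenly spaced departures."""
    return Fraction(minutes_per_hour, trains_per_hour)

for n in (3, 4, 5, 6, 8):
    # A denominator other than 1 means the interval is not a whole number of minutes.
    print(n, headway(n, 60), headway(n, 100))
```

With 60 minutes, only the 8-per-hour case leaves a fraction (15/2); with 100 minutes, 3, 6 and 8 trains per hour all do.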

Time is something where the whole world needs to be on the same page. Its main utility is to synchronise one’s activities with fellow humans. Americans like to hold on to the way things have always been for purely emotional reasons, be it with imperial units, which even their originators, the British, have given up.

They like the super divisibility of 12! So they would rather do the extra math: e.g. when calculating the area of 4′ 7″ by 7′ 11″, they like converting the inches to feet or the feet to inches, then multiplying to get square feet (or square inches, then dividing by 144 to get square feet).

Well it doesn’t matter to me if Americans calculate distances and depths in miles, yards, feet, inches, fathom, furlong, nautical miles, etc. while I use the unit metre and its simple prefixed divisions or multiples to scale down or scale up. It would be one hell of a problem if we started using decimal time and as usual they would keep using the prehistoric sexagesimal time system.

They like the super divisibility of 60, as if they referred to 3 minutes as a twentieth of an hour and communicated in such terms, while the rest of the world just says 3 minutes, meaning 57 minutes are left in the hour, which would be just as good as saying 97 minutes are left in a 100-minute hour.

When we break a larger unit into fractions, perfect division is not needed, and it is not always possible even with 60. When precision is needed, one switches to a smaller unit.

Some people find it hard to remember easy prefixes like kilo, centi, deci, milli, micro, nano, etc., which are used across different units to mean exactly the same thing. But they find nautical miles, miles, furlongs, yards, feet, inches, even smoots far easier, and they cite the super divisibility of 12 and 60 as the reason, haha.

Then they love having a whole new set of units for other quantities, e.g. for weight: pounds, ounces, stones, etc. They find it easy to remember them all, but find deci-, centi-, milli-, etc., which are common across all units, difficult to remember. And the reason they give is not that they have grown up using imperial units but the super divisibility of 60, 12, etc. Oh, how scientific!

A seven hour day may have been tried by a non-biblical church. (It’s news to me that the “Church” even tried to enforce a seven hour day, but I was ignorant I guess.) The Bible mentions a 12 hour day in a few places, and a night divided into four “watches” (which was likely a Roman construct before Christ). But what about the 7-day week? It seems pretty enduring without any church trying to enforce it. The French also tried a 10-day week which failed miserably.

I also find interesting the year — the dates you see online, in computers, and in pretty much anything related to universal timekeeping. We are currently 2,016 years after what? It’s benchmarked upon the birth of Christ. Happy Birthday, Jesus. Merry Christmas.

All in all, it seems that any system other than astronomical observation is fairly arbitrary, and any one system would probably replace any other fairly well; they are all based on abstractions to begin with, after all.

Canonical hours mark the divisions of the day in terms of periods of fixed prayer at regular intervals. The Biblical reference is Psalm 119:164, which states: “Seven times a day I praise you for your righteous laws” (of this, Saint Symeon of Thessaloniki writes that “the times of prayer and the services are seven in number, like the number of gifts of the Spirit, since the holy prayers are from the Spirit”). This system, with regular modifications, was the way the day was divided in cloistered communities from about the eighth century on, and it formed the schedule for the Office said in parish churches.

Oh and the seven-day week is Biblical as well. Exodus 20:9 – “Six days you shall labor, upon the seventh: rest”

It’s also based on the beginning of the age of Pisces. Maybe the currently popular religious myths were retrofitted to that. Back then, people believed in astrology a bit more, so people of that day would’ve expected something to happen around that time anyway. But then again, the idea of counting years upward from that point wasn’t implemented until 525 AD.

The “watches” were probably shift rotations of soldiers who were watching something (the royal guard perhaps), that’s what I always thought. If you are expected to stand and stay vigilant for 3 hours, I guess you might need a break.

“But what about the 7-day week? It seems pretty enduring without any church trying to enforce it.”

A week is the duration between two successive cardinal (if that is the term), easily recognised phases of the Moon. A week from New Moon is First Quarter, a week after that is Full Moon, a week after that is Last Quarter, and a week after that is New Moon again.

I’m trying to think of mental arithmetic I actually perform involving time. The only division I can really think of is dividing by two, as in, I’m going for a 2.5 hour ride, so I need to turn around at (or a bit before) 1.25 hours, and as you can see that works as well in decimal and sexagesimal.

What I don’t do is think “I have 2 hours left till quitting time. I’m going to spend half on hackaday, a third watching porn, and the remaining sixth pretending to work. 2 / 6 equals… blast! A repeating decimal. Foiled again!” And I definitely don’t divide any lengths of time up into twelfths or fifteenths.

I’m not counting the way we round time in quarter hours (“quarter till five”, etc.), as that’s not because we care about dividing an hour into four equal parts, but because there’s a certain class of scheduling where we need a unit about 900 seconds long — a minute is too fine, and an hour is too coarse. If there were, say, 50 minutes in an hour, we’d probably reckon time in fifths of an hour (720 seconds) for the same purposes. (Or in the French decimal time system, we’d round to a tenth of an hour, 864 seconds.) It would be bad news if we had a prime number of minutes to the hour, but anything with a factor somewhere in the 10-20 minute range would serve well enough.

“And I definitely don’t divide any lengths of time up into twelfths.” Then you are unusual if you have never used the expression “five to” or “five after” a given hour, or do not intuit the hour divided as it is displayed on an analog clock; the point here being that most people do.

Actually, I don’t — I don’t know if it’s a regional thing or what, but I, along with most people I know, read the time in hours and minutes like “ten oh five” or “eleven fifty-five” except when rounding to quarter hours, which are phrased as “past”/”after” or “till”/”to”. I can only think of one guy (former coworker) who would speak that way for five minute increments. (Now that I think of it, there’s a few exceptions, notably New Year’s Eve.)

But as I already explained, even if I did, that doesn’t count: it’s because of the pre-existing 12/60 base for time that we use 12 divisions of 5 minutes each, not because clocks must be divided into 12. The test is simple: if you’re really dividing by n, then in a different clock system you’d still be dividing by n, and cursing the odd fractions. If you’re taking some convenient factorization, then in a different clock system you’d use some other divisions that work out evenly. No decimal clock would be labeled with 12 divisions of 8-1/3 minutes; rather, it would have 10 (or 20) divisions of 10 (or 5) decimal minutes.

In the final analysis, despite several attempts to introduce decimalized time, the 12/60 system survived, and continues to, while several other systems of measurement came and went. This persistence has deep roots, and the question then becomes why there is such deep resistance to change, rather than asserting that it would be just as easy to use a base-10 division. The argument made most often to explain this is the utility of having many divisors, and in the absence of any better one, it is at the very least plausible.

Ultimately, it is utility that should drive any discussion in metrology, not some idiotic desire to create a unified system that appeals to the sensibilities of a small population whose sense of aesthetics is driven by symmetry. Measurements, after all, are just a tool, and as a tool they should serve the needs of the user first and above all.

I would suggest that the reason for resistance to change is resistance to change.

Bullshit, people don’t divide time into repeated small units, at best into ten minutes or a quarter hour. And normal people don’t need pinpoint precision in daily life. (Yes, you do in industry, but there the division argument is irrelevant, since you use the smallest unit as the standard and then just use as many of those as required.)

And as for the claim that this is why introduction failed in the distant past: back then they could not even measure time with such precision, so that argument is ridiculous. They had sundials and sand or water running through a hole, which had no real reference such as an atomic clock and weren’t that precise relative to an hour.

So if anybody claims that, you can safely assume he’s not an expert of any description.

Nevertheless the major division of the day (and night) was by twelve, not by ten, even though people counted by tens. That, and the fact that weight and linear measurement used divisions of twelve or sixteen more often than any other, and that division by ten is notable by its absence in these evolved systems is the question that you need to answer before extending the notion that metric has superior utility.

Apparently the Chinese used their decimal system for 1700 years, only changing to the Western system when the Church interfered. So it was a good enough system for them.

The reason we still use our system is pure inertia. Everybody knows it, all the clocks are made for it, appointments, calendars, everything. Nobody understands decimal time and you can’t buy anything that uses it, at least outside of some specialist product.

Britain changed from our weird, antiquated duodecimal system of currency (12d in 1s, 20s in £1) to a sensible decimal one. It took a couple of years of getting people ready for it, and a big campaign to get the information out. But it was worth it.

I suppose for time the present way works well enough, and we don’t need to calculate time as often as we do money. So inertia, and no real incentive to change, are why we’ve kept the prehistoric system.

The ancient Chinese division of the day into 100 units coexisted alongside a system which divided the day into 12 double-hours – in other words they used both simultaneously. Other divisions of the day were used as well including 120, 96 and 108 units which were also subdivided sometimes into 100, sometimes into 60. Had they chosen to they could have insisted on decimal clocks – they were a large enough market that this would have been provided had there been a demand.

The ‘only inertia’ excuse is flimsy; even in France the introduction of metric weights and measures ran into stiff opposition, and Napoleon I rolled some of it back and started the practice of redefining traditional measures in rounded metric units. Adoption had to be forced by law, and they tried introducing decimal time twice, failing both times. There are deeper underlying reasons why decimal time has failed, and they have little to do with bad attitudes on the part of the public and everything to do with poor utility.

You can’t make Americans use a sane system of lengths and weights, even when it’s them and North Korea against the rest of the world.

How are you going to sell a new system of time to them?

Americans already use the more sensible of the two common systems in use.

NASA certainly does.

Hmm, that’s a surprise, I thought they would have used that crap French system.

They DO use that “crap” french system, and when they didn’t it crashed.

Also your “incredible” inch is defined in millimeters.

The STEM fields, anyways. The rest of the population suffers instead.

Also, I don’t think a system that is only used by 3 countries of the world can be called “common”.

Even in countries that are officially metric, imperial measures still abound. Carpentry, the needle trades, and several other areas cling to the old system. Much of aviation still uses the decimal inch, and the decimal ounce as well. While in decline, this system isn’t dead by a long shot.

In the countries I’ve lived or worked in that are metric (admittedly not enough to be considered a fully objective assessment), it would be fairer to say that imperial measures linger on rather than abound. The needle trades most definitely – hardly surprising, as they chose to do a ‘false’ metrication and adopted the cm (the ‘pseudo-inch’) instead of biting the bullet and going to mm and m. Carpentry not so much – mostly the amateurs about my age and older who refuse to make the switch, and even less so in the building trade. Imperial very definitely is not dead – its putrefying carcass continues to ooze its stench across our society, and it will take generations to air the place out.

You’re editorializing now, Leonard, exactly the sort of thing you were just accusing me of. The fact remains that if metric were as damned superior as all of its doctrinaire supporters constantly assert, it would have displaced the old units on that factor alone. That it hasn’t on that basis is the question you need to answer.

And my desk reference shows well over a hundred of these metrified older units in official use throughout the world.

Sorry – I got carried away by the emotion of the moment.

Imperial clings on because people don’t like change. In a generation or two it will be almost entirely forgotten in any country that doesn’t officially use it. For example, and a small confession: being English, we still use miles on the roads, so I have a good understanding of what a mile is and of relative speeds in mph, but I have very little understanding of yards, feet and inches, as I grew up using meters for all other measurements. Imperial would mean nothing to me if we had gone fully metric. At the end of the day, they are both fairly arbitrary concepts constructed by man, so you’re most likely to favour what you have grown up using. Having said that, I wish we had gone fully metric, as having a system that is mostly metric can get confusing at times.

Obviously not in everyday life. They mostly calculate in feet, pounds, °F and the like. You only think it is more sensible because you are used to it.

What could be more ‘Merican than units based on the length of arbitrary body parts?

Wait, how is it not perfectly sane to use an average extremity size as the base unit of length? It guarantees that an average person will be able to roughly measure things with no other size reference. Even people who are far off (so that, say, their foot is ~1.5 or 0.75) will just have to remember a number near 1 to convert.

Sucks that they didn’t originally make the meter one ten-millionth of the *circumference* of the Earth, rather than the quarter-circumference. Then the centimeter would’ve been ~roughly an inch (okay, 1.57 inches), and a decimeter would’ve been ~roughly a foot (okay, 1.31 feet).

The large units are pretty much arbitrary anyway.

The metre today is defined by how far light travels in vacuum in a second, with the second defined by a certain number of ground-state oscillations of cesium-133.

Sorry, not a second: 1/299 792 458th of a second. I just checked to be sure, and I was wrong.

How it’s defined *now* is pointless: it’s how it was originally defined that determined what its scale was. It’s not like a meter now is some hugely different thing from when it was founded.

They just did that to match the existing length. You could do the same with inches… pick a different interval or a different element.

They *did* do that with inches – an inch is exactly 2.54 centimeters, and a foot is 12 times that. The point is how the *original* unit came about. After all, since metric is *so* in love with powers of 10, wouldn’t it have made a lot more sense to just redefine the meter to be the distance light travels in 1/300,000,000 of a second?

Except that would’ve broken the original length, which everyone in the world already used. And the original unit was defined because it was something like 1/10,000,000 of the distance from the North Pole to the equator along the meridian through Paris.

The units of Common Measure use exactly the same standards as SI. The chosen size of the units is based primarily on convenience for the task to which they are applied. Common Measure has a much richer set of units than SI because it has many domain-specific units for length, volume, energy, etc., which is quite useful for everyday use within an industry. However, the standards upon which they are defined are exactly the same standards used for SI. For instance, one millimeter = the distance light travels in a vacuum in 1/2.99792458e11 seconds; one inch = the distance light travels in a vacuum in 1/1.18028526771654e10 seconds. In either case, the definition is based on the constant standard: the speed of light.
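Both light-travel figures follow from the exact speed of light and the exact 25.4 mm inch. A quick check (the helper `light_time_reciprocal` is a hypothetical name):

```python
C = 299_792_458  # speed of light in vacuum, m/s (exact by definition)

def light_time_reciprocal(length_m: float) -> float:
    """1/t, where t is the time in seconds light needs to cross length_m in vacuum."""
    return C / length_m

MILLIMETRE = 0.001  # m
INCH = 0.0254       # m, exactly 25.4 mm

print(f"mm:   1/{light_time_reciprocal(MILLIMETRE):.8e} s")
print(f"inch: 1/{light_time_reciprocal(INCH):.14e} s")
```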

The U.S. has used the Metric system for 40 years now. We just define a set of customary units in terms of the metric ones as well. One inch is exactly 25.4 mm, and so on.

Back to the smoots!!!

https://en.wikipedia.org/wiki/Smoot

https://www.youtube.com/watch?v=-scs_yF59YE

Looks good:

https://upload.wikimedia.org/wikipedia/commons/c/c8/English_length_units_graph.png

You’re forgetting Angstrom, astronomical unit, parsec, light year, Planck length, radius of the earth, radius of the sun, and probably several others I’ve forgotten about.

Convenience units occur everywhere. The fact that Imperial convenience units are oddly named is just because they’re old, not because they’re dumb.

It’s not about the names. It’s about their relation to each other.

1yd = 3ft = 36in

The metric system makes conversion more convenient.

1m = 10dm = 100cm = 1000mm

Using one base unit (eg the meter) and combining it with unit prefixes is a quite good solution. Of course, the meter itself is based on an arbitrary piece of metal. Just like the imperial units.

Oh. By the way… Imperial units:

http://www.bilder-upload.eu/show.php?file=d571d1-1481868555.jpg

Damn.

https://picload.org/image/ralrwgdi/06.06.16-1.jpg

@SebiR:

Although just in this case it is quite funny. Even here in Europe, plumbers themselves quite often use inch dimensions for pipes. The definitions are sometimes so odd, though, that you cannot measure 19 mm on a “3/4 inch” pipe or its thread. It has to do with the relation between inside and outside diameters, which changed as new (higher-strength) materials and manufacturing techniques were developed. For example, the inside diameter was the nominal size, but for the thread the outside diameter mattered more, and when the walls were made thinner, the outside diameter changed, or something along those lines.

@Martin

Yeah. I know. I’m from Germany and I work with pressure sensors every day. So I’m familiar with those measurements and what they mean ;)

And yes, our racks are 19″ wide and not 48.26cm. Our HDDs have 3.5″ and not 8.89cm and so on ;)

Not only are convenience units not dumb, they are precisely that: convenient, in that they are useful for the industry to which they are applied. SI wants all industries to use the same units which is just clumsy if data interchange between industries is infrequent.

SebiR, but we don’t often use yards and inches at the same time, so that isn’t really a good argument. We either use feet and yards together or feet and inches together, depending on what industry we are in, but I’ve never seen all three purposefully used together. No one would ever say 26 yards 2 feet and 3 inches, because there is never a circumstance (that I’ve ever seen) where there is a need to do that. Moreover, when certain types of accuracy are necessary, Conventional Measure (what the U.S. uses, btw, not Imperial) industries use base-10 representations of units. For instance, machining is typically done in 1/10, 1/100, 1/1000 and 1/10000 of an inch increments. It turns out that base ten can be applied to any unit system. How about that?

“It’s about their relation to each other.”

1 atmosphere is 101325 Pa. An astronomical unit is 149597870700 meters. A light year is 9.4607 petameters. None of those have that nice power-of-10 relation you said, and they still get used *everywhere* in science. Heck, I use feet because the speed of light is so stupidly close to 1 foot/ns that I really, really wish they would just shrink the inch by 1.6%.
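The foot-per-nanosecond and light-year figures can be checked from exact definitions: the foot is exactly 0.3048 m, and the light year is conventionally based on the Julian year of 365.25 days (an assumption the comment does not state).

```python
C = 299_792_458                 # m/s, exact
FOOT = 0.3048                   # m, exact
JULIAN_YEAR = 365.25 * 86_400   # s, the year conventionally used for the light year

ft_per_ns = C * 1e-9 / FOOT          # feet light travels in one nanosecond
shrink = 1 - ft_per_ns               # fraction the foot (and inch) would have to shrink
light_year_pm = C * JULIAN_YEAR / 1e15

print(f"{ft_per_ns:.4f} ft/ns, shrink by {shrink:.2%}, 1 ly = {light_year_pm:.4f} Pm")
# 0.9836 ft/ns, shrink by 1.64%, 1 ly = 9.4607 Pm
```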

“The metric system makes conversion more convenient.”

That’s the problem. Converting between nanometers and kilometers is stupid – there’s no point to doing it. They’re both originally derived from the definition of a meter. They’re really the same unit, it’s just that the French apparently didn’t like saying “one thousandth of a meter.” I mean, why is it smart to just invent a random name for 10^-9 meters? Why wouldn’t “neg9meters” be a better name? Then you wouldn’t even have to remember the prefix! Quick! What’s the atto prefix? Which one is deci, and which one is deca? How do you go from peta to milli? Why is this a good thing again?

Convenience units stuck around not only because they’re convenient; they can be more accurate, too. If you have a ‘standard’ object, you give measurements of construction based on that standard object. Measuring that standard object in “standard units”, and then giving measurements based on the standard units, just multiplies the error in that original measurement. It gets even worse if you have fractions of that standard object.

The parsec exists in astronomy for exactly this reason. Distances were measured to nearby objects by parallax: so if you know the distance to 1 star is 3.2 parsecs, and the distance to a second star is 6.4 parsecs, you know that the second is twice as far as the first. Comparing objects measured in parsecs to objects measured in light years (via some different mechanism) required bringing the astronomical unit into play, which would have introduced errors far larger than the parallax error because the astronomical unit wasn’t measured well.

Pat: That is really just too much. Have you ever heard of the calculator? The computer? An inch is EXACTLY 25.4 mm. By definition. This makes all of the other measurements related to the inch equally well-defined and precise. You’re worried about rounding error? I don’t know about your computer, but mine can give me 15 digits of precision. I think that’s enough. I think you’re just arguing for the sake of argument.
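A minimal Python sketch of that point: because the inch is defined as exactly 25.4 mm (the 1959 international yard and pound agreement), every customary length built from it has an exact metric value, and `fractions.Fraction` keeps it exact with no rounding at all. The unit table is illustrative, not exhaustive.

```python
from fractions import Fraction

# The international inch: exactly 25.4 mm.
INCH_MM = Fraction(254, 10)

# Customary lengths expressed in inches (all exact integers).
UNITS_IN_INCHES = {
    "inch": 1,
    "foot": 12,
    "yard": 36,
    "mile": 36 * 1760,
}

for name, inches in UNITS_IN_INCHES.items():
    mm = inches * INCH_MM  # exact rational arithmetic, no floating-point rounding
    print(f"1 {name} = {float(mm)} mm (exact: {mm})")
```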

A calculator gives you numbers, not measurements. But you know what I do have? A yardstick. When you show me a meterstick that can be divided in 3 as accurately as a yardstick, then you can call my argument pointless.

What an amazing conversion table!

Precisely. Conventional Measure units and SI units all use the same standards today. SI superiority is largely a case of Eurocentrist bias. As an earlier poster suggested, the factors of conventional measure (usually base 2 or base 12) are much more useful than base 10, and the sizes of the typical units are also more useful for the purposes they were made for.

The only place where SI has a significant advantage is in the relationship of mass to volume.

**grabs popcorn**

i swear someone made a controversy plotter in jupyter at the HAD offices, it would explain so many headlines.

I imagine most will not care. Global commerce and communications helps with uniformity.

most probably won’t care, but every time the metric vs imperial, decimal vs fraction debate rears its head it usually ends in pages of debate.

globalization has helped; the metric system is used almost everywhere for almost everything.

that said engine mounts and several other areas are still using imperial, even in metric countries.

personally i don’t think conversion is that hard.

USA aside, conversion of a country to Metric is not hard. Conversion of a measurement is also not hard, but it’s inefficient and error-prone, and should be avoided. If you’re going to use Imperial, then use Imperial. Equally, if you’re going to use Metric, then use Metric. Mixing the two is just plain confusing.

Yes, but especially in electronics we have to do it often. Older packages are often specified in inches and newer ones in metric. And then you must not mix up 0,63mm pin spacing (or is it 0,635?) with 0,65. But as the dimensions get smaller they are mostly metric. Although a 0,4mm BGA is difficult to route out.

Egyptians had 12 finger segments? Just because people in the past didn’t have computers and iPads doesn’t mean they were bloody chimps or anything; maybe they understood that 12 was a good number for fractions.

We all know that American units are stupid, but it’s not specifically because they aren’t decimal. decimal is actually pretty rubbish, we are just used to it. if we started using base 12 or base 16 or something else for everything, it would be metric that seemed silly. and of course the 10 times table might be a bit harder but the 12 times table would become much easier, and the 3, 4, 6 etc. it’s all just patterns. of course the other reason nobody likes imperial is the number of units you need for one quantity.

but really we are doing this the wrong way around kind of.

12 is also, conveniently, the total length of the sides of the Egyptian triangle (a device Ancient Egyptians used to construct right angles), whose 3-4-5 sides form the smallest Pythagorean triple.

Theoretically a good argument, but then where did we get base ten from? From bloody chimps counting on their fingers.

Not that I buy the finger joint theory on the origin of base twelve numbering.

whoops, my reply was to pff.

super, I knew there would be some link with the pyramids in there but I’m not much of a historian.

it didn’t occur to me when writing this that I had 10 fingers, but I suppose that was the point: base 10 for someone with 10 fingers is pretty intuitive, isn’t it? thanks to hours of schoolyard practice I can lock my proximal joints straight while bending the distals, but I just don’t have the independence to bend other fingers at the same time. from this I would say the base 12 finger system isn’t as useful, because it would be much more difficult to communicate with. I suppose instead of holding up finger segments like we hold up fingers, one could point with the other hand, which would at least offer an explanation as to why they stopped at 12 instead of rocking it all the way to 24 using both hands.

They could do much better than that, counting ones on the left hand and twelves on the right, for a total count of 144.

besides, people who promote the joint counting method as a possible explanation for base twelve propose that the thumb was being used to point to the appropriate joint. So why didn’t they develop hexadecimal, since you can point to each joint and the fingertips as well?

But I see it as important that the measurement system has the same base as the number system we are used to, so that converting to a multiple unit is just a carry to the next digit. If we were all used to hexadecimal numbers and calculated with them routinely, then a base-16 unit system would be no problem. But 12 inches to a foot, 3 feet to a yard and some odd number like 1760 yards to the mile (which suddenly contains the divisor 5) is what makes this system really difficult to handle. And the inch only gets divided down in binary: 1/4, 1/8,… So there is no chance to calculate with these units with a simple carry to the next digit; you have to treat them nearly as unrelated units, like Kelvin and Meter.

First, if this was such an important factor, why did these odd conversions evolve in the first place? Second, how often do you need to do such conversions by hand anymore? And finally, third, I submit that it is far easier to make mistakes doing conversions by hand by shifting decimal points, and such errors are harder to intuit. In other words, a conversion of inches to yards yields a number so different that an error of magnitude is obvious. In the case of cm to meters the digits are the same, all right, but a slip of a decimal place is not always easy to see. Now I know this is a weak argument on my part, but it underlines the fact that this ‘ease of conversion’ argument itself is largely specious, and has little real-world relevance.

You are wrong about Linux time. It is not decimal at all. Time is measured as seconds past the epoch, all right, but in BINARY, not decimal. The fact that you can print that number out in almost any programming language and the default will be base ten reflects the bias of the programming language, not Linux.
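A tiny illustration, using Python as a stand-in for “almost any programming language”: the timestamp is one integer, and only the formatting step chooses a base. (How the kernel stores it internally, typically as a binary `time_t` integer, is outside what this snippet shows.)

```python
import time

now = int(time.time())  # seconds since 1970-01-01 00:00:00 UTC, as one integer

# The same value rendered three ways; only the formatting picks a base.
print(now)        # decimal, the default for print()
print(hex(now))   # hexadecimal
print(bin(now))   # binary
```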

does Linux measure time in binary or is this simply an imposition of the hardware?

That’s a tricky one. Most CPUs these days force things, at least at the machine-language level, into binary. Some older machines (the IBM 650 comes to mind) were inherently decimal, so the time stored on them WOULD be in decimal. However, I don’t know of any decimal-based computers (or non-binary ones, for that matter) that have had any kind of Unix OS (or even a C compiler) ported to them. I’m hoping for some corrections on that. In this sense, you could say that the OS is imposing binary, just by not working on anything but binary-based computers.

Some architectures offered a BCD (“Binary Coded Decimal”) mode, where arithmetic behaves differently. The 6502 comes to mind.

Yeah, the 8080 and Z-80 had an instruction for that too, which I think was just a marketing feature – the Decimal Adjust Accumulator instruction was just one of many changes that would be necessary to make BCD arithmetic work. To quote this article, http://www.kishankc.com.np/2014/06/decimal-adjust-accumulator-contents-of.html, “Of the hundreds of 808x programs I wrote over the years, I think the DAA instruction is the one and only instruction I never had a reason to use.”

But you’re right – the 6502 actually supported BCD arithmetic, having a mode that caused the various add and subtract instructions to behave correctly in BCD. So you COULD implement a seconds-since-the-epoch time function directly in decimal on a 6502.
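To make the BCD idea concrete, here is a minimal Python model (an assumption-laden sketch, not an emulator) of packed-BCD addition with a per-nibble decimal adjust, and of a multi-byte seconds counter ticked the way a chain of decimal-mode additions would tick it. Only the digit arithmetic is modelled; the 6502’s flags and timing are ignored, and all function names are hypothetical.

```python
def to_bcd(n: int) -> int:
    """Pack a non-negative integer as packed BCD, one decimal digit per nibble."""
    result, shift = 0, 0
    while True:
        result |= (n % 10) << shift
        n //= 10
        shift += 4
        if n == 0:
            return result


def from_bcd(b: int) -> int:
    """Unpack a packed-BCD value back into an ordinary integer."""
    result, scale = 0, 1
    while b:
        result += (b & 0xF) * scale
        b >>= 4
        scale *= 10
    return result


def bcd_add_byte(a: int, b: int, carry_in: int = 0) -> tuple[int, int]:
    """Add two packed-BCD bytes plus a carry, adjusting each nibble so it stays
    a decimal digit. Returns (result_byte, carry_out). Assumes valid BCD input."""
    lo = (a & 0x0F) + (b & 0x0F) + carry_in
    carry = 1 if lo > 9 else 0
    lo -= 10 * carry
    hi = (a >> 4) + (b >> 4) + carry
    carry = 1 if hi > 9 else 0
    hi -= 10 * carry
    return (hi << 4) | lo, carry


def bcd_tick(counter: list[int]) -> None:
    """Add one to a little-endian multi-byte BCD counter in place,
    rippling the carry byte by byte like chained decimal-mode additions."""
    carry = 1
    for i in range(len(counter)):
        counter[i], carry = bcd_add_byte(counter[i], 0, carry)
        if not carry:
            break


# A 5-byte (10-digit) seconds counter, stored low byte first.
packed = to_bcd(1_483_228_799)
counter = [(packed >> (8 * i)) & 0xFF for i in range(5)]
bcd_tick(counter)
after = from_bcd(sum(byte << (8 * i) for i, byte in enumerate(counter)))
print(after)  # 1483228800
```

Each byte holds two decimal digits, so a 10-digit seconds-since-the-epoch count fits in five bytes, and rolling over from ...99 to ...00 happens without any binary-to-decimal conversion.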

I was thinking the exact opposite – I’m impressed by how accurately the *nix epoch was portrayed, “This function returns the current time in seconds since the beginning of some epoch.”

The easy (and wrong) way to think about the epoch is as a stopwatch that began at 1/1/1970. If you had such a (hypothetically perfect) stopwatch, its count wouldn’t match Epoch time. Why not?

Leap seconds. Your stopwatch would be off by the number of leap seconds inserted since then, because Linux time doesn’t count them at all.

That’s a very easy technical trap to step into; kudos to the author for dancing around it. Gonna steal that definition.

He didn’t just make that up – that IS how Linux time is defined.

And there’s no dancing around required.

You’re confusing elapsed time with time-of-day. If I started an atomic stopwatch on 1/1/1970, the number of seconds on that WOULD match the Linux time, because the stopwatch wouldn’t have leap seconds added to it, either. It’s only our time-of-day measurements (and the calendar dates that follow time-of-day) that have to deal with leap years and seconds.

It’s the functions in Linux (and all other operating systems that do real time) that convert seconds from the epoch to year-month-day hour:minute:second that take care of this. And yes, they do.

No, it wouldn’t match. The stopwatch would show the duration as being 35 seconds longer, the current UTC-TAI offset.

Unix time is not a continuous timescale. Inserting a leap second necessitates repeating a second (or smearing the extra second by slowing the clock), so there is a two-second span that is ambiguously identified by the same epoch second.
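The ambiguity is visible in the conversion arithmetic alone. Python’s `calendar.timegm()` maps a UTC date-time to Unix time by counting every day as 86,400 seconds, and it does not reject a seconds field of 60, so it can stand in for the POSIX seconds-since-the-epoch formula here. This sketch says nothing about what a given kernel’s clock actually does during a leap second (step, repeat, or smear), which is the point being argued.

```python
import calendar

# timegm() is pure arithmetic: days * 86400 + hours * 3600 + minutes * 60 + seconds.
before = calendar.timegm((2016, 12, 31, 23, 59, 59))
leap = calendar.timegm((2016, 12, 31, 23, 59, 60))   # the inserted leap second
midnight = calendar.timegm((2017, 1, 1, 0, 0, 0))

# 23:59:60 and the following 00:00:00 share one epoch value.
print(leap == midnight)   # True

# The UTC day 2016-12-31 lasted 86,401 SI seconds, but Unix time counts 86,400.
day_len = midnight - calendar.timegm((2016, 12, 31, 0, 0, 0))
print(day_len)            # 86400
```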

http://www.madore.org/~david/computers/unix-leap-seconds.html

What you’re saying is simply not true. Leap seconds are NOT added to the Linux time count. If they were then you would have discontinuities that would be difficult to calculate around. Please cite a reference.

Oops – I see you did cite a reference. And the reference says that it’s not clear. Wonderful. I’m not going to go looking for it, but I’m relatively sure that the Linux kernel does not adjust its seconds count when there is a declared leap second. Again, it’s up to the libtime functions to account for those in converting seconds-since-the-epoch to calendar time.

The problem, you see, is that any programs running during a leap second that depended on knowing elapsed time would get the WRONG ANSWER if leap seconds were added to the running count. So lacking evidence to the contrary, I’m going to stick by my assertion that Linux time is continuous.

Everyone needs to understand that the metric system was adopted, not because it was more elegant, not because it was more logical, not because it was easy to learn, but because it forced a broad standard in measurement in an era and a place where there was none. In France (and the rest of Europe) units varied based on what was being measured, varied by location even in the same country, and in most cases had no official body maintaining them. The British, their empire and by extension America HAD standardized weights and measures long before the Continental powers did and had no need to metrify, and would have seen no advantage from doing so.

The only valid argument for the use of the metric system is that it is used globally, and nothing else. It is not intuitive beyond all units having the same base, its quantities are generally awkwardly large or awkwardly small in relation to human scale, and any argument about ease of calculation is nonsense, given first the lack of common divisors beyond two and five, and second the fact that most calculation is done by machines, making arithmetic errors (at least) rarities.

There may be valid reasons involving the expense of maintaining the American system of weights and measures, but spare us the nerd esthetic ones, because either they no longer apply, or no one cares.

I give this comment my highest rating, 11 thumbs up!

Imperial thumbs. :-)

Yeah…. Maybe you should do some engineering calculations on dynamic systems in US and metric units and get back to me on just how convenient those US units are… Because they are about as elegant as a beached beluga whale in a tutu dancing the solo from Swan Lake.

I was probably working in those units before most of the commenters here were born; I worked in decimal inches and ounces, and I lived through the metric conversion. At no time during my working career was I forced to do any critical calculation without the help of some machine. I don’t think I can recall any time where the values I had to use were nice and round; in the end one set of numbers is the same as another, and the onus was on me to supply answers that could be used, that is, that could be applied by someone using the measuring tools at hand. It simply wasn’t that big a deal. While it might have been more convenient if there was only one system in use, it really wouldn’t matter much what system it was, and that’s the point: arguments over the assumed superiority of metric are not as meaningful as arguments supporting a single standard. But that standard could be anything; metric, in and of itself, is not intrinsically superior and has many flaws.

There is nothing inherently superior about the meter vs. the inch. In fact, an inch is now (and has been for some time) DEFINED as 2.54 cm, so it is exactly as well defined as the meter is. An inch is no more the length of some dead king’s finger segment, than the meter is the length of a platinum-iridium bar in a vault in Paris. And it is no more difficult to measure or calculate distances in microinches or megainches or picomiles than it is in microns and kilometers. Nothing in the imperial system mandates that you convert from inches to feet to yards to miles in your calculations when the magnitude goes beyond certain ranges; the choice is yours.

And if you think the Americans are doing it all wrong, consider the British, who can’t decide whether the word “billion” means 10^12 or 10^9.

10^12 is clearly the right answer to that. It’s again the muricunts who decided to throw half of the large-sized units away for no reason.

Yes.

Until you start doing dynamics calculations, and some supplier provides measurements in ounce-inches (or slug-yards, as someone tried to troll me with once) when you need foot-pounds to keep the units consistent when you start calculating moments of inertia and rotational accelerations. To name but one thing.
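For the specific case mentioned, the conversion itself is at least exact, since 1 ft-lb = 12 in × 16 oz = 192 oz-in. A minimal Python sketch (the function name and the 96 oz-in figure are made up for illustration; ounce and pound here mean ounce-force and pound-force):

```python
from fractions import Fraction

OZ_PER_LB = 16  # avoirdupois ounces per pound
IN_PER_FT = 12  # inches per foot


def oz_in_to_ft_lb(torque_oz_in: int) -> Fraction:
    """Convert a torque in ounce-inches to foot-pounds exactly (1 ft-lb = 192 oz-in)."""
    return Fraction(torque_oz_in, OZ_PER_LB * IN_PER_FT)


print(oz_in_to_ft_lb(96))         # 1/2
print(float(oz_in_to_ft_lb(96)))  # 0.5
```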

Inches, pounds, gallons and such are all fine and dandy if all you are doing is calculating basic things. But when you have to convert from inputs given in one combined unit to a different combined one in imperial it often just becomes obnoxious. On top of that in many calculations where the input in small units is just fine the results can be hard to handle in the resulting unit. Converting to more manageable units in metric is pretty much always easy to do. Doing so in imperial is a pain in the neck.

Yes, I’ve used (and had to use) both measurement systems. I VASTLY prefer metric because when it comes to my line of work metric just DOES make more sense. (And I’ve had many US co-workers who grew up with US-imperial agree with me.)

And I used SI as a chemist and that’s fine – in most STEM applications it’s the better choice – but the benefits do not necessarily map into all commercial and everyday domestic domains. As I would not care to be forced to use the apothecaries’ system in my lab, I see no value in forcing the use of SI on the general public for trade.

Really? So if someone gives you a measurement of the electric displacement field in statC/cm^2, it’s just going to roll off the top of your head that you need to multiply by 2.65 x 10^-7 to get to C/m^2? Because both of those are metric units. Or if someone gives a measurement in dyn-cm, you just know that you need to divide by 10^7 to get N-m?
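Both factors quoted above can be reproduced from the definitions, which also shows where the “2.65” comes from: besides the statcoulomb-to-coulomb and cm²-to-m² factors, the Gaussian D field (D = E + 4πP) carries a 4π that the SI D field (D = ε₀E + P) does not. A Python sketch (variable names are illustrative):

```python
import math

C_CGS = 299_792_458 * 100  # speed of light in cm/s (exact)

# 1 C = c/10 statC with c in cm/s, so 1 statC = 10 / c_cgs coulombs (~3.336e-10 C).
STATC_IN_C = 10 / C_CGS
CM2_IN_M2 = 1e-4

# For D, divide by 4*pi on top of the charge and area factors.
d_factor = STATC_IN_C / CM2_IN_M2 / (4 * math.pi)

# 1 dyn = 1e-5 N and 1 cm = 1e-2 m, so 1 dyn*cm = 1e-7 N*m.
dyn_cm_factor = 1e-5 * 1e-2

print(f"statC/cm^2 -> C/m^2: multiply by {d_factor:.3e}")  # 2.654e-07
print(f"dyn*cm -> N*m: multiply by {dyn_cm_factor:.0e}")    # 1e-07
```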

Metric has quirks too. Quirks show up more when you measure in conventional units… because you’ve got multiple conventions. Not because of the units, but because people are working with multiple conventions. Same in metric.

BTW, with regards to the size of units, you would really have to enlighten me on what units are awkwardly large in human scale. Because none seem that out of place to me.

A meter is about a good-length stride for the average person.

A kilogram is roughly the mass of a liter of (room-temperature) water. (That is the only unit that MIGHT be awkward, if it weren’t for the prefix in “kilogram”.)

A newton is the force needed to accelerate a mass of 1 kilogram at 1 m/s^2.

A joule is the energy transferred when a force of 1 newton acts over a distance of 1 meter.

Etc. etc. None of that is awkward or difficult to comprehend. The pascal (unit of pressure) is the only one I use daily that I find a tad annoying since that is rather on the small side. (One newton per square meter is a bit of a stupid definition, but matches the other unit definitions much better, making all other derived units much more understandable)
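The definitions listed above chain together as follows; the last line uses the standard atmosphere to show how small the pascal is on everyday scales:

```latex
\begin{aligned}
1\,\mathrm{N}   &= 1\,\mathrm{kg}\cdot 1\,\mathrm{m\,s^{-2}} = 1\,\mathrm{kg\,m\,s^{-2}} \\
1\,\mathrm{J}   &= 1\,\mathrm{N}\cdot 1\,\mathrm{m} = 1\,\mathrm{kg\,m^{2}\,s^{-2}} \\
1\,\mathrm{Pa}  &= \frac{1\,\mathrm{N}}{1\,\mathrm{m^{2}}} = 1\,\mathrm{kg\,m^{-1}\,s^{-2}} \\
1\,\mathrm{atm} &= 101\,325\,\mathrm{Pa}
\end{aligned}
```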

Speaking of the pascal, barometric pressure is not overly intuitive anymore.

Day-to-day use, say in the kitchen, grams and milliliters are not usefully intuitive, nor are they for trade in small quantities of fungible goods. That is why, even in countries with the longest traditions of being metric, some older units survive. It often surprises people that even in France, agricultural production in many domains is measured in traditional units or their metrified equivalents. Furthermore, the metric system’s naming scheme, while systematic, is repetitive. Humans find distinct words easier to remember, and the metric system does not lend itself to this. In fact, the reason that non-standard names evolved separately in the old system was to increase the contrast, and thus the memorability, of different units.

Wuh? Just grab the scales or jugs, and measure out the amount. There’s nothing unintuitive about metric in the kitchen.

they are unintuitive to him, so that means they must be unintuitive to everyone, right?

It is often said that the metric system would have looked very different if there had been more input from women, rather than just mathematicians, scientists, and bureaucrats.

@DV82XL:

Then it is good that they were not asked, because it is quite good as it is :-) What problem do you have with a gram? The magnitude is quite useful. E.g. you put just a few grams of sugar in your coffee; yes, we often call this a teaspoon full (or two), because we don’t normally weigh the sugar on a scale for this. But “a teaspoon full” is not a precise unit in itself.

You are making my argument: if the unit of mass in question is abandoned for a traditional measure of volume it is because the old one is more useful for the task at hand.

Hah! DV82XL – I enjoy your comments on HaD but “Day-to-day use, say in the kitchen, grams and milliliters are not usefully intuitive” is nothing more than your opinion dressed up as an absolute. Grams are supremely intuitive. So much so that I’ve converted most of my recipes from those god-awful pounds, ounces, tbs, tsp, cups, shovels and buckets to one unit. Grams. Just grams. That’s all that’s needed in a kitchen (unless you’re cooking on an industrial scale). Even my scale has been bodged so that it can only read in grams. For the rare occasions that I use volumetric measures (yes, I measure liquids in grams as well), ml works right across the board.

Leonard, it is simple: speaking as a chemist, there is simply no activity in domestic cooking that requires any measurement to three significant figures. Furthermore, while mass alone may be appropriate in a laboratory setting, volume for liquid measures in the kitchen is simply more convenient.

Your remarks, and those of many others in this thread, are basically suggesting that users should put up with weights and measures that don’t quite fit their needs, and are (even if only a little) difficult to use, because they meet your desire for symmetry, because in your view it would just be neater if metric were used universally. Yet the old systems persist to the point where in some jurisdictions the authorities have defined units like “metric pounds” (500 gm) and “metric gallons” (4 liters) and so on, and there are very many examples of this, even in France, the home of metric. In other words, all of you who want to dismiss any criticism of metric and disparage those who would continue to use traditional measures have to come up with a better explanation than that people are being reactionary. After all, one of the claims you consistently make about metric is that it is so easy to use and understand, so why isn’t it so popular that the issue would never come up?

Let me try to explain my position re: domestic cooking. Ingredients like psyllium husk powder need to be measured in 0.1 g increments, while major ingredients will reach into the hundreds of g, or possibly the thousands. The point I’m trying to make is that the gram very conveniently and practically covers that entire range. As for mass being appropriate in a domestic kitchen: sure, it’s rare, but unconventional does not = inappropriate. It is very convenient. I’m using one measurement device for all my cooking (actually two: a scale reading in 1 g increments up to 5000 g and one reading in 0.01 g increments up to 200 g). It allows me to measure ingredients by removing them from a container (i.e. subtractively) or by placing them directly in the receptacle (pot or whatever), and it means that results are very reproducible if I choose to observe exact quantities. It’s a mindset change that takes a bit of getting used to, but I would not go back to using volumetric measurements in my kitchen, simply because using one unit is (in my opinion) heaps simpler. As a chemist, perhaps you could try the experiment of measuring (say) 850 ml of water, then measuring 850 g of water, using the same level of accuracy (say 25 g or ml). I would be very interested in hearing what your findings are.

“suggesting that users should put up with weights and measures that don’t quite fit their needs, and are (even if only a little) difficult to use”. Then I’ve not worded my comments appropriately. I have no difficulty with any country using Imperial measures. Familiarity is a huge advantage and there always needs to be good reason to go through change. Personally, after living through two bouts of Metrication as a consumer, an owner-builder and an Engineer, my opinion is now very firmly in the camp that says that Metric is the simpler of the two systems of measurement. I would say by far better. There are caveats – using all available Metric units (mm, cm, dm, m, etc, etc) is crazy stuff and I think that I would find it even more confusing than Imperial. Sticking to SI units is key to successful use of Metric, at least for day-to-day measurements. Based on my experience of needing to flip Imperial – Metric – Imperial – Metric, my conclusion (arrived at logically I trust) is that Metric is a simpler system for day-to-day and engineering use.

My point of departure (and I apologise if this is not clear in my comments) is not to disparage the Imperial system and its users; it’s a response to the perceived attitude of superiority adopted by the users of that system, particularly when it’s clear that the comments are not necessarily from someone with a firm grasp of how Metric gets used on a day-to-day basis across the world. Just to be clear, that’s a general statement and is not aimed at you.

While I don’t wish to appear to be dismissive of criticism of Metric, all I can say is that I’ve heard many of the arguments over and over from people in the two countries that I’ve lived through Metrication in, only for those arguments to disappear as the practicality of the system becomes clear.

Re: “isn’t it (Metric) so popular that the issue (preferred use of Imperial) would never come up?” I work as a project manager, and resistance to change is powerful and close to universal amongst us humans. There may at times be good, logical reasons for resisting change, but mostly it’s about rationalising positions not arrived at through logical thought. I believe that people being reactionary is a very sound explanation.

Beyond the fact that this resistance-to-change argument is sophistry, the fact remains that traditional measurements developed naturally, indeed evolved to serve those who used them. Despite the fact that base ten served counting across Europe, and despite the fact there were several systems of weights and measures in use from the end of the Roman Empire to the introduction of the metric system, the use of submultiples of ten in any of these systems is notable by its absence. It took a group of bureaucrats armed with legislative fiat to force this subdivision on users. If decimal units are so damned superior, why didn’t they emerge on their own, instead of systems based on 12 and 16, or those based on doubling the previous unit? The only reasonable answer is utility. The point cannot be made too strongly: measurements are a tool; they exist for the convenience of the users, not to meet some aesthetic.

Traditional measurements, either in their original form or metrified, persist because they are, in their domains, inherently superior. This thought may not appeal to some, but no other explanation really holds water. In the context of this thread, time during a given day is likely to be the measurement most considered, most used, by most people, most often in the course of their lives. The system for measuring time that evolved, and that emerged as the survivor among many (including base 10), was one with many submultiples, and it was the one universally adopted long before international agreements on such matters. Sweeping statements suggesting that it is a desire to resist change in and of itself that stops the adoption of decimal time are simply not tenable in this instance. The current system is just plain better, and that is why no one can mount a compelling argument that it should change.

Definition of intuition:

1: quick and ready insight

2a: immediate apprehension or cognition

2b: knowledge or conviction gained by intuition

2c: the power or faculty of attaining to direct knowledge or cognition without evident rational thought and inference

Don’t confuse intuition with familiarity. Familiarity is learned with repeated use. One has to become familiar with a standard before this intuition you speak of takes place.

Mentioning the kitchen, one needs to have the tools to measure a cup or a fraction thereof as dry or wet measure. When it comes to dry measure there is the level or heaping measure; in the event there’s such silliness in the metric system, I’m unaware of it. In the kitchen, will the imprecision of intuition ruin a meal?

1 atmosphere of pressure is intuitive; 101.3 kPa is not. Yes, one can get used to anything, but that’s not the point here. What I am saying is that the claim that SI, and metric in general, is superior BECAUSE it is more intuitive is rubbish. It is rooted in arbitrary magnitudes whose supposedly elegant interrelationships clearly break down in scale in many of the derived units. In the case of SI in particular, naming conventions actually obscure meaning; cycles-per-second clearly describes frequency in a way that hertz does not, for example. It is a system designed by committee, and it shows.

As for the kitchen, you should look at recipes from cookbooks that predate Fannie Farmer. For centuries prior to standardized measuring spoons and cups, level measurements were unknown, and people cooked well without them. Is it easier for someone who did not learn kitchen skills growing up working in one to use standard measurements? Sure. Are most recipes so sensitive that they need stoichiometric tolerances in making them up? No. Modern digital scales are relatively inexpensive and very accurate, as well as being easy to use. If you were forced to use a beam balance for all measurements in a kitchen, or deal with the inaccuracies of a spring balance when cooking, I am sure you would see the utility of volume measurements in convenience, accuracy and time by comparison.

As I have asserted several times in this thread there are good arguments for a single set of weights and measures, and SI has the momentum in this regard – but do not argue that it is in any way inherently superior in any aspect, because it is demonstrably not.

“it’s quantities are generally awkwardly large or awkwardly small in relation to human scale”. No they’re not. I’ve heard this ‘human scale’ argument many times. I’ve lived in two countries that have gone through metrication and there is no validity at all to quantities of either system being more or less ‘human scale’. Generally ‘human scale’ seems to be shorthand for ‘what I’m accustomed to’.

Then you know little of the history of measures. The very names of many traditional units clearly reference parts of the human body; a fluid ounce was basically a mouthful, and so on. In historical metrology at least, “Man is the measure of all things.”

Then I’ve misunderstood the term ‘human scale”. I’ve taken it to mean units that are convenient to use on the scale that humans function on, rather than units based on portions of the human body. However, I would need to be convinced that it has any validity today as a point in favour of Imperial measurements.

Yes, and the “human scale” is quite a broad one: from 0,1mm thickness of a hair to km distances and from around a second (heartbeat) to nearly 100yrs of a life.

The first paragraph is probably right. But it IS intuitive, and in my opinion necessary (or at least a big advantage), that the units have the same base. I go even further: it is important that they have the same base as our normally used number system, decimal. So you can convert with just a digit shift.

And what is awkwardly large on a meter? It is roughly the size of a step or around half my height. And, by chance, it is of similar length to a yard. But that is of course just the question to what you got used.

Still, none of you arguing the natural intuitiveness of metric can answer the question of why so few traditional units of weights and measures evolved using base 10. Are we to assume that the lack of these in the historical record is evidence of our antecedents’ stupidity? Or that, even though they counted by tens, they recognized that ten was not the best base for measuring? Keep in mind that they, far more than us, needed to depend on mental calculation. Apparently they believed that highly decomposable numbers were more useful than ten for this purpose.
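The “highly decomposable” point is easy to quantify. A short Python sketch listing the divisors of a few candidate bases (plain trial division, which is fine for numbers this small):

```python
def divisors(n: int) -> list[int]:
    """All positive divisors of n, smallest first (simple trial division)."""
    return [d for d in range(1, n + 1) if n % d == 0]


for base in (10, 12, 16, 60):
    ds = divisors(base)
    print(f"{base:>2}: {len(ds)} divisors -> {ds}")
```

Ten splits evenly only into halves and fifths, twelve into halves, thirds, quarters and sixths, and sixty (still used for minutes and seconds) has twelve divisors in all.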

Fritz Lang’s Metropolis (1927) had a 10-hour day, with a 10-hour clock dial. It would be a lovely wall clock, actually.
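For a sense of what such a clock would read, here is a Python sketch that assumes the 10 × 100 × 100 division used by French Revolutionary decimal time (100,000 decimal seconds per day); the function name is hypothetical, and partial decimal seconds are truncated.

```python
# Assumed scheme: 10 hours/day, 100 minutes/hour, 100 seconds/minute.
STD_SECONDS_PER_DAY = 24 * 60 * 60    # 86,400
DEC_SECONDS_PER_DAY = 10 * 100 * 100  # 100,000


def to_decimal_time(h: int, m: int, s: int) -> tuple[int, int, int]:
    """Convert a standard clock reading to decimal (hours, minutes, seconds)."""
    std = h * 3600 + m * 60 + s
    dec = std * DEC_SECONDS_PER_DAY // STD_SECONDS_PER_DAY  # integer rescale, truncating
    return dec // 10_000, (dec // 100) % 100, dec % 100


print(to_decimal_time(12, 0, 0))  # (5, 0, 0): noon is 5 o'clock
print(to_decimal_time(18, 0, 0))  # (7, 50, 0)

# One decimal second lasts 86400 / 100000 standard seconds.
print(STD_SECONDS_PER_DAY / DEC_SECONDS_PER_DAY)  # 0.864
```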

I’d love to live in a world with decimal time, no timezones, no frickin daylight savings and a minimum of special cases.

it would make everything nice and easy, just cutting the timezones and running a universal time would simplify matters immensely

Without timezones you’d have to know the latitude and longitude of every place to figure out how far ahead or behind it was.

No, by cutting timezones there would be no ahead or behind.

The time the sun rose would differ from place to place, so your morning might be at 0800 and mine at 2400 (just an example using a 24-hour clock; any system could be used).

To know when morning was, one would indeed need to know roughly where that place is located, which means looking it up, which we already need to do every time we encounter a place whose timezone we don’t know.

One would basically trade a clock fixed to your local daylight for one fixed to a global, and yes, arbitrary, reference point. It would probably make international scheduling over short time spans easier, and I don’t see why it would have any inherent downsides for people locally, besides the obvious transition period of a couple of generations.

We already have it: UTC, Zulu, etc.

I have used it for decades and flaunt it as a way of thumbing my nose at dicked-with time, or as we call it in Indiana, Daniels’ Stupid Time. Our governor is now president of Purdue, and the next one became VP.

The problem with decimal time is when you extend it to days and months.

If we have just 10 months in a year, then we will need to fit rockets to the Moon.

The rockets would be used to slow the Moon’s orbit around the Earth from 12 times a year to 10 times a year.

But if we slow the Moon down too much, it may drop out of orbit into the Earth.

If we have 100 days in a month and 10 months, then we will have 1,000 days in a year,

so we will need to move the Earth into a bigger orbit around the Sun.

So you can say goodbye to summer. (And all life on Earth)

You would need to speed the Moon up to increase its orbital period, not slow it down.

Oh, those darn, pesky orbital dynamics!

You do realise that a year currently has (on average) 365.2425 days? So it’s as though it currently divides up nice and neatly.
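The 365.2425 figure is the average produced by the Gregorian leap-year rule: every fourth year is a leap year, except century years that are not divisible by 400. A minimal Python sketch, counting leap years over one 400-year cycle, reproduces it exactly:

```python
from fractions import Fraction


def is_gregorian_leap(year: int) -> bool:
    """Gregorian rule: divisible by 4, except centuries not divisible by 400."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


def mean_gregorian_year() -> Fraction:
    """Average calendar-year length in days over one full 400-year cycle."""
    leap_years = sum(is_gregorian_leap(y) for y in range(1, 401))
    total_days = 400 * 365 + leap_years
    return Fraction(total_days, 400)


mean = mean_gregorian_year()
print(mean, float(mean))
```

Using `Fraction` keeps the average exact (146097/400 days) rather than a rounded float. The calendar value is itself a deliberate approximation of the tropical year, which is about 365.2422 days.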

Of course, the length of the day has to be the constant base (and so does the length of the year). But I don’t understand why we have “too many” months with 31 days and one with 28 days.

Then you don’t understand politics. Here’s a clue: July and August, the two 31-day months that are adjacent, are named after rulers of Rome, and February is named after a purification ritual.

A 1 meter pendulum oscillates with a period of about 2 s (roughly one second per swing).

Convenient, but that 2·π·√(1 m/(9.8 m/s²)) ≈ 2 s seems almost certainly a coincidence rather than some emergent property.
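The arithmetic is easy to check. This is a sketch using the small-angle formula for an ideal simple pendulum, T = 2π√(L/g), with standard gravity g = 9.80665 m/s² taken as an assumption (local gravity varies slightly with latitude and altitude):

```python
import math

# Standard gravity in m/s^2; real local values differ by a few tenths of a percent.
G = 9.80665


def pendulum_period(length_m: float, g: float = G) -> float:
    """Small-angle period of an ideal simple pendulum: T = 2*pi*sqrt(L/g)."""
    return 2 * math.pi * math.sqrt(length_m / g)


def seconds_pendulum_length(g: float = G) -> float:
    """Length whose full period is exactly 2 s, from L = g * (T / (2*pi))**2."""
    return g * (2.0 / (2 * math.pi)) ** 2


T = pendulum_period(1.0)
print(f"1 m pendulum: period {T:.4f} s, frequency {1 / T:.4f} Hz")
print(f"length for a 2 s period: {seconds_pendulum_length():.4f} m")
```

A 1 m pendulum comes out at roughly 2.006 s per full period (about 0.5 Hz), and a pendulum with a period of exactly 2 s is about 0.994 m long.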

Is there a Babylonian character for 0 or 60?

The Babylonian symbols for one and sixty are the same. As they had no symbol for 0, it was the position of the figure relative to the rest of the text that determined its value.

Ah, no. If you count 60 seconds starting at one, you would count from one to sixty. On the NEXT second it would roll back to zero, so one and sixty-one must be the same, or the whole system wouldn’t work.

Nevertheless, in surviving texts the symbol used for 60 is the same as the symbol they used for one, only one column to the left. ‘61’ was the same character repeated close together. Understand that this has nothing to do with the measurement of time per se, but rather with the convention for written numbers.

“the symbol used for 60 is the same as the symbol they used for one, only one column to the left.”

That’s like saying we use the same symbol for one and ten.

No, we have the use of a zero as a placeholder, they did not. Instead they relied on the position of the figure and context.
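To illustrate the point about position and context, here is a deliberately simplified Python model: it writes a number as base-60 digits and then drops the empty places, as a script without a zero sign would. It ignores how scribes actually spaced their signs; `written_without_zero` is a hypothetical helper, not a reconstruction of real cuneiform.

```python
def to_base60(n: int) -> list[int]:
    """Split a non-negative integer into base-60 digits, most significant first."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return [0]
    digits = []
    while n:
        n, remainder = divmod(n, 60)
        digits.append(remainder)
    return digits[::-1]


def written_without_zero(n: int) -> list[int]:
    """Simplified model of a script with no zero sign: empty places are not written."""
    return [d for d in to_base60(n) if d != 0]


for value in (1, 60, 61, 3600, 3601):
    print(value, to_base60(value), written_without_zero(value))
```

In this model 1, 60 and 3600 all collapse to a single ‘1’, and 61 and 3601 both collapse to ‘1 1’, so only spacing and context could tell them apart.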

Can we just move to space time and be done with it? We’ll have to do it at some point when our neighbours get here…

Star Date anyone?

Time should be defined in light-seconds of expansion from the center of the universe.

Are you even paying attention to what you’re saying? Time should be defined in light-seconds of expansion from…? Are you aware that a light-second is the distance light travels in a second? This would make your definition entirely circular.

It was a joke on Space Time, lighten up.

Cool article. I didn’t know about the use of decimal time in ancient times, nor about the origin of the 60 divisions.

As for the beats clock, it starts the day at 0:00 like conventional time, and this is rather contradictory because the ‘Day’ then begins at ‘Midnight’. If 0:00 (12:00 am) is the middle of the night, then the night should have begun at 18:00 (6:00 pm), which makes more sense, since you expect the Day to have daylight and the Night to be dark.

For that reason, in my DC-10 clock I am making the day (0000) begin at 06:00 (6:00 am) conventional time.
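The shift described above is just an offset applied before scaling. We don't know exactly how the DC-10 clock counts, so this Python sketch assumes a hypothetical four-digit display in which the day is split into 10,000 equal units and 0000 falls at 06:00 conventional time; `dc10_reading` and `start_hour` are illustrative names only.

```python
def dc10_reading(hour: int, minute: int, second: int = 0, start_hour: int = 6) -> str:
    """Hypothetical 4-digit decimal clock whose day (0000) starts at start_hour.

    The day is split into 10,000 equal units of 8.64 conventional seconds each;
    the reading is truncated, not rounded.
    """
    conventional_seconds = hour * 3600 + minute * 60 + second
    seconds_since_start = (conventional_seconds - start_hour * 3600) % 86400
    return f"{seconds_since_start * 10000 // 86400:04d}"


for hh in (6, 12, 18, 0):
    print(f"{hh:02d}:00 -> {dc10_reading(hh, 0)}")
```

Under these assumptions noon reads 2500, 18:00 reads 5000 and midnight reads 7500; setting `start_hour` to 0 gives a clock that, like the beats clock, starts its day at midnight.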

I hate decimal time. The boss instituted it at work, and when I asked him when I could have lunch, he said at 12!

Well, it’s 17:10 here (23:16 GMT in common base-10 time). A couple of days ago I ‘made’ a hexadecimal clock. I was playing about with an Arduino clone, a DS3231 clock module and a 7-segment display module, and thought it would be amusing to display the time in hexadecimal.
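Judging by the example time (23:16 shown as 17:10), each field is simply written in base 16. A small Python sketch of that formatting, assuming hours and minutes are converted separately; the function names are hypothetical, and the real clock presumably ran as Arduino C++ rather than Python:

```python
def to_hex_clock(hour: int, minute: int) -> str:
    """Show an ordinary 24-hour time with each field written in base 16."""
    if not (0 <= hour < 24 and 0 <= minute < 60):
        raise ValueError("expected a 24-hour time")
    return f"{hour:02X}:{minute:02X}"


# On a 7-segment display a capital B looks like 8 and a capital D like 0,
# so lowercase b and d are the usual stand-ins.
SEVEN_SEGMENT_FRIENDLY = str.maketrans({"B": "b", "D": "d"})


def seven_segment_text(text: str) -> str:
    """Swap in glyphs that stay distinguishable on a 7-segment display."""
    return text.translate(SEVEN_SEGMENT_FRIENDLY)


print(to_hex_clock(23, 16))  # the example time from the comment
print(seven_segment_text(to_hex_clock(11, 13)))
```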

As I remember, IBM used a single decimal-hour format in the time clocks that punched directly onto 80-column cards. I never actually used this system, but I recall it being used on the floor of one facility where I worked briefly, to charge labor to customers on a per-job basis.
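Decimal hours turn labor charging into plain multiplication, because minutes become a fraction of an hour. The resolution those IBM cards actually used isn't stated here, so this Python sketch (with a hypothetical `decimal_hours` helper) makes the number of decimal places a parameter:

```python
def decimal_hours(hours: int, minutes: int, places: int = 2) -> float:
    """Express a clock duration as hours plus a decimal fraction of an hour."""
    if not 0 <= minutes < 60:
        raise ValueError("minutes must be in 0..59")
    return round(hours + minutes / 60, places)


print(decimal_hours(8, 15))            # a quarter hour becomes .25
print(decimal_hours(7, 20, places=1))  # twenty minutes, kept to tenths of an hour
```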

A few years back, I helped my son make a scale with milligram precision as part of a science project (http://www.hive76.org/tag/milligram-scale). That exercise drove home the fact that it’s really easy to make rather precise weight measurements by repeatedly halving the distance between a fulcrum and the suspension point for a reference weight. In fact, it can be done with nothing but an arbitrary length of string as the reference for distance.

So something like that may explain why weights, lengths and liquid volumes in avoirdupois tend to be in multiples of two, four etc. (or fractions like 1/4th, 1/16th etc.).
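The halving trick follows from the lever balance m1·d1 = m2·d2: hanging a reference mass at half the unknown's distance from the fulcrum balances half its mass, and each further halving divides by two again. A Python sketch, assuming an idealized weightless beam and a hypothetical 1 g reference mass:

```python
from fractions import Fraction


def equivalent_mass(reference_mass: Fraction, halvings: int) -> Fraction:
    """Mass balanced by reference_mass hung at 1/2**halvings of the unknown's lever arm.

    Torque balance on an idealized weightless beam:
        m_unknown * d == reference_mass * (d / 2**halvings)
    so m_unknown == reference_mass / 2**halvings.
    """
    if halvings < 0:
        raise ValueError("halvings must be non-negative")
    return reference_mass / 2**halvings


# Hypothetical 1 g reference mass, halved up to ten times.
for k in range(11):
    grams = equivalent_mass(Fraction(1), k)
    print(f"{k:2d} halvings: {float(grams) * 1000:9.3f} mg")
```

Ten halvings reach 1/1024 g, just under a milligram, which is consistent with the milligram precision of the scale mentioned above.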

For measuring time, let’s think in terms of sundials and subdivisions of circles. The easiest subdivision of a circle is in 6ths. Take an arbitrary length to define the radius of the dial, and you can mark the perimeter off using 6 chords whose length matches the radius. Subdivide those chords by 2 and you have a circle in 12ths; again, no tools needed, you are just exploiting geometric properties. When we shifted to pendulum clocks, the movement was still circular, so maybe the old paradigm still felt cozy, and it has probably continued to the present for complex historical reasons.
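The chord construction works because a chord spanning a central angle θ in a circle of radius r has length 2r·sin(θ/2). For a sixth of the circle, θ = 60°, so the chord equals the radius:

```latex
c(\theta) = 2r\sin\frac{\theta}{2}, \qquad
c(60^\circ) = 2r\sin 30^\circ = 2r \cdot \tfrac{1}{2} = r, \qquad
c(30^\circ) = 2r\sin 15^\circ \approx 0.518\,r
```

The twelfth-points lie where the perpendicular bisector of each of those chords meets the circle, which is itself a compass-and-straightedge construction.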

As an aside, metric’s sole advantage is that we are taught to calculate base 10. I tend to use it more lately since I do a lot of prototypes, and 3D printers tend to assume that STL files are in mm. Conversions from inches to mm at various points in the toolchain can actually result in annoying sub-millimeter artifacts, so I use mm throughout. When I am doing construction around the house, mental arithmetic in 1/4ths, 1/8ths, etc. is actually often easier.

That’s why 12 inches to a foot is convenient, for the same reason DV82XL stated above for 60: 12 is divisible by 2, 3, and 4 (and 6), making it easy to subdivide.

I believe I’ve heard that the Babylonians used a 60-based number system due to the different number systems used by the cultures that influenced their civilization. 60 made it easier to deal with the different systems because it was a multiple of the various bases.

“The easiest subdivision of a circle is in 6ths.” I would say that it’s at least as simple to divide a circle into 4, 8 or 16 equal segments, possibly more accurately. I suspect that the tools (i.e., something to measure and mark with) would be the same?

“As an aside, metric’s sole advantage is that we are taught to calculate base 10”. Having worked with Imperial, then been Metricated, then shifted countries back to Imperial and finally (phew) to Metric, I can say the advantages are more than just base 10. That certainly is a major advantage, but another is that the most commonly used units (distance, mass and volume) scale very simply across the ranges most commonly used; some may even say that it’s ‘human scaled’… In places where metric has been adopted with success, you will find users (builders, for instance) speaking fluently in mm and m (often without mentioning the unit, because it’s obvious from the context). The use of vulgar fractions generally lingers on only in the soon-to-die generation, as things like 1/4ths, 1/8ths, etc. are profoundly unnecessary and complicate what would otherwise be dead simple. Metric conventions seem to be problematic only for those who are changing their mindset.

Another advantage is the related nature of many units, but that’s of more interest to science and engineering and doesn’t come into play much in day-to-day use.

Slightly on topic: I posted elsewhere that my kitchen is based solely on grams, because these also have the advantage of scaling across the range used in a kitchen, i.e., from 0.1 g up to about 5000 g.

Seriously, after having been in the Imperial space twice, I cringe at the idea of having to go back to it. A fate worse than death I tell ya!

In the forty years I was in industry, I worked with SI, Imperial and American units (keep in mind the last two had different values for volumes), and with fractional and decimal inch-ounce systems, often mixed in the same problem. That was just the way it was in aerospace manufacturing and maintenance during my career. Was it an annoyance? Yes. Was it the major irritation I had to deal with in my job? Not by a long shot. There were times I had to consider the fluctuating values of several different currencies across large projects, and I had to deal with people and have technical conversations with them in English even though English was not the first language of either of us. You cope. Having to do an extra step or two in a calculation isn’t that big a deal.

Perhaps it was no “major irritation”, but these things have already led to plane and rocket crashes, e.g. a plane ordered fuel in kg and got the same number of pounds.

At no point have I ever argued that a single system would not be ideal, and better. This is so true it doesn’t warrant discussion. And indeed, given its penetration, that universal system should be SI. But that is the only reason, because the fact remains that the arguments that SI is intrinsically superior because it is base 10, or because of the interrelation of its units, are simply spurious. It is not, and that is largely why timekeeping has been such a holdout: if decimalized measurements were so intrinsically superior in and of themselves, time would have followed weights and measures.

I might add that during my tenure in aviation, accidents and incidents whose proximate cause was related to these sorts of measurement errors were very rare compared to those with other human-factor causes, like general inattention or corner-cutting. Yes, the ones involving confusion between measurement systems made the news, and some may have been avoidable, but they do not represent a major cause in and of themselves.

“soon to die”? That was a little odd. Pretty far in the future for me, I think, barring some random mishap.

I find that I actually spend a lot of time doing mental arithmetic (unit conversions being an occasional reason, ha!), and for some problems fractions are the right tool; computers, too, like working in halves, quarters, eighths, etc. In fact, I think it would be awesome if the US standards were based on powers of 2, but units like miles, acres, horsepower, etc. muck that fantasy up. And, as an aside, the term Imperial measure has been tossed around here. The Imperial system is a cousin of the standard measures used in the US, but it’s not quite the same.

The physical concordance of SI units and the overall consistency of the SI system’s design are, admittedly, advantages. No one in this thread appeared to claim otherwise (there were a lot of comments and I did not read them all). What I did notice was some justified defensiveness in response to the weird “SI bigotry” and condescension in several posts, as though the US were some Luddite nation that clings fiercely to the Standard system out of stupidity, backwardness, or age-related stubbornness. Our continued use of US standard measures is almost certainly just due to the fact that we have a system (and tons of infrastructure) that works fine in practice, and that the costs of suddenly switching would outweigh the benefits. It’s a big country, and there are a lot of scales, yardsticks, road signs, teaspoons, etc. out there. And technologically sophisticated folks in the SI world actually use stuff based on US measures daily, perhaps without realizing it. For example, the dimensions of petri dishes, microscope slides, masks used in nanofabrication, standard silicon wafer sizes, lots of IT hardware, etc., etc., etc. are rooted in US standard measures. Our ostensible backwardness with regard to measurement standards doesn’t seem to hold us back all that much, really.

All that said, SI units are definitely easing their way into everyday use in the US. Most product packaging, for example, is dual-labelled in SI and US Standard units, and the nutritional information on food labels is almost exclusively SI. So the market appears to agree that SI offers advantages, but those advantages are nowhere near as profound as some of the more arousable SI boosters here are suggesting, else the shift would have been far less leisurely.

Our standards for paper sheets, on the other hand… yikes, now that is messed up.

We suffer all of this nonsense purely because no one has the balls to suggest simply slowing the Earth’s rotation by 15%!

As long as I still get a chuckle when mentioning speed in “furlongs per fortnight” nothing else really matters.