How much effort do you put into conserving energy throughout your daily routine? Diligence in keeping lights and appliances turned off is a great step, but those same appliances likely still draw power when not in use. Seeing the potential to reduce energy wasted by TVs in standby mode, the [Electrical Energy Management Lab] team out of the University of Bristol have designed a television that uses no power in standby mode.

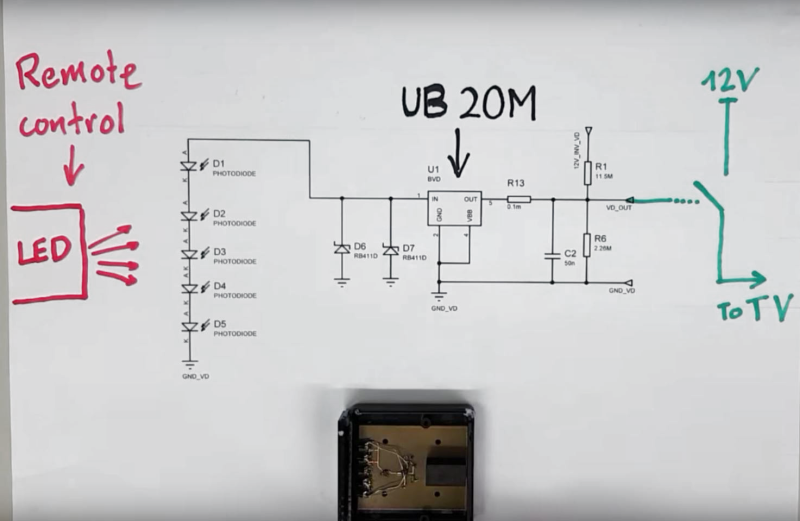

The feat is accomplished through the use of a chip designed to activate at currents as low as 20 picoamps. The chip and a series of five photodiodes are mounted in a receiver which attaches to the TV. The receiver picks up the infrared pulse from the remote, which induces a slight current in the photodiodes and provides enough power for the chip to flip the switch that turns on the TV. A filter prevents ambient light from activating the receiver, and while the display appears to take a few seconds longer to turn on than an unmodified TV, that seems a fair trade-off if you aren’t turning it on and off every few minutes.

While some might shy away from an external receiver, the small circuit could be handily integrated into future TVs. In an energy conscious world, modifications like these can quickly add up.

We featured a similar modification using a light-sensitive diode a few years ago that aimed to reduce the power consumption of a security system. Just be wary of burglars wielding flashlights.

[Thanks for the submission, Bernard!]

It seems like a less extraordinary chip and a supercap would do, although you would have to press the mechanical power button on the device if you didn’t use it for more than 3-4 weeks.

What is this mechanical power button of which you speak? Haven’t seen one on a lot of equipment in quite some time.

Something doesn’t quite add up here. In a properly designed, modern low power TV, the standby power consumption is probably dominated by the power required to minimally power the remote decoding logic, and probably keep some SRAM alive to reduce power-up time.

Okay, so scratch our concern for instant power-on. Okay, so they have some fancy receiver circuit. Let’s get to the part that’s missing: the switching element. The problem is that’s where most of the power is going to be. The problem is they entirely left out that part of the circuit, and that’s probably the most critical detail. The receiver circuit which they’re advertising, is, bluntly, unimportant when it comes to solving this problem.

A super low leakage element might be a relay. Okay, now you have the problem of needing another switching power supply with some standby current consumption to turn it on, so you kind of lost. What about a triac? You need a few mA to trigger it, and there’s no way you can harvest enough energy from photodiodes and an IR remote to do that, so you need a power supply with standby power consumption and you lost again. Or you can control a standby pin, but you’re still looking at 100s of uA – 1mA standby for most of those controller ICs.

Then there’s the problem where I’m not entirely sure if this IC even does any demodulation. If it doesn’t, this can be easily triggered by light noise. Each time you accidentally turn on your TV, you’d be wasting energy. As determined earlier, standby power consumption is going to dominate power consumption, not the receiver IC.

Basically, I suspect you could do better, with no considerable increase in standby power, by buying an off-the-shelf 38 kHz IR receiver IC (~0.5 mA, which can be powered using a zener off whatever the SMPS IC is using as a DC source), a latch/filter of some sort (or even a low-power 8-bit MCU), and using that to switch the SMPS IC. Picoamperes are so much smaller than the off current of a lot of the control ICs that you may as well spend a bit more on at least demodulating the signal properly and improving noise immunity. And you don’t even need a custom silicon run!

If there are any major improvements to be had in such systems, it’s going to be on the SMPS side of things. Anyway, in a real TV, this sort of thing is cut not because the engineers at television manufacturing companies can’t figure it out, but because it’s just not worth the BOM cost for one reason or another. And most people don’t like TVs that take a whole boot cycle to turn on.

>>A super low leakage element might be a relay.

click on their source link, it’s a mosfet.

>> Then there’s the problem where I’m not entirely sure if this IC even does any demodulation.

Well this article could have had a link to the IC, but if you click on their youtube link in the descriptions, go to their website, and then click on the datasheet, you’ll see it’s just a voltage comparator. So no demodulation.

Basically: press remote button -> power saving circuit triggers -> switches on existing demodulation circuit -> release remote button.

>> I suspect you could do better [….] using zener off

I doubt that, zeners are not known for being power efficient. But post a schematic and even better, wire up a circuit!

If you can do better than ~ 0.001 nA before triggering, like their circuit achieves, then that would be amazing.

Sorry for the slight rebuttal here, but it seems like you didn’t read further than this article and are just spewing comments (criticisms?) without having read the linked source.

All valid comments from you, however:

> Sorry for the slight rebuttal here, but it seems like you didn’t read further than this article and are just spewing comments (criticisms?) without having read the linked source.

There is no need for you to be sorry, you’re doing the right thing. Sadly a great many people do what you describe: “Here are 10 reasons why this won’t work, I’d do better with X, Y, Z”, but of course they post no proof of X, Y, Z…

thanks!

Indeed, this is groundbreaking. The TV demo is interesting, but the radio one is even better. They demonstrate having a zero-power device wake up when a radio signal is received. Could be extremely useful for IoT applications that desire very, very long battery life.

Could be great for things like cupboard lights that only come on when the door is opened, or sensors that only need to activate when something interesting happens.

It’s not that it never occurred to TV companies to do this. It’s that they don’t care – and they want the TV to be semi-awake all the time, so it can report usage stats (i.e. spy on your habits), download updates and program schedules, etc.

And where does the 12V come from?

Out of thin air?

And as there’s no filtering/detection at all, the sun shining onto the IR diodes will also wake the TV.

That’s a good question. (about the 12V) Could someone elaborate?

My solution would be a self holding mechanical switch like some coffee machines use nowadays. If it’s too much of a hassle to push a switch once, then you have another problem that wasted standby current :D

It’s a hassle to push the buttons on the TV because the numbskulls that *design* the TVs demand that the engineers who make them work put the buttons on the edge, or worse, around the back side.

The control buttons should be on the front, bottom center, along with the IR sensor. Some TVs have the sensor way off in one corner and either the reception angle of it or the beam angle of the remote is so narrow you can miss it simply by pointing the remote at the center of the screen – like nearly every couch potato does.

I have my 40″ in an entertainment center and cannot use the control buttons on it because they’re on the $%^$# EDGE of the housing.

“an energy conscious world”

I don’t think so for the consumer market… I’ve seen quite a few TVs at work, and most leave on the WiFi, Ethernet, serial port or even the signal receiver board in order to boot faster, upgrade their software and their ads/apps, or even allow remote control from your smartphone while on “standby”. Quite a few steps are needed to properly make your TV energy conscious, and the funny thing is that the energy class (A, A+) does not reflect these.

The 12V comes from the mains adapter that came with the TV that we used.

TI have a solution to make these use almost no standby power. http://www.ti.com/product/UCC28730

The TV we used already had an (unused) mosfet to completely cut off all power to the TV. We use our UB20M to turn this on and off.

For our demo in the entrance of our building (see the video https://www.youtube.com/watch?v=ayeZMAmORkk) we used cheap infrared filters stuck on with blue tack. There are admittedly better solutions.

If the 12V comes from the adapter then you’ve got to include this in your “no power” measurement. TI only state “less than 5mW” for that IC. I’m sure the assembled adapter will be more.

I guess we should have said in the video that there are two problems, a small (0.1W) and a larger one (0.4W), and that in this demo we are only going to solve the larger one. We did start out doing it this way, and had a power analyser in line with the mains lead, but then ditched it, as we would have had to start talking about reactive power and other things that might distract from what we were trying to achieve. And that is to make a circuit that listens to an IR led without consuming mains or battery power. We are planning to do another video where we switch directly at the mains, so fingers crossed.
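For a sense of scale, here is a rough back-of-envelope sketch of what the two standby figures quoted above (0.1 W and 0.4 W) come to over a year. It assumes the set sits in standby around the clock, which is an illustrative simplification and not a measurement from the Bristol team; the function name is made up for this example.

```python
HOURS_PER_YEAR = 24 * 365  # assumption: the TV is in standby all year


def annual_kwh(watts: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy in kWh for a constant draw of `watts` over `hours`."""
    return watts * hours / 1000.0


small = annual_kwh(0.1)  # the smaller loss mentioned in the comment above
large = annual_kwh(0.4)  # the larger loss that the demo targets
print(f"0.1 W: {small:.2f} kWh/yr, 0.4 W: {large:.2f} kWh/yr")
```

Under that assumption the two losses together stay below 5 kWh per year per set, which is why the argument elsewhere in the thread turns on how many sets there are rather than on any single one.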

Solution is simple.

Small solar panel and a small bank of NiMh (or a SLA) – all the 12v you could ever need to switch a relay/mosfet for a picosecond

“Seeing the potential to reduce energy wasted by TVs in standby mode, the [Electrical Energy Management Lab] team out of the University of Bristol have designed a television that uses no power in standby mode.”

Oh awesome. But I have somehow smaller team and less grants to consume, so I have to use things that actually do work

http://i.imgur.com/a1YVAXq.jpg

> Oh awesome. But I have somehow smaller team and less grants to consume, so I have to use things that actually do work

Sour grapes? You’re implying this doesn’t work.

I almost wanted to call BS but then I looked at the datasheet, http://www.bristol.ac.uk/media-library/sites/engineering/research/eem-group/zero-standby/UB20M_Datasheet_Rev.1.pdf . The output is 5.5V @ 5.5 mA, which is more than sufficient to drive an opto-mos switch. They make a big deal about the UB20M’s power consumption and low threshold voltage because that affects what is available to drive the control switch. This means they only need about 30mW from those photo-diodes to make this work. They did some careful optimization inside that chip.

That’s correct. We tried to make the input current as low as possible (~6pA increasing with temperature), so that voltage generating sensors such as IR diodes, piezo-accelerometers, RF rectennas etc. are unloaded. This means the sensor output voltage is higher than if you used an off-the-shelf voltage detector which would load up the sensor with 10s of nA or more, thereby drooping its output voltage. We are not saying that off-the-shelf voltage detectors are worse, they are just designed for a different application, usually for supervising voltage rails with a lot of power behind them. A sensor on its own is quite a feeble source!

We designed a chip with a lower threshold (0.46V) than this one, but it consumed just over 100pA. We initially thought this would be fine, but it turned out to be enough current to make the circuits respond later than the UB20M with its higher threshold of 0.65V.

Put in your licensing agreement for manufacturers that to use this invention they have to at least put the power button on the front face of the television frame. Not on an edge, top bottom or sides. Not around the back corner.

On. The. Front.

(-:

+1

Somehow my reply got on the wrong thread level:

“Only” 30mW? That’s quite a lot of power, you never get this from five photo-diodes, you need about 2-3cm² of silicon in _bright sunlight_ for this. Do you really mean 30nW or 30pW? :-)

I suspected it was a typo. Yes, we are down at 10s of pW. This means you can trigger off all sorts of things, for example stray fields from the ring main!

But you still need the 5 mW for the UCC28730 you mentioned, so a 10 pW switch instead of a 10 microwatt switch (that any competent engineer could put together) isn’t really a big advantage when you consider the complete system.
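The whole-system argument above can be put into numbers with a small sketch. The 5 mW adapter figure is the "less than 5 mW" quoted earlier for the UCC28730, treated here as an upper bound; the 10 pW and 10 µW switch figures are the ones named in the comment. All variable names are illustrative.

```python
ADAPTER_W = 5e-3      # "less than 5 mW" quoted for the UCC28730, used as an upper bound
SWITCH_PW_W = 10e-12  # roughly 10 pW wake-up switch
SWITCH_UW_W = 10e-6   # roughly 10 microwatt conventional switch


def total_standby(adapter_w: float, switch_w: float) -> float:
    """Total standby draw of adapter plus wake-up switch, in watts."""
    return adapter_w + switch_w


total_pw = total_standby(ADAPTER_W, SWITCH_PW_W)
total_uw = total_standby(ADAPTER_W, SWITCH_UW_W)

# Fraction of the system's standby draw saved by the picowatt switch
saving_fraction = (total_uw - total_pw) / total_uw
print(f"saving: {saving_fraction:.2%}")
```

With these inputs the saving is on the order of 0.2% of the total, so the adapter dominates. The picture changes if the wake-up circuit runs from a battery or from the sensor alone, with no adapter in the loop.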

I see it doing what it’s designed for, but hold a remote button too long and you shut the system down again, so no big adjustments to volume or rewinds for ten seconds. The biggest thing is that most of the people I know who would use this already just flip the switch on their surge suppressor to stop vampire draw, and would just see this as additional e-waste at the end of the product’s life.

Wouldn’t a couple of low power solar cells (like the ones on calculators) and a tiny uC with some deep sleep routines be a better solution?

That could (at least in theory) even hog enough power for a capacitor to turn on a latching relay ;-)

I was thinking this as well while watching.

Or a RF harvester + solar cell + mini wind turbine target the green market.

“Only” 30mW? That’s quite a lot of power, you never get this from five photo-diodes, you need about 2-3cm² of silicon in _bright sunlight_ for this. Do you really mean 30nW or 30pW? :-)

Also very strange is the 0,1mOhm resistor in the output line of the chip in the top picture. Useless in this high impedance circuit. This value could only be useful as a shunt in a 100A circuit.

This resistor is a jumper resistor (zero ohm link) on the layout, but in the schematics our software doesn’t allow for 0 ohm resistors, so we used a different symbol with the correct layout, sorry.

Or… you can have a mains switch at the wall, or on the TV, which turns it OFF.

Unless you are bed-ridden, the effort to walk over to your TV or whatever is minimal.

“Seeing the potential to reduce energy wasted by TVs in standby mode”

In fact this potential is practically nil with today’s TVs. Yes, I know it has been repeated over the years that standby TV kills polar bears (don’t laugh, that was a real government-paid campaign from the 90s), but in reality what matters more is your top power contributor: heating, water heating, the fridge, whatever….

Without knowing your true power consumption by element, you only spend a lot of energy on a really small amount of saving.

Air conditioning is my biggest power user. But with modern homes not using passive design, it’s needed.

I typically find that the amount of power needed to build the extra components is greater than the amount of power they save over their lifetime.

As with most things there’s always power lost, but that’s what marketing guys are for: they take the wasted power and brainwash the masses with new power-saving technology.

My power conditioner has a big ol’ ON/OFF switch on it. TV etc are plugged into it. If I flip the switch to OFF then the TV gets no standby power and I get to set up resolution, color, netflix acct etc again. Simple hack that requires no modification of the power circuit. They also make a plain ol’ vanilla model that is a wall plug with a switch on it. Usually used on lamps but should support a modern TV no problem and under five bucks.

Cool, not sure how much it saves compared to how much energy is used to make it. I’m sure Mythbusters did an episode on this.

Makes me laugh when friends turn everything off but use a clothes dryer (tumble dryer) for an hour. I air dry all my clothes; it only takes 24 hours. I haven’t used a clothes dryer in the 30 years since I left my parents’ house. If you have a good modern washing machine with high rpm, your clothes just need air drying. We would save far more energy if we just got rid of clothes dryers.

The magic is in the UB20M IC, with a lot of info including a datasheet here: http://www.bristol.ac.uk/engineering/research/em/research/zero-standby-power/

If they were really that concerned about standby power usage….then it would be better to use a physical on/off switch and hack the tv to turn on when the switch is on.

As threepointone has pointed out using an external circuit to turn on the tv, is just moving the power consumption from the tv to whatever external circuit/device you are using — it is the same issue when talking about a device designed to take advantage of free energy…..it just doesn’t work.

I am fairly sure my brand new Samsung TV does not use IR to turn on. I think the trend is to move away from IR control to radio or hard wired LAN or WiFi.

There would still be some power consumption needed to receive the RF/networking signals

My point was that it probably will not be long until the majority of new TVs don’t have IR at all so this would not work.

If the TVs no longer have IR, of course an IR solution would not work…..but the circuit could be modified to work over RF or the network.

But like I said in an earlier comment — those circuits still require power and all that would really be accomplished is moving the power usage from the TV to the external circuit.

The better solution here would be to go old-school with a power-on switch and to hack the TV to turn on when the power goes through the switch.

Please check out this video for zero-power radio receive modes https://youtu.be/YC_zeYeA27Y

In the video they say the circuit would be good for 2 years, using no stand-by power.

They use the wireless signal with a rectifier and voltage regulator with (what looks like) a mosfet acting as a switch that will then close and power the led.

It might not use any stand-by power but once a strong enough signal is detected, the battery will start to conduct power to the external circuit.

Here’s the problem with that (once the signal is detected):

1) The signal source has to be fairly strong (ie high-power) and must be fairly close to the antenna — this requires the external signal source to use some kind of power on a constant basis and is what I was talking about — moving the power consumption to a third-order device, the receiver is second-order, and the tv is first-order.

2) It’s possible to receive stray signals that are powerful enough to activate it, such that the battery dies quite quickly

3) How do you ensure that the switch is only activated once per signal ?

In other words even though the idea of zero-power radio receive modes might not use any stand-by power, it still requires power-usage somewhere.

PS: According to the Conservation of energy: ‘Energy can neither be created nor destroyed; rather, it transforms from one form to another’

In the video they were using a cell phone and were detecting when a text-message/phone call was received and then lighting an led to demonstrate the reception.

The receiver circuit uses no stand-by power, instead that consumption of energy is done by the cell phone because it must be constantly on for this to function.

Again, this type of circuit does not change the Conservation of energy: something must consume power to generate that signal.

We should have been clearer in the video, sorry. The UB20M voltage detector draws picoamps of current from the rectenna output. This is so little that the information and most of the energy in the signal are left intact for further use. Indeed, as this video stands, cleverness would need to be added to this circuit to work out whether the signal is one it was supposed to be listening to, etc. The unique thing about this chip is that it uses no power from the circuit to listen. So in applications where the total energy used for listening is significant, this chip is useful. It does, however, use power from the sensor (picowatts). The 5.4 pA current draw from the sensor allows you to use sensors with output impedances in the 100s of MOhms: this means very tiny sensors.
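The loading argument can be illustrated with a simple series-resistance model of the sensor. This is a minimal sketch, not the team's analysis: the 100 MOhm source resistance is the low end of the "100s of MOhms" above, the 10 nA comparison load is the low end of the "10s of nA" quoted earlier in the thread for off-the-shelf detectors, and the 1 V open-circuit voltage is purely hypothetical.

```python
def loaded_voltage(v_open: float, r_source: float, i_load: float) -> float:
    """Terminal voltage of a source with series resistance under a constant load current."""
    return v_open - i_load * r_source


R_SOURCE = 100e6  # 100 MOhm, low end of "100s of MOhms"
V_OPEN = 1.0      # hypothetical open-circuit sensor voltage, in volts

# Voltage lost across the sensor's own impedance for each detector
droop_ub20m = V_OPEN - loaded_voltage(V_OPEN, R_SOURCE, 5.4e-12)  # 5.4 pA input
droop_generic = V_OPEN - loaded_voltage(V_OPEN, R_SOURCE, 10e-9)  # 10 nA input

print(f"UB20M droop:   {droop_ub20m * 1e3:.2f} mV")
print(f"generic droop: {droop_generic:.2f} V")
```

In this model the picoamp input costs about half a millivolt, while a 10 nA input would drop the entire hypothetical 1 V across the sensor and the detector would never see its threshold.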

There does seem to be an issue with selectivity in the schematic as shown – it will activate the load anytime enough IR light of the right spectrum hits the sensors. Light tunnels and spectrum filters can help, but in order to be sensitive enough to detect the light from a battery-operated hand-held remote, this circuit will also be activated by daylight. If your TV turns on every day at 11am when the sun comes in the window and stays on until you get home, that will kill any power savings from eliminating the more discriminating IR monitor circuit!

One strategy would be to have the UB20M be used to wake up an intermediate detector that could look for a properly modulated signal before waking the load, but now you have the problem that this detector is using power anytime it is looking for a signal.

You can add layers on top of this by having the detector sleep if it does not see a signal after a certain timeout, but that also uses power.

Ultimately I think you need a zero power detector to put in front of the UB20M. How do you make a zero power detector, you ask? How about a resonant parallel LC driven by the output of the LED sensors and tuned to the modulation frequency with a high Q? That can act as both a filter and a voltage booster for the detected signal, and gets all of its energy from the incoming signal.
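As a rough sketch of how such a tuned front end might be sized, the snippet below assumes an inductor-capacitor tank and uses the standard resonance formula f0 = 1 / (2π√(LC)). The 38 kHz target is the common IR remote carrier mentioned earlier in the thread, and the 1 nF capacitor is a hypothetical value chosen only for illustration.

```python
import math


def inductance_for(f0_hz: float, c_farads: float) -> float:
    """Inductance that resonates with c_farads at f0_hz: L = 1 / ((2*pi*f0)^2 * C)."""
    return 1.0 / ((2 * math.pi * f0_hz) ** 2 * c_farads)


def resonant_frequency(l_henries: float, c_farads: float) -> float:
    """Resonant frequency of an ideal LC tank: f0 = 1 / (2*pi*sqrt(L*C))."""
    return 1.0 / (2 * math.pi * math.sqrt(l_henries * c_farads))


F_MOD = 38e3  # assumed modulation frequency (38 kHz IR carrier)
C = 1e-9      # hypothetical 1 nF capacitor

L = inductance_for(F_MOD, C)
print(f"L = {L * 1e3:.1f} mH, check f0 = {resonant_frequency(L, C):.0f} Hz")
```

The inductor comes out in the tens of millihenries for this capacitor value. Whether a real part of that size has low enough losses to give a useful Q at picowatt signal levels is not something this idealized calculation can answer.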

I have actually been working on this exact problem recently – look for an article soon on josh.com!

PS: It turns out that 850nm LEDs are better for this than the 940nm LEDs typically used for IR comms because of the increased forward voltage gap, and superbright blue LEDs can generate enough voltage to cause a pin-change interrupt to wake an AVR (i.e. Arduino) when connected directly to an input pin. Data here if interested…

http://www.evernote.com/l/ABFHrVBGBjZDGpcVpNQEEzJUVbpcE3otFaE/