[Mark West] and his wife had a problem: they’d been getting unwanted guests in their garden. Mark’s solution was to build a motion-activated security camera system that emails him when a human moves in the garden. That’s right, only a human. And to make things more interesting from a technical standpoint, he does much of the processing in the cloud. He sends the cloud a photo with something moving in it, and he gets an email only if there’s a human in it.

[Mark]’s first iteration, described very well on his website, was built entirely from off-the-shelf components, including a Raspberry Pi Zero, the Pi NoIR camera, and the ZeroView Camera Mount, which let him easily mount it all on the inside of his window looking out to the garden. He used Motion to examine the camera’s images and look for any frames with movement, and his code emailed him a photo every time motion was detected. The problem was that on some days he got as many as 50 false-positive alerts: moving shadows, the neighbor’s cat, even rain on the window.
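Motion’s real detector is considerably more elaborate (noise filtering, masks, tuning options), but the core idea of motion detection is frame differencing, and it also explains the false positives: a shadow or a cat changes pixels just as readily as a person. The sketch below is a simplified illustration, not Motion’s actual algorithm; frames are assumed to be flat lists of 0–255 grayscale values, and both thresholds are illustrative guesses.

```python
def changed_pixel_count(prev, curr, pixel_threshold=25):
    """Count pixels whose grayscale value changed by more than pixel_threshold."""
    if len(prev) != len(curr):
        raise ValueError("frames must have the same number of pixels")
    return sum(1 for a, b in zip(prev, curr) if abs(a - b) > pixel_threshold)


def motion_detected(prev, curr, pixel_threshold=25, min_changed=500):
    """Report motion when enough pixels changed between two consecutive frames.

    Note that this knows nothing about *what* moved: shadows, cats and raindrops
    all trip it, which is why a second, human-specific check is needed.
    """
    return changed_pixel_count(prev, curr, pixel_threshold) >= min_changed
```

Raising `min_changed` suppresses small movers like raindrops, but no threshold can tell a cat from a person, which is the gap the next iteration fills.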

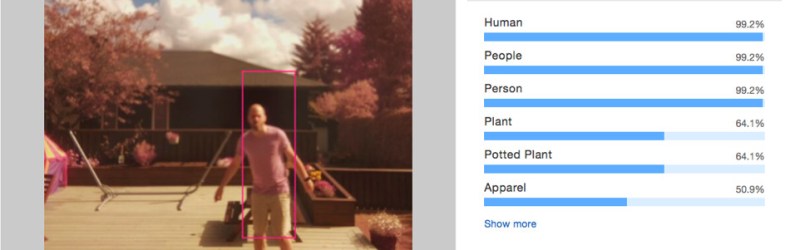

That led him to his next iteration: checking for humans in the photos. For that he chose to pass the photos to Amazon Web Services (AWS) Rekognition, an online image-analysis service, to check for humans.
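As a rough sketch of what “checking for humans” with Rekognition can look like, the function below sends image bytes to Rekognition’s `detect_labels` call and looks for a person label. It is not Mark’s code. It assumes the label names of interest are `"Person"` or `"Human"` and that 80% is a sensible confidence cut-off; both are assumptions to tune. The client is passed in rather than created inside, so the logic can be exercised without AWS credentials.

```python
# Assumption: Rekognition reports people under one of these label names.
PERSON_LABELS = {"Person", "Human"}


def contains_person(rekognition, image_bytes, min_confidence=80.0):
    """Return True if Rekognition labels the image as containing a person.

    `rekognition` is expected to behave like a boto3 Rekognition client.
    """
    response = rekognition.detect_labels(
        Image={"Bytes": image_bytes},   # raw JPEG/PNG bytes of the motion frame
        MinConfidence=min_confidence,   # ask the service to drop weak labels
    )
    # Double-check confidence locally as well, in case the caller's client
    # (or a test double) returns labels below the requested minimum.
    return any(
        label["Name"] in PERSON_LABELS and label["Confidence"] >= min_confidence
        for label in response.get("Labels", [])
    )
```

On the Pi this would presumably be called as `contains_person(boto3.client("rekognition"), jpeg_bytes)` with AWS credentials configured; a frame labelled “64.1% Potted Plant” and nothing else would not trigger an email.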

But that left a decision. He could either send the images with motion in them to Rekognition, get back the result, and then send an email to himself about those that contained humans, or he could just send the images off to the cloud, let the cloud talk to Rekognition, and have the cloud send him the email. He chose to let the cloud do it so that the Pi Zero and the cloud components would be more decoupled, making changes to either one easier.
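To make the “let the cloud do it” option concrete, here is one possible shape for the cloud side, assuming the Pi uploads motion frames to an S3 bucket and an AWS Lambda function is triggered by each upload. This is a hypothetical sketch, not Mark’s implementation (his write-up describes his own setup). It asks Rekognition to label the object directly from S3 and, if a person is found, sends an email through SES; the sender/recipient addresses, subject text and 80% threshold are placeholders. Clients are injected so the handler can be tested offline.

```python
import urllib.parse

# Assumption: Rekognition reports people under one of these label names.
PERSON_LABELS = {"Person", "Human"}


def make_handler(rekognition, ses, sender, recipient, min_confidence=80.0):
    """Build a Lambda-style handler for S3 'object created' events."""

    def handler(event, context=None):
        notified = []
        for record in event.get("Records", []):
            bucket = record["s3"]["bucket"]["name"]
            # Object keys in S3 event notifications are URL-encoded.
            key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
            response = rekognition.detect_labels(
                Image={"S3Object": {"Bucket": bucket, "Name": key}},
                MinConfidence=min_confidence,
            )
            labels = response.get("Labels", [])
            if any(label["Name"] in PERSON_LABELS for label in labels):
                ses.send_email(
                    Source=sender,
                    Destination={"ToAddresses": [recipient]},
                    Message={
                        "Subject": {"Data": "Person detected in the garden"},
                        "Body": {"Text": {"Data": f"s3://{bucket}/{key} contains a person."}},
                    },
                )
                notified.append(key)
        return {"notified": notified}

    return handler
```

In a deployed function the module would create `handler = make_handler(boto3.client("rekognition"), boto3.client("ses"), ...)` once. The design point matches the article: the Pi only uploads images, so the detection and notification logic can change without touching the camera.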

There isn’t room here to go through all the details of how he did it on the cloud and so we’ll leave that to [Mark’s] detailed write-up. But suffice it to say that it makes for a very interesting read. Most interesting is that it’s possible. It means that hacks with processor-constrained microcontrollers can not only do sophisticated AI tasks using online services but can also offload some of the intermediate tasks as well.

But what if you want to detect more than just motion? [Armagan C.] also uses a Raspberry Pi camera but adds thermal, LPG and CO2 sensors. Or if it’s just the cats that are the problem, how about a PIR sensor for detection and an automatic garden hose for deterrence?
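For the PIR-and-hose idea, the main logic is just “spray briefly, then ignore triggers for a while” so a lingering cat doesn’t flood the lawn. The sketch below assumes a valve object with `on()`/`off()` methods (for example a relay driving a solenoid valve); the 3-second spray and 60-second cooldown are arbitrary illustrative values, and the clock and sleep functions are injectable so it can be tested without hardware.

```python
import time


class HoseDeterrent:
    """Open a valve briefly when the PIR fires, then ignore triggers for a cooldown."""

    def __init__(self, valve, spray_seconds=3.0, cooldown_seconds=60.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.valve = valve
        self.spray_seconds = spray_seconds
        self.cooldown_seconds = cooldown_seconds
        self.clock = clock
        self.sleep = sleep
        self._last_spray = None

    def on_motion(self):
        """Handle one PIR trigger; return True if the hose was switched on."""
        now = self.clock()
        if self._last_spray is not None and now - self._last_spray < self.cooldown_seconds:
            return False  # still cooling down, so ignore this trigger
        self._last_spray = now
        self.valve.on()
        try:
            self.sleep(self.spray_seconds)
        finally:
            self.valve.off()  # always close the valve, even if sleep is interrupted
        return True
```

On a Pi, one plausible wiring uses gpiozero: `pir = MotionSensor(4)`, `valve = OutputDevice(17)`, then `pir.when_motion = HoseDeterrent(valve).on_motion` (the pin numbers here are hypothetical).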

Nice ad for AWS

You kids get off my lawn.

Seriously though… he’s using different capabilities hooked together to solve a problem. Isn’t that what hacking means? If I take your comment to the extreme, this could be an rPi ad as well: “He didn’t even create his own Bayer pattern processor for the image acquisition. Sheesh.”

For what it’s worth, Azure has a similar service.

Google also

And Clarifai!

How does it know what I am using?

It wouldn’t detect a cat burglar then, would it? Otherwise, very nice work!

There is no cloud; it is just someone else’s computer.

Heh! WINNER!

…. but the public fails to discern this…..

And you often happily (?) give him all your data. There must be an offline, local way to do this image processing.

OpenCV running on a networked tower. Given that traffic is low, there might be time to dump the images and number-crunch on a second Pi. But depending on the algorithm, each image may take a few minutes to process.
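The “dump the images and number-crunch on a second machine” idea is essentially a producer/consumer queue: capture stays fast, and slow analysis drains the backlog at its own pace. The sketch below uses an in-process `queue.Queue` as a stand-in for whatever network transport would really link the two machines. `detect_people` is a hypothetical callable; on a real setup it might wrap OpenCV’s HOG people detector (`cv2.HOGDescriptor` with `cv2.HOGDescriptor_getDefaultPeopleDetector()`), and `on_person` might send the alert.

```python
import queue
import threading


def start_worker(jobs, detect_people, on_person):
    """Consume (name, image_bytes) jobs until a None sentinel arrives.

    detect_people(image_bytes) -> bool is the (slow) local detector;
    on_person(name) is called for each image that contains a person.
    """
    def run():
        while True:
            job = jobs.get()
            try:
                if job is None:  # sentinel: shut the worker down
                    return
                name, image = job
                if detect_people(image):
                    on_person(name)
            finally:
                jobs.task_done()  # lets jobs.join() know this item is finished

    thread = threading.Thread(target=run, daemon=True)
    thread.start()
    return thread
```

Because the camera side only calls `jobs.put(...)`, a detector that takes minutes per image delays alerts but never blocks capture.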

“Someone else’s computer” isn’t scalable, smart-ass. The cloud is the cloud.

Actually there is a ‘cloud’ for companies like Amazon and Google where data is not on one specific computer but on various server parks across the world.

And the inability to tell which servers the data is on is best described by the word ‘cloud’: it’s not pinpointable and not controllable once you let them have it. And there lies the rub: not controllable, but certainly accessible by far too many.

“64.1% Potted Plant” – I know that feeling.

“99.2% People”

it’s calling you fat.

Am I the only one who is getting sick of hearing about “the cloud”? There is no cloud. There is just a server somewhere. This is Hackaday and we can skip all the lame and deceptive marketing speak and hype.

language evolves guy get over it

But not much use if it dumbs down the article.

The cloud could mean anything accessible over the Internet.

Naming the services or providers would make much more sense and be more accurate.

It’s a useless marketing term used when talking to a less technically savvy audience.

Step one: getting rid of any interpunction as structure and meaning are overrated?

Thankyouthankyouthankyou!

Uh yes … I am married to a linguist, so I have learned a thing or two about the use of language (usually when I am trying to be “creative” with language) – but I am also a product developer working very closely with marketing and I can state that we (I) use language all the time to obfuscate facts or to trick consumers into believing they need “new” services or features, by re-labeling old concepts with trendy words. While “cloud” certainly is not synonymous with a dumb file server somewhere, it is still very bad marketing-speak and quite frequently part of a concept to rip off naive consumers. Yes, language IS evolving but it is also consciously deformed in an Orwellian sense to manipulate people/consumers and the use of such language seems to be a bad fit with the Hackaday crowd.

cunning linguist.

Yes, this is Hackaday, so I am quite sure most of us know what this “cloud” is. I don’t like to use cloud services if not absolutely necessary (or ultra-convenient). But I know what is meant.

“The cloud” is used to embrace a wide number of available services and hardware. The Cloud is scalable on demand. Your shitty rack in your back office is not. The term Cloud is legitimate. I work with it every day. Circle jerk.

Passing suspect images to OpenCV on a local server would do just as well. Could easily add recognition. Is there a face? Yes, it’s little Johnny from next door getting his damn frisbee again. Not a burglar.

Come to think of it, using faces alone would fail on balaclavas and hoods. I’d have to look into how the system above recognises human shapes.

Nice that he’s done it, but really I’m not interested. “I plugged it into AWS’s pre-existing tool for the job, here’s a flowchart of how it could work in principle… job done.”

Writing the blog post was harder… Should have been a cool post but wasn’t… Sorry guys.

Now he just needs to pass the information on to a servo driven, human tracking crossbow. It doesn’t need to fire any bolts, it just needs to look like it. Problem solved.
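Pointing a servo at a detected person mostly comes down to converting the box’s horizontal position into an angle. Rekognition’s person instances carry a `BoundingBox` whose `Left` and `Width` are fractions of the image width, so under a simple pinhole-camera model the pan offset follows from the camera’s horizontal field of view. The 62.2° default is an assumption (a commonly quoted figure for the Pi Camera Module v2); the 90° servo centre and the sign convention depend on how the servo is mounted.

```python
import math


def pan_angle(bbox_left, bbox_width, hfov_degrees=62.2, center_degrees=90.0):
    """Map a bounding box (fractions of image width) to a servo pan angle in degrees.

    Uses a pinhole model: the image edge sits at +/- hfov/2 from the optical axis.
    The result is clamped to a typical 0-180 degree hobby-servo range.
    """
    center_x = bbox_left + bbox_width / 2.0          # 0.0 = left edge, 1.0 = right edge
    half_fov = math.radians(hfov_degrees / 2.0)
    offset = math.degrees(math.atan((2.0 * center_x - 1.0) * math.tan(half_fov)))
    return max(0.0, min(180.0, center_degrees + offset))
```

A person centred in the frame leaves the servo at 90°, while one at the far left edge swings it to roughly 58.9°.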

Use interpunction in the title next time (or phrase it unambiguously). I’ve read it as “recognizes (humans using the cloud)”, not “recognizes (humans) using the cloud”.

Needs some End Effector Hardware to be complete:

Maybe the rather unproven Samsung SGR-A1 robot sentry gun or the deployed-for-real Rafael Mini-Typhoon?

It seems that the SGR-A1 is not only an end effector, but also makes the described system obsolete. It includes visible-light and IR cameras and the necessary computer vision to discern humans from “cats and flowerpots”.

>just send the images off to the cloud, let the cloud talk to Rekognition, and have the cloud send him the email

in my day we used to call them WEBCAMS, or network cameras if standalone

>It means that hacks with processor-constrained microcontrollers

and now I learn 4x1GHz is constrained microcontroller. Sure, whatever you say mister 20yr old hip React/Clojure/Vue developer.

Lol, old hat asshole. ARM vs. Xeon performance per clock, hmm?

its just laziness

Here is actual image recognition running on a Pi, TensorFlow no less.

http://svds.com/tensorflow-image-recognition-raspberry-pi/

There are benchmarks on GitHub, and it gets down to 0.5 seconds per image, NO CLOUD.
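To check a per-image figure like 0.5 seconds on your own hardware, you can time the classifier call directly. `classify` below stands for whatever local model call you use (hypothetical here). The first call is treated as warm-up because it often includes model loading, and the median is reported so a single slow outlier doesn’t dominate. For scale: at 0.5 s per image, the 50 motion frames of a bad day would need about 25 seconds of compute in total.

```python
import statistics
import time


def seconds_per_image(classify, images, warmup=1):
    """Return the median wall-clock seconds per classify(image) call."""
    if not images:
        raise ValueError("need at least one image to time")
    for image in images[:warmup]:
        classify(image)  # warm-up: model loading, caches, lazy initialisation
    timings = []
    for image in images:
        start = time.perf_counter()
        classify(image)
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)
```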