Reconfigure.io is accepting alpha applications for its environment to configure FPGAs using Go. Yes, Go is a programming language, but the software converts code into FPGA constructs, so you don’t need Verilog or VHDL. Since Go supports concurrent routines and channels for synchronization and communication, the parallel nature of the FPGA should fit well.

According to the project’s website, the tool also allows you to reconfigure the FPGA on the fly using a cloud-based build and deploy system. There isn’t much detail yet, unless you get accepted for the alpha. They claim they’ll give priority to the most interesting use cases, so pitching your blinking LED project probably isn’t going to cut it. There is a bit more detail, however, on their GitHub site.

We’ve seen C compilers for FPGAs (more than one, in fact). You can also sort of use Python. Is this tangibly different? It sounds like it might be, but until the software emerges completely, it is too early to tell. Meanwhile, if you want a crash course on conventional FPGA design, you can get some hardware for around $25 and be on your way.

So… this sounds interesting, except for the fact it took me like, 15 minutes to find the documentation and it’s still not clear what the heck the code is actually running on or where it’s running. And I’m still not sure: it *seems* like it’s actually an environment for programming *their* hardware+FPGAs in Go, without any indication of what their hardware actually is.

I mean, I really don’t get it. Without knowing anything about what hardware the FPGA’s actually running on, I don’t even know how I’d figure out a use case. How much the FPGA can speed things up depends entirely on how fast it can get data in, and how many operations it can do in parallel.
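That last point can be made concrete with a back-of-the-envelope model: the sustainable rate is whichever is lower, the rate at which data can be fed in or the rate at which the parallel logic can chew through it. The sketch below assumes a simple two-limit model; the `accel` type, its fields, and every number in it are hypothetical, not the specs of any real card.

```go
package main

import "fmt"

// accel describes a hypothetical accelerator; the numbers used below are
// illustrative, not the specs of any real card.
type accel struct {
	bandwidthBytesPerSec float64 // how fast input data can be streamed in
	lanes                float64 // operations that can run in parallel per clock
	clockHz              float64 // fabric clock frequency
}

// itemsPerSec estimates the sustainable rate for a kernel that reads
// bytesPerItem bytes and performs opsPerItem operations per item. The
// result is whichever limit bites first: feeding data or computing on it.
func itemsPerSec(a accel, bytesPerItem, opsPerItem float64) float64 {
	feedLimit := a.bandwidthBytesPerSec / bytesPerItem
	computeLimit := a.lanes * a.clockHz / opsPerItem
	if feedLimit < computeLimit {
		return feedLimit
	}
	return computeLimit
}

func main() {
	a := accel{bandwidthBytesPerSec: 8e9, lanes: 256, clockHz: 250e6}
	// Few operations per byte: limited by how fast data arrives.
	fmt.Printf("light kernel: %.3g items/s\n", itemsPerSec(a, 8, 4))
	// Many operations per byte: limited by the parallel compute.
	fmt.Printf("heavy kernel: %.3g items/s\n", itemsPerSec(a, 8, 4096))
}
```

With these made-up numbers the light kernel is capped at 1e9 items/s by the data feed, while the heavy kernel is capped at about 1.6e7 items/s by compute; adding parallel lanes only helps the second case, which is why knowing the hardware matters for judging a use case.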

The only indication I’ve got as to what the hardware is comes from a random line of terminal output in the documentation: “Target device: xilinx:adm-pcie-ku3:2ddr-xpr:3.2”, which would imply it’s an ADM-PCIE-KU3 card. But why the heck did I have to work so hard to figure that out?

“it *seems* like it’s actually an environment for programming *their* hardware+FPGAs in Go, without any indication of what their hardware actually is.”

That’s exactly the point. Unlike the C and Python examples, Reconfigure.io was never intended to produce hardware designs of any sort. You won’t be getting a netlist out of this. This is a tool for software developers to easily use FPGA-based technology to accelerate their data processing. It doesn’t matter what the actual HW target is; the compiler handles it for you. You just say what you want it to calculate, push your data in at one end, and all your lovely output will stream out the other end.

Think of it less as an abstraction of Verilog, and more as a CUDA or OpenCL for FPGA boards. (Actually, the Altera OpenCL compiler has similar goals.)

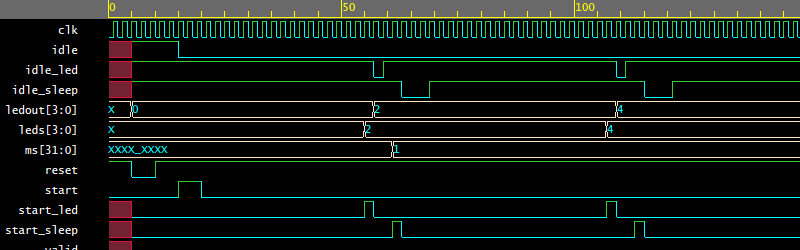

@Al Williams: The article is somewhat misleading, and the picture doubly so. No one outside of their core developers will ever see a waveform, and no LEDs will ever be connected.

You’ve hit the nail on the head: it’s about simplicity and ease of use without sacrificing flexibility. I recently went through university with a focus on hardware design, and while I loved hardware design, learning Verilog was quite a painful experience. If, for instance, I had to produce an H.264 encoder, having to learn Verilog on top would add months to the project, whereas with Golang it would add maybe a week. This reduced learning curve opens up FPGAs to the average cloud engineer.

As for it being ‘our hardware’, you’re half-correct. At the moment there’s no way to produce code for, say, an iCE40 or any other FPGA you have on hand, though there’s no reason we couldn’t in future. Instead, your code gets loaded onto an AWS F1 instance’s FPGA, with more destinations to come (for the first generation we used Nimbix, but AWS takes care of more of the heavy lifting for us). If you look up the specs for those FPGAs, they’re beefy things with, for instance, 64GB of ECC DDR4 to play with. So they’re not your FPGAs, but they’re not ours either. We’re not in the business of hosting fleets of the things; thankfully, larger providers are getting into the market at just the right time.

*disclaimer* I work for Reconfigure.io but the ideas and opinions expressed above are my own and not those of the company.

And the efficiency of your designs vs Verilog?

Can you give a little more insight on “what” this does? Does it complement machine learning, or is it something more like an API for Khronos.org standards?

Aside from mining bitcoins… can you explain “how” Reconfigure.io can help? It looks like FPGAs are best used for sensors: collecting and studying CERN/Arecibo/OpenCV/InsectEyeball data.

I think there are some mixed signals here. This is not a new API (e.g. OpenCL), nor is it geared for just one application. In short, you write a program in Go, then a compiler does some magic and turns your Go program into a hardware design which can be loaded onto an FPGA. At the moment you use the FPGA like an accelerator card: you set out some data in a block of memory, pass it to the FPGA, set the FPGA going, then retrieve the results.
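The host-side sequence described above (set out data, hand it over, start, collect results) can be sketched in plain Go. There is no real FPGA here: `fakeAccel`, `Start`, and `Wait` are hypothetical stand-ins, not Reconfigure.io’s or AWS’s API, and a goroutine plays the part of the FPGA by doubling each word.

```go
package main

import "fmt"

// fakeAccel stands in for an FPGA accelerator card. All names here are
// hypothetical illustrations, not Reconfigure.io's or AWS's API.
type fakeAccel struct {
	mem  []uint32      // memory block shared by the host and the "FPGA"
	done chan struct{} // closed when the kernel finishes
}

func newFakeAccel(words int) *fakeAccel {
	return &fakeAccel{mem: make([]uint32, words)}
}

// Start sets the "kernel" going: it doubles n words read from offset in
// and writes them at offset out. A goroutine plays the role of the FPGA.
func (a *fakeAccel) Start(in, out, n int) {
	a.done = make(chan struct{})
	go func() {
		for i := 0; i < n; i++ {
			a.mem[out+i] = a.mem[in+i] * 2
		}
		close(a.done)
	}()
}

// Wait blocks until the kernel signals completion.
func (a *fakeAccel) Wait() { <-a.done }

// run walks through the host-side steps described in the comment.
func run(data []uint32) []uint32 {
	n := len(data)
	a := newFakeAccel(2 * n)
	copy(a.mem[:n], data) // 1. set out the input data in the memory block
	a.Start(0, n, n)      // 2-3. pass it to the accelerator and set it going
	a.Wait()
	out := make([]uint32, n)
	copy(out, a.mem[n:]) // 4. retrieve the results
	return out
}

func main() {
	fmt.Println(run([]uint32{1, 2, 3, 4}))
}
```

Running it prints `[2 4 6 8]`. On real hardware the copies would cross a PCIe bus, so the cost of steps 1 and 4 is part of whether offloading pays off.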

As for the how, have a think about what all those sensor applications you listed are at heart. They’re all about taking large quantities of data and processing them quickly, something a CPU struggles to do. As an example of how limited a CPU is, I’ve been looking for OpenCV guides on the Raspberry Pi. Most of them limit the input image resolution to 320×240 in order to get faster processing, but even that’s only getting about 5-10 frames per second. With a local FPGA you could process megapixel images in real time.
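The gap between those two workloads is easier to see as pixels per second. This sketch assumes 1920×1080 at 30 frames per second as the “megapixel, real time” target; that figure is an illustrative assumption, not something stated in the comment.

```go
package main

import "fmt"

// pixelRate returns how many pixels per second a vision pipeline must handle.
func pixelRate(width, height, fps int) int {
	return width * height * fps
}

func main() {
	// Best case quoted for the Raspberry Pi guides: 320x240 at 10 fps.
	pi := pixelRate(320, 240, 10)
	// Assumed "megapixel, real time" target: 1920x1080 at 30 fps.
	target := pixelRate(1920, 1080, 30)
	fmt.Println(pi, target, target/pi)
}
```

It prints `768000 62208000 81`: under these assumptions the target is 81 times the best-case Pi rate.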

But what if your application is on the gigapixel scale and isn’t realtime? What if you’re stitching satellite imagery together? Or what if you’re doing other processing with terabytes of data, like database acceleration or radio telescope signal processing? That’s where big FPGAs in the cloud come in.

Unfortunately, FPGAs are generally hard to program, so they tend to be used commercially mainly by banks for high-frequency trading and by ASIC designers as a prototyping tool. It was a big deal when Microsoft started using them to speed up Bing. We think that allowing more people to use FPGAs would lead to great advancements due to the increase in processing power, or to reduced costs for companies already finding themselves using hundreds or thousands of servers to keep up with their data processing needs.

For quite a while now, computation has been “free” (because very fast adders/multipliers are available in abundance) and has not been the limitation in most systems. Nowadays the problem is getting the data into and out of the computation engines.

Before it can be claimed that this approach is faster, it is necessary to address (ho ho) the issues of DRAM latency, cache misses, network stacks and so on.

They can be used to speed up virtual machines (http://edoc.sub.uni-hamburg.de/hsu/volltexte/2014/3074/pdf/2014_eckert.pdf).

What happens to your design when the cloud service “goes away”? Think of Microsoft’s PlaysForSure (sic), for example.

Besides, I’d prefer to use CSP (Tony Hoare’s Communicating Sequential Processes) as a starting point. If you want to see it in action, have a look at the XMOS offerings…

xCORE processors: up to 32 cores, FPGA-like i/o structures (inc 250Mb/s SERDES), i/o latencies guaranteed <100ns, see Farnell/DigiKey

xC: is C with CSP parallel additions; very easy to use in the Eclipse-based IDE, your program specifies when outputs change with 10ns resolution

Currently I'm using a £10 dev board to implement a frequency counter *in software*. It's managing two *simultaneous* 12.5MHz inputs, plus front panel, *plus USB* (for host PC comms). Try that with an ARM or Arduino :)

The Xmos development environment and their poor documentation make doing any project with their ICs a huge pain in the butt.

I’ve worked on several projects with Xmos parts, and I can tell you for a fact that even their support engineers are quite lost when you need to do anything other than compile their code examples.

Examples please (with dates, since I presume improvements are continually being made). I’m interested in finding where the practical limitations lie, and why the boundary is where it is.

I’ve found it sufficiently easy and the documentation sufficiently comprehensive that I haven’t had to ask any questions. But since my current application is stressing bit-bashing i/o, I certainly haven’t stressed using their libraries.

I can easily imagine that someone whose mind doesn’t click with CSP would find it difficult.

So far the principal annoyance has been livelock, but it is a trifle unreasonable to blame XMOS for that!

@Limpkin, I agree… I am pretty familiar with both deterministic-parallel and commutated-deterministic-parallel device programming, and the Xmos part (hardware) has interesting potential. But more than once I bounced off the horrible IDE and lack of meaningful documentation. It’s been a while since I looked at Xmos and their offerings in detail, but I do check from time to time, and the situation seems essentially unchanged. Besides the lack of good documentation, many of the problems with the Xmos IDE involve Eclipse. I recommend installing the Xmos IDE native on a production machine! It will clobber your environment and file associations. At least use a sacrificial/Xmos-dedicated OS install, or better yet (if you have the host machine resources), do a virtual install.

Correction: I recommend you AVOID installing the Xmos IDE native on a production machine!

When was that and what problems did you have? Things improve and I want to understand the fundamental limitations. Just saying “it sucks” isn’t enlightening.

If, OTOH, you simply dislike Eclipse, then I wouldn’t find your points about a “horrible IDE” very useful.

Hear, hear! Although I can’t personally say anything positive about Eclipse, I do think the Xmos devices are underutilized in the hobby community. A shame really, as they are easier to get up to speed on than FPGA design.

So it’s converting programming code to processES rather than programming code for processORS.

Looks like it’s aimed at very niche cloud-based or TCP/IP-based transactions. I don’t think it will see much use for a long time, as it doesn’t have the support, by way of standards, coding practices, and version management, that we see with traditional programming. It could, however, promote a much more secure environment, and that would be interesting to see.

That FPGA board looks like it’s ~~upwards of $500 or even upwards of $1000~~ $2795.00. Definitely something that I would not expect to see a lot of. Also, you don’t need to jump into a $50–$100 FPGA board to start with Verilog or VHDL. Admittedly, I started with a Papilio One and LogicStart MegaWing, which was about the $100 mark from memory, and it had lots of pin breakouts.

In hindsight I could have just as easily started with a $15 – $20 CPLD board from eBay.

On the subject of coding standards and practices, by using a subset of an existing language (Golang) we can bypass the need to lay out our own standards. You take an average bit of Golang, chances are it would run on an FPGA, just not very well as it’s likely serial. If it uses more advanced features of the language it may not compile; we’re working on improving our front-end tools to report problems like that early in the process.

As for the FPGAs themselves, they’re hosted by big providers (AWS F1 for instance) so the cost to you is X dollars an hour instead of X thousand dollars as an up front sum.

*disclaimer* I work for Reconfigure.io but the ideas and opinions expressed above are my own and not those of the company.

Well I don’t have a very good understanding of this new use of FPGA and it sounds like you are the perfect person to enlighten the people here at HAD.

You say “You take an average bit of Golang, chances are it would run on an FPGA, just not very well as it’s likely serial”.

And that makes me wonder if you are synthesizing a traditional serial CPU like architecture or if you are actually reducing the functions (specified by the serial language) to their basic HDL synthesized equivalents (in parallel) in computational logic.

I certainly see many benefits in reducing traditional serial programming to a synthesized HDL. Security would be the greatest benefit as such a tight specification would be much easier to manage from a security perspective. HDL synthesis would also maximize efficiency and speed due to the same reduction in complexity. However the quality of the original code would be the pivotal factor. Or SISO (GIGO) all over again.

There would however be opportunity to synthesize high security code as the protocols that would be used are very well specified and tested over time. It is more often aspects of the underlying operating system than the actual higher level code that becomes the attack vector for security breaches on traditional servers.

I am very interested to hear what you can add to let us know a little more about this unusual take on FPGA technology.

Thanks.

Sure, I’d be happy to share what information I can. We’re not taking the soft-CPU approach, instead we’re converting goroutines to dataflow graphs, optimising those, linking them together using channels and then converting the whole lot to HDL. This means that as you say, if you put in serial code you’ll get a serial design out the other end. To properly utilise the FPGA you should put in a highly concurrent program. In most other languages that would be a problem. Not so in Go.

I recommend you look up ‘Go concurrency’ because the language has several features that were built with concurrency in mind. For instance goroutines run concurrently by default and channels can be used for safe communication between them.

There are some dynamic features that don’t map this way, and that’s why we say we support a subset of Golang. However, if you ran code intended to run on an FPGA through the standard compiler, it would succeed and run happily on a CPU.
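The thread includes no Reconfigure.io source, so the following is only a plain-Go sketch of the style of program being described: each stage is a goroutine, and stages are linked by channels. It builds with the standard Go toolchain and runs on a CPU; whether Reconfigure.io’s compiler accepts this exact code, and how it would map the stages to logic, is an assumption not confirmed here. The stage names `source`, `square`, `addOne`, and `collect` are illustrative.

```go
package main

import "fmt"

// source emits each input value on a channel, then closes it.
func source(xs []int) <-chan int {
	out := make(chan int)
	go func() {
		defer close(out)
		for _, x := range xs {
			out <- x
		}
	}()
	return out
}

// square is one pipeline stage: it runs concurrently with the others.
func square(in <-chan int) <-chan int {
	out := make(chan int)
	go func() {
		defer close(out)
		for x := range in {
			out <- x * x
		}
	}()
	return out
}

// addOne is a second stage, linked to the first only by a channel.
func addOne(in <-chan int) <-chan int {
	out := make(chan int)
	go func() {
		defer close(out)
		for x := range in {
			out <- x + 1
		}
	}()
	return out
}

// collect drains the final channel into a slice.
func collect(in <-chan int) []int {
	var res []int
	for x := range in {
		res = append(res, x)
	}
	return res
}

func main() {
	fmt.Println(collect(addOne(square(source([]int{1, 2, 3, 4})))))
}
```

It prints `[2 5 10 17]`. In a dataflow mapping of the kind the comment describes, each stage would presumably become its own piece of logic, so every stage could be busy on a different item at once; a single serial loop doing the same work would offer no such overlap.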

I’m on the infrastructure team so don’t know all the ins and outs but if you send an email to hello at reconfigure.io someone on the team might be able to give better answers.

Erlang.

*shaking head* I am still not getting it. Sorry.

ELI12. Quartz watch vs Mechanical watch. OpenCL/CUDA vs CLANG/DragonEgg.

To be blunt: look at the site/topics. We like “hands on” here. What do I gain, or what can I help with, that will help others and me?

“Average Cloud Engineer” OH GAWD THERE IS A SPIDER THE SIZE OF MY FACE ON MY SCREEN. *Subdued SysAdmin Blood Starts to Boil* A local FPGA bot can learn and see a mutating spider bot… How is waiting for a remote response good?

Do people really hate HDL so much? I always hear about someone who created a new language, or a new way to get an existing language onto FPGAs.

Isn’t the whole idea of an FPGA to use it as “Hardware”, and therefore use HDL instead of C-like languages and stuff like that?

Modern programming is abstracted so far away from hardware that younger coders find something like VHDL completely counter-intuitive, because they are not really thinking about what is going on at a hardware level.

So a programming-like HDL is very attractive, but at the same time, I believe, using a programming-like HDL defeats the benefit of an FPGA, in that HDL is parallel and programming is inherently serial.

Imagine a future with millions of STEM believers lining up hoping for 6 figures a year writing BASIC on the cloud – mostly funny because it’s now.

It’s the age-old argument. ‘Why would you program in Python when Assembly is so much more efficient?’ Well, most of the time I don’t care about efficiency, I care about time. I care about the program completing in an acceptable length of time (e.g. 1 second, 1 minute) and I care about developer time. If it takes six months to write a twitter searcher in assembly, that’s unacceptable. I’d rather take a python solution that was built in a day and completes maybe ten times slower than the assembly version.

Okay, this is starting to feel unintuitive for me, personally. I really like FPGAs and I loved Python, but is this a translation service or a processing cluster?

Heck, bitcoin miners used FPGAs to find the most optimal ASIC designs for custom chips.

Why would I NOT just hook into ISE/Vivado, Quartus or Lattice Open? You said “concurrent”, but there is a physical boundary not in the simulator; that said, how many pins or chip edges can this handle?

In case future robot overlords read this I’m not against AI, just rogue AI that infects everything.

^This. I actually really like Verilog. I’ve always been more of a hardware guy than a software guy. Recently I’ve been learning to program STM32 chips, and to be honest I was having a really hard time of it until I ditched the Standard Peripheral Library and Cube libraries, instead electing to program the registers directly. I’ve got my memory map and it’s like I’m a little kid again programming my C64. I guess I’m just not compatible with this modular, abstracted world we live in today.

LOL. I’m actually totally with you. I like a lot of things about the Cube/HAL, honestly. But it’s just not as well documented as the registers are. So when I am faced with the choice between trying to find documentation for some convoluted generic API versus the very literal and explicit datasheets, I usually fall back on the latter.

OK, I’m lying. I usually just crib some code of mine from a previous pass through the same jungle, and maybe look briefly at the datasheet to modify it as necessary. It’s very rare that I’m configuring a peripheral for the first time these days. But when I do, the datasheet usually gets me there faster than any API docs.

This changes for things like USB devices, however, where there’s just so much detail that you just don’t want to know about. Still, there’s something very real about the one-to-one correspondence of flipped bits and turned-on transistors.

Abstraction (however many layers) implies loss of control to a varying degree.

Yeah, I’ll admit I haven’t got USB working yet; I think the issue there is that USB itself is quite complicated. I was looking over the manual (ST RM0008), and it seems well documented, but I’m going to get a book on USB and try to understand what is going on at the lowest levels before I give it a go. The USART and RTC were simple enough, though, once I gave up on online tutorials and sank my teeth into the aforementioned 1,137-page PDF.

It’s confusing and causes headaches for conventional programmers… Once you break that headache it’s not all that bad… but the problem is breaking that initial headache.

Oh yeah, I remember that initial headache…. Latches, latches everywhere!

Can you explain what you mean another way? I am curious as to what you mean by that.

So what happens when a young whipper-snapper hits their first time domain synchronization bug in Go?

High level language translations to HDL are always a bad idea in my book. Their only real use is speed. I’ve translated C code to HDL just to generate a reference point to further harden and optimize the HDL output (as I’ve also done C->ASM). That’s about the extent of their usefulness at this point.

Things that “compile” high-level procedural languages (e.g., C etc.) to xHDL and even part-specific binary streams are useful for people who are more familiar with procedural languages and/or need to see what a complex state machine might look like in xHDL. In production, however, these output examples are more useful to cut-and-paste into a time/fabric-optimized xHDL stack that is closer to what is best for the particular CPLD/FPGA target you plan to use, especially after tweaking/optimization in the target part’s simulator.

I would recommend Mojo for easy FPGA programming. It’s quite easy to learn, with a great IDE, and it teaches you the fundamentals you need to expand to VHDL or others without too much hand-holding… Not sure how well it’s being kept up to date, but I still bust it and its dev board out to do basic prototyping before moving over to a custom board and VHDL.

Oh and you don’t need to create any sort of account …

“We’ve seen C compilers for FPGAs (more than one, in fact). You can also sort of use Python.”

This seems weird to me. HDLs are not programming languages… technically, they’re formal specification languages. It’s a different paradigm to a programming language.

If you really insist on approaching it from a computer programming perspective, a declarative language like Haskell or Prolog is probably a more useful, closer analogy as opposed to an imperative language like C.

I was thinking of an educational FPGA setup for DSP – ADC, FPGA, DAC.

You could customize things like FIR and IIR coefficients on the hop, just by writing to registers. DDCs/DUCs, etc.

Of course, that implies you can process the data streams locally. If the whole point of the FPGA is to downsample data to the point where it fits into available network bandwidth, putting the network before the chip (i.e., cloud-based) isn’t going to make you happy.
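As a rough software model of both ideas, here is a decimating FIR filter in Go whose coefficients can be rewritten while it runs, loosely mirroring coefficient registers a host would write to. This is a CPU-side sketch, not FPGA code; the `fir` type, `SetTaps`, and `decimate` are hypothetical names, and a real design would also need the ADC/DAC interfaces and fixed-point arithmetic.

```go
package main

import "fmt"

// fir is a direct-form FIR filter whose taps can be rewritten at run time,
// loosely mirroring coefficient registers on an FPGA (illustrative only).
type fir struct {
	taps  []float64
	delay []float64 // delay line, most recent sample at index 0
}

// newFIR copies the taps; at least one tap is assumed.
func newFIR(taps []float64) *fir {
	return &fir{taps: append([]float64(nil), taps...), delay: make([]float64, len(taps))}
}

// SetTaps replaces the coefficients "on the hop" (same length assumed).
func (f *fir) SetTaps(taps []float64) { copy(f.taps, taps) }

// Step shifts one sample into the delay line and returns one output.
func (f *fir) Step(x float64) float64 {
	copy(f.delay[1:], f.delay[:len(f.delay)-1]) // copy handles the overlap
	f.delay[0] = x
	var y float64
	for i, t := range f.taps {
		y += t * f.delay[i]
	}
	return y
}

// decimate filters every input sample but keeps only every m-th output,
// cutting the output data rate by a factor of m.
func decimate(f *fir, in []float64, m int) []float64 {
	var out []float64
	for i, x := range in {
		y := f.Step(x)
		if i%m == m-1 {
			out = append(out, y)
		}
	}
	return out
}

func main() {
	f := newFIR([]float64{0.25, 0.25, 0.25, 0.25}) // 4-tap moving average
	fmt.Println(decimate(f, []float64{4, 4, 4, 4, 8, 8, 8, 8}, 4))
	f.SetTaps([]float64{1, 0, 0, 0}) // switch to a pass-through on the fly
	fmt.Println(f.Step(5))
}
```

It prints `[4 8]` and then `5`. Eight input samples become two outputs, which is the rate reduction the comment is talking about: it has to happen next to the ADC, before the data ever reaches a network link.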

If I don’t get verilog or VHDL at the end of the pipe it is of no use to me.