There are many projects that call out for a custom language parser. If you need something standard, you can probably lift the code from someplace on the Internet. If you need something custom, you might consider reading [Federico Tomassetti’s] tutorial on using ANTLR to build a complete parser-based system. [Federico] also expanded on this material for his book, but there’s still plenty to pick up from the eight blog posts.

His language, Sandy, is complex enough to be a good example, but not so complex that it’s hard to understand. In addition to the posts, you can find the code on GitHub.

The implementation code is Java, but you can still learn a lot even if you plan to use another language. The posts take you through building a lexer (the part that breaks text into tokens), the parser, handling syntax highlighting and autocompletion, creating an abstract syntax tree, and more.
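To make the lexer step concrete, here is a minimal hand-rolled tokenizer in Python. It is not ANTLR and not Sandy’s real token set: the token names and the `tokenize` function are assumptions made up for illustration. ANTLR generates the equivalent from the lexer rules in a grammar file.

```python
import re
from typing import Iterator, NamedTuple


class Token(NamedTuple):
    kind: str
    text: str
    pos: int


# Hypothetical token set loosely modeled on a small Sandy-style language.
# Order matters: alternatives are tried left to right, so keywords must
# come before the generic identifier rule.
TOKEN_SPEC = [
    ("NUMBER", r"\d+(?:\.\d+)?"),
    ("VAR", r"\bvar\b"),
    ("PRINT", r"\bprint\b"),
    ("ID", r"[A-Za-z_]\w*"),
    ("OP", r"[+\-*/=()]"),
    ("WS", r"[ \t]+"),
    ("NEWLINE", r"\n"),
]
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def tokenize(text: str) -> Iterator[Token]:
    """Break text into tokens, raising SyntaxError on an unknown character."""
    pos = 0
    while pos < len(text):
        m = MASTER.match(text, pos)
        if m is None:
            raise SyntaxError(f"unexpected character {text[pos]!r} at {pos}")
        kind = m.lastgroup  # name of the alternative that matched
        if kind != "WS":  # drop whitespace, similar in spirit to ANTLR's -> skip
            yield Token(kind, m.group(), pos)
        pos = m.end()
```

For example, `[t.kind for t in tokenize("var a = 1 + 2")]` gives `['VAR', 'ID', 'OP', 'NUMBER', 'OP', 'NUMBER']`; the parser then consumes this token stream rather than raw characters.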

The example compiler generates Java bytecode, so its output can run anywhere Java can.

If you are using C, you might consider looking up lex and yacc, or flex and bison, to get similar results. If you want to parse C or C++ specifically, you might also be interested in using LLVM (via its Clang front end) as a very specific kind of parser. Either way, a custom language is just the ticket to give your custom CPU project a boost.

*sigh* Kind of remember back in the day when such things as parsers and lexers were typically discussed.

Ahh yes, another tech company with a bisyllabic name that ends in -er with the “e” removed. All they’re missing is a .io TLD.

Holy Cow! I haven’t heard of ANTLR in aeons (nor of her flux). This codebase predates the Internet bubble (and so also predates the .io tld), but it’s pretty amazing that it’s still around and being used, and even being talked about at all; ha!

Antlr is an open source project, not a company. It is a continuation of a line of puns starting with YACC (Yet Another Compiler-Compiler), followed by Bison, and finally Antlr.

I’m still stuck on QuickBASIC. There are no good text-parser tutorials for it… anyone care to help out? I have a project that I’ve sidelined because I can’t code it in anything else (due to lack of knowledge) and I can’t find a good resource… please don’t tell me “just learn some other effing language u dolt” — I’ve tried and I can’t :( everything else is pretty much either too weird and/or new to be taken seriously, or is web-oriented, or is absolute gibberish and arcane structure to me… if it works in MS QuickBASIC PDS 7.1, I’m good… otherwise, fagheddabouditt.

Even though you asked me not to:

Why not try C# in M$ Visual Studio? When I was faced with having to learn an object-oriented language because I had to program a DLL, I did not choose Java (because I heard here and there that it was slowly becoming obsolete) or Python (because, well... I thought Microsoft C# would have a better online support group and examples, and would run better on Windows).

So I chose C#, and that worked out reasonably well. Although it has hair-tearing-out things MS will not allow you to do, or force you to do in an illogical way (which is always a gripe I have with MS products), it has a big online help section, loads and loads of support on, for instance, StackExchange, and loads of tutorials on YouTube (including college lectures). It lets you make anything from command-prompt executables to user interfaces, and if you don’t want object-oriented programming, just program in C instead of C#.

Of course, MS also has Visual Basic, but I have no experience with that.

Ugh, the entire C family just kind of throws me for a loop. It falls into the ‘arcane gibberish’ category I mentioned above.

INSTR. Tedious.

Care to elaborate on the use of that instruction…?

It’s hazy, but about 28 years ago I wrote a network monitoring program in QuickBASIC. It obtained values from the replies to status requests by reading them into strings with READLN, then looking for the key words with INSTR. That gave me a position in the string, from which I calculated the position of the value to extract using VAL(MID$) (or perhaps LEFT$/RIGHT$).

VAL(MID$(A$, INSTR(A$, B$) + x, y)), perhaps.
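For comparison, the same INSTR/MID$ trick translates almost line for line into Python. This is a sketch: `extract_field` and `qb_val` are hypothetical helper names, and `qb_val` only roughly imitates QuickBASIC’s VAL.

```python
import re
from typing import Optional

_LEADING_NUMBER = re.compile(r"\s*([+-]?\d+(?:\.\d*)?)")


def qb_val(s: str) -> float:
    """Rough stand-in for QuickBASIC VAL: parse a leading number, else 0."""
    m = _LEADING_NUMBER.match(s)
    return float(m.group(1)) if m else 0.0


def extract_field(reply: str, key: str, offset: int, length: int) -> Optional[float]:
    """Python analogue of VAL(MID$(A$, INSTR(A$, B$) + x, y)).

    QuickBASIC strings are 1-based and INSTR returns 0 when the key is
    missing; str.find is 0-based and returns -1. Because both the search
    result and MID$'s start shift by one, the offset arithmetic carries
    over unchanged and only the "not found" check differs.
    """
    pos = reply.find(key)
    if pos < 0:
        return None
    start = pos + offset
    return qb_val(reply[start:start + length])
```

With `reply = "STATUS temp=42.5 load=3"`, `extract_field(reply, "temp=", 5, 4)` returns `42.5`. The fixed offset and length are what make this approach brittle: any change in the reply format means recounting characters.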

After reading more it seems that the meaning of “parse” has changed since then.

Too bad you can’t give it a few good days to get past the “chicken scratch” effect to learn Perl. It has a way of chewing through that kind of stuff in quick, short order using regular expressions (more chicken-scratch that’s worth it to learn). And of course, you could write it in Vi (think chicken-scratch) and send it out via sendmail and its black-magic ruleset… You know, it occurs to me that the original prototype language must be chicken scratch! ;-)
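As a rough illustration of the regex approach, here it is with Python’s `re` module (whose pattern syntax follows Perl’s) rather than Perl itself. The `key=value` reply format and the `parse_status` name are assumptions.

```python
import re
from typing import Dict

# Hypothetical reply format: whitespace-separated key=value pairs with numeric values.
PAIR = re.compile(r"(\w+)=(-?\d+(?:\.\d+)?)")


def parse_status(reply: str) -> Dict[str, float]:
    """Pull every key=value pair out of a reply in one pass, in any order."""
    return {key: float(value) for key, value in PAIR.findall(reply)}
```

Unlike counting character offsets, `parse_status("STATUS temp=42.5 load=3 fan=-1")` finds all three values regardless of where they sit in the line.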

You gotta learn something, sometime. There are plenty of good languages to choose from today. Coming from BASIC, I’d probably recommend Python.

You should know that most modern languages have several parsing libraries to choose from, so you don’t have to write your own parser. Antlr is just one of them; it supports several target languages, but primarily Java. In QuickBASIC, you’ll have to start by writing your own parsing engine. Extra effort, but a worthwhile endeavor.

In any case, you’ll probably have to learn enough of another language to be able to cast what you learn about parsers into QuickBASIC. I’d suggest learning about Backus-Naur form, PEG parsers and recursive descent. Recursive descent and PEG parsers are the easiest to implement and give you very powerful parsing capability. AND, unlike Lex, YACC, Antlr and others, they don’t force you into the tokenize->parse model, which constrains the kinds of languages you can parse without great effort.
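As an illustration of how directly a BNF-style grammar maps onto recursive descent, here is a minimal sketch in Python. The arithmetic grammar and the `Parser` class are hypothetical. It reads characters directly, with no separate tokenizer pass, which is the flexibility described above.

```python
class Parser:
    """Scannerless recursive-descent evaluator for the hypothetical grammar:

        expr   ::= term (("+" | "-") term)*
        term   ::= factor ("*" factor)*
        factor ::= number | "(" expr ")" | "-" factor
        number ::= digit+

    Each grammar rule becomes one method that calls the methods for the
    rules it refers to.
    """

    def __init__(self, text: str) -> None:
        self.text = text
        self.pos = 0

    def _peek(self) -> str:
        # Skip whitespace, then return the next character ("" at end of input).
        while self.pos < len(self.text) and self.text[self.pos].isspace():
            self.pos += 1
        return self.text[self.pos] if self.pos < len(self.text) else ""

    def _expect(self, ch: str) -> None:
        if self._peek() != ch:
            raise SyntaxError(f"expected {ch!r} at position {self.pos}")
        self.pos += 1

    def parse(self) -> int:
        value = self.expr()
        if self._peek():
            raise SyntaxError(f"unexpected {self._peek()!r} at position {self.pos}")
        return value

    def expr(self) -> int:
        value = self.term()
        while self._peek() in ("+", "-"):  # loop gives left associativity
            op = self.text[self.pos]
            self.pos += 1
            rhs = self.term()
            value = value + rhs if op == "+" else value - rhs
        return value

    def term(self) -> int:
        value = self.factor()
        while self._peek() == "*":
            self.pos += 1
            value *= self.factor()
        return value

    def factor(self) -> int:
        ch = self._peek()
        if ch == "(":
            self.pos += 1
            value = self.expr()
            self._expect(")")
            return value
        if ch == "-":
            self.pos += 1
            return -self.factor()
        start = self.pos
        while self.pos < len(self.text) and self.text[self.pos] in "0123456789":
            self.pos += 1
        if start == self.pos:
            raise SyntaxError(f"expected a number at position {start}")
        return int(self.text[start:self.pos])
```

`Parser("2 + 3 * (4 - 1)").parse()` evaluates to `11`; operator precedence falls out of which rule calls which, not out of any table. The same structure ports to QuickBASIC with one SUB or FUNCTION per rule and a shared position variable.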

A book I used early on to learn about parsing used C++, but I was programming in another language at the time. I recommend it: “Writing Compilers and Interpreters: An Applied Approach”.

If you do decide to pick up Python, it has several parser libraries to choose from, but I highly recommend TatSu. It lets you express your grammar in EBNF (Extended Backus-Naur Form) and produces a framework upon which you can build your tool.

Finally, a quick shout-out for another language, Perl6/Rakudo. It has an incredibly powerful parsing engine built right into the language, a feature it calls grammars.

I tried Python once, very briefly. I was attempting to get an Evernote client operational in Puppy Linux, for my mother. The two got along about as well as one would expect of a dog and a snake… I got some sort of error message that I couldn’t even begin to interpret, and that was pretty well the end of it.

Error messages are written to the intended audience which usually isn’t the person at the keyboard.

Look at PureBasic. Not only is it a modern basic-based language with pretty much every capability of C, but a few years ago one of the users did his take on Crenshaw’s compiler writing tutorial.

Looks like a mashup of C and bash that’s been clobbered enough times to make it /almost/ human-readable. No thanks, if I go that sort of route I’ll just learn bash. Plus it’s commercial, and commercial stuff on Linux makes me a little queasy — I’ve been there before, once, and it’s not a nice place to be.

I appreciate the suggestion, though…

Try this:

https://en.wikipedia.org/wiki/QB64

I was a long-time QBasic user also, and I still occasionally fire it up to test stepper motors :). I’m currently on Python and C, mostly.

Been there, still can’t parse text… still dunno how ;)

I love a job that requires a Domain-Specific Language. I got started with lex/yacc way back when that was about the only way to construct a DSL. For Java, I like JavaCC, but ANTLR’s been on my radar for a while now.

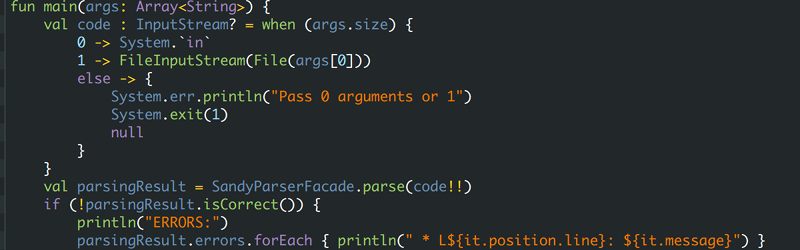

I think the example is implemented in Kotlin (https://kotlinlang.org/), not Java (although Kotlin is just a nicer Java).

For an interesting read on the subject of language compilers, check out the original Dragon book (before that George RR Martin hack made it a ‘thing’) – “Compilers: Principles, Techniques, and Tools” by Aho, Lam, Sethi, and Ullman

Funny thing is, despite its age it still goes for textbook prices.

I bought the dragon book, along with over a dozen others, a few years back. I brought an entire suitcase full of them on vacation one year. Even though the dragon book is the most widely referenced, I did not find anything useful in it, and don’t even have one bookmark in it. Every topic is covered with better clarity in one of the other books. The dragon book has “no” information on recursive descent (which is how most people write their parsers) and really offers very little. I found Hubbell’s and Mak’s books more useful for RD. Perhaps if your goal is to write an LR compiler, Aho might be helpful, but then you wouldn’t be looking at ANTLR.

The only thing I dislike about Antlr is that it continues the tokenize->parse model of parsing, which constrains the kinds of languages you can parse without extra effort. Parr has made some efforts to support tokenizer-less parsing, but the last time I looked at it, it was really clumsy. Otherwise, it is a fantastic tool. Today, if I am looking to produce a custom parser, I am looking for a recursive descent parsing library, often called a PEG parser. They are flexible, and can be fast and efficient if they support pruning and memoization.
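The memoization point is what is usually called packrat parsing: cache each rule’s result at each input position so that backtracking never re-parses the same text. Below is a minimal hand-written sketch in Python, not the API of any particular PEG library; the grammar and the `Packrat` class are hypothetical, and pruning is not shown.

```python
from typing import Callable, Dict, Optional, Tuple


class Packrat:
    """Tiny packrat (memoizing PEG) parser for the hypothetical grammar

        S <- A "x" / A "y"      # ordered choice: try first, backtrack on failure
        A <- "a" A / "a"        # one or more 'a', written recursively

    Every rule returns the end position on success or None on failure.
    Results are memoized per (rule, position), so retrying A at the same
    position after backtracking is a table lookup instead of a re-parse.
    """

    def __init__(self, text: str) -> None:
        self.text = text
        self.memo: Dict[Tuple[str, int], Optional[int]] = {}
        self.evaluations = 0  # how many times a rule body actually ran

    def _memo(self, name: str, pos: int,
              body: Callable[[int], Optional[int]]) -> Optional[int]:
        key = (name, pos)
        if key not in self.memo:
            self.evaluations += 1
            self.memo[key] = body(pos)
        return self.memo[key]

    def _char(self, pos: int, ch: str) -> Optional[int]:
        # Match a single literal character, returning the position after it.
        return pos + 1 if pos < len(self.text) and self.text[pos] == ch else None

    def rule_a(self, pos: int) -> Optional[int]:
        def body(p: int) -> Optional[int]:
            after_a = self._char(p, "a")
            if after_a is None:
                return None  # both alternatives need a leading 'a'
            rest = self.rule_a(after_a)  # first alternative: "a" A
            return rest if rest is not None else after_a  # fallback: "a"
        return self._memo("A", pos, body)

    def rule_s(self, pos: int) -> Optional[int]:
        def body(p: int) -> Optional[int]:
            for terminal in ("x", "y"):  # ordered choice
                after_a = self.rule_a(p)  # cache hit on the second alternative
                if after_a is not None:
                    end = self._char(after_a, terminal)
                    if end is not None:
                        return end
            return None
        return self._memo("S", pos, body)

    def matches(self) -> bool:
        """True if S consumes the entire input."""
        return self.rule_s(0) == len(self.text)
```

On `"aaay"`, the first alternative of S parses all the `a`s and then fails on `x`; the second alternative gets A’s result straight from the memo table, so `evaluations` ends at 5 (S once, A once per position) instead of re-running A for every `a`.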

I’d never heard of ANTLR, but then again, I was using lex and yacc before Java (nee Oak ;-) existed.

I’d been laid off in October of 1991. I bought a Sun 3/60 and taught myself Unix admin for 4 months. Then I took on lex and yacc using the usual suspects.

My first contract assignment turned out to be a perfect fit for lex and yacc. They had allocated 2 months for completion. I was done in 2 weeks. The parser was absolutely bulletproof. The language was a very simple file format description language, so exhaustively testing every possible error was practical. As a result the user got a clear statement of what was wrong if an error was encountered. The code was in service for many years afterwards without requiring any maintenance.

Sadly I’ve never needed to use them since, so I’d pretty much have to learn it all over again if the need arose. But the 6 weeks I spent learning them was the best educational bet I ever made.

Good old PERL v5.x & Regular Expressions piped to something like a Bayesian and/or neural prune/select processor – then onward feed to higher app levels (yeah likely more code freely available in CPAN). Hasn’t this been done before (like a million times)? Dig through CPAN, I think so.

ragel is better
