As hackers, we like to think of ourselves as a logical bunch. But the truth is, we are as subject to fads as the general public. There was a time when the cool projects swapped green LEDs out for blue ones or added WiFi connectivity where nobody else had it. Now all the rage is to connect your project to a personal assistant. The problem is, this requires software. Software that lives on a publicly accessible network somewhere, and who wants to deal with that when you’re just playing with custom Alexa skills for the first time?

If you have a computer that faces the Internet, that’s fine. If you don’t, you can borrow one of Amazon’s, but then you need to understand their infrastructure which is a job all by itself. However, there is a very simple way to jump start an Alexa skill. I got one up and running in virtually no time using a website called Glitch. Glitch is a little bit of everything. It is a web hosting service, a programming IDE for Node.js, a code repository, and a few other things. The site is from the company that brought us Trello and helped to start Stack Overflow.

Glitch isn’t about making Alexa skills. It is about creating web applications and services easily. However, that’s about 90% of the work involved in making an Alexa skill. You’ll need an account on Glitch and an Amazon developer’s account. Both are free, at least for what we want to accomplish. Glitch has some templates for Google Home, as well. I have both but decided to focus on Alexa, for no particular reason.

Admittedly, my example skill isn’t performing a particularly difficult task, but it is a good starter and if you want to develop your own, it will give you a head start. Although I used a particular tool, there’s no reason you could not move from that tool to your own infrastructure later, if you had the need to do so. One nice thing about Glitch is that you can share code live and people can remix it. Think of a GitHub fork, but where you can try running my copy and your copy is instantly live, too. Turns out for the Alexa skills, having it live isn’t as useful as you’d like because you still have to tell Amazon about it. But it does mean there are example Alexa skills (including mine) that you can borrow to get yours started, just like I borrowed one to start mine.


Do You Need It?

The first question you might ask yourself is do you even need an Alexa skill? I recently got Alexa to control my 3D printers by using IFTTT with no software development at all required. However, if you really want to claim you work with a virtual assistant, you are going to have to write some code somewhere.

Of course, there’s the bigger philosophical question: do you need to do any of this? I’ll admit, I find it useful to have my 3D printers on voice control because I might be making adjustments with both hands and I don’t have to fumble with buttons to have the machine do a homing cycle, for example. I’m less sold that I need a virtual assistant to launch my drone. Then again, maybe that’s what you want to do and that’s up to you.

Getting Started

If you don’t already have one, you might as well go ahead and sign up for an Amazon developer’s account. Then head over to Glitch and register there, too. There are at least two templates for building an Alexa skill: a bare-bones one and a more involved one that retrieves weather forecasts. If you are looking at the running page for one of them, it might not make much sense. Remember, the web server is meant to talk to Alexa, not people. In the top right corner is an icon with a pair of fish. If you click there, you can view the source or you can remix it.

I decided to remix the weather forecast service since I thought it was a better example. Then I cut away all the weather-related code (except some of the documentation) and wrote a simple JavaScript function:

    function get_fact() {
      var factArray = [
        'Hackers read Hackaday every day',
        'You know you are 3D printing too much when you tell someone you are extruding mustard on your hot dog',
        'The best microcontroller is the one already programmed to do what you want',
        'We can all agree. All software has bugs. All software can be made simpler. Therefore, all programs can be reduced to one line of code that does not work.',
        'I hate talking to flying robots. They just drone on.',
        'If you call your morning cup of coffee installing Java, you need a hobby',
        'I have heard that in C functions should not call each other because they usually have arguments',
        'I refused to learn C plus plus. I saw see-out being shifted left hello world times and gave up',
        'If cavemen had thought of binary we could all count to 1023 on our fingers',
        'If you can\'t hack it, you don\'t own it.'
      ];
      var randomNumber = Math.floor(Math.random() * factArray.length);
      return factArray[randomNumber];
    }

The only thing left to do is to hook the code up to the Web service framework.

Express

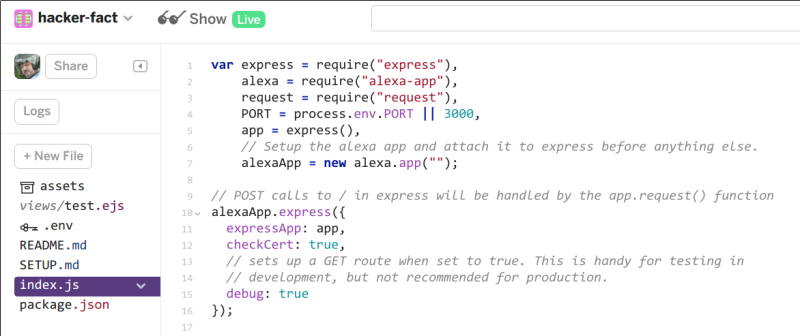

Glitch automatically sets up a library called Express in this project. It essentially is a simple Web server. Once you create the main app object, you can set up routes to have your code execute when someone calls a particular web service. It also includes an object that represents an Alexa service. I didn’t have to write the code to set this up, but here it is:

    // Load the two libraries (package names as used by the Glitch template).
    var express = require("express");
    var alexa = require("alexa-app");

    var app = express();

    // Setup the alexa app and attach it to express before anything else.
    var alexaApp = new alexa.app("");

    // POST calls to / in express will be handled by the app.request() function
    alexaApp.express({
      expressApp: app,
      checkCert: true,
      // sets up a GET route when set to true. This is handy for testing in
      // development, but not recommended for production.
      debug: true
    });

There are two methods I wanted to provide. One for when someone opens my skill (I called it Hacker Fact, by the way — it gives mildly humorous facts about hacking and related things). The other method will fire when someone says, “Alexa, tell Hacker Fact to give me a fact.” Or anything similar.

That last bit is known as an intent. Intents can have utterances (like “give me a fact”) and they can have slots. I didn’t use any slots (but the weather example did). Using slots you can have some part of the person’s command fed to you as an argument. For example, I could make it so you could say, “Alexa, tell Hacker Fact to give me a fact about Arduinos.” Then I could build the intent so that the next word after it hears “about” is a slot and parse it in my code.
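To make the slot idea concrete, here is a minimal sketch of what a topic-aware handler could look like. It is not part of the Hacker Fact skill: the slot name `Topic`, the `factsByTopic` table, and both function names are assumptions for illustration. The handler uses the same `(request, response)` shape as the intents in this article and reads the slot with `request.slot()`, which is how the alexa-app library exposes slot values.

```javascript
// Hypothetical topic table; the two jokes are borrowed from the fact list above.
const factsByTopic = {
  drones: "I hate talking to flying robots. They just drone on.",
  binary: "If cavemen had thought of binary we could all count to 1023 on our fingers"
};

// Look up a fact for the spoken topic, falling back to an apology
// when the topic is missing or unknown.
function factAbout(topic) {
  const key = String(topic || "").trim().toLowerCase();
  if (Object.prototype.hasOwnProperty.call(factsByTopic, key)) {
    return factsByTopic[key];
  }
  return "Sorry, I do not know any facts about " + (key || "that") + ".";
}

// Intent handler: "Topic" is an assumed slot name that would also have to be
// declared in the intent's "slots" object and in the Amazon console.
function factAboutHandler(request, response) {
  response.say(factAbout(request.slot("Topic")));
}
```

To wire this up you would pass `factAboutHandler` as the handler argument of an `alexaApp.intent()` call whose `"slots"` object names `Topic` and its type. The slot type and the placeholder syntax inside the utterances depend on the library version, so they are left out here.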

You probably won’t need it, but if you are curious to learn more about Express, check out this video.

The Two Methods

Here are the two methods:

    // Runs when someone opens the skill without asking for anything specific.
    alexaApp.launch(function(request, response) {
      console.log("App launched");
      response.say('Hi from your friends at Hackaday. Ask me for a fact to learn something interesting or possibly funny.');
    });

    // Runs when Alexa matches one of the utterances below.
    alexaApp.intent("HackerFact", {
        "slots": {},
        "utterances": [
          "Tell me a hacker fact",
          "Give me a hacker fact",
          "tell me a fact",
          "give me a fact",
          "go",
          "fact"
        ]
      },
      function(request, response) {
        console.log("In Fact intent");
        response.say(get_fact());
      }
    );

Note the “HackerFact” intent has an array of utterances that will be sent to Amazon. You can find the entire HackerFact code online. Don’t forget, you can view the source or remix with the two fish icon at the top right of the page.
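If you are curious what the library is doing for you, the exchange with Amazon is just JSON over HTTPS. The following is a rough sketch of that dispatch, not the library's actual code: `speak` and `handleAlexaRequest` are made-up names, and the real library also verifies that the request really came from Amazon (the `checkCert` option above), tracks sessions, and more. Keeping the session open after a launch is a choice made for this sketch.

```javascript
// Build the JSON reply Alexa expects for a plain spoken answer.
function speak(text, endSession) {
  return {
    version: "1.0",
    response: {
      outputSpeech: { type: "PlainText", text: text },
      shouldEndSession: endSession
    }
  };
}

// Route a parsed request body by request type and intent name.
// getFact is any function returning a string, such as get_fact() above.
function handleAlexaRequest(body, getFact) {
  const req = body.request || {};
  if (req.type === "LaunchRequest") {
    // Skill opened with no specific request: greet and keep listening.
    return speak("Hi from your friends at Hackaday. Ask me for a fact.", false);
  }
  if (req.type === "IntentRequest" && req.intent && req.intent.name === "HackerFact") {
    return speak(getFact(), true);
  }
  // Anything else (stop, cancel, unknown intents) ends the session.
  return speak("Goodbye.", true);
}
```

For example, calling `handleAlexaRequest({ request: { type: "IntentRequest", intent: { name: "HackerFact" } } }, get_fact)` returns a reply object whose `outputSpeech.text` is one of the facts.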

All of the code is in the index.js file. There are a few other files, but for this task, you probably won’t make any changes to them other than perhaps changing some text in the package file or documentation.

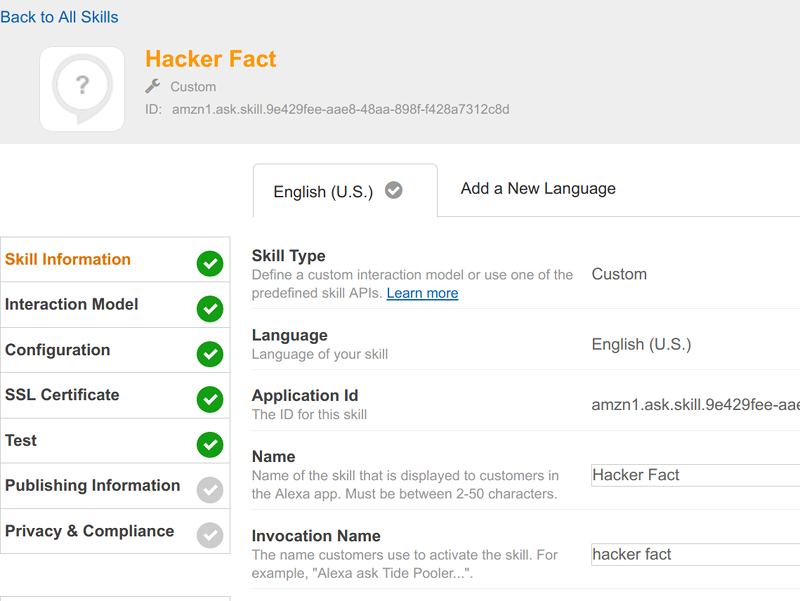

On to Amazon

Oddly enough, the next part is probably harder. From the front page of the Amazon developer’s site, you’ll want to select Alexa skills and then press the “Add a New Skill” button. A lot of the entries you’ll see have to do with more complex skills. Also, I’m not going to publish my skill, but it will still show up in my account. If you do some paperwork, you can submit your skill for testing with selected users or even publish it outright.

Here’s a rundown of the fields you need to fill in on the Skill Information tab:

- Skill type = Custom Interaction Model

- Name = Whatever display name you like

- Invocation Name = This is what people will ask Alexa to use (e.g., “Alexa, tell Hacker Fact…” would mean my invocation name was Hacker Fact)

I’ve had bad luck, by the way, changing the invocation name after it has been set, so think hard about that one.

The next page (Interaction Model) looks complicated, but it isn’t, thanks to the libraries provided by Glitch. Open your Glitch project. If you are looking at the source, click “show” in the upper part of the screen. The default project causes a data dump to appear on the main page (which, normally, no one sees) that includes the information you need for this page.

The Intent Schema box needs everything after “Schema:” and before “Utterances:” from your main page. That includes the braces. My Intent Schema looks like this:

    {
      "intents": [
        {
          "intent": "HackerFact"
        },
        {
          "intent": "AMAZON.CancelIntent"
        },
        {
          "intent": "AMAZON.StopIntent"
        }
      ]
    }

The rest of the page has some lines after “Utterances:” and those lines are what the last box needs. The middle box can remain empty, for this example.
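The data dump is nothing magic. As a sketch of how the two text boxes relate to the intent definitions, the function below builds both from a plain list of intents. `buildInteractionModel` is a hypothetical helper, not something the Glitch template provides, and the one-line-per-utterance format (intent name, a space, then the phrase) is my understanding of what the console expects.

```javascript
// Build the Intent Schema JSON and the Sample Utterances text
// from a list of { name, utterances } objects.
function buildInteractionModel(intents) {
  const schema = {
    intents: intents.map(function (intent) {
      return { intent: intent.name };
    })
  };

  // One line per phrase, prefixed with the intent that should handle it.
  const lines = [];
  intents.forEach(function (intent) {
    (intent.utterances || []).forEach(function (phrase) {
      lines.push(intent.name + " " + phrase);
    });
  });

  return {
    schema: JSON.stringify(schema, null, 2),
    utterances: lines.join("\n")
  };
}

const model = buildInteractionModel([
  { name: "HackerFact", utterances: ["tell me a fact", "go"] },
  { name: "AMAZON.CancelIntent" },
  { name: "AMAZON.StopIntent" }
]);
console.log(model.schema);
console.log(model.utterances);
```

Built-in intents such as `AMAZON.StopIntent` appear in the schema but need no utterances of their own, which is why they contribute nothing to the second box.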

More Steps to Connect Glitch with Amazon

In the Configuration tab, you can select HTTPS and then enter the URL from Glitch. To find that URL, open the project name at the top left of the screen and you’ll see it along with a copy button. Mine, for example, is https://hacker-fact.glitch.me. Leave account linking turned off, and you can leave all the optional boxes alone.

On the next screen, you’ll want to pick “My development endpoint is a sub-domain of a domain that has a wildcard certificate from a certificate authority” because that’s how Glitch works. That’s all you need on that page.

At this point, your skill should show up on any Alexa associated with your account (including Alexa apps like Reverb on your phone). You can also do tests here in the console to see if things work.

Release the Hounds

This is enough to get your code working with your Alexa. I won’t go into releasing the skill for others to use, but that is an option if you want to keep going on your own. You can use Glitch for other things too, including projects for the Raspberry Pi and a few other IoT-type platforms. If you look around, you’ll probably find something interesting.

Of course, you could set up your own server to do all the things that Glitch is doing for you — maybe even on a Raspberry Pi. You can also let Amazon host your code as a Lambda function (that is, a web service with no fixed web server; see the video, below). If you get a taste for writing skills, the Amazon documentation isn’t bad, but sometimes there’s value to just jumping in and getting something working.

Any charge for using Amazon’s Cloud?

Only if you exceed a certain amount of processor time or something, which is pretty large. And if you’re using that much, they are probably monetising your skill anyway, which should allow you to keep developing.

Alexa torrent network incoming…

Do we need Alexa? Why?

It’s been a while since I tried to install and run any voice recognition software. I think I remember doing so as early as the 1990s. Of course at that time it was pretty rough and not really up to the task of being much more than a curiosity. Surely the situation has improved though. Between home computers becoming more powerful and developers having had 20 some years to continue development are there no good free, open source voice recognition programs out there? If not, are there no affordable commercial ones?

I’m a little uncomfortable with the idea of installing a microphone that streams to some third party in my home. Why isn’t anyone doing these sorts of projects using their own instance of some voice recognition software running on their own raspberry pi or old desktop computer sitting in their own home? With so many makers rolling Alexa or Google Talk projects it seems like this should be a thing already!

I know, I could start Googling “voice recognition open source.” I probably will, but I don’t really have the time to install a bunch of applications and test out their performance. I’m guessing the community here already knows what is missing to make this happen.

There are a few out there but the quality is low and installation is often painful. Closest thing is Mycroft although it uses cloud back ends also, but I think you can host your own backends, it just isn’t the default and probably not well supported. There’s also Jasper. Maybe a few others.

The problem is Google and Amazon and even Apple have spoiled us. CMUSphinx is probably the best open voice recognition and it is nowhere near the speed and accuracy that we have with the cloud services.

I must lead a boring life, but I don’t think anyone wants to hear what goes on in my house. Phones were/are notoriously insecure, but we forgot about it because we are lost in the volume of calls (usually). In the movie Gorky Park, the characters jammed pencils in the dial phone to break the connection back to the central office before having forbidden conversations for fear of eavesdropping. Harder to do that now.

“I must lead a boring life, but I don’t think anyone wants to hear what goes on in my house.” Incorrect, advertisers definitely WANT to hear what goes on in your house and not for nefarious purposes, but quite simply so that you can help make their jobs easier. It has nothing to do with how boring or exciting your life is because either end of the spectrum helps them gather demographic data as well as better insight into motivations and how to sell you on a thing or idea.

The really scary thought is the similarity between advertising and population control: both take the same input data and have the same goals. They want as much information about you and your habits as possible, and then want to use that information to affect your emotions and decisions. That is really what makes these home assistants so creepy, and it was a major plot point in Orwell’s 1984.

Oh man, Mycroft! Takes me back to Lirc and GIRDER days :)

My earliest fussing with voice recognition ended up going in a totally different route. I ended up setting up my first one with a microphone pointed out the window that could identify (some of) my apartment neighbors’ cars by the sound of their engines. It could also identify birds by their chirps, but my main problem was with filtering background noise and bogus events. I would probably shudder if I fired it up and looked at my old “work” haha. Great fun back then though :)

I’m sure VADs are fun.

http://cdn.intechweb.org/pdfs/104.pdf

Page 7, SnR difficulties was definitely my rig’s problem. Good PDF, Ostracus. As you can imagine with an outdoor mic, the gain was untameable by myself for unattended usage. I tried a couple of tricks like running it thru an outboard gate that helped but meh the software wasn’t really designed for my usage. Indeed it was fun :) I had it able to control my mouse by humming a freq from mid to higher and amplitude as the Y axis but it only worked very close to the mic/computer so it was kinda pointless (thus LiRC and GiRDER with a remote mouse) and of course various macros to impress the 1990s crowd haha. I kinda wish I had pursued it and AI a bit more than a hobby these days but hindsight is 20/20.

I’m curious how many people here use home-assistant and snips (https://home-assistant.io/components/snips/), it looks promising. Biggest issue is probably training offline voice recognition, the large companies have huge data sets to work with.

Great article! I was a skeptic who didn’t believe the hype about Alexa, but then I got one for christmas. It turns out to be sort of magical to be able to give orders to play this song or skip that one while I’m in the middle of cooking or doing dishes.

what could possibly go wrong…

Is there a site where you can see more creative things done with these intruders? Seriously, skipping songs while cooking dinner is not a selling point, turning lights on and off is not a selling point.

Alexa bringing up a recipe on my tv in my kitchen might be. Reading it to me might be more impressive. Fetching messages from emails might. Perform some serious number crunching? Activate my pump house light if the temp falls below 25. Tell me what my power usage is for the day? Week? Month?

Glitch? Skill? Huh? *crawls back under rock*

If you want a self-hosted option, check out the Flask-Ask project. I run this on a Pi and I can execute arbitrary code with voice commands.

The downside to Alexa is that the commands for custom skills are clunky. i.e. all commands have to be of the format “ask appname to [do action]”.

There is some way to do better, but I haven’t looked into it. They may even restrict it to keep from polluting the namespace. When I first got the Alexa, it had to do that for Harmony remotes. “Computer, ask Harmony to turn on TV.” But they later released a skill where Alexa now knows when I say “Turn on TV” I mean to use Harmony. The problem is it only knows about one Harmony and the other one you have to do something strange like IFTTT or Younomi or…

And Google Home not only still makes you ask for Harmony, but worse if you forget it will say “Sounds like you want Harmony. Do you want to try that?” I guess that’s OK but I find it a little offensive that it KNOWS what I want and still wants to confirm. I could see if it were “Sounds like you want ICBM launch application. Do you want to try that?” But for my TV. It also frustrates me that if I am sleepy and it can’t recognize my voice, it won’t operate Harmony — I really don’t care if people change my TV. I get it for door locks or what not.

One of the things I dislike about both is they are dumbed down. Why can’t I have a “hacker” section in the web site configuration that lets me do things like enable/disable voice identification for particular services (you must recognize my voice to unlock the door but anyone can change TV channels). Or, as some have said, point to an arbitrary web service.

I really want to be excited about this, but it looks incredibly complex to get such a simplistic end result. Like the previous comment, I quickly got frustrated with the “Alexa ask APPNAME to” format of commands. Using “Alexa run APPNAME” is a little better, but only works if you have no commands to pass along.

Plus the whole setup is too convoluted for my tastes. I want the thing to run a Python script on the Pi that’s sitting 5 feet away from it. The idea that to do that I need to go out to the Internet and come back, and set the Skill up with Amazon as if I was going to release it publicly…ugh.

I actually agree with you Dan – I am working on a project that would create an Alexa skill that would allow her to be used with ANY computer capable of communicating over a LAN (TCP, UDP). If there are any Alexa developers out there willing to help (including Al Williams) please email me at RobotBASIC@yahoo.com.