First, Google gradually improved its WaveNet text-to-speech neural network to the point where it sounds almost perfectly human. Then they introduced Smart Reply, which suggests possible replies to your emails. So it’s no surprise that they’ve announced an enhancement for Google Assistant called Duplex, which can have phone conversations for you.

What is surprising is how well it works, as you can hear below. The first is Duplex calling to book an appointment at a hair salon, and the second is it making a reservation at a restaurant.

Note that this reverses the roles when talking to a computer on the phone. The computer is the customer who calls the business, and the human is on the business side. The goal of the computer is to book a hair appointment or reserve a table at a restaurant. The computer has to know how to carry out a conversation with the human without the human knowing that they’re talking to a computer. It’s for communicating with all those businesses which don’t have online booking systems but instead use human operators on the phone.

Not knowing that they’re talking to a computer, the human will therefore speak as they would with another human, with all the pauses, “hmm”s and “ah”s, changes of speed, dropped words, and even changes of context in mid-sentence. There’s also the problem of multiple meanings for a phrase. The “four” in “Ok for four” can mean 4 pm or four people.

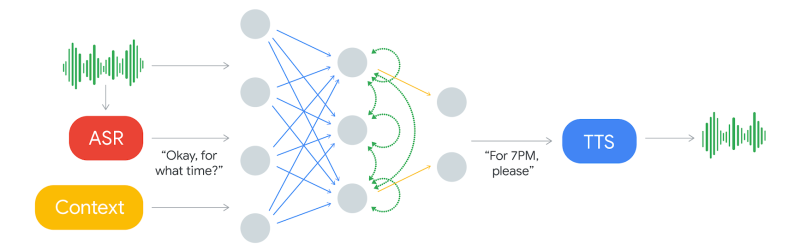

The component which decides what to say is a recurrent neural network (RNN) trained on many anonymized phone calls. The input is: the audio, the output from Google’s automatic speech recognition (ASR) software, and context such as the conversation’s history and the parameters of the conversation (e.g. book places at a restaurant, for how many, when), and more.
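To make the role of that context concrete, here is a minimal sketch of how conversation history could settle the “Ok for four” ambiguity. It is a toy keyword rule, not how Duplex's RNN works; `TurnInput`, its field names, and `interpret_four` are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class TurnInput:
    """Hypothetical bundle of the inputs the article lists for one turn."""
    audio_features: List[float]                        # stand-in for the raw audio
    asr_text: str                                      # text from speech recognition
    history: List[str] = field(default_factory=list)   # earlier utterances
    task: Dict[str, str] = field(default_factory=dict) # e.g. party size, time


def interpret_four(turn: TurnInput) -> Optional[str]:
    """Toy rule: read an ambiguous 'four' using the previous utterance."""
    if "four" not in turn.asr_text.lower():
        return None
    previous = turn.history[-1].lower() if turn.history else ""
    if "people" in previous:
        return "party_size=4"
    if "pm" in previous or "o'clock" in previous:
        return "time=16:00"
    return "ambiguous"
```

With `history=["A table for how many people?"]` the phrase resolves to a party size, while with no history at all the rule can only report that it is ambiguous, which is the point of feeding the history in.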

Producing the speech is done using Google’s text-to-speech technologies, WaveNet and Tacotron. “Hmm”s and “ah”s are inserted for a more natural sound. Timing is also taken into account. “Hello?” gets an immediate response. But they introduce latency when responding to more complex questions since replying too soon would sound unnatural.
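The timing idea can be sketched in a few lines. This is only an illustration under assumed numbers: the word-count heuristic, `per_word_s`, and `cap_s` are made up here, and Google has not said that this is how the delay is chosen.

```python
def response_delay(utterance: str,
                   per_word_s: float = 0.15,
                   cap_s: float = 1.5) -> float:
    """Return a pause (in seconds) to wait before replying.

    Assumed heuristic: very short utterances such as "Hello?" get an
    immediate answer; longer ones earn a pause that grows with length,
    up to a cap so the caller never goes silent for too long.
    """
    words = utterance.split()
    if len(words) <= 2:          # e.g. "Hello?" -> answer right away
        return 0.0
    return min(per_word_s * len(words), cap_s)
```

Calling `response_delay("Hello?")` gives no pause, while a full question about available time slots gets a short one.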

There are limitations though. If it decides it can’t complete a task then it hands the conversation over to a human operator. Also, Duplex can’t handle a general conversation. Instead, multiple instances are trained on different domains. So this isn’t the singularity which we’ve talked about before. But if you’re tired of talking to computers at businesses, maybe this will provide a little payback by having the computer talk to the business instead.
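The two limitations, per-domain instances and handing over to a human, fit together naturally. The sketch below is hypothetical throughout: the `HANDLERS` table, the confidence score, the `threshold`, and the `HANDOFF_TO_HUMAN` marker are assumptions used to show the shape of such a dispatcher, not Google's design.

```python
from typing import Callable, Dict, Tuple

# Each hypothetical domain handler returns (reply, confidence in [0, 1]).
Handler = Callable[[str], Tuple[str, float]]

HANDOFF = "HANDOFF_TO_HUMAN"


def salon_caller(utterance: str) -> Tuple[str, float]:
    """Toy stand-in for a model trained only on hair salon bookings."""
    if "what time" in utterance.lower():
        return ("Around 12 pm, please.", 0.9)
    return ("", 0.1)             # anything else is outside what it knows


HANDLERS: Dict[str, Handler] = {"hair_salon": salon_caller}


def respond(domain: str, utterance: str, threshold: float = 0.5) -> str:
    """Use the domain's handler, or hand off when unsure or out of domain."""
    handler = HANDLERS.get(domain)
    if handler is None:          # no instance trained for this domain
        return HANDOFF
    reply, confidence = handler(utterance)
    return reply if confidence >= threshold else HANDOFF
```

A question the salon handler recognizes gets a reply, while small talk or an unknown domain falls through to the human operator.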

On a more serious note, would you want to know if the person you were speaking to was in fact a computer? Perhaps Google should preface each conversation with “Hi! This is Google Assistant calling.” And even knowing that, would you want to have a human conversation with a computer, knowing that its “um”s were artificial? This may save time for the person whom the call is on behalf of, but the person being called may wish the computer would be a little more computer-like and speak more efficiently. Let us know your thoughts in the comments below. Or just check out the following Google I/O ’18 keynote presentation video where all this was announced.

So creepy…it will be interesting, ethically, whether this becomes mainstream or is put on the back burner.

They’re pitching it as if it’ll be consumer-oriented, but it will almost definitely be business-facing. And it probably won’t be used to do things that make your life easier in most cases. This is possibly incredibly exclusionary, irritating tech being sold as helpful, time-saving tech.

Consider that we’ve had the technology to automate calling and setting an appointment since the 1980s or so. It’s not difficult to have a computer dial a number and play a recording that goes “HELLO THIS IS A ROBOT I’D LIKE AN APPOINTMENT FOR 2:30 PM THANK YOU.” This software doesn’t do that. This software helps to deceive people into thinking they’re talking to a human. Cool? Yeah maybe. Great leap for AI? Sure. Good for you? Probably not. The “uhhs” and “umms” are especially eyebrow-raising. It doesn’t have to be designed to be deceptive. Oh, you might say, they’re just making it sound human to make humans comfortable. Yeah sure.

Enjoy the fact that you’ll never be able to get a human on the phone again when you call your insurance or internet company.

https://pbs.twimg.com/media/CSP2Oe8W0AENG9o.jpg

The factory of the future will have only two employees, a man and a dog. The man will be there to feed the dog. The dog will be there to keep the man from touching the equipment.

-Warren G. Bennis

Absolutely! It seems like it would be a violation of trust. Though we would all prefer to talk to a real human on the phone, I would much prefer a robotic-sounding AI attendant over a human-sounding one. Unfortunately, most companies are far more focused on profit than the people they are supposed to be serving.

This will no doubt be bought by big business who are solely ‘profit ahead of people’, and everyone knows they offshore call centers to save bucks. It’s almost impossible to get somebody from a big company who knows more than ‘turn it off and back on again’, so perhaps this will actually improve the situation!

“It’s almost impossible to get somebody from a big company who knows more than ‘turn it off and back on again’, so perhaps this will actually improve the situation!”

Nope. It just means you will get put through to an AI, who will arrange a call back from another AI that will tell you to turn it off and back on again, and bill you $200 plus tax automatically by speaking to your iPhone and sounding just like you, thus eliminating the humans at both ends of the conversation entirely.

Actually, if this technology is as good as Google claims it is, then perhaps this has already happened. How would we know?

/me phones up foilhats-r-us to arrange an appointment to get his tinfoil hat adjusted.

The uncanny valley just got a whole lot more frightening!

But I totally agree; this will probably be used on every robocaller to fool people into listening to it instead of just hanging up on what sounds like Stephen Hawking’s computer voice. I already get so many robocalls and wrong numbers that I only assign audible ringtones to blood relations.

I actually took a phone number in an area code i have no business, friends, or relatives from and downloaded an app to block that area code and all the ones around it. Most of the voip spoofers assume you live in the area your number is from and imitate nearby numbers.

It’s a nuclear option. I really wish the fcc would stop slurping corporate demon dick for like five seconds and add some kind of security measures to voip, but they’ll never be done servicing cox and time warner and comcast.

“but they’ll never be done servicing cox “.. would those be corporate cox too? ;¬)

Does that matter? The truth is, a human caller will always be available when the context becomes too complicated for a machine to handle. All you can do is ask if you are talking to a real person. Ethically, it would be very wrong for them to lie to you about something like this.

Yes I’m sure everyone will continue being extremely ethical and make it very straightforward to bypass this AI and talk to the two human operators left on staff for the North American continent. In fact, I bet they’ll just connect us to the ceo’s personal cell! That’s so nice of them.

I’m sorry for the bitterness. I worked at a tech support call center when I was very young and I know how the sausage is made. Ethics never enters the mind there.

I wonder if this will/could put phone sex operators out of business? Will it drive everyone to video instead if all of a sudden it gets good enough that people accept or even prefer speaking with a cloud based machine? What has been happening to the business side of phone sex over the last decade or two anyway?

Phone sex operators are still in business? Haha, oh man.

Millions of US based people still use dial-up.

“In Securities and Exchange Commission filing from 2015, AOL said its average subscriber has been paying for dial-up service for almost 15 years.”

“AOL still has 2.16 million dial-up customers in the U.S., according to the company’s 2015 first-quarter earnings report.”

Most dialup services users aren’t actually people, but legacy systems like home security devices that still need an uplink.

Up until a couple of years ago, there was a Danish-made wind turbine nearby that had to be frequently reset by calling it over the landline with a 9600 baud modem, and it would call you back whenever it lost grid sync or had any other malfunction. The electric company had an old 486DX66 sitting in the corner running DOS just to communicate with their wind turbines.

These things have a 25-year design lifespan, so the oldest systems are from the early 90’s, and the actual designs can go back to the late 80’s.

Bots have been pretending to be commenters online for many many years already though.

In fact you might be a bot, it’s hard to tell.

I’m pretty sure I had ‘people’ chat with me that were highly likely bots long ago already, experiments from students in chat groups. But because it can be hard to tell if it’s a very odd person or a bot I tend to play along.

Seems like the problem these days isn’t so much in computers sounding like more or less intelligent but in people sounding like more or less stupid … the tricky part seems to be to build artificial stupidity. Maybe that is exactly what they missed all those decades after Turing’s tests were first run.

The “gotcha” in the restaurant sample sounds like SAM. But that’s fine. I sound like SAM when I haven’t had coffee.

“artificial stupidity” – That was a good one :) Seriously, I think you are on to something here…

Then, the businesses will also employ this machinery to handle the conversation for them. Lovely.

I foggily remember reading a sci-fi story decades ago about a tech city from which all people vanished but it continued to operate as before…

“There Will Come Soft Rains” from Bradbury’s Martian Chronicles?

What a fine example of how broken the whole thing is. Perhaps the computer in my phone can “talk” to the computers that call to sell me credit cards, and leave me the hell out of it.

But really, this is supposed to be a good thing?

“is this supposed to be a good thing” was a common initial reaction to cars, telephones, aeroplanes, computers and the internet.

@harritech said: “is this supposed to be a good thing” was a common initial reaction to cars, telephones, aeroplanes, computers and the internet.

“is this supposed to be a good thing” is an open question bounded by the context of this HaD post – and I think it is a GOOD question.

I just wish our Elected Politicians asked themselves the SAME question (on our behalf) EVERY TIME they take Re-Elect-Me money from special interests – like The out-of-control Google Behemoth!

My point was, like all new tech, the question is moot. You can’t uninvent it. It just is.

More important, I think, are the questions “what problems does this create / solve” and “how can we maximise the benefits of this and reduce the harms”, and “how the heck do we explain this to our elected politicians!”.

And just think of how much better our environment in general would have been if the “is this supposed to be a good thing” had been asked first, rather than getting deeply involved into the consequences, then asking “how do we fix everything”? e.g. coal generation for starters, etc, etc, etc.

Agreed. But we’d need science literate leaders for that, and most western nations govs are dumbing down, sadly.

Sure, it can’t be uninvented, just like the bomb can’t be uninvented.

Apart from a few megalomaniacs, society has decided that although it was invented, we don’t have to use it.

Well, coal came into use a very long time ago, and in its time brought improvement of air quality in crowded cities, in comparison with wood as household fuel. For the coal, there was never that time to decide ahead of deployment. Instead it is an example of long used technology growing past its point of balance between convenience and harm.

>”and in its time brought improvement of air quality in crowded cities”

Actually, it didn’t. Cities were less crowded because the motive power to run factories wasn’t available and manufacturing was concentrated up the hills with water power, or in the nearby woods where charcoal could be made.

With coal power, the manufacturing could be moved closer to the final consumers – the bourgeoisie upper classes – and so the cities became overcrowded with the insult to injury that now all the factory smokestacks belching out sulfurous ashes would cause deadly smog.

Many were increasingly of the opinion that they’d all made a big mistake coming down from the trees in the first place, and some said that even the trees had been a bad move, and that no-one should ever have left the oceans.

Had to. We were drowning in plastic.

That’s from Hitchhiker’s Guide to the Galaxy, no? (c:

Curious if your automated program can even legally consent to anything? What if they think you signed up for a card after talking to it? Did you even form a contract if you never even talked with them in the first place?

do you form a contract if you order from amazon or a similar webshop ? the ordering is done by a computer, the order processing is done by a computer, the product is put in a box by a human for now, but that will also be replaced by a robot someday. and the only information the human gets, is to put item A in box A. everything is automated, the invoice is created automatically, the payment is done by computers charging your paypal or credit card.

why should there be any difference in talking to a computer and writing to a computer. if the company is willing to use these machines, they are also responsible for any mistake they make. the calls will most likely be recorded, if you signed up for anything you don’t want by mistake, you can complain, and only then will a human review the case, and thanks to the recording, there will be no doubt. if the machines get it right 99.99% of the time, the companies will happily embrace this tech and save a lot of money for call centers, and just fix the 0.01% by hand.

>”why should there be any difference in talking to a computer and writing to a computer. ”

Writing is unambiguous and explicit. Your keyboard isn’t trying to interpret what you mean, whereas the voice recognition software is trained to understand speech – even if there’s nothing to understand – so the potential for error is much greater and the question of consent comes into play.

Actually, it goes a bit deeper than that. When you order something online from Amazon, you make an explicit order yourself. YOU check the boxes, select the delivery option, payment method, enter your passcodes and identifications etc. and click OK at the end.

With the AI caller though, it’s the AI that first forms its own idea of what you want to do by interpreting your speech, and then the AI fills in the order based on this second hand interpretation of what you want, which may or may not be what you actually ordered, and the ordering process may or may not go as planned if eg. a magazine salesman tricks the AI into saying “yes, thank you” to their special offer, and then they have it on record “consenting” to a subscription that you never ordered.

Ask and thou shalt receive:

http://www.jollyrogertelco.com/

They had an example on a tech podcast where if your car needs a maintenance check or something you say to google ‘make an appointment’ and it would make the call and set up one without you having to bother.

I suppose it can be handy sometimes but in my mind you tend to want to have clarity when making appointments and for that reason you want a human to do it because there’s always that ‘you mean blah blah?’ moment in the chat that you ask because of what they say, and I’m not convinced a bot can either ask the right questions or relay the subtleties to its user.

Or the person might say ‘we don’t have time this week, is next week OK?’ or some such thing where it needs your immediate response which an AI has no way of knowing what you want since perhaps you would call another company or want to do it in two weeks or want to insist it needs to be this week and such things, and it might even lead to them offering a discount or incentive, which a person could evaluate for himself but not an AI.

This technology is going to be abused to infuriate a lot of people. :(

All technology is abused to infuriate a lot of people.

Ok Google, do a prank call.

I’m not sure whether to be amazed or afraid.

I foresee botnets calling people for phishing attacks.

A bit like the microsoft scam (remote desktop install) but then multiple orders of magnitude worse.

I feel bad for the guys whose career is calling those scammers and getting them to damage their own computers. It sucks to have your job replaced by automation. Without a human to screw over, there won’t be any trouble. Of course, smart guys will figure out what to say when to escalate their privileges in the system, so there will be fewer and more competent anti-scammers out there.

How many scammers do you need to run a botnet?

E-mail spam got reduced by 90% or more some 10+ years ago by arresting a handful of people.

https://www.youtube.com/watch?v=XSoOrlh5i1k

It’s already happening. More as a defensive application in this example but this version is fairly rudimentary.

https://m.youtube.com/watch?v=-7OgWcwgB50

Tom Mabe murder scene… My favourite.

What happens when they call each other? A glitch in the matrix, matter and anti-matter, a disturbance in the force? Aaaaaahhhhh!!!

They would probably revert to 56 kbps and still be oblivious to the fact that they are talking to another computer.

If I were implementing this, I would include a high kHz sound in the AI voice, higher than human hearing. If both ends detect the signal they drop all humanisms (um.. ahh..) and probably even drop back to raw data transfer. What was taking 1 minute before, now takes 1-2 seconds, freeing up valuable company resources to chase the next $.

But, in POTS (Plain Old Telephone System) the high frequencies were severely limited to save bandwidth.
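A quick way to see the objection is to check the proposed marker tone against the telephone voice band. The figures below are the commonly quoted ones (an analog passband of roughly 300 to 3400 Hz, and 8 kHz sampling on digital trunks, which caps content at 4 kHz); the function name and constants are just for this sketch.

```python
# Approximate analog voice band of the traditional telephone network.
VOICE_BAND_HZ = (300.0, 3400.0)
# Digital telephony commonly samples at 8 kHz, so nothing at or above
# half of that (the Nyquist limit) can be represented.
SAMPLE_RATE_HZ = 8000.0


def survives_phone_line(freq_hz: float) -> bool:
    """Return True if a pure tone at freq_hz would plausibly get through."""
    low, high = VOICE_BAND_HZ
    in_band = low <= freq_hz <= high
    below_nyquist = freq_hz < SAMPLE_RATE_HZ / 2
    return in_band and below_nyquist
```

An ultrasonic 20 kHz marker fails this check, so any bot-to-bot handshake would have to use audible in-band signalling instead, much as DTMF keypad tones (697 to 1633 Hz) do.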

Google Assistant: “How can I help?”

Me: “Lower my Comcast cable bill.”

Google Assistant: “OK”

…6 hours later…

Comcast: “All representatives are busy, please stay on the line…”

This is why I say “banana” at random during phone conversations with people I don’t know.

Kumquat!

“I am Groot!”

But what about it being used in the opposite direction? Sounding more human will probably give robot calls more traction, placate callers so they wait longer for customer service issues. And worst of all, give the folks from the Teckernickle Department a new lease of life.

Yeah mentioned in the blog post.

Perfect for handling website info like opening times. Where a real person is asking simple questions.

Is being able to telephone enough to start global thermonuclear war? I guess when combined with the intelligence of Google/Skynet, the computer can do basically whatever he wishes once he’s able to convince others that he’s a human person.

The two most obvious applications are first, add it to google voice.

Hello, this is tz’s secretary, who is calling and what is this about, and I will see if he is available, is (caller ID) a good number to return your call?

The other side to the robocalls would be sans indian accent

Hello, you need repair insurance for your recently purchased car…

Hello, I’m a nigerian prince who has his money locked up…

Hello, you have just won the lottery, but we need a small fee to release…

Hello, this is the IRS – you owe $2000, and if you don’t drive to a store and purchase a gift card we will call the police…

It’s already part of Google Voice.

Where do you think google got all the data from that they needed to teach this thing?

Nearly a decade worth of voicemail with speech to text output.

Which people willingly let Google use for other purposes in the TOS.

We’re building skynet :)

I hate those robocalls that say “Is Darren there?”

Me: “You have the wrong number.”

Bot: “Well, maybe you can help me…”

Me: click!

ME:”Sorry Darren’s unavailable. I’m trying out this new cookbook ‘To serve man’ on him”

B^)

Well, G***gle devices have been listening in on home conversations for quite a while now,

so they had plenty of material to work with.

Cloud’s good for something.

I almost pitied the computer that had to talk to the asian restaurant woman.

B^)

Why? If anything, IMHO, it’s a better test for the AI tech. because it’s a much more colloquial conversation compared to the hairdresser one.

Anyway, colour me impressed. Although, knowing that it was an AI, I noticed there were a few giveaways. For instance, in the hairdresser one it kept saying “12 PM” when I think most people would’ve said “noon”. Also, in the restaurant call it kept repeating “Wednesday the 7th” when asked. I think most people would’ve repeated it (probably in a slightly more annoyed tone) before being asked again.

Let’s not teach the computers to get annoyed with us, please.

B^)

That call was probably a lucky break, and they didn’t actually disclose what happened afterwards – did the AI manage to “make” the reservation for the client? What did it report back? Did it even understand that it had not actually made any reservation or did it conclude that no reservation was possible?

This kind of “Turing test” doesn’t reveal what’s really going on. When the AI says “Gotcha”, what did it get? After all, it’s been programmed to appear natural, so it’s just giving plausible replies to the conversation.

AI in general is largely a cargo cult science, where appearances are taken to be meaningful. If it looks good, it can be said to work because nobody can tell otherwise – and so you can apply for funding.

It recognised there was no booking. Once she said they don’t take bookings for less than five people it changed straight from ‘I want a reservation’ to ‘How long is the wait?’ which it wouldn’t do unless it accepted there was no option of a reservation. You don’t need to worry about wait time if there is a reservation.

If it was just giving plausible responses, that’s a damn lucky break it happens to change from asking about reservations to wait times as soon as she says a reservation isn’t possible.

Here’s ” a better test for the AI tech”…

https://www.youtube.com/watch?v=SsWrY77o77o

GE healthcare’s website definitely doesn’t use anything that sophisticated – Maybe it’s just a really bad employee.

Automated phone services have been with us now for a number of years and they haven’t got any better and I still detest using them – Telstra are you listening :lol:

Back in the early days of automated phone systems I would have agreed with you, that hitting 0 to get to a human was much better. Today, and as one who has designed a few automated phone systems, I can tell you that if the person who designed it was any good, they are far better than pressing 0. The thing is, if you can even reach a human you are going to get the lowest paid noob in the company and all they will do is try and figure out where to toss your call. The chance of them having any real understanding of you or your issue is very low. On the other hand, if you can make intelligent choices and go through a couple sets of menus, the chance of your call winding up in the hands of the person you actually want to speak with is very high. Again, depending on how well the system was implemented.

FWIW, I thought the Google thing was neat, and I hope I will be able to play with it someday.

My nephew handles customers’ calls, he is “next level up” from the outsourced Customer Service Reps.

When he answers the phone, the customer often says something like “Finally! Someone who understands English!”

This could be great for Alzheimer’s patients. A bot companion, with a familiar voice, re-explaining their world to them on demand.

primates need social contact. there was a big issue back in the 60’s with experiments that turned chimps into cannibals through isolation. just because tech is new and shiny doesn’t make it better.

I don’t mean instead of any human contact. I mean in addition to. Of course.

Have you been to a retirement home? a robot to talk to is better than nobody to talk to.

Yeah and I was specifically talking about Alzheimer’s, where sufferers get anxious and need reassurance of basic facts up to 159 times a day. That tests a person, I can tell ya. Really.

I empathize…

Hmm, I think call-centre workers would be at risk in the near future with this technology. I’m sure they wouldn’t be completely replaced, but you could certainly multiplex the humans using this technology. When one call is ending with the human, have another connect to the AI and do the start, then when the human is finished they can take over or wait for the AI to hand over.

An A.I. driven FAQ.

IBM’s Watson for call centres can already do that.

Oh how far we have come since ELIZA.

A.I. Pen-pals.

Don’t forget about good old Dr. Sbaitso!

I want a Jeff Goldblum version of this…..

https://www.youtube.com/watch?v=gEJKQI_ht1I

Kevin Mitnick likes this.

(Well, he *would have liked* this back then)

“Should people know they’re talking to an algorithm? After a controversial debut, Google now says yes”

https://techxplore.com/news/2018-05-people-theyre-algorithm-controversial-debut.html

Soon they will pass all possible turing tests

I think both these examples are somewhat rigged by Google.

Neither caller bot made any attempt to immediately verify that it was calling its intended number. I would have expected “Hi is that —‘s Hair Salon” and for it to act on the response, in case it had a wrong number. Also the callees did not identify themselves properly, so the bot should have attempted to verify that before proceeding.

“Hello, this is the Strategic Air Command. No one is able to answer the phone at this time. If this is a National Emergency, you may enter Nuclear Launch Codes with your Touch-Tone keypad, followed by the pound sign.

You will then be asked to enter target coordinates or Zip Codes, weapon yields and number of weapons to deploy.

Thank you and have a nice day!”

The abuse this will enable is staggering. I found myself questioning whether I was speaking to a bot with every phone call I made last night after listening to the samples yesterday.

I wonder if you can hack these technologies with speech. Like whispering sweet nothings to get its login information.

Yes you can hack them. Most of the systems like Alexa and Siri can pick up sounds a human can’t hear and process them as commands, according to an article on TechCrunch.

Also South Park did an episode where they caused viewers’ Alexas to take commands from the TV show.

The fact is you do not want this technology in your house connected to anything let alone being able to listen in on your conversations 7×24 while connected to some Amazon or Google server farm. This is Orwell’s Big Brother on steroids.

Has no one read Avogadro Corp? Because i’m pretty sure this is EXACTLY how it starts….

All that, and yet it still can’t recognize when I start screaming “SUPERVISOR!” into the damn phone.

I’d like to see a sample of the machine calling a bank or finance company, and changing a person’s password, account details etc.

Does it not feel odd that both businesses answer their call with “How may I help you?” and neither mentions its business name? You would think the person picking up the phone should answer with the name of the restaurant at least?

While listening to this I kept seeing a shiny T1000 talking on the phone with one arm shaped into something swordlike pinning a human to a refrigerator…

Since they are trained from humans, there probably will be a lot of pizza orders to empty data centers.

“… would you want to know if the person you were speaking to was in fact a computer? Perhaps Google should preface each conversation with ‘Hi! This is Google Assistant calling.’ ”

I would like to know, but it wouldn’t change the way I interact with the other side of the conversation. I think most people would feel uncomfortable, even hang up, defeating the usefulness of the system.

Would it be different if it prefaced each conversation with ‘Hi! This is John Smith’s assistant calling’? It’s the truth, and this assistant certainly is more capable than a lot of assistants I have known.

This is just a silly commercial for Google.

I’m detective John Kimble!!! https://www.youtube.com/watch?v=Oy0E5eZfVAU

The best case scenario this useless fluff will result in is the worst telecommunication protocol in history, with machines calling other machines for simple requests, being routed through various AI systems in order to manage the reservation of a fucking cab! It will be incredibly inefficient and hilariously silly.

Worst case is the complete collapse of inter human relations and the death of our species.. Not that this would necessarily be a bad thing!

It just means that computers will be able to screw up more of your real world life… What joy!