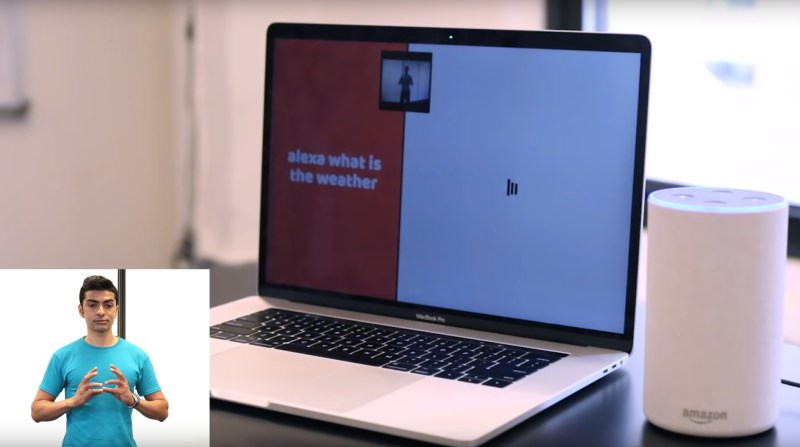

As William Gibson once noted, the future is already here, it just isn’t equally distributed. That’s especially true for those of us with disabilities. [Abishek Singh] wanted to do something about that, so he created a way for the hearing-impaired to use Amazon’s Alexa voice service. He did this using a TensorFlow deep learning network to convert American Sign Language (ASL) to speech and a speech-to-text converter to interpret the response. This all runs on a laptop, so it should work with any voice interface with a bit of tweaking. In particular, [Abishek] seems to have created a custom bit of ASL to trigger Alexa. Perhaps the next step would be to use a robotic arm to create the output directly in ASL and cut out the Echo device completely? [Abishek] has not released the code for this project yet, but he has released the code for other projects, such as Peeqo, the robot that responds with GIFs.

[Via FlowingData and [Belg4mit]]

Hmmm … this is confusing.

I personally know hearing-impaired people who have learned to talk. So this does not seem to be a criterion. Why would you need to first create a speech output from signing if the end result is a text anyway? Why not convert from signing to text directly?

Or did the author of the article mean that there are two ways of communication covered, both the convert-signing-to-text AND the other direction(!) from Alexa’s speech to text? Somehow that isn’t clear in the article.

Unfortunately from where I sit I cannot watch/listen to videos, so the article is all I have for information.

Dude, just stop. You’re embarrassing yourself.

Dude, I don’t use any of those spy-devices. I assumed you could interface with them in a natural way – i.e. through a text interface. Obviously that is not the case.

Do you see the picture in the article? It shows a laptop next to an Amazon Echo.

The laptop translates the sign to spoken English so that the Echo can hear it and respond, when the Echo responds in spoken English the laptop translates that to text.
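The round trip described above can be sketched roughly as follows. The project's code has not been released, so this is only an illustration under stated assumptions: the function name `sign_to_alexa_round_trip` and the three callables (`classify`, `speak`, `listen`) are hypothetical stand-ins for the TensorFlow sign classifier, a text-to-speech engine, and a speech-to-text engine, and are not taken from the original project.

```python
from typing import Callable, Iterable


def sign_to_alexa_round_trip(
    frames: Iterable[object],
    classify: Callable[[object], str],  # hypothetical: one camera frame -> one recognized word ("" if none)
    speak: Callable[[str], None],       # hypothetical: plays text aloud so the Echo can hear it
    listen: Callable[[], str],          # hypothetical: transcribes the Echo's spoken reply to text
    wake_word: str = "Alexa",
) -> str:
    """Turn a sequence of signed words into a spoken query and return the reply as text."""
    words = []
    for frame in frames:
        word = classify(frame)
        # Skip empty predictions and consecutive repeats of the same sign,
        # since a sign held across several frames should count only once.
        if word and (not words or words[-1] != word):
            words.append(word)

    # Speak the assembled query, prefixed with the wake word, toward the Echo.
    query = f"{wake_word}, {' '.join(words)}"
    speak(query)

    # Transcribe whatever the Echo says back so it can be shown on screen.
    return listen()
```

In a real setup the three callables would wrap a trained model, a speaker, and a microphone; passing them in as parameters keeps the sketch runnable with simple stubs.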

Thanks, your comment makes more sense than that of the dudiotic above.

So those devices by Amazon, Google etc don’t understand a “real” interface, you HAVE to use spoken words to use them? That sounds unnecessarily complex to me. After all, what you probably want to do is … let’s say: Google something. Or order pizza. Or whatever.

Wouldn’t it make more sense to use a text-based interface for that? Without the need for loops of spoken word in between?

I never understood what the advantage of that “I have to speak to computers” is. Except, obviously, for those who are disabled on arms/fingers/toes and cannot use a keyboard. There it makes perfect sense …

Well, obviously an Echo or Google Home etc. does not have a keyboard and a monitor. If you want that, use a PC. It’s a microphone, a speaker, a lightweight embedded computer and an API into the cloud service, basically. You don’t *have* to use audio to interact with those APIs.

This is unnecessarily complex. Almost all American Deaf can read and write English. Just type.

Of course, there are no unnecessarily complex solutions on hackaday!

Almost all American hearing can read and write English too… and yet there is still desire for the ease of access and immediacy of the Echo and similar devices.

We can’t make assumptions like that. No doubt some can read and write English to an acceptable level, but for others it may be poor – it’s effectively a second language.

This is part of what makes accessibility difficult: you can’t make assumptions in the general case: every disability and circumstance is different.

Actually literacy in the US isn’t all that close to 100% as sometimes presented.

And apart from that it becomes extremely hard to learn certain things if you are deaf, which is a reason why in the old days people thought deaf was equal to stupid instead of just being hampered by their disability.

That’s also why they often use sign language translators and have TV shows with signing instead of subtitles: there are still a lot of deaf people who just didn’t get the reading skills that come easily if you can hear and can connect what you see with what you hear. Those skills are hard to acquire if you don’t have that natural tool, and reading becomes a standalone thing.

Or so I’m told.

“DO NOT TOUCH” is perhaps the worst thing you can read in braille.

B^)

I think this is very cool. How can I add this functionality to a magic mirror? Can I add an additional module to convert the Alexa or google output back into a visual image (an animated ASL output)?

There goes another advantage those who can’t speak had over the rest of us…

As someone who is HOH and communicates with ASL, this feels like a solution looking for a problem. To use this, I would have to have the laptop up and running, the app for this running, stand in front of the camera and sign the question, wait for Alexa to respond and then read the response. Why not just type directly on the keyboard? That way you don’t need the overhead of the app with camera and language recognition, just a text-to-speech app.

I can also tell you that I would have my phone out and googled it quicker than someone could sign the question. There are also all the nuances of ASL that I’m not sure this could pick up. For example, the signs for AWESOME and AWFUL are the same; just the expression on your face changes. Facial expressions and how fast or slowly you sign are part of the language.

My biggest nitpick is that he is not using ASL, he is just signing English. There is a huge difference between the two.

I like the work

I would consider this as a proof of concept or at best a prototype, it does show some promise. I’d see it going forward with a more proper integration with the Echo, perhaps the Echo with the screen. The whole laptop part just wouldn’t be there, it would be an always looking service with a wake sign or signs.

I would expect a true sign language interpreting program to be able to discern facial expressions and all sign language grammar.

I have been learning British Sign Language (BSL) for a few years now and thought that he was using what we’d call Sign Supported English rather than true sign language. I’ve not studied ASL though, so I wasn’t confident enough to say it.

This is a great idea, and it could be set up to run from a much smaller computer than a laptop. Who wants to carry a keyboard and a display? Yes, you can use a smartphone and do the same task that he did, but what about the home automation and IoT devices Alexa can communicate with? To all those trolls who have nothing better to do than whine about stuff: this is just a demo, a proof of concept. The world is getting more advanced every day. Keep moving forward.

It’s an interesting proof of concept.

Visually interpreting ASL is a nice idea. Maybe expand it to OpenHA with OpenCV for home automation projects.