The Unix operating system has been around for decades, and it and its lookalikes (mainly Linux) are a critical part of the computing world. Apple’s operating system, macOS, is Unix-based, as are Solaris and BSD. Even if you’ve never directly used one of these operating systems, at least two-thirds of all websites are served by Unix or Unix-like software. And, if you’ve ever picked up a smart phone, chances are it was running either a Unix variant or the Linux-driven Android. The core reason that Unix has been so ubiquitous isn’t its accessibility, or cost, or user interface design, although these things helped. The root cause of its success is its design philosophy.

Good design is crucial for success. Whether that’s good design of a piece of software, infrastructure like a railroad or power grid, or even something relatively simple like a flag, without good design your project is essentially doomed. Although you might be able to build a workable one-off electronics project that’s a rat’s nest of wires, or a prototype of something that gets the job done but isn’t user-friendly or scalable, for a large-scale project a set of good design principles from the start is key.

As for Unix, its creators set up a design philosophy based around simplicity from the very beginning. The software was built around a few guiding principles that were easy to understand and implement. First, specific pieces of software should be built to do one thing and do that one thing well. Second, the programs should be able to work together effortlessly, meaning inputs and outputs are usually text. With those two simple ideas, computing became less complicated and more accessible, leading to a boom in computer science and general purpose computing in the 1970s and 80s.
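To make those two principles a little more concrete, here is a minimal sketch in Python. It is an illustration only, not anything taken from Unix itself: each function does one small job on a stream of text lines, and a helper chains them together the way a shell pipe would. The names `grep`, `sort_lines`, `uniq`, and `pipeline` are hypothetical stand-ins loosely modeled on the familiar command-line tools.

```python
from typing import Callable, Iterable, Iterator

# A "filter" takes a stream of text lines and returns a new stream of lines.
Filter = Callable[[Iterable[str]], Iterator[str]]


def grep(pattern: str) -> Filter:
    """Build a filter that keeps only lines containing `pattern`."""
    def run(lines: Iterable[str]) -> Iterator[str]:
        return (line for line in lines if pattern in line)
    return run


def sort_lines(lines: Iterable[str]) -> Iterator[str]:
    """Emit the lines in sorted order."""
    return iter(sorted(lines))


def uniq(lines: Iterable[str]) -> Iterator[str]:
    """Drop lines that repeat the line immediately before them."""
    previous = object()  # sentinel that never equals a string
    for line in lines:
        if line != previous:
            yield line
        previous = line


def pipeline(source: Iterable[str], *stages: Filter) -> Iterator[str]:
    """Feed `source` through each stage in order, like `a | b | c`."""
    stream: Iterator[str] = iter(source)
    for stage in stages:
        stream = stage(stream)
    return stream


log = ["error: disk full", "ok", "error: disk full", "error: no route"]
result = list(pipeline(log, grep("error"), sort_lines, uniq))
print(result)
```

None of the three filters knows about the others. They only agree on the format that passes between them (lines of text), which is what lets them be recombined freely.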

These core principles made Unix a major influence on computing in its early days. Unix popularized the idea of software as tools, and the idea that having lots of tools and toolsets around to build other software is much easier and more intuitive than writing huge standalone programs that reinvent the wheel on every implementation. Indeed, at the time Unix came around, a lot of other computer researchers were building single-purpose, one-off monolithic blocks of software for specific computers. When Unix was first implemented it blew this model out of the water.

Because of its design, Unix was able to run easily (for the time) on many different computers, and those computers themselves could be less expensive and less resource-intensive. It was known for being interactive, because its programs were relatively small and easy to use. Since all of these design choices made Unix more intuitive, it was widely adopted in the computer world. Now, this model isn’t without its downsides, namely efficiency in some situations, but the core idea was good and worked well for the era.

Worse Is Better

Another interesting idea to come out of the computing world around that time was the saying “worse is better”. Although this idea wasn’t a direct relative of the Unix philosophy, it’s certainly similar. The idea is that additional features or complexity don’t necessarily make things better, and a “worse” project, one with fewer features and less complexity, is actually better because it will tend to be more reliable and usable. Most of us can recognize this idea in at least one of our projects. We start work on a project, but midway through we decide that it should have another feature. The feature creep causes the project to become unusable or unreliable, even given its initial simple design goal. An emphasis on simplicity and interoperability helps fight feature creep.

Keeping these design principles in mind can go a long way to improving anything you might happen to be working on, even if you’re not programming in Unix. We’ve seen some great examples around here like the absolute simplest automatic sprinkler that does the same job expensive, feature-rich versions would do: water plants. There have also been simple tools that have one job to do and don’t allow feature creep to corrupt the project.

Even large-scale manufacturing processes have benefitted from design principles that are shared with Unix. The eminently hackable Volkswagen Beetle was designed in a way that made it one of the simplest, most reliable vehicles ever made that also had the benefit of having a small price tag. As a result of its durable, simple design and ease of maintenance, it became one of the most popular, recognizable cars of all time and had the largest manufacturing run ever as well, from 1938 to 2003.

On the flip side, there are plenty of examples where not following a simple design philosophy has led to failure. Large infrastructure can be particularly susceptible to failure caused by complexity, as older systems are often left neglected while new sections are added as population increases. While failures such as the Flint water crisis weren’t caused by complexity directly, whenever damage is caused to these systems they can prove extremely difficult to repair because of that complexity. It’s difficult to build large pieces of infrastructure without a certain level of complexity, but simplicity, ease of maintenance, and using good design principles can save a lot of trouble down the road.

Another example of complicated design can be seen in many modern cars, especially luxury brands. The current trend in automotive design is that more features, rather than higher build quality, means more luxury, so upscale cars tend to have tons of features. This can be great on a new car, but the downside is apparent in the repair bills that come after just a few years of ownership. The more features a car has, the more opportunities there are for failure, or at least for hefty maintenance costs.

Even though modern Unix and specifically the monolithic kernel found in Linux are incredibly complicated now, they still retain a lot of the original design philosophy that they had in the early days. This philosophy is a large part of the reason they’ve been so successful. The idea of simplicity and doing one job well is still ingrained in the philosophy, although you can certainly find examples of software that doesn’t follow all of these ideas anymore. Regardless of the current state of modern software, the lessons learned from Unix’s early design philosophy are universal, and can go a long way whether you’re working on software, cars, infrastructure, or simply watering some plants.

Linux is really too complex today. Even Linus doesn’t know all the code anymore.

It’s time microkernels finally rise !

> Even Linus doesn’t know all the code anymore.

And that’s a good thing. Very good indeed. Linux users don’t have to rely on a single guy to have updates. In this scenario, every developer must be the master of his own code, and not care much about the others.

The point is that every component is made to do only one thing, and do it well. You need that program to do 2 tasks? No, write 2 programs. The kernel is complex, but every module is responsible for one task/device/filesystem.

IMHO, microkernels will add even more complexity.

Sure, and now someone explain that to the systemd fanboys, please.

Don’t waste your breath.

Like all fanbois, they are absolutely convinced that Sievers and Poettering are right and that anyone who disagrees is the enemy, to be destroyed without mercy.

That’d require them to unlodge their heads from their asses so they can hear it.

Doing something monolithic, complex, binary and “different” without citing good reasons for it makes the ‘put thy cranium up thee rectum’ a mandatory action.

Exactly… systemd is completely antithetical to the UNIX philosophy. Which is why my Linux server runs Gentoo, as it is one of the few Linux distros out there that does not require the use of systemd.

They seem to think that newer automatically equates with better. They throw backhanded insults at non-Poettering ways by referring to systemd as “modern”, as if that automatically confers superiority. Just read the forum posts from the D-team and you can’t miss their arrogance. Devuan and some other distros are keeping the flame alive until the day old lenny-n-friends finally bork things up irreversibly.

Microkernel may be better from a security standpoint.

http://www.osnews.com/story/30657/The_jury_is_in_monolithic_OS_design_is_flawed

A kernel is a program that does many things. A microkernel is a program that does fewer things. Less is better.

QED

That’s pure concentrated demagoguery.

Linux is complex, because modern computing is complex.

These are not the olden days…

– Unix was never expected to run on hardware ranging from embedded devices, to smartphones, to supercomputers.

– Unix never had to deal with all the abstractions necessary to achieve some semblance of control over a modern computing-capable device. 99.9% of users and developers don’t know the precise details, down to the register and interrupt level, about the hardware that’s powering their device. Not only are such details often deliberately hidden from the end-user, the sheer complexity of a modern desktop computer or smart phone makes the effort a daunting task that no sane human being would dare undertake. By contrast, the original PDP-11 for which Unix was written could be summed up in detail in a 100 page user manual.

– Unix was never expected to be “performant”: And it wasn’t. By design, Unix traded away system efficiency for simplicity of implementation and extensibility. The original Unix manifesto advocated that complex software applications should be written using a series of simple “single function” command line utilities written in C, all glued together through a scripting language (namely sh) and passing data around through piping. This is **slow** even by today’s standards, but it was unbearably so in the 70s and 80s. That’s the reason why Unix was used on Big Iron exclusively: commodity hardware of the time was simply not able to cope with it.

And as for “microkernel design”… Just like moving a shirt from the closet and placing it on the bed doesn’t remove it from your home, removing complexity from the kernel and putting it in user space doesn’t remove it from the system. Therefore, any and all efforts to reduce complexity by moving things around are a fool’s errand.

I don’t think it’s a fool’s errand, and I don’t agree with your analogy. It’s like taking your pants, shirt, and socks that are all lying on your bed and moving them into various dresser shelves, which is more organized and intuitive. Yes, I can leave all my clothes on my bed and try to demarcate boundaries between stacks, but it’s better and neater to have them organized on different shelves in a dresser. Not to mention, if I have too many clothes they may not all fit on my bed. I think this analogy more accurately describes the motivations for moving to a microkernel.

He was talking about the bed as an analogy of the userspace, whereas the dresser is the kernel. The problem of sorting and folding away your clothes is the same whether you keep them in the closet or pile them up on your bed.

This tells me that our systems have become way too complex, what was wrong with a READY. prompt?

Works with 1 MHz and should still work flawlessly with 3,4 GHz, an OpenGL extension and 16 GByte of BASIC memory free. :P

That’s a whole lot of handwaving to say that computers are complicated, because computers are complicated.

It also ignores the question of whether they are more complicated than they need to be (yes).

The only purpose of microkernels is to provide isolation in spite of the implementation language and memory model failing to provide it adequately, to mitigate ambient authority of kernel components.

A kernel implemented on a capability-addressed system or in a memory-safe language would be able to implement adequate isolation without being broken up in such a way.

https://www.catern.com/microkernels.html

To follow your analogy, the purpose is to prevent your networked pants from deciding to subsume all of your shirts because they saw a packet malformed in just the right way.

The vast, vast majority of *nix-based systems in the wild are microkernel based. I speak, of course, of iOS and macOS. Avie Tevanian, VP of software engineering at both NeXT and Steve 2.0 era Apple, was one of the developers of the Mach microkernel project at Carnegie Mellon in the 80’s and took that with him to Apple. In fact the first Linux variant I ever ran on a Mac (back in the PowerPC days) was MkLinux… https://en.wikipedia.org/wiki/MkLinux

Mach wasn’t a true microkernel until 3.0, and NeXT didn’t use 3.0. Also, the later additions to macOS make it far from a microkernel. However, I can’t speak for their mobile OSs.

While UNIX and its descendants have been hugely influential in the history of computing it has always felt like something that was optimized to be easy to implement rather than to truly meet users’ needs. Some things they got right:

* Byte oriented files

* Accessing block devices as files

* Use of command lines

* Proved you can write a real OS in a high level language

Some things that leave me scratching my head:

* Case sensitivity

* Cryptic command names

* No protection from user errors (rm -rf)

* Overlapped IO added very late (DEC OSes had this in the 1970s)

I would even go so far as to say the “ease of implementation” led to the massive propagation to so many platforms and the dominance these OSes have in the server market. But we shouldn’t let the popularity of these OSes prevent us from ever studying anything else. There are some neat features in VMS, MVS, AmigaOS, and such.

It was made for users’ needs, but for different users than windows. Unix was made for programmers, researchers and scientific computing, so it shines on servers. Windows was made for typical office users and it shows (easier to use). MS tried to make it good for servers, but failed (azure cloud windows instances are hosted on linux…)

But you’re confusing some things.

An operating system is really the kernel, unified I/O so you don’t have to rewrite the same thing for every program. The various utilities run on that, including application programs.

There is philosophy to the kernel, which permeates the utilities.

Unix was multitasking/multiuser from day one. Piping, feeding the output of one program into another, was another early feature. Every configuration file is readable by a person, and can be edited by hand. Every file and directory has permission bits attached, allowing for fine-grained control and security.

So Unix is robust, made more so since it’s about fifty years old. Any work has been built on that foundation. There have been some additions to Linux, but most of the kernel growth has been drivers for endless hardware. In the early days hardware compatibility was a big issue, now it’s mostly a problem for the latest hardware.

Windows evolved over time, and I have the impression that they may have started from scratch a few times. Certainly Microsoft markets various releases like they are new things. There is a different philosophy to it, which doesn’t seem to make it as robust. Though with time Windows has added things that were in Unix long ago.

In the late seventies people wrote about it in glowing terms, making it an OS many of us wanted. But it was out of reach, the OS too expensive, even when it was commercially available, and the hardware too much (though at least Unix was transportable to different CPUs and hardware). So we made do with Unix-like operating systems and some legit clones, and got all excited when GNU was announced and even more when Minix came along.

A lot of the user end stuff is not “Unix”, one could write “Unix-like” programs to run on the kernel. But it would probably be more robust.

Michael

So this is a pet topic of mine and I’ve been dismayed to see so much “bad” Linux software in the last decade that strays from this.

I can tell you why cryptic command names were a killer feature in its day: 110 baud modems and teletypes. If you’ve ever punched out My_Really_Long_Pascal_Variable_Name := 0; on an ASR 33 you start really liking things like ls and cd.

However — and this is the great thing about Unix/Linux — you can fix that yourself if you want. I’m always surprised there isn’t more use of:

alias rm='rm -i'

alias dir='ls'

And whatever else you want.

Some filesystems let you disable case sense and you can also tell matching in the shell to be non-case sensitive. It just isn’t the default.

To me what we have lost in the GUI era is small building blocks you can easily put together. I sometimes dream of a DBUS GUI that shows all your windows/processes and what they can sink and source so you could graphically build GUI pipelines but I never quite write it.

You should not use CLI at all when using your computer on a daily basis. CLI should be reserved for SHTF situations.

The only reason they developed GUI for Unix/Linux was that they needed multiple CLI windows at once…

Why do you think that?

I disagree. You can be very productive at the command line for many daily tasks that are typically difficult from the GUI (find all files older than X and bigger than Y, archive them and delete them, while saving them to a list of archived files).
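As a rough illustration of the task described above, here is a sketch in Python rather than shell, so it is a substitute for whatever command line the commenter had in mind. The function names, the age and size thresholds, and the archive and list file paths are all hypothetical, and the code assumes every matching file is a regular file you are allowed to delete.

```python
import tarfile
import time
from pathlib import Path
from typing import List


def find_old_large_files(root: Path, min_age_days: float,
                         min_size_bytes: int) -> List[Path]:
    """Return regular files under `root` older than X days and bigger than Y bytes."""
    cutoff = time.time() - min_age_days * 86400  # seconds per day
    matches = []
    for path in sorted(root.rglob("*")):
        # Skip directories and symlinks; only plain files are candidates.
        if path.is_symlink() or not path.is_file():
            continue
        info = path.stat()
        if info.st_mtime < cutoff and info.st_size > min_size_bytes:
            matches.append(path)
    return matches


def archive_and_delete(files: List[Path], root: Path,
                       archive_path: Path, list_path: Path) -> None:
    """Archive `files`, record them in a list file, then delete the originals."""
    # 1. Write everything into a compressed tar archive first.
    with tarfile.open(archive_path, "w:gz") as archive:
        for path in files:
            archive.add(path, arcname=str(path.relative_to(root)))
    # 2. Append the archived paths to the running list of archived files.
    with open(list_path, "a", encoding="utf-8") as listing:
        for path in files:
            listing.write(f"{path}\n")
    # 3. Only after the archive and list are closed, remove the originals.
    for path in files:
        path.unlink()
```

A call might look like `archive_and_delete(find_old_large_files(root, 30, 1_000_000), root, Path("old.tar.gz"), Path("archived.txt"))`. Deleting only after the archive has been closed is a deliberate choice, so a failure partway through leaves the original files in place.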

That doesn’t sound much more than a couple points and clicks, unless your file browser GUI really really sucks.

I’m sure it would be more time consuming just to google up the correct syntax for the CLI search command. That’s one of the points of that old Apple useability study: people using CLI find they’re being more “productive” because they’re doing more thinking and typing which makes them feel like they’re accomplishing a lot, whereas people using a GUI may be “slow” in performing any particular part of the task, but the task itself is much simplified.

Some file managers incorporate both. Handy, as well as a great teaching tool.

I love the command line but examples like that are more efficient in a GUI if the GUI has been designed for that task. It’s probably just a personal preference. Even though the command line can cope with unusual situations that’s a bit like saying being able to write your own software lets you cope with even more unusual situations. Besides, a modern terminal blurs the lines: How often do you use the cut buffer to “type” file names rather than trying to use tab-completion, or drag files and folders from Nautilus to your terminal? Of course, the command line is awesome because it works and feels the same when you’re SSH’d in to a host on the other side of the planet. VNC just doesn’t cut it. Hopefully the future will bring the best of both worlds.

Your first statement is so broad as to be meaningless. Who is the “you”? Grandma? System admins at Fortune 500s? What does “using your computer” mean? Doing my accounts? Or analyzing terabytes of experimental data?

Even Microsoft, world leaders in introducing the GUI to the masses, acknowledge that at scale, and for repeatability, the CLI is the way to go. In some ways it’s the entire basis of the flowering of the whole DevOps/infra-as-code movement.

It’s not either or. It’s both. The right tool for the job.

>”you can fix that yourself if you want”

You could, but nobody else follows your conventions so it’s a moot point when you have to use other systems. That’s why the “you can customize it” is a false argument. Yes you can, but then you -have- to, every single time, until you just accept the defaults and get on with your life.

Put all the customizations in an .alias file, and load it automatically when you log in. I’ve been copying my set of customized files from system to system for the last 20 years.

How is that possible when the system the customizations apply to changes underneath you? Or do you just refuse to use any distribution that isn’t compatible with your mods?

Being a unix sysadm for roughly the last 30 years I still recall something my mentor taught me. Unix will let you do some either really clever or really stupid things.

Your Second list = PEBKAC

Case sensitivity is important

Why? Not trying to start a flamewar or troll you, I really want to know why you think case sensitivity is important. To me it isn’t.

Case sensitivity is simple. A filename is what it is, just a string of ASCII or Unicode. Case insensitivity is a shitload of extra work, meaning the number of aliases for a filename doubles with each **letter** in the filename, and there are many languages in UTF-8 with no concept of case at all. If case insensitivity actually solves problems for you on a regular basis, you’re probably too brain damaged to be working directly with a filesystem and should just get an iPacifier to drool on.

It hardly requires an exponential number of filenames, every permutation of capitals. You just stick toupper() in there. Easy. Then, just like English and all Latin-alphabet languages, a word means the same regardless of capitalisation.

In practice you’re only ever going to find all lower, all upper, and maybe first-letter-capitalised. Words with capitals in the middle are for advertisers, brand managers, and other assorted scum.
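Both sides of this exchange can be checked with a short Python sketch. The first function counts the case variants the earlier comment is worried about; the small class shows the "just normalize it" approach from the reply. Everything here is hypothetical and for illustration only: `CaseInsensitiveDirectory` is not how any real filesystem is implemented, and `str.casefold()` is used as a stand-in for the `toupper()` mentioned above.

```python
from itertools import product
from typing import Dict, Set


def case_variants(name: str) -> Set[str]:
    """Enumerate every upper/lower spelling of `name`."""
    # Each cased letter contributes two choices; digits and dots contribute one.
    choices = [{ch.lower(), ch.upper()} for ch in name]
    return {"".join(combo) for combo in product(*choices)}


def fold_key(name: str) -> str:
    """Map all case variants of a name to one canonical lookup key."""
    return name.casefold()


class CaseInsensitiveDirectory:
    """Toy directory: compares names by folded key, remembers original spelling."""

    def __init__(self) -> None:
        self._entries: Dict[str, str] = {}

    def create(self, name: str) -> None:
        key = fold_key(name)
        if key in self._entries:
            raise FileExistsError(name)
        self._entries[key] = name

    def lookup(self, name: str) -> str:
        return self._entries[fold_key(name)]


print(len(case_variants("readme")))   # six letters, two choices each

directory = CaseInsensitiveDirectory()
directory.create("README.txt")
print(directory.lookup("readme.TXT"))
```

The number of spellings does double with each letter, but the lookup never has to enumerate them, because one folded key per name is enough. The harder part, which this sketch glosses over, is deciding what folding should mean for scripts where the upper/lower mapping is not one-to-one.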

I had to look up PEBKAC, sorry!

https://en.wikipedia.org/wiki/User_error

With great powers comes great responsibilities.

UNIX is an excellent example of what happens when you put a group of extremely talented PhDs in a lab with an unused computer in the corner and tell them to amuse themselves.

RIP Bell Labs, and thank you for UNIX — the OS that has stood the test of time.

I don’t think android uses Linux for its design philosophies, but because it was free for Google to grab.

B^)

Yep. That’s pretty much the case :)

Also they were able to take and modify the source code without some arcane licensing and sale agreements until it was somewhat decent and usable. In other words something non-Unix/Linux…

Why this site is so Unix and has no Edit button?!

++1

Drop your teletype, bro.

I never understood the Android thing. Why wouldn’t it just run applications in Linux natively? What’s the whole Java layer for?

To obfuscate and confuse.

to not pay Oracle actually, which I’m fine with because they don’t care about Java the way Sun Microsystems did

Because ARM SoCs are such a heterogenous platform that you can’t make sure the same code runs between two devices without some glue layer in between.

That may have been the case years ago, but if today a small team of coders can adapt an entire Linux distribution to dozens of boards with different processors, speed, data length and peripherals, I would expect at least the same from Google. Just look at how many boards are supported by Armbian. https://www.armbian.com/download/

There’s a different level of standards between just making a Linux distribution run on the hardware, and having software that is readily and reliably accessible in a way that can actually make use of the system resources rather than just defaulting to the smallest common set.

Oh no, not worse is better again. Oh boy.

Modern cars are usually considered more reliable, and more efficient. If you think they lack character, note that the worse is better crowd pushed ideas about exposed and visible functional parts, and often hates decoration.

Software has no weight or manufacturing cost. There’s no such thing as heavy code. There’s just slow code, and slow code comes from bad design, not too many features.

An Intel chip is fast because it’s complicated. If they applied worse is better, we might not have branch prediction. Where do you draw the line? Pipelines? Optimizing compilers?

The idea that a program should do one thing and do it well comes from the days when a program was a function. Writing a BASH pipeline is basically programming. Modularity is great when designing a library.

Now we have GUIs, and one of the great things about GUIs is the high levels of integration possible. Would you be happy if your image editor only accepted images in bmp, and required a converter program to do anything else?

If the integration with the external program is seamless, great, you’ve saved yourself some work. If you actually have to manually load a second program… Why would I use that app?

With embedded firmware, simplicity leads to not taking full advantage of the possibilities of hardware, which creates early obsolescence and e waste.

An excellent design won’t slow down as features are added.

So, by all means keep things modular, readable, performant, easy to use, reliable, and secure. But as far as I can tell, KISS is a heuristic that doesn’t apply universally.

The core problem with “worse is better” so called philosophy is that it translates to “crappy code is okay, or even encouraged, as long as it works for some people”. Everyone loves to criticize, how crappy, slow or inferior Windows is when compared to Unix/Linux, but they don’t see, how crappy Unix/Linux is compared to Windows. I can install proper version of Windows on any computer and in 99.99% of cases it just works, has compatible drivers and almost no configuration is needed – just install software and change the wallpaper. Few years ago I tried both Ubuntu and Mint on my hardware – I had to invoke strange and bizarre commands, edit configuration files, and one of solutions even suggested recompiling everything from sources. Fuck that, normal people want things that work out of the box!

Worse is better? More like crap is crap, no matter how shiny you make it!

And no single tool in Unix/Linux has single purpose. They made them into crappy multitools that perform multiple tasks, and none of them well. Crap is crap, no matter how many bugs (features in Unix/Linux) you introduce!

You are spreading FUD. Linux Mint (for example) does work out of the box, and all the programs are there or few repository clicks away. Windows comes with few usable programs, a lot of trial versions of this and that, and it takes hours or days to get somewhat usable system. And it is full of various spyware and backdoors, even with newly purchased machine.

Bill Gates: “Gee Mom! All the other kids are spying with their software, why can’t I???”

That’s the spirit! It’s a lost battle. Just let the corporations do whatever they want with the data, laadidaa. /Sarcasm

>”does work out of the box”

Of course it works, in the same sense as how a LiveCD boots up with generic drivers on just about any system. Actually getting it to WORK is another thing entirely.

Ever had to keep your laptop screen from closing with an eraser stuck between, because Windows wouldn’t wake up from suspend due to buggy drivers?

Every time one of these discussions springs up I just keep getting reminded that computers aren’t easy. Case in point: installing Calibre on a server. Following directions and something as simple as “enter DB location” becomes an issue of having the right dead chicken handy. Point being, it isn’t the big details that Linux has gotten right. It’s all the little details that turn “easy” into “difficult”. It’s why Apple is popular, even if constrained.

“Installing Calibre on a server”

That’s not a good example by any stretch of the imagination.

Calibre’s codebase is an unholy horror and a perfect example of what happens when you ‘build’ things without some sort of overall design.

There is a very good reason why it has “private versions of all its dependencies”.

Just because you were constantly raped by your “friends” listed there, doesn’t mean from now on everybody should walk naked, and it also doesn’t mean rape should be legal, unless you like it.

People literally died fighting for the rights you (still) have, yet you want to toss away a big chunk of your freedom in exchange for a “like” on whatever social media platform, platform that will be so obsolete in 30 years from now that your kids won’t even bother to watch a “Kids react to Facebook”.

Instead of calling names to those that have a different opinion, you’d better try to understand the long term implications.

Ask yourself, in the eventuality of a “shit hits the fan event”, would you want to be caught naked?

Spyware is not mythical. My HP Windows 10 laptop came with HP preinstalled keylogger. It was even discussed on Hackaday … To improve user experience, blah, blah … Suuuure.

This slows down any computer. I didn’t pay for my computer so that malware/spyware/crapware authors use it. I paid for it so *I* can use it.

I don’t use any social media. Stopped years ago. Privacy is very important. It equates with freedom, actually.

You’re implying that’s a good thing, and that ideally they shouldn’t be fined so it actually hurts?

I was running Mint, on a Dell notebook. Got a brand new shiny Lenovo Ideapad. I took out the HDD from the Dell, put on the Ideapad, boot up, and done. Reinstall drivers? Change resolution? Activate Linux? No, just boot and run.

Do the same on a Windows…

If you don’t need to even change drivers between two completely different systems, you’re probably wasting your hardware using only the bare minimum features of it.

Pull the HD from an AMD machine and put it on an Intel system. Still works? Power management features and all?

Course it will. All the drivers are already installed. The files get loaded as needed. No registry, no install needed. Granted, it is assumed your hardware is actually supported.

As for the earlier generic driver comment, the live CD holds the same drivers as an install would.

Obviously not using the proprietary drivers for some parts. Supported hardware and all that.

>”All the drivers are already installed.”

Yes, generic drivers that barely let you use the hardware.

It gets especially bad on the peripherals, because you’ve got a lot more variety in hardware, but simple things like configuring a sound card to play right can be mission impossible, and even if you do it there’s still no good userland software to access it. “Nobody needs more than 2.0 stereo”.

Or, “Global equalizer? We’ve got one. It just doesn’t do anything”.

Or, let me tell you about the story how I got my printer working under Ubuntu. Wait, it still doesn’t…

“configuring a sound card to play right”

Bad example. The audio subsystem is another Poettering/Sievers balls up and *precisely* the reason for Linus finally bringing the hammer down on them. Not the actual mistake itself, but their ‘break it and refuse to fix it while blaming someone else’ attitude.

However in general, the apparent lack of proprietary drivers for Linux is mostly due to the Windows certification program. Supporting other operating systems is frowned upon so most manufacturers simply don’t do it.

“Bad example. ”

No, it’s exactly a good example – just one of many. Linux has troubles with audio, video, and just about any user peripheral that isn’t a standard keyboard or a mouse.

Like, “How dare you try to use your USB-TV dongle to actually watch TV? These devices are clearly meant for hackers to implement SDRs and not for your viewing pleasure. Write your own damn drivers if you want it.”

Crumple zones are a result of “worse is better” because they absorb force that would otherwise transfer to the occupants of the vehicle. Also, you might wanna look into Formula One racing, or any high-performance racing, to get a glimpse of why exposing functional parts is a good thing.

Slow code? Code can only run as fast as the system it’s on runs it. With that said, there is always an initial high investment in programming that many neglect under the assumption that it is not a physical object. However, if you expect your program to run on hardware, you need to ensure that it actually works on the target environment and adjust as best you can. This is a time and money investment that can slow down the production of a physical system if it requires that software. Also, remember that bloatware is a thing.

Intel chips, as well as many other chips, are fast due to the use of RISC architecture, which gets more done with less by getting rid of other instructions that took too much real estate on the chip to execute. They had to balance it out by using more of the ones they had left, but the time boost they got was enough to make it worth doing so.

While the “one thing well” may have come from functions, understand that the high-level languages didn’t really support such a concept explicitly. Not because the languages were incapable, rather because these new languages were developed to steer away from the ideas of mathematical based functions.

GUIs didn’t add anything to our ability to integrate programs; it was better operating systems that did. GUIs just changed how we interact with the system. The underlying mechanisms were simplified programs that worked in the background, out of reach of anyone directly manipulating the file system, and that abstracted away all the processes the user didn’t need to know about just to get something done.

Last I checked, Microsoft Office Suite is built like that, as well as many other software suites. Having it like this can help reduce development time by focusing on one program at a time while pushing out what’s already done and ready for use.

You have it a bit backwards. In order to gain simplicity, one must have full knowledge of and access to the hardware. Obsolescence and e-waste are due to chip sizes and integration.

Too many features can hog up resources regardless of excellent design.

The last one I can actually agree with.

>>”Crumple zones are a result of “worse is better” because it absorbed more fprce that would otherwise transfer to the occupants of the vehicle”

I don’t think that’s an example of making things worse – rather it’s an example of graceful failure, where the rigid chassis was worse. In a high speed crash, the whole chassis bends out of shape anyhow and rips out of its seams – in addition to turning the passengers into pulp.

It is. When first suggested, there were many who thought it insane to do so as it would mean the vehicle would damage easily instead of being rock solid all the way through. I mean, the whole notion of graceful failure is to let the worst happen but in a controlled manner that mitigates most of the risk involved.

But that was a misconception. The energy of the crash still dissipates somewhere, so the rigid chassis still gets damaged because something has to give – it just does so in an uncontrolled fashion that doesn’t protect the passengers.

Whereas on the software side, the question of worse is better is about feature inclusion, where having more features is an objective measure and “worse” means having fewer features, taking features away, instead of adding something analogous to crumple zones to the design to mitigate a problem.

With the less is more philosophy, you’d be building cars without special crumple zones and simply made out of such soft steel that they fold like taffy anyhow. They’d probably be very heavy with thick pillars and beams to withstand the normal loads, but they’d be “naturally crash safe”.

“worse-is-better software first will gain acceptance, second will condition its users to expect less, and third will be improved to a point that is almost the right thing”

I’m pretty sure x86 CPUs haven’t been “RISC with a translator on top” for a few years now. That was the case in the 90s, when it let them push clock speeds ever upwards. But now we’ve hit the physical (or at least practical) limits on that, modern CPUs are ludicrously complicated. It’s all about squeezing as much extra stuff in there as possible, that might save half a cycle in some particular instance. Branch prediction certainly isn’t RISC.

I’ve used Linux for 17 years, and Unix-like systems going back to 1984. And I’m still learning; every so often I bump into some small utility that’s always been there, but that I missed in the past. It’s some simple program, but I may find myself using it a lot.

Before Linux, most people experienced Unix as a user. And there were books for that userbase, detailing all those useful utilities. I’ve found some at used book sales, and they are still useful.

When Linux came along, it made users the system administrators, so the books spend a lot of time on the OS and installing it, leaving little space for the user.

I don’t know if it’s still being done, but at one point there were GUI programs which were simply fancy interfaces to command line programs. That seems a good route.

Michael
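To make the "fancy interface to a command line program" idea concrete, here is a minimal Python sketch. It assumes a Unix-like system with the standard `sort` utility on the PATH; the function name `sort_lines` is made up for illustration and is not taken from any real GUI toolkit. A real front end would call something like this from a button handler and show the result in a widget.

```python
import os
import subprocess


def sort_lines(lines, reverse=False):
    """Sort text lines by delegating the real work to the `sort` utility.

    This is the kind of thin layer a GUI front end could sit on: the
    interface only builds the argument list and shows the result.
    """
    command = ["sort"]
    if reverse:
        command.append("-r")  # POSIX flag: reverse the sort order

    # Force the C locale so the ordering does not depend on user settings.
    environment = dict(os.environ, LC_ALL="C")

    result = subprocess.run(
        command,
        input="\n".join(lines) + "\n",
        capture_output=True,
        text=True,
        check=True,  # raise if the tool exits with a non-zero status
        env=environment,
    )
    return result.stdout.splitlines()
```

The front end never reimplements sorting; it only translates a checkbox ("reverse") into a command line flag, which is roughly what those old GUI wrappers did.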

Webification is the in-thing, like Webmin because it’s so easy to do, as well as how ubiquitous everything is.

This kind of made me chuckle. While true, I only discovered Webmin a little while back – love it even though it’s slow, and was slightly disheartened to hear them say “this is old/legacy perl” stuff – I do perl, but was sad to hear this was old stuff, not maintained much. No need, it works – it hasn’t worn out. I even use it on Pis, odroids and other “LAN of things” machines around here, and it can even maintain the main webservers those have – NGINX. It can almost do anything you can do with VNC, but “knows all the fiddly bits options” and adjustments, which is really time and typo saving when maintaining webservers, databases, shares and the system as a whole – even if it wasn’t headless, it’s often easier to use Webmin than to walk over there and do it on the metal. And for whoever mentioned it above – yes, a lot of linux GUIs are just layered on top of the old CLI utilities – I see nothing whatever wrong with that – but sometimes still use CLI for more options and power….it’s a swiss-army chainsaw which can be used for good or ill, like any powerful tool.

I guess doing one thing and doing it well is why Linux programs can be a dependency quagmire. There are benefits to plunking it all together every time.

? You must not be using a deb-based system then…or have forgotten DLL hell in every other system as well. MS now attempts to get around that by shipping the entire mess even with what should be modular little .net apps…just in case. Glad bits are cheap, eh? Having used all of the above, I find less trouble with Linux than I ever did with windows, both as a dev and as a user. But there’s basically no perfect solution on the horizon. I do like the use of symbolic links to make sure an app grabs the right shared library in linux, so you still only need at most one copy of each version, not one per application like windows setups.
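For anyone who hasn't looked at how the symbolic link trick works, here is a rough Python sketch. It assumes the common Linux convention of a versioned link such as `libfoo.so.1` pointing at a real file such as `libfoo.so.1.0.0`; `libfoo` and both helper functions are hypothetical, and the files written are placeholders, not real shared objects.

```python
import os


def install_library(directory, soname, real_name):
    """Drop a (placeholder) library file and point the soname link at it."""
    real_path = os.path.join(directory, real_name)
    with open(real_path, "wb") as handle:
        handle.write(b"placeholder, not a real shared object")

    link_path = os.path.join(directory, soname)
    if os.path.lexists(link_path):
        os.remove(link_path)  # retarget the link on upgrade
    os.symlink(real_name, link_path)  # relative link inside the same directory
    return link_path


def resolve(link_path):
    """Return the file name that the soname link currently resolves to."""
    return os.path.basename(os.path.realpath(link_path))
```

Applications keep asking for `libfoo.so.1`; installing `libfoo.so.1.1.0` only moves the link, so one copy per version is enough as long as the new release stays compatible.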

I agree that symbolic links help a lot in getting applications to use the right shared object libraries. At the same time I see the same “install the library for each application”-approach growing in popularity on linuxes as well. Docker, virtualenv and AppImage being cases in point.

To me at least it appears that no system has really solved the dependency problems completely, even if most linux distributions have more elegant partial solutions than Windows had. With disk storage becoming cheaper by the minute, solving this issue by just delivering every dependency with every application seems to be the way all systems are going.

Now if only my phone’s disks were as big as my desktops…

” … no system has really solved the dependency problems completely, … ”

Because the problem is not with the system, it is with the libraries and the software that uses them. Better, it is with the developers of said libraries / software. If you already have myLib version 1, and release version 2, it shouldn’t break the way either version works. Extend yes, break no. It is part of the idea of shared libraries from the beginning.

Then some programmer releases a not-well-tested, not-well-planned version of that library, and the OS takes the blame for the problem.
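"Extend yes, break no" is easy to show in a few lines. The sketch below is Python rather than a real shared library, and `area_v1` / `area_v2` are invented stand-ins for two releases of the same hypothetical myLib function. The only point is that every call that worked against version 1 gives the same answer against version 2.

```python
def area_v1(width, height):
    """Version 1 of a hypothetical library function."""
    return width * height


def area_v2(width, height, *, scale=1):
    """Version 2: adds an optional, keyword-only `scale` parameter.

    The default value reproduces version 1 exactly, so existing callers
    are extended, not broken.
    """
    return width * height * scale


def is_backward_compatible(old, new, sample_calls):
    """Check that `new` matches `old` on every old-style call."""
    return all(old(*args) == new(*args) for args in sample_calls)
```

Running `is_backward_compatible(area_v1, area_v2, [(2, 3), (0, 5)])` is the sort of check a library author could keep in a test suite before shipping a new version.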

uh, nixos?

Indeed. And guix too, but I haven’t tried it

Yup, agree. Dll hell (or .so hell) isn’t solved and may never really be solved. Lots of moving parts in a system these days.

The halcyon days of “Doing one thing and doing it well” on UNIX are long gone.

All bow down to that egotistical moron Poettering and his lapdog RedHat!

A thousand pardons, but you forgot (I’m absolutely certain) the real lapdog in this debacle: Debian.

********************************

“…we’re going to talk about something that is clearly not progress. Systemd. Roughly 6 years ago, Systemd came to life as the new, event-based init mechanism, designed to replicate the old serialized System V thingie. Today, it is the reality in most distributions, for better or worse. Mostly the latter…”

“…As far as Systemd is concerned, I am concerned, because it is a technology that does not correlate to knowledge or experience, and it poses a great risk to the prosperity of Linux…”

“…you might as well practice Linux installations, since they may be the [only] answer to when Systemd goes bad, as I cannot foresee any easy, helpful way out of trouble…”

—“Systemd–Progress Through Complexity”

This is so meta lol

On modern systems some of the UNIX features are outdated, e.g. the fork/clone system call, which works only on systems with virtual memory management (an MMU). Most MCUs do not have an MMU, so you can’t port UNIX to them (and most computing systems are MCUs).
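For readers who haven't used fork directly, here is a small Python sketch of what the call does, assuming a Unix-like system (`os.fork` is not available on Windows). `run_in_child` is a made-up helper name. The child gets its own copy of the parent's memory, which is the part that is usually implemented with help from an MMU.

```python
import os


def run_in_child(task):
    """Fork, run task() in the child, and return the child's exit status."""
    pid = os.fork()  # from here on there are two processes
    if pid == 0:
        # Child: works on its own copy of the parent's memory.
        try:
            code = task()
        except Exception:
            code = 1
        os._exit(code)  # leave immediately, skipping the parent's cleanup

    # Parent: wait for the child and decode how it ended.
    _, status = os.waitpid(pid, 0)
    if os.WIFEXITED(status):
        return os.WEXITSTATUS(status)
    return -1  # the child was killed by a signal
```

If the task changes a variable, the change happens only in the child's copy; the parent's value stays as it was.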

Or “everything is a file”, which is just not true (also, on MCUs without a filesystem you can’t handle anything). The other thing with “everything is a file” is that every part of the system is connected to every other part by files and file handles (your system becomes a spaghetti monster https://en.wikipedia.org/wiki/Flying_Spaghetti_Monster), e.g. named sockets in networking. And you have only one namespace, and there isn’t any type for the objects!!! You have to handle the types on your own! UNIX is like an untyped scripting language; it was fun and easy in the 60s-70s, but nowadays it is a little outdated.
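The "untyped" point can be seen in a short Python sketch, assuming a Unix-like system. The helper names are invented for illustration. The same two calls, `os.write` and `os.read`, move bytes through a pipe and through a regular file, and nothing in the interface says what those bytes mean.

```python
import os
import tempfile


def write_then_read(write_fd, read_fd, payload):
    """Push bytes into one descriptor and pull them out of another."""
    os.write(write_fd, payload)
    return os.read(read_fd, len(payload))


def via_pipe(payload):
    """Round-trip the payload through an anonymous pipe."""
    read_fd, write_fd = os.pipe()
    try:
        return write_then_read(write_fd, read_fd, payload)
    finally:
        os.close(read_fd)
        os.close(write_fd)


def via_regular_file(payload):
    """Round-trip the same payload through a file on disk."""
    with tempfile.TemporaryDirectory() as directory:
        path = os.path.join(directory, "scratch")
        write_fd = os.open(path, os.O_WRONLY | os.O_CREAT, 0o600)
        read_fd = os.open(path, os.O_RDONLY)
        try:
            return write_then_read(write_fd, read_fd, payload)
        finally:
            os.close(read_fd)
            os.close(write_fd)
```

Whether that uniformity is elegant or a missing type system is exactly the disagreement here: the caller has to know that the bytes are text, a struct, or an image, because the descriptor won't say.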

Unix is a lot like C. In the case you mention, and also in every other case.

It enforces a sort of Unix-like thinking, the idea of hierarchies and libraries and things calling ever-lower things, with God keeping everything in its proper place. As well as the undecipherable stupid names everything has. Also the ability to cut your throat by spelling something wrong or missing some punctuation. 3 kinds of quotes including BACKWARDS!? It’s a nifty thing but it’s an ugly hack!

Linux is like a Linux programmer. Who seem to mostly have personality disorders and be German. Too many chiefs, not enough Indians. Which is why the idea of a library that isn’t utterly compatible with its predecessor is even allowed to exist as a possibility, never mind shipping the bleeding thing. Even Microsoft have never fucked that one up. Or not since Windows 3.1 at least.

“Unix is a lot like C…”

As the British–as well as a lot of others–would say, “Not to put too fine a point on this, but…”

…might the fact that ‘C’ was created (and, yes, I know that it has its origins in ‘B’) in order to write the UNIX operating system have a slight something to do with this? Just asking.

Systemd–Progress through Complexity —

OCS Magazine, October 19, 2016, dedoimedo.

(not hard to find at all; prominently displayed in any search of “Systemd–Progress Through Complexity”)

Go a little further and read prominent Linux kernel developer Ted Ts’o’s take on the authors of ‘systemd’; and read about Linus Torvalds banning Kay Sievers permanently from performing any further commits to the Linux kernel.

The future of Linux is not what it used to be.

> The future of Linux is not what it used to be.

I am not sure. Only a few people “CAN” systemd; the rest of them fail to fix and boot it, so it’ll be replaced with something more reliable. Thanks for the article. It’s a commercial decision, from one (few?) corporation that wants total control, but UNIX has had several failed companies behind it, so the idea of UNIX in itself is timeless. I see this as a bump in the road, where “new” people need to improve themselves. Knowledge is power:

http://without-systemd.org/wiki/index.php/Linux_distributions_without_systemd

http://without-systemd.org/wiki/index.php/Debian_derivatives_which_are_now_systemd_encumbered

https://en.wikipedia.org/wiki/Systemd#Criticism

A user suffers with static IP and systemd; what is wrong with ifconfig compared to netctl / netcfg?

https://www.raspberrypi.org/forums/viewtopic.php?f=53&t=54080

“Arch Linux has deprecated ifconfig in favor of iproute2” ->

https://wiki.archlinux.org/index.php/Network_configuration#Network_management

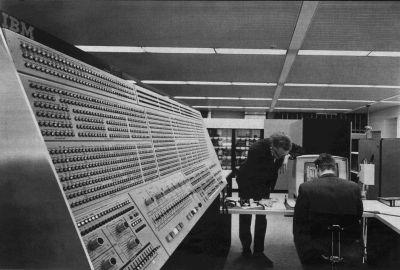

You could have used Wikipedia’s image of a PDP-7, since that was the first computer that Unix ran on.

https://upload.wikimedia.org/wikipedia/commons/thumb/5/52/Pdp7-oslo-2005.jpeg/450px-Pdp7-oslo-2005.jpeg

That’s a handsome computer, but the chair’s in the wrong place.

Not really. That’s where you RTFM

They didn’t need to RTFM…they were *writing* it :-)

ok

I am still impressed by UNIX, it’s so old but still feels effective and “modern”.

Wow, the people who created it in 1970 were really smart, and I thank them at Bell Labs and elsewhere for their fine work.

Microsoft is still copying some of the functions (can’t come up with an example right now).

I hope that systemd (=regedit) will sort itself out, it’s sad that they don’t follow the usual rules, such as “choose the least surprising way to do things”, “have sane defaults”.

“Those who don’t understand Unix are condemned to reinvent it, poorly.”

@Atle– …“choose the least surprising [simplest?] way to do things…”

“Complexity is not a goal. I don’t want to be remembered as an engineer of complex systems.”–David L. Parnas

@ jawnhenry ; least surprising [simplest?] way

a little note; it’s more subtle than that.

Windows is a fine example, they usually change the desktop. We now live in an icon age, in which everything readable should be replaced with a little picture vaguely resembling what it should do. I have to ask my friend every time I try to unmount a USB HDD when I borrow his computer. :)

– The systemd / sysV / init.d / rc.d is another mess Linux has. It has unique flavours, but when will it mature? Compare it with package tools, it’s mature now, nobody dares to mess with it. :)

– Replacing old programs is the new “fun”. Other name, same or extended function, other options. What problem are they really trying to solve?

– Networking; ifconfig, iproute2, and absolutely systemd’s version of “netctl / netcfg”.

You would really enjoy reading, and get a lot to think about, from

“Modern Software Development is Cancer”.