One of the fun things about old video games, besides their obvious nostalgia, is that some of the more popular games have been pried apart and tinkered with for years, leading to a lot of new “development” within the games. This often uncovers some hidden gems that gamers might not have had any knowledge of during the game’s heyday, like this coding oddity found in Final Fantasy 7 that illustrates a lot about how 32-bit processors do math.

The original PlayStation used a 32-bit RISC processor, and in a signed 32-bit integer the most significant bit indicates the sign. This means that if you have an integer with a value of 2,147,483,647 (01111111111111111111111111111111 in binary) and you add one, the value suddenly becomes -2,147,483,648, because the carry lands in the most significant bit, which marks the number as negative. In this situation, the integer is said to “overflow”. In Final Fantasy 7, if you can somehow get a character to deal 262,144 damage in one hit (much less than two billion, due to the way the game does damage calculations), the game has a little bit of a meltdown.
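To see the wraparound in action, here is a minimal sketch in Python. Python integers never overflow on their own, so the helper `wrap_int32` (a name made up for this example) masks the value down to what a signed 32-bit register would hold. It illustrates the arithmetic only, not the game's actual damage code.

```python
def wrap_int32(value):
    """Return the value a signed 32-bit two's-complement register would hold."""
    value &= 0xFFFFFFFF        # keep only the low 32 bits
    if value & 0x80000000:     # top bit set: the pattern reads as negative
        value -= 0x100000000   # subtract 2**32 to get the signed value
    return value

INT32_MAX = 2_147_483_647

print(format(INT32_MAX, "032b"))    # 0 followed by thirty-one 1s
print(wrap_int32(INT32_MAX + 1))    # -2147483648
```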

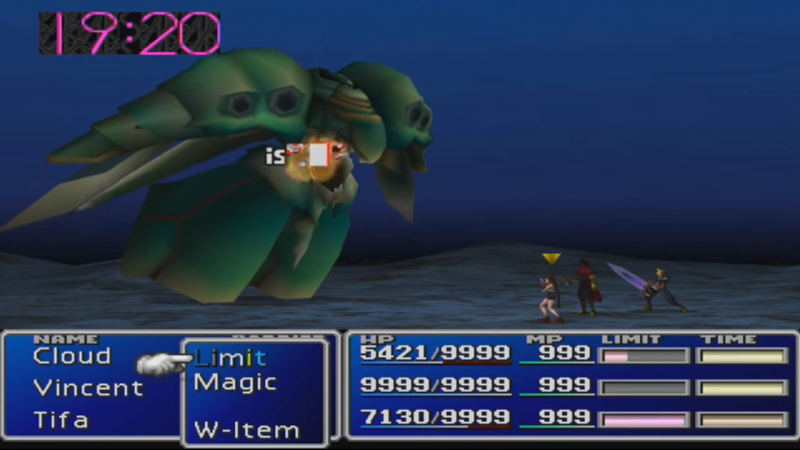

[4-8Productions] had to do a lot of work to show how this glitch can be exploited in the game as well. Usually damage in this game is limited to 9,999 but under certain configurations (admittedly obtained by using other exploits and tools available for FF7 like a savegame editor) two of the characters can deal more damage than this critical value, exposing the 32-bit processor’s weak spot.

Even though integer signing is a pretty basic concept for most of us, the video is definitely worth a watch, especially if you’re a fan of the classic game. Of course, Final Fantasy 7 isn’t the only classic that has been exploited and reverse-engineered to the extreme. You can use a Super Mario World level to implement a calculator now, too.

I doubt it is a processor weakness. The processor is doing what it does and the software just told it to do something dumb.

Is it really the software’s fault if there is no way of hitting this “issue” without external editing hacks?

You can do it without cheating. In the video he talks about how two characters can trigger the glitch if you get the right gear etc. For the other characters you need to cheat.

At least it’s not as bad as the major FF6 bug, where the damage reduction always uses magic defense, even for physical attacks, making normal defense useless…

Thankfully, the modding community has corrected many of the bugs in the game. Here is the version I’m playing:

https://www.romhacking.net/hacks/1386/

Of course it’s not a weakness, that is how it’s specified to work. However, overflow of signed variables has been a big source of security vulnerabilities in recent years, so it’s something every programmer should be aware of, and is not limited to games.

Of course it’s not a processor weakness, you can calculate any number on any processor, if it has the RAM to store the number. You just carry to the next byte / word. That’s why CPUs have carry flags.

In this case they didn’t bother, because they didn’t think there was a way of causing over 9,999 damage, which would fit into 14 bits. 262,144 is 256K, or 18 bits worth + 1. No idea what particular limit is being hit there, you’d have to look at the code.
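The bit counts above are easy to check with Python's built-in `int.bit_length()`. This only confirms the sizes of the numbers; it says nothing about which limit the game's code actually hits.

```python
# How many bits does each damage value need as an unsigned number?
for damage in (9_999, 262_143, 262_144):
    print(damage, damage.bit_length())

# 9,999 fits in 14 bits; 262,143 is the largest 18-bit value;
# 262,144 is exactly 2**18 (256K), so it needs a 19th bit.
```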

I let a lot of this stuff go over my head in school, but why doesn’t adding one to that value make negative zero?

For bit-wise adding, you do just like decimal, start on the right, add corresponding columns, and carry as necessary. Binary is cool because the carry can only ever be 1, which makes things simple to implement. The most basic component is a half-adder, which takes two bits and gives a one-bit result from adding them, plus a carry, which you carry to the next bit in a chain. The last bit just falls off the end (overflow).
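Here is a rough software model of that chain, as a sketch rather than a description of any real chip. One detail added here: every column after the first also has to absorb the incoming carry, so each stage is modelled as a "full adder" made from two half-adders. The function names are invented for the example.

```python
def half_adder(a, b):
    """Add two bits; return (sum_bit, carry_bit)."""
    return a ^ b, a & b

def full_adder(a, b, carry_in):
    """Add two bits plus an incoming carry, using two half-adders."""
    partial, carry1 = half_adder(a, b)
    total, carry2 = half_adder(partial, carry_in)
    return total, carry1 | carry2

def add_bits(x, y, width=4):
    """Ripple-carry add of two width-bit numbers; return (result, carry_out)."""
    result, carry = 0, 0
    for i in range(width):                      # start at the rightmost column
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result, carry                        # carry_out "falls off the end"

result, carry_out = add_bits(0b0100, 0b1111)
print(format(result, "04b"), carry_out)         # 0011 1
```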

Let’s use 4-bit integers. Say you have 0100 (4), and want to subtract 1 to get 0011 (3). Instead of having both add and subtract hardware, the CPU instead just has add hardware, so instead of subtracting 1, you rather add -1. If you look for a pattern which adds to 0100 to give 0011, that pattern is 1111 (note it will overflow), so let’s call that -1. 1110 is -2 (versus 0010 for 2), 1101 is -3, and so on to 1001 (-7). Note that 1001 + 0111 is exactly right to add together to 0, so things are consistent.
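The 4-bit patterns above can be checked with a few lines of Python. This is a sketch where `add4` throws away the overflow bit and `to_signed4` reads a pattern as a signed value; both names are made up for the example.

```python
def add4(a, b):
    """Add two 4-bit patterns and drop any overflow past the fourth bit."""
    return (a + b) & 0b1111

def to_signed4(bits):
    """Read a 4-bit pattern as a two's-complement signed number."""
    return bits - 16 if bits & 0b1000 else bits

print(format(add4(0b0100, 0b1111), "04b"))   # 0011: 4 + (-1) = 3
print(format(add4(0b1001, 0b0111), "04b"))   # 0000: -7 + 7 = 0

for pattern in (0b1111, 0b1110, 0b1101, 0b1001):
    print(format(pattern, "04b"), to_signed4(pattern))
```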

Importantly, this pattern also makes it easy to subtract 1 from something, like a counter. Adding/subtracting 1 is extremely common, so CPUs have hardware for that specific case. Adding 1 to 0000 is a simplified add, because you only have an input to add to the right digit, the rest are just carries. Subtracting 1 from 0000 is similar but backwards (inputs and outputs inverted relative to a 1-bit add). Subtracting 1 from 0000 gives 1111, which is consistent with the pattern I described above. Subtracting 1 again gives 1110, 1101, etc.

This is called 2s-complement arithmetic. It wasn’t so much invented and then computers built to calculate it; it’s more of a derivation of what kind of math computers built out of simple adders like to do. In the early days there was 1s-complement, which represented a negative number by inverting every bit of the positive one (so the high bit indicated negative), but it didn’t work as simply. For instance, 0001 + 1110 gives 1111, a “negative zero”, rather than 0000.
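A quick side-by-side of the two schemes on 4-bit values, as a sketch with made-up helper names. It runs both negations through the same plain adder and shows which one lands on 0000.

```python
MASK = 0b1111  # 4-bit values

def ones_negate(x):
    """1s-complement negation: invert every bit."""
    return ~x & MASK

def twos_negate(x):
    """2s-complement negation: invert every bit, then add 1."""
    return (~x + 1) & MASK

one = 0b0001
ones_sum = (one + ones_negate(one)) & MASK
twos_sum = (one + twos_negate(one)) & MASK

print(format(ones_negate(one), "04b"), format(ones_sum, "04b"))  # 1110 1111
print(format(twos_negate(one), "04b"), format(twos_sum, "04b"))  # 1111 0000
```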

Sorry for the tl;dr. I figured this out on an Apple ][ back in the day, and IT WAS SO AMAZING HOW IT ALL WORKED TOGETHER!

In simple arithmetic, like the binary adding on most CPUs, there is no negative zero.

Think of this a bit like those mechanical counters that count 0000 – 9999. If you add 1 to 9999 it goes round to 0000 again. Similarly if you start with 2, and subtract 4, it goes 0002, 0001, 0000, 9999, 9998.

2s complement is the same as that, except when you want to represent negative numbers you invert then add 1. Which is weird, but it makes everything add up correctly, without having to keep track of whether you’re adding positive or negative numbers and perhaps having to use separate adder circuits for each. It just happens to work.

Similarly using the same mechanical counter, -2 would be the same as 9998. In this case “invert” means “subtract from 9”, the same way inverting in binary is the same thing as “subtract from 1”. So subtract 0002 from 9999 gives 9997. Then add 1. 9998, or -2. So it also works in decimal!
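The decimal version can be tried out too. Below is a small sketch of a 4-digit counter where "invert" means subtracting each digit from 9; the names `tens_negate` and `counter_add` are invented for the example.

```python
DIGITS = 4
MODULUS = 10 ** DIGITS   # a 4-digit counter rolls over at 10000

def tens_negate(n):
    """Invert (subtract every digit from 9), then add 1."""
    inverted = (MODULUS - 1) - n      # 9999 - n flips each digit
    return (inverted + 1) % MODULUS

def counter_add(a, b):
    """Add on the counter, rolling over past 9999."""
    return (a + b) % MODULUS

print(f"{tens_negate(2):04d}")                  # 9998, standing in for -2
print(f"{counter_add(2, tens_negate(4)):04d}")  # 9998, i.e. 2 - 4 = -2
print(f"{counter_add(5, tens_negate(2)):04d}")  # 0003, i.e. 5 - 2 = 3
```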

That is, as long as your system rolls around from the maximum value to zero, and back again, which any arithmetic will if you only have a fixed number of digits: 4 decimal digits in this example, 32 binary ones in a PlayStation’s CPU.

Like Scott says, try it out with 4-bit binary numbers, see it working, then you’ll understand it. It’s pretty simple.

BTW it seems to me, that the “add 1” stage is to compensate for the innate “off by 1 error” of our counting system. Which is to say, that 0 itself, or 0000 or any other zero, is itself a number. So to count from -1 to 1 you go -1, 0, 1. Needing to add 2, not just 1.

The “off by 1 error” is commonly experienced in programming, particularly stuff like C where there aren’t really arrays, just calculations using an address offset mode. So the first element of an array is [0], rather than [1]. As you can see here, it’s not just a computer thing, more a counting thing. Because the first digit is 0.

Hence “invert (ie subtract from highest digit) then add one” is the method to get negative numbers to work in, I assume, any number base. I’m not gonna bother with odd ones like Pi or fractions or negative bases, because I just don’t care that much about stuff that doesn’t have a single real-world application that I know of. Even using bases other than 10 doesn’t crop up much in the world. In computers you get decimal, hex, binary, and maybe octal on some museum-piece with incandescent bulb displays.

That last paragraph was a deliberate trap, btw. Because the best way to get an answer isn’t to ask a question. It’s to venture a wrong opinion. I’d be interested in the real uses of weird number bases.

The “add 1” stage isn’t a fixup that the CPU does, it’s a fixup the humans do to understand what the CPU is doing. In the example, the CPU never subtracts 2 from 9, it subtracts 2 from 0, yielding 8 plus a borrow, which subtracts from the next 0 yielding 9 plus a borrow, etc, until you get 9998.
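Spelled out digit by digit, that process looks roughly like the sketch below. Digits are listed least significant first, and the value passed from column to column is called a borrow here, which is the usual name for a carry in subtraction. The function name is made up for the example.

```python
def subtract_digits(a_digits, b_digits, base=10):
    """Column-by-column subtraction; digit lists are least significant first.

    Returns (result_digits, final_borrow).
    """
    result = []
    borrow = 0
    for a, b in zip(a_digits, b_digits):
        digit = a - b - borrow
        if digit < 0:          # not enough in this column:
            digit += base      # wrap around and borrow from the next one
            borrow = 1
        else:
            borrow = 0
        result.append(digit)
    return result, borrow

# 0000 - 0002 on a 4-digit counter
digits, borrow = subtract_digits([0, 0, 0, 0], [2, 0, 0, 0])
print("".join(str(d) for d in reversed(digits)), borrow)   # 9998 1
```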

In the original Microsoft Windows Solitaire, it stored the cash on hand in a 32-bit number, so if you selected ‘deal’ over 630 times, it would underflow past -$32,768, to $32,767.

The [-32768, 32767] range isn’t 32 bits.

16 bit int.
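The numbers line up with a signed 16-bit counter. Here is a sketch that assumes each deal costs $52 (an assumption on my part, the comment above doesn't give the price) and that the player never wins anything back; `wrap_int16` is a made-up helper that mimics 16-bit wraparound.

```python
def wrap_int16(value):
    """Return the value a signed 16-bit two's-complement variable would hold."""
    value &= 0xFFFF
    return value - 0x10000 if value & 0x8000 else value

COST_PER_DEAL = 52   # assumed price of one deal, not stated in the thread

cash = 0
deals = 0
while cash <= 0:                       # keep dealing until the total flips positive
    cash = wrap_int16(cash - COST_PER_DEAL)
    deals += 1

print(deals, cash)                     # 631 32724
```

Under those assumptions, 630 deals leave the total at -$32,760, and the next deal pushes it past -$32,768 and around to a large positive number.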

Sounds a bit like a dev might have considered the possibility of HP wrapping and written a test for it. One thing that puzzles me a little, why did they use a signed int rather than an unsigned int? I’m going to presume that the compiler default was that a straight int was signed…

Back when dealing with 16-bit integers, you often had overflow events, which gave you a signal of “Hey, something’s up, here”. 32768 is a human-scale number. With 32-bit integers, most programs simply don’t have it happen enough to really notice. Like if in 3 weeks of play-testing this happened once, would anyone dig into it? Keeping in mind that there’s likely an RNG component, and that other bugs probably happened 300 times during the same testing period.

Even with modern software projects, the startup argument over whether to use unsigned or signed ints is intense, almost as intense as the arguments about indentation rules. The answer which is obvious for your newly-written code isn’t so obvious when you interface that code with existing libraries and systems. So, IMHO, it’s not an easy thing to answer even today.