We live in the future. You can ask your personal assistant to turn on the lights, plan your commute, or set your thermostat. If they ever give Alexa sudo, she might be able to make a sandwich. However, you almost always see these devices sending data to some remote server in the sky to do the analysis and processing. There are some advantages to that, but it isn’t great for privacy as several recent news stories have pointed out. It also doesn’t work well when the network or those remote servers crash — another recent news story. But what’s the alternative? If Picovoice has its way, you’ll just do all the speech recognition offline.

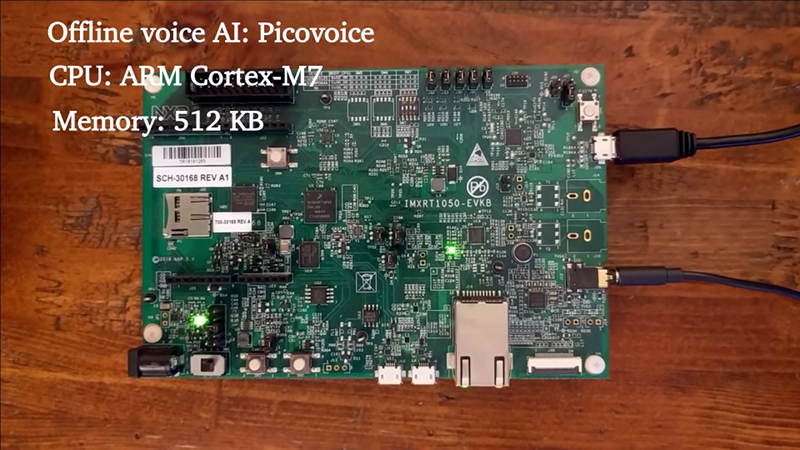

Have a look at the video below. There’s an ARM board not too different from several we have lying around in the Hackaday bunker. It is listening for a wake-up phrase and processing audio commands. All in about 512K of memory. The libraries are apparently quite portable and the Linux and Raspberry Pi versions are already open source. The company says it will make other platforms available in upcoming releases and claims to support ARM Cortex-M, Cortex-A, Android, Mac, Windows, and WebAssembly.

We imagine that’s true because you can see the ARM version working in the video and there are browser-based demos on their website. They say the code is in ANSI C and uses fixed point math to do all the neural network magic, so the code should be portable.
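To give a feel for what fixed point math means in a neural network, here is a minimal sketch of one integer-only neuron. This is not Picovoice's code: the Q15 format (15 fractional bits) and the helper names `to_fixed`, `to_float`, `fixed_dot`, and `fixed_relu` are all assumptions made for illustration.

```python
# Illustrative only: Q15 fixed point (15 fractional bits) is an assumed format,
# not something the vendor has documented.
Q = 15
SCALE = 1 << Q  # 32768


def to_fixed(x: float) -> int:
    """Convert a float in roughly [-1, 1) to a Q15 integer, saturating at the int16 range."""
    v = int(round(x * SCALE))
    return max(-SCALE, min(SCALE - 1, v))


def to_float(v: int) -> float:
    """Convert a Q15 integer back to a float for inspection."""
    return v / SCALE


def fixed_dot(weights, inputs, bias=0):
    """Integer-only dot product of two Q15 vectors plus a Q15 bias.

    Each Q15 * Q15 product is a Q30 value, so the accumulator is kept in Q30
    and shifted back down to Q15 once at the end.
    """
    acc = bias << Q  # promote the Q15 bias to Q30
    for w, x in zip(weights, inputs):
        acc += w * x
    acc >>= Q  # back to Q15
    return max(-SCALE, min(SCALE - 1, acc))  # saturate to the int16 range


def fixed_relu(v: int) -> int:
    """ReLU needs no multiplication at all in fixed point."""
    return v if v > 0 else 0


weights = [to_fixed(0.5), to_fixed(-0.25)]
inputs = [to_fixed(0.5), to_fixed(0.5)]
activation = fixed_relu(fixed_dot(weights, inputs))
print(to_float(activation))
```

Everything inside `fixed_dot` is integer multiplies, adds, and shifts, which is why this style of code ports easily to small microcontrollers that lack a floating point unit.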

The libraries on GitHub include:

- Rhino – Speech to intent (in other words, do something in response to a spoken command)

- Porcupine – Wake word detection

- Cheetah – Speech to text

The fact that this is open source is exciting and we’ll be interested to see what you do with the technology. If you build a voice-controlled beer brewing rig — or anything else — be sure to let us know on the tip line so we can share it with everyone.

Yes it is. Now for the other part of the clod solution. Data mined enough that one can go beyond just dictionary lookup. In other words the “I” in AI.

“Clod” – Admins… Do you think we could get some “I” round here in the form of an EDIT button?

Nope.. thought not. };¬)

I don’t believe that was a typo.

Can’t tell if trolling or not.

It’s of course the huge dataset, that companies like google/facebook/etc get for free, to train their models that makes their face-recognition/voice-recognition/etc so cutting edge. Hopefully these trained models will be made publicly available at some point.

It’s a shame it’s not open source – all the github code is just a wrapper round a proprietary shared library. It’s not very useful to me if I want to do anything more than a smart lamp or coffee maker.

Well we have open-streetmap, and a bunch of other large data set open source projects, so why not open speech recognition?

There is such a project: https://voice.mozilla.org/

You would need a few phonetic pangrams (in Mandarin Chinese, Spanish, English, Hindi, Arabic, Portuguese, Russian, Bengali, Japanese, Punjabi, …) read out by hundreds to millions of individuals around the world of various ethnicities and language abilities. That is going to take a lot of storage (and there will be widely varying quality, timing and background noise distributions in each contributed recording). Then you need processing power to use all that collected data to train individual neural networks to recognize each individual phoneme. And then you would need a database to translate from strings of phonemes into individual words, slang or colloquialisms.

I would see it as a more difficult project to open source than most.

For all my beefs with Mozilla, it still qualifies as “open source”. The Mozilla Foundation is working on this, it seems:

https://blog.mozilla.org/blog/2017/11/29/announcing-the-initial-release-of-mozillas-open-source-speech-recognition-model-and-voice-dataset/

Remember when, as an answer to Linux, some bigwig at Microsoft (was it Gates himself) said, well, an operating system isn’t that difficult, but free software could never manage such a complex thing as a browser?

Never underestimate free.

Excellent data set, thank you. I think that I find the linked project far more interesting than the one in the article.

there are a few open source projects

https://cmusphinx.github.io/

there is a training tool, once you get it working, it’s good for

“move forward 10 metres”

“turn right 180”

that kind of thing
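For commands like the ones quoted above, the step after recognition can be very small. The sketch below is not Sphinx code and calls no Sphinx API: it assumes the recognizer has already produced a text string with numbers written as digits, and the `COMMAND` pattern, the `VALID` table, and the `parse_command` function are hypothetical names made up for illustration.

```python
import re
from typing import Optional

# Hypothetical command grammar:
#   move forward|back [N] [metres]
#   turn left|right [N] [degrees]
COMMAND = re.compile(
    r"^(?P<verb>move|turn)\s+(?P<direction>forward|back|left|right)"
    r"(?:\s+(?P<amount>\d+))?"
    r"(?:\s+(?P<unit>metres?|meters?|degrees?))?$"
)

# Which directions make sense for which verb.
VALID = {"move": {"forward", "back"}, "turn": {"left", "right"}}


def parse_command(text: str) -> Optional[dict]:
    """Turn recognized text into a small intent dict, or None if it is not a known command."""
    m = COMMAND.match(text.strip().lower())
    if m is None:
        return None
    verb, direction = m["verb"], m["direction"]
    if direction not in VALID[verb]:
        return None  # e.g. "move left" is not part of this toy grammar
    amount = int(m["amount"]) if m["amount"] else None
    return {"intent": verb, "direction": direction, "amount": amount}


print(parse_command("move forward 10 metres"))
print(parse_command("turn right 180"))
print(parse_command("make a sandwich"))
```

Keeping the vocabulary this narrow is what makes a small recognizer practical: it only has to tell a handful of phrases apart, rather than handle open dictation.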

It works for a lot more than that, even continuous speaker-independent dictation. The problem is the language and acoustic models – in order to train those you need a TON of training data. So if the shipped models don’t fit your purpose (not everyone wants to do telephone voice menus) you are very much screwed.

However, this is the same with any similar tech – whether it uses deep learning or hidden Markov models (Sphinx). That’s why open source, on device systems are so rare – only the large companies with the deepest pockets can afford to train the models. In some cases they make them available, most often they don’t.

The code for a well performing system has been around for many years (OS/2 4.0 Warp in the 90s shipped with a very decent one, Dragon Dictate has been around for years too), but without the required models it is useless.

Actually, if you make use of Pocketsphinx grammar models you can create some very accurate and complex dialogs, including branching dialogs and optional wordings. I use it for my own custom voice assistant’s transcriptions every day. But I agree the size of the data set restricts even higher quality.

try snips.ai, that’s even better

How is Snips any better than the subject of this article here? It’s just a closed source generated model. I used it a few months back and was completely unimpressed. I ended up using Baidu’s Snowboy hotword for my own assistant as it lets you create your own wake word or voice assistant name while being more extendable.

with snips.ai you can create your own personal hotword, maybe you just missed that in the documentation ;)

Finally!

There were systems for X-10 home control in the 80’s that did voice recognition using various home micro computers – just not very well. The computers just weren’t fast enough to digitally sample the voice and do realtime compare to trained word and phrase data. The lack of memory and storage space limited the size of the training data.

Offline speech recognition was also a standard feature in Mac OS 7.5 in ’94… It was less awful than the 80’s equivalent, if only by virtue of being able to actually record sound at a decent sample rate, but it was still pretty trash. What do you expect from a line of computers that ran at 80 MHz at the top of the line?

I just want to point out that 80 MHz is how fast the Cray-1 supercomputer ran.

I’m here pointing out that Dragon Dictate was a consumer product, for dictating to word processors, back in the 486 days. My Mum had a copy, I could ask her how good it was. I believe Dragon started as an independent, and were bought by IBM, probably how come it ended up in OS/2.

IIRC it could do continuous speech with an ordinary standard English vocabulary. It learned the user’s voice as it went, getting better with more experience, but was usable more or less out of the box.

Whatever happened to it? They seemed pretty far ahead of the game. Would be odd to find out they died in obscurity.

Dragon is still around. I think they are struggling to stay relevant with Amazon, Apple, and Google all doing quality speech recognition. Most people don’t seem to care about (or understand) the benefit of offline speech recognition and why they should have to buy an expensive program for it when it is free on their phones. If I were them I would lean heavily into the automotive market before automakers finally give up and offload voice commands to google, et al.

>The fact that this is open source is exciting

Except it’s not…

They only distribute binary BLOBS under a (modified) Apache License

Oh damn. Here goes my hope of running THAT on ReSpeaker :-/

How does snips.ai compare?

with the right training snips can be used as an alternative to online

If Alexa gets sudo, you are going to make her sandwiches.

Or…

She will make you (into) a sandwich!

I, for one, welcome our new digital assistant overlords.

Or perhaps one day, the AIs will be calling us their “Meat Assistants”.

When my dad was making just about the first speech formant synthesizer in the US for the military (because, reduced bitrate and encryption long before microprocessors…) – he found, among other things and along with other workers, that it was actually easier in most cases to tell the difference between speakers than to transcribe any one of them. This is really important to understand (and I worked in speech recog for awhile myself for a startup company that built transcribers for medical purposes – we trained our model per-speaker to make it viable).

It’s FAR, FAR easier to understand a particular individual than “just anyone” and that is the reason the big services – Google and Amazon and friends – need this huge training set – and even then fail on different accents in the same language! Different people slur different things, run different syllables together (no one really pronounces all that they say – the listener’s brain fills in a lot as you can prove with detailed listening).

Thus things like IBM’s old ViaVoice or maybe Dragon could be trained to do really well on one speaker, or a few if they were somehow told which person was speaking.

The real reason to put stuff like this on the internet is to be able to do facebook-like data mining, to be an intermediary who somehow collects rent. There’s really very little utility (to me) in any of these services being able to understand what some random stranger says in my home. But a good bit of security risk as has been pointed out, humorously and otherwise – I think you’re better off if your assistant only understands you, and maybe your immediate family. This is basically the same class of security threat that the bad implementation of IoT is. Someone right now might provide a “free” service you can get to on the internet – but they also get all your data, and may someday decide to charge you rent to use your own house – or simply go out of business. It’s a bad deal for the user.

Of course, the XKCD: https://xkcd.com/1807/

Other tales of people making an Alexa play loud music while the tenant was out by shouting through the door – or the authorities demanding recordings should be chilling.

Alarm bells: attempt to appear open source by having stubs up on github, number of places they take the opportunity to mention “deep learning”.

https://mycroft.ai/ also provides an open hard- and software solution to this. Still partly in the cloud, but I can imagine moving more and more over to the devices as the project evolves. Especially their usage of a Xilinx Ultrascale+ MPSoC with quite a bit of programmable logic one can use for neural networks and processing.

I just went through figuring out how to get this running on a Pi. It’s not terribly difficult but muddling through it all took more time than it should have. I made a blog article to make it easier for the next person:

https://bloggerbrothers.com/2019/02/20/hot-wording-on-a-raspberry-pi-computer-do-my-bidding/

Sorry – should have been more accurate – I have the hot word portion working.