[Erik Knutsson] is stuck inside with a bunch of robot parts, and we know what lies down that path. His Open Personal Assistant Robotic Platform aims to help out around the house with things like filling pet food bowls, but for now, he is taking one step at a time and working out the bugs before adding new features. Wise.

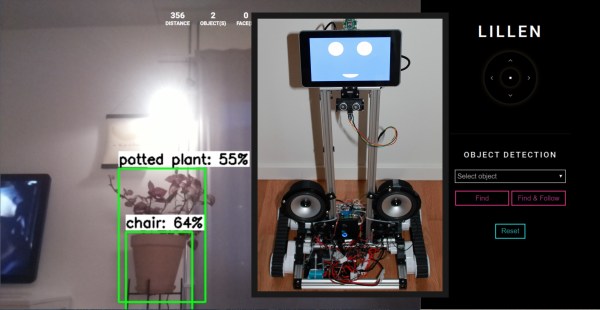

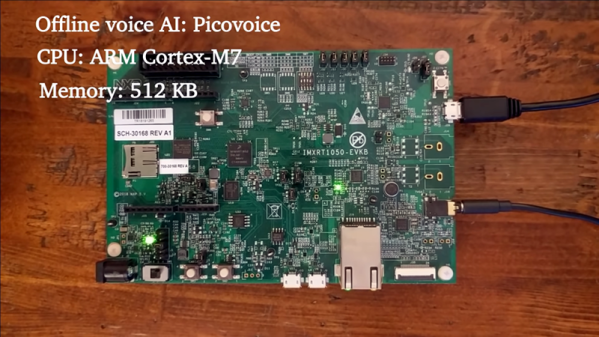

The build started with a narrow base, an underpowered RasPi, and a quiet speaker, but those were upgraded in turn. Right now, it is a personal assistant on wheels. Alexa was the first contender, but Mycroft is in the spotlight because it is more versatile. At first, the robot was driven from a humble web server with a D-pad, but now it leverages a distance sensor and vision, and can even follow you on a voice command.

The screen up top gives it a personable look, but it is slated to become a display for everything you’d want to see on your robot assistant, like weather, recipes, or a video chat that can walk around with you. [Erik] would like to make something that assists the elderly who might need help with chores and helps connect people who are stuck inside like him.

Expressive robots have long captured our attention, and we’re nuts for privacy-centric personal assistants.