Videogames have always existed in a weird place between high art and cutting-edge technology. Their consumer-facing nature has always forced them to be both eye-catching and affordable, while remaining tasteful enough to sit on retail shelves (both physical and digital). Running in real-time is a necessity, so it’s not as if game creators are able to pre-render the incredibly complex visuals found in feature films. These pieces of software constantly ride the line between exploiting the hardware of the future while supporting the past where their true user base resides. Each pixel formed and every polygon assembled comes at the cost of a finite supply of floating point operations today’s pieces of silicon can deliver. Compromises must be made.

One of the first areas in games to fall victim to compromise is often environmental model textures. Maintaining a viable framerate is paramount to a game’s playability, and elements of the background can end up getting pushed to “the background”. The resulting environments look somewhat blurrier than they would have if artists had been given more time, or more computing resources, to optimize their creations. But what if you could update that ten-year-old game to take advantage of today’s processing capabilities and screen resolutions?

NVIDIA is currently using artificial intelligence to revise textures in many classic videogames to bring them up to spec with today’s monitors. Their neural network is able to fundamentally alter how a game looks without any human intervention. Is this a good thing?

“So you take this neural network, you give it a whole bunch of examples, you tell it what is the input and what is the exact expected output; and you give it a chance to try, and try, and try again trillions and trillions of times on a super computer. Eventually it trains, and does this amazing thing.”

– Jensen Huang, CEO of NVIDIA

Artificial Intelligence, Revisionist History

We all stopped being able to count on Moore’s Law as transistors pushed towards the 10nm scale, and NVIDIA knew this better than most. Alongside the announcement of their RTX series of GPUs, the company stated that they would leverage neural network technology to boost the overall performance of their cards. By feeding this neural network thousands of game screenshots taken at a higher resolution than the GPU can render natively, their AI model is able to learn how to display the higher quality imagery with no change to the on-board processing power. Their press release called this process “AI Up-Res”.
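The training idea described here (show the model a low-resolution input together with the expected high-resolution output, then let it retry until the error shrinks) can be illustrated with a toy. The sketch below is not NVIDIA’s model or data. It assumes one-dimensional rows of made-up “pixels”, a tiny 2x3 linear filter in place of a neural network, and hypothetical helper names such as `make_pair` and `train`.

```python
import random

# Baseline filter: repeat each low-res sample twice (nearest-neighbour).
NEAREST = [[0.0, 1.0, 0.0], [0.0, 1.0, 0.0]]

def downsample(hi):
    """Average neighbouring pairs to make the low-res copy of a row."""
    return [(hi[i] + hi[i + 1]) / 2 for i in range(0, len(hi), 2)]

def make_pair(rng, n_lo=16):
    """Return (low_res, high_res) for one smooth random row of 'pixels'."""
    hi = [rng.random()]
    for _ in range(2 * n_lo - 1):
        hi.append(hi[-1] + rng.uniform(-0.1, 0.1))
    return downsample(hi), hi

def features(lo, i):
    """Low-res sample i with its left and right neighbours (clamped at edges)."""
    return (lo[max(i - 1, 0)], lo[i], lo[min(i + 1, len(lo) - 1)])

def upscale(weights, lo):
    """Predict two high-res samples for every low-res sample."""
    out = []
    for i in range(len(lo)):
        x = features(lo, i)
        for row in weights:
            out.append(sum(w * v for w, v in zip(row, x)))
    return out

def mean_squared_error(weights, pairs):
    """Average squared difference between predictions and the true rows."""
    total, count = 0.0, 0
    for lo, hi in pairs:
        for p, t in zip(upscale(weights, lo), hi):
            total += (p - t) ** 2
            count += 1
    return total / count

def train(pairs, steps=30000, lr=0.05, seed=0):
    """Stochastic gradient descent: one random sample per step."""
    rng = random.Random(seed)
    weights = [row[:] for row in NEAREST]  # start from the baseline
    for _ in range(steps):
        lo, hi = rng.choice(pairs)
        i = rng.randrange(len(lo))
        x = features(lo, i)
        for k, row in enumerate(weights):
            err = sum(w * v for w, v in zip(row, x)) - hi[2 * i + k]
            for j in range(3):
                row[j] -= lr * err * x[j]  # gradient of 0.5 * err ** 2
    return weights

if __name__ == "__main__":
    data_rng = random.Random(1)
    training_pairs = [make_pair(data_rng) for _ in range(300)]
    learned = train(training_pairs)
    print("baseline error:", mean_squared_error(NEAREST, training_pairs))
    print("learned error: ", mean_squared_error(learned, training_pairs))
```

The filter only ever learns to reproduce whatever the high-resolution examples contain. Nothing in the loop knows what the row is supposed to depict.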

AI Up-Res is essentially a hands-off approach to increasing the overall resolution of model textures in games … which is exactly the problem. The traditional method of increasing a game’s resolution was to port the game to a newer, more powerful platform and have digital artists create new textures. Regardless of which development team performs the update, there is a back-and-forth approval process where people intimately familiar with the game make decisions regarding its artistic direction. These types of projects additionally serve as proving grounds for up-and-coming developers who could go on to lead future creative projects.

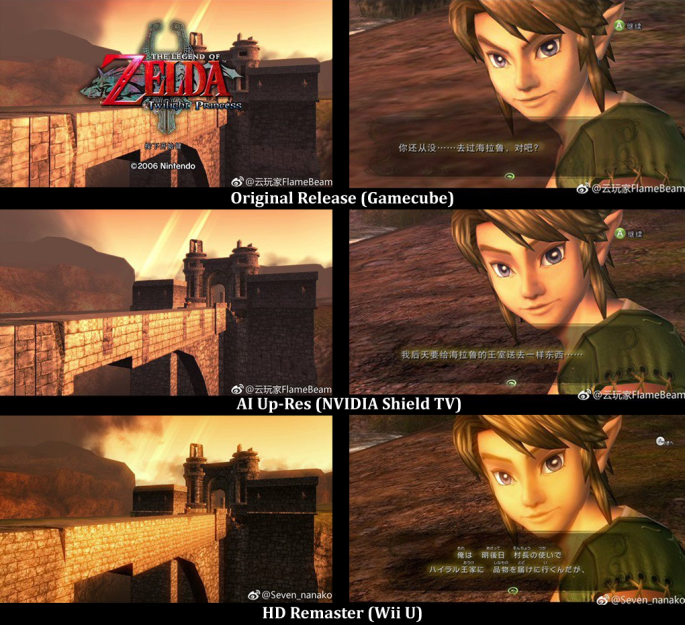

A great example of this process is The Legend of Zelda: Twilight Princess, which has seen multiple releases in recent years. The original game was created for the Nintendo GameCube, which ran at a 480i resolution, at a time before high definition televisions had reached mass market adoption. A decade after the original, Nintendo commissioned Tantalus Media to create a port that would run the game at 1080p resolution on the Wii U console.

Each step in this remastering process was signed off by the original game’s director, Eiji Aonuma, and required constant communication to ensure the artistic intent behind every texture in the game was preserved. Nintendo also recently made the 2006 original available on NVIDIA’s Shield TV platform in China, which employs the AI Up-Res technology. So here we get to see how the AI stacks up against the team of humans.

Zelda makes a great testing ground for the new technology, as there are Legend of Zelda fans out there who care more deeply for Link than their extended family members. These same people carry with them a great deal of nostalgia that is only satiated by replaying these classic games unaltered. So how does the AI stack up? The results of each approach can be seen in the screenshots and video below.

Connected Consoles Disconnected From The Heart

This all comes at a time when the entire videogame industry is contemplating a switch to the cloud computing model. The concept potentially opens the door to true parity amongst all devices, but its requirement of constant connectivity makes every game an online-only experience. If anything has been learned from the lifespans of online-only games, like World of Warcraft or Fortnite, it is that everything a player sees is subject to change. The 1.0 releases of those two games hardly resemble what they have gone on to become. Online-only games are continually under revision, for better or worse, and do not allow players to revisit them in the state they remember. It wouldn’t take much for someone to envision a future fraught with multiple “Berenstein vs. Berenstain Bears” type conspiracies.

But we’re talking about revisiting the classics here. We certainly don’t prefer the AI textures. The “improved” textures generated by a neural network are larger than the originals they replace, but they don’t really add anything new or artistic in those extra pixels. Stochastic gradient descent is not a method that can measure beauty, and it takes what is a purely subjective pursuit and thrusts it into an ill-fitting exercise in objectivity. NVIDIA are not the only ones doing it, because a similar process has been used in an open-source capacity with Doom (1993), but if no one seeks to preserve a game’s original vision we are destined to forget what made the game so special to begin with.
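To make the “exercise in objectivity” concrete, here is a minimal sketch of the kind of score an optimizer can actually minimize. The article does not say which loss NVIDIA uses, so mean squared error and PSNR are assumed here purely as common stand-ins, and the pixel values are invented for illustration.

```python
import math

def mean_squared_error(reference, candidate):
    """Average squared per-pixel difference between two equally sized images."""
    if len(reference) != len(candidate):
        raise ValueError("images must have the same number of pixels")
    return sum((r - c) ** 2 for r, c in zip(reference, candidate)) / len(reference)

def psnr(reference, candidate, peak=255):
    """Peak signal-to-noise ratio in decibels (higher means numerically closer)."""
    mse = mean_squared_error(reference, candidate)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(peak ** 2 / mse)

if __name__ == "__main__":
    reference = [10, 128, 250, 64]   # made-up grayscale pixels
    brightened = [12, 130, 252, 66]  # every pixel nudged by 2
    one_defect = [10, 128, 250, 68]  # a single pixel off by 4

    # Both candidates have a mean squared error of 4, so they score the same
    # even though the errors are of very different kinds.
    print(psnr(reference, brightened))
    print(psnr(reference, one_defect))
```

A uniform brightness shift and a single damaged pixel receive the same score here. The number says how far the pixels are from a reference, not whether the result looks right.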

Hmm, hadn’t heard of the Berenstein conspiracy, but Berenstain seems to make no sense to my memory.

Perhaps it’s as how there is actually no “Ford Perfect” in the Hitchhiker’s Guide to the Galaxy, even though I read him in like that until reading a commentary that said he was actually named “Prefect” after a car.

I had thought it was spelled “Bernstein” too, but I never paid very close attention, have second rate spelling skills, and figured the name was either Nordic or Germanic.

Bernstein is the German word for amber. “Stein” simply translates to stone. But since this is about bears, the first half should have been Baeren in German (and Beeren if it was about berries). And no, beers’ stone is not a plausible variant, as that translates to Bierenstein, which by the way might be overheard as kidney stone.

It’s Fnord Prefnect.

Fjord Redneck

I’ve seen this same kind of idea used to make HD texture packs for older games. So it’s not running while you’re playing the game, only once to generate the textures and background images. https://github.com/xinntao/ESRGAN It’s very cool.

I am a little worried that people are going to start seeing GANs used to create and modify pictures and think somehow they are showing real things. It’s just a computer creatively modifying an image to make it look better. It’s not CSI, you can’t zoom into a door knob and read a license plate in the reflection.

“It’s not CSI, you can’t zoom into a door knob and read a license plate in the reflection.”

The trick to do that is to say “enhance” before clicking a button. ;)

Also note these very important rules of enhancing:

1. You tell “enhance” to another operator. You never do it yourself.

2. The operator then uses the keyboard to select the area of interest. Never use a mouse.

Don’t forget all the clicks, whirs and beep noises of old hard disks and floppy disks. It’s essential, otherwise a CSI computer doesn’t work!

Then another flunky suggests building a UI in visual basic to trace the IP address, which turns out to be 192.168.256.000 for legal reasons.

You can with the appropriate analog camera and film negatives.

Sure you can do it with digital cams too, you just need to have the appropriate one ;)

I never understand why they do not use those nice 8k RED cams as security cameras instead of those ugly B/W VGA ones you can see all the time.

Because they are horribly expensive and lack good low light performance, which is essential to a security camera. The other problem is that the data stream is extremely large. Unless you’re the US government, chances are the costs would put most companies out of business.

Still you can’t – you’re still limited with a grainularity of the negatives.

The map is not the terrain. And never has been. And with this technology, maybe it never will be.

More to the point of the story though – I think what NVIDIA is doing is certainly interesting. There’s definitively an original, then something that we know has been altered. Online games that change all the time in form, though, seem like a different thing altogether to me. Good? Bad? Who knows.

Probably the future though – same with more serious software, like various CAD packages for example. Software companies hope to have improved things (I assume), but at some point the cost of learning new features would outweigh the benefits – something that may not be considered as much as it should be.

As someone who loves Zelda, I’m always happy when they port old games to the more current systems. However, those three screenshots don’t really look that different to me.

There’s something very fishy about the Zelda comparisons, actually… Look at the lacing on Link’s tunic. The “AI Up-Res” one has the larger lacing holes from the Wii U version, not the small ones from the Gamecube version. Something’s fishy here.

The brick texture on the bridge is from the Gamecube version, but Link’s tunic is from the Wii U version.

First thing I noticed too… They should focus on a non-Nintendo game anyway. I think the best thing that Nintendo does is ignore graphics quality and make good games that ‘click’ with the graphics that are available. I just went back and beat OoT, and the blocky graphics didn’t bother me at all. I also got a PlayStation Classic, and after trying each game, I doubt I’ll ever turn it on again.

Remember that the AI has been trained with another image set, if there’s already a higher resolution image set available it’s the perfect thing to use.

I actually find the Wii U version most displeasing. It’s got too much bloom. Link’s face is glowing like he’s radioactive.

I’d be more impressed if they were able to reliably reverse normal mapping which reduces the detail of 3d meshes by applying textures.

Or better yet, increased the polygon count so that Link’s tunic looked like it was made of cloth instead of folded on a press brake.

https://youtu.be/00gAbgBu8R4

It was hand wrought from “green steel” plants! It’s armour – how else can he take so many hits without dying? They carefully cut down and cut up those indestructible green plants that are sword proof, and somehow use the plates for his tunic.

What? It makes more sense than most of the game!

As an old gamer (emphasis on “old”), I see this as something with mixed potential. Some will like it, some won’t. Maybe it would be good if it was left as a user selectable option.

I tend to take the mantra that flashy graphics aren’t going to change anything… It was the gamePLAY that made it fun. As long as the mechanics of the game don’t change aesthetics don’t matter much to me, make it look pretty if you want but a crap game is still a crap game.

On the positive side, this is attracting some of the younger generation to pick up the classics and try them out, which is a great thing in my book.

Maybe enhanced graphics will improve the game “Custer’s Revenge”…

https://en.wikipedia.org/wiki/Custer%27s_Revenge

Not sure if it’s me but it doesn’t look much different at all. Could be the low resolution of Nvidia’s video…

Just apply Nvidia’s AI superres to the Nvidia video.

If you look at the original Doom and one of the later variations, the graphics definitely do not compare.

Naturally, companies want their games to look as realistic as possible. Granted, the old graphics were clunky, blocky and were the absolute limits of the graphics hardware of the time, the push to make games as realistic as possible goes on.

This isn’t only happening in the games industry. They have dolls now that look and feel and act like newborn babies.

Look at the new Star Trek VR game as another example. While the graphics aren’t there yet as far as being indistinguishable from reality, that time is coming. They have these short throw laser TV devices. I’ve only started looking into them, but the gist is, you place them a couple of inches from a wall, and you get a 120 inch video display.

Who knows? In 5 or 10 years, we may have Star Trek’s holo emitters and a crude version of a holo diode.

The weather channel did some video tests (probably can find them on youtube) of showing a person in full 3D that was in the shot, but wasn’t actually in the shot, and you couldn’t tell the difference. They’re now having some movies where you can see a scene from different angles. For decades, my father worked for NBC, and I remember him telling me that digital TV was coming long before the switch in 2009. Now that TV is digital, the more bandwidth that can be dedicated to the visual experience, the more realistic that experience is. Another example is Google Earth’s streetview feature. You can “stand” in an intersection and have an almost 360 degree view. I hope I can experience a holodeck in my lifetime.

Lots of bandwidth needed for those Pov-Ray transmissions. ;-)

… Early DTV transmissions were in 1998 and Hauppauge had a WinTV-D card out by at least 2000.

I find it worrying. There’s already, clearly, many people who can’t tell the difference between real life and computer simulations, yet in another 5 years it’ll be as real as anything filmed. Already the world is falling apart.

Shouldn’t have used Link for the article. Mario and Luigi are going to look like this: https://en.wikipedia.org/wiki/Super_Mario_Bros._(film)#/media/File:SMB_Movie_Poster.jpg

I guess I fail to see the problem in the given context. In the comparison image with the gamecube, nvidia shield, and Wii U; It appears, to me at least, that the nvidia shield is more true to the original. It just looks sharper and has more contrast, which I guess for some could be less desirable. The Wii U version looks much more blurry/soft and washed out than the gamecube and shield versions. Another glaring issue is the fact that the bridge on the Wii U looks like it has a greater number of smaller stones in it. The Wii U looks to be the most guilty of the “revisionist history”.

Can’t wait to upgrade the graphics of my Pong console in real-time. With Tetris also this might be a *game changer*.

Then my whole digital photos library. Oh and all archive videos from the past.

The past won’t anymore be like it will be.

Brave new upgraded world !

As long as it is not able to re-texture Prince of Persia 1 and 2 from the MsDos era in real time while playing, this tech is far from perfect.

If Nvidia wants to do something really useful to preserve old games, it might as well start with supporting nearest neighbor upscaling for old arcade games. Or use their machine learning algos to do just that if marketing department insists on fancy words…
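For reference, the nearest neighbor upscaling mentioned in the comment above needs no machine learning at all. A minimal sketch, assuming an image stored as a plain list of rows and an integer scale factor (the function name is hypothetical):

```python
def upscale_nearest(image, factor):
    """Repeat every pixel `factor` times horizontally and vertically."""
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    result = []
    for row in image:
        # Stretch the row horizontally by repeating each pixel.
        wide_row = [pixel for pixel in row for _ in range(factor)]
        # Stretch vertically by emitting independent copies of the wide row.
        for _ in range(factor):
            result.append(list(wide_row))
    return result

if __name__ == "__main__":
    sprite = [[1, 2],
              [3, 4]]
    for row in upscale_nearest(sprite, 2):
        print(row)
```

Every output pixel is an exact copy of an input pixel, so hard sprite edges stay hard and no new colors are introduced.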

Looks like a minor texture upgrade.

I’ve seen Quake and Doom re-textures make more of a difference, and those require no specific video card.

Hackaday I love you, but you HAVE to get the tech details right…

Zelda TP was a GC game ported to the Wii (a piece of cake given the hardware). Both versions can and will do 480p and 480i. You had to have a special cable for the GameCube, and not every GameCube had the port to use it. The cable was expensive because it had hardware in it.

This might be missing the point a little but not starting from 480i

and dont forget it knows how many bitcoins you want to mine

I think the author tries to draw an either/or comparison of technology vs the artistic decision, but I don’t see it that way. If Nvidia can try so many options for increasing the resolution of a game or affecting its look, doesn’t that just give the artist more options to choose from? It also seems like with that many iterations you might find some look you had never thought of as well.

Another NVIDIA Gimmick.

after physx

after “3d” glasses

after RTX

this. I will let you judge by yourself if this will be revolutionary.