In 2016, a Tesla Model S T-boned a tractor trailer at full speed, killing its lone passenger instantly. It was running in Autosteer mode at the time, and neither the driver nor the car’s automatic braking system reacted before the crash. The US National Highway Traffic Safety Administration (NHTSA) investigated the incident, requested data from Tesla related to Autosteer safety, and eventually concluded that there wasn’t a safety-related defect in the vehicle’s design (PDF report).

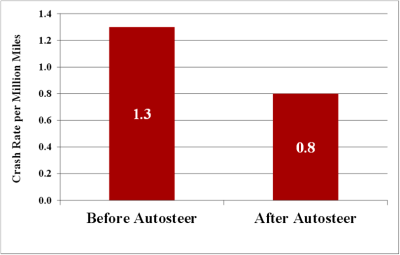

But the NHTSA report went a step further. Based on the data that Tesla provided them, they noted that since the addition of Autosteer to Tesla’s confusingly named “Autopilot” suite of functions, the rate of crashes severe enough to deploy airbags declined by 40%. That’s a fantastic result.

Because it was so spectacular, a private company with a history of investigating automotive safety wanted to have a look at the data. The NHTSA refused because Tesla claimed that the data was a trade secret, so Quality Control Systems (QCS) filed a Freedom of Information Act lawsuit to get the data on which the report was based. Nearly two years later, QCS eventually won.

Looking into the data, QCS concluded that crashes may actually have increased by as much as 60% after the addition of Autosteer, or maybe not at all. Either way, the data provided to the NHTSA was insufficient and had bizarre omissions, and the NHTSA has since retracted its safety claim. How did this NHTSA one-eighty happen? Can we learn anything from the report? And how does all this square with Tesla’s claim of better-than-average safety? We’ll dig into the numbers below.

But if nothing else, Tesla’s dramatic reversal of fortune should highlight the need for transparency in the safety numbers of self-driving and other advanced car technologies, something we’ve been calling for for years now.

How Many Crashes Per Million Miles?

How is it possible that the NHTSA would conclude that Autosteer reduces crashes by 40% when it looks like it actually increases the rate of crashes by up to 60%? And what’s with this shady “up to” language? The short version of the QCS report could read like this: Tesla gave the NHTSA patchy, incomplete data, and the NHTSA made some assumptions about the missing data that were overwhelmingly favorable to Tesla, and fairly unlikely to reflect reality.

Crucially, none of the data about miles driven with Autosteer engaged have ever made it out of Tesla’s hands. What Tesla gave the NHTSA, and claimed was a trade secret, was the odometer mileage before and after Autosteer was installed, the mileage at the car’s last check, and whether or not there was an airbag deployment. From this, the most you can estimate is the accident rate per mile for cars with Autosteer installed, not the accident rate per mile with Autosteer active. Only Tesla knows what percentage of miles are driven with Autosteer on, and they’re not telling, not even the government.
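
To see why the installed-versus-engaged distinction matters, here is a minimal sketch with made-up numbers. It assumes, purely for illustration, that a car with Autosteer installed crashes at one rate when Autosteer is off and another when it is engaged, so the rate we can actually observe is a blend weighted by the unknown share of miles driven with it engaged. The function name and every figure are hypothetical, not from Tesla, the NHTSA, or QCS.

```python
def engaged_rate(observed_rate: float, off_rate: float, engaged_share: float) -> float:
    """Crash rate with Autosteer engaged implied by the rate observed for
    cars that merely have it installed (crashes per million miles).

    Hypothetical model: observed = share * engaged + (1 - share) * off.
    """
    if not 0 < engaged_share <= 1:
        raise ValueError("engaged_share must be in (0, 1]")
    return (observed_rate - (1 - engaged_share) * off_rate) / engaged_share


# Same observed rate and same "off" rate; only the unknown engaged share varies.
for share in (0.2, 0.5, 1.0):
    print(f"engaged share {share:.0%}: implied engaged rate "
          f"{engaged_rate(0.9, 1.0, share):.2f}")
```

With an identical observed rate of 0.9 and an "off" rate of 1.0, the implied engaged-mode rate swings from about 0.5 to 0.9 crashes per million miles depending only on the engaged share, which is precisely the number that never left Tesla.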

How You Get a Range of +40% to -60%

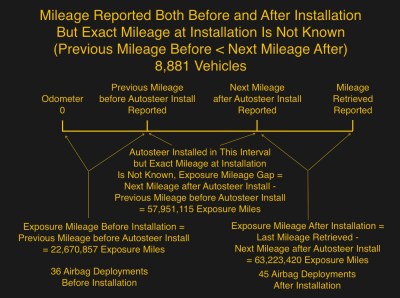

So did the NHTSA get data on how many miles were driven with Autosteer installed? Not quite. Tesla gave them the “Previous Mileage before Autosteer Install Reported”, an odometer value. They also gave the “Next Mileage after Autosteer Install Reported”, another odometer value, but there was no guarantee that it would match the previous reading, and the data varies quite a bit here.

For data samples where these two numbers did match, you can assume that’s the mileage when Autosteer was installed. We’ll call these cars “known mileage”; they correspond to Figure 1 in the QCS report. Known-mileage cars make up 13% of cars in the sample, and for these cars the accident rate increased by 60% after Autosteer was installed. There were 32 airbag deployments in 42 million miles driven before Autosteer was installed, and 64 deployments over 52 million miles afterwards. At first blush, Teslas with Autosteer installed appear to crash a lot more often than Teslas without. (Remember, we don’t know anything about miles driven with Autosteer actually engaged.)
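
The arithmetic behind that 60% figure is easy to check. This sketch only reuses the deployment counts and mileages quoted above; the helper name `per_million` is ours, not from the QCS report.

```python
def per_million(deployments: int, miles: float) -> float:
    """Airbag deployments per million miles driven."""
    return deployments / miles * 1_000_000


# Known-mileage cars, using the figures quoted above from the QCS report.
before = per_million(32, 42_000_000)   # before Autosteer was installed
after = per_million(64, 52_000_000)    # after Autosteer was installed
change = after / before - 1            # relative change in the deployment rate

print(f"before: {before:.2f}, after: {after:.2f}, change: {change:+.0%}")
```

That works out to roughly 0.76 versus 1.23 deployments per million miles, a rise of a little over 60%.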

Another 34% of the vehicles in the data had Autosteer installed at odometer mileage zero, so we’ll call these “factory Autosteer” cars. They correspond to QCS’s Figure 2. These cars have a crash rate similar to that of the pre-Autosteer period in the first sample, which dilutes the apparent negative safety effect of Autosteer. If you combine these two samples, cars with Autosteer appear to crash 37% more frequently than those without: almost the mirror image of the NHTSA’s original result, though better than the 60% increase seen in the known-mileage cars alone.

The remaining 53% of the sample consists of cars with a pre-Autosteer mileage and a post-Autosteer mileage that don’t line up. For example, maybe we know that the car didn’t have Autosteer installed at 20,000 miles on the odometer, but it did by 60,000 miles, leaving a 40,000 mile murky middle zone. The car could have had Autosteer installed at the 20,001 mile mark, or at 59,999.

When adding up the airbag incidents and dividing by the mileage, which install mileage do you use for these cars? The NHTSA assumed 20,000 miles, the “Previous Mileage before Autosteer Install Reported”. How you treat this incomplete data, and whether you use it at all, can make the difference between the NHTSA’s +40% safety margin for Autosteer and the -37% to -60% margin you’d get otherwise. Yuck.
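
Here is a minimal sketch of why that choice matters so much. It uses a made-up fleet of three “gap” cars (not the QCS data), assumes every odometer started at zero, and places each car’s unknown install point a fraction `f` of the way through its mileage gap: `f = 0` is the NHTSA’s choice of the last pre-install reading, and `f = 1` is the first post-install reading. The `Car` fields loosely mirror the columns described above, but the names and all numbers are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Car:
    # Hypothetical record loosely mirroring the fields described above.
    last_before: float   # "Previous Mileage before Autosteer Install Reported"
    first_after: float   # "Next Mileage after Autosteer Install Reported"
    last_seen: float     # odometer at last check
    crashes: list[float] = field(default_factory=list)  # odometer at each airbag deployment


def rates(cars: list[Car], f: float) -> tuple[float, float]:
    """Deployments per million miles (before, after) if each car's install
    is placed a fraction f of the way through its mileage gap.
    Assumes every odometer started at zero."""
    pre_miles = post_miles = 0.0
    pre_crashes = post_crashes = 0
    for car in cars:
        install = car.last_before + f * (car.first_after - car.last_before)
        pre_miles += install
        post_miles += car.last_seen - install
        for odo in car.crashes:
            if odo < install:
                pre_crashes += 1
            else:
                post_crashes += 1
    return pre_crashes / pre_miles * 1e6, post_crashes / post_miles * 1e6


fleet = [
    Car(20_000, 60_000, 80_000, crashes=[70_000]),
    Car(10_000, 40_000, 50_000, crashes=[5_000, 45_000]),
    Car(30_000, 50_000, 90_000),
]

for f in (0.0, 0.5, 1.0):
    before, after = rates(fleet, f)
    print(f"f={f}: before {before:.1f}, after {after:.1f}, change {after / before - 1:+.0%}")
```

None of the invented crashes even falls inside a gap; shifting only the exposure miles takes this toy fleet from an apparent 25% improvement at `f = 0` to a severalfold increase at `f = 1`.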

Doesn’t Pass the Smell Test

My first instinct, as a former government statistician, is that there’s something fishy with this data. Why does Autosteer seem to have such a huge effect in the group of cars where it was added aftermarket, but apparently no effect when it was added in the factory? I don’t know what explains these differences, but I’d be wary of calling it an Autosteer installation effect. Maybe these early adopters drove less cautiously? Maybe they used Autosteer more recklessly because they wanted to test out a new feature? Maybe early versions of Autosteer were buggy? And for the missing data, how can the conclusions be so sensitive to the assumptions we have to make, swinging between a +40% and -40% effect?

To be fair, the NHTSA originally asked Tesla for incredibly detailed information (PDF), which would have made their work much more conclusive. Tesla replied that the odometer readings were a trade secret, and QCS had to get a judge to rule that they weren’t.

Still, in the end, Tesla gave them data with this “exposure mileage gap”. Tesla apparently also told the NHTSA why it couldn’t provide the data requested (PDF), but that reason is redacted in the FOIA report. All we know is that the NHTSA accepted their excuse.

Tesla probably knows the exact odometer readings. After all, Tesla prides itself on the detailed data it collects about its cars in use. How can it not know, for more than half of the cars in the study, the odometer reading at which its own Autosteer software was installed to anything better than a 20,000-mile window? Feel free to speculate wildly in the comments, because we’ll probably never know the answer.

The bottom line in terms of data analysis is this: for the data with solid numbers, it looks like Autosteer being installed is associated with between a 35% and 60% increase in the number of crashes per million miles. The remaining half of the sample has imprecise data, and whether it is favorable toward Autosteer or not depends on what assumptions are made based on poor data.

Cold Hard Numbers Instead of PR Spin

When the NHTSA came out with their report citing the huge decrease in accidents attributed to Autosteer, Tesla was naturally ecstatic, and Musk was tweeting. Now that the results have gone the other way, he has declined to comment. Just prior to the release of the QCS paper, Tesla began releasing quarterly safety reports, but they’re light on useful data. The note that the NHTSA has updated its assessment of their system’s performance is a vague asterisk footnote in small type: “Note: Since we released our Q3 report, NHTSA has released new data, which we’ve referenced in our Q4 report.” Right.

Tesla’s safety report is very brief, short on numbers, and vague to boot. In particular, Tesla reports only miles driven using Autopilot, which is an overarching name for the sum of Tesla’s driver-assist features including automatic emergency braking, adaptive cruise control, and lane-keeping or Autosteer. It’s abundantly clear from other carmakers that automatic emergency braking systems help reduce collisions, but Tesla doesn’t disaggregate the numbers for us, so it’s hard to tell what impact Autosteer in particular has on vehicle safety.

Finally, the Tesla report includes both crashes and “crash-like events”, whatever that means. Still, if you assume that Tesla is reporting more events than it otherwise would, their cars seem to have great crash statistics, even without Autopilot, when compared with the US national average. Why would that be? The Tesla advantage narrows significantly when you compare them with other luxury cars, driven by a demographic that’s similarly free of new and inexperienced drivers. Tesla’s report also shows that there are fewer accidents per mile with Autopilot engaged than without, but since you’re only supposed to drive with Autopilot on the highway under clear conditions, this is hardly a surprise either.
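
The road-mix point is easy to demonstrate with a small sketch. All numbers are invented, and the model deliberately assumes Autopilot has no effect at all: crash rates depend only on road type, Autopilot miles are all highway, and manual miles are a mix of highway and city driving.

```python
def blended_rate(mix: dict[str, float], rates: dict[str, float]) -> float:
    """Crash rate per million miles for a given mix of road types.
    `mix` maps road type -> share of miles (shares sum to 1)."""
    return sum(share * rates[road] for road, share in mix.items())


# Hypothetical per-road rates, identical with or without Autopilot,
# i.e. this toy model assumes Autopilot changes nothing.
road_rates = {"highway": 0.5, "city": 2.0}

autopilot = blended_rate({"highway": 1.0}, road_rates)
manual = blended_rate({"highway": 0.4, "city": 0.6}, road_rates)
print(f"Autopilot: {autopilot:.2f}, manual: {manual:.2f}, "
      f"apparent reduction: {1 - autopilot / manual:.0%}")
```

Even with zero real effect, the Autopilot figure comes out roughly 64% lower, purely because of where those miles are driven.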

It’s simply hard to say what these numbers mean in terms of the safety of the underlying features. Tesla’s evasive behavior with regard to the NHTSA, coupled with the odd metrics it is voluntarily reporting, is hardly confidence-inspiring.

In summary, the data Tesla supplied to the NHTSA was short of what was requested, and 50% of it was blurred for a reason that was redacted — that’s not our idea of transparency. And we can’t expect more from their voluntary safety reports either — no company releases information that could have a negative impact on their business operations unless they’re forced to. So maybe rather than berating Tesla, instead we should be asking our government to take transparency in highway safety seriously, and supporting their efforts to do so whenever possible. After all, we’re all co-guinea-pigs on the shared roadways.

“So maybe rather than berating Tesla, instead we should be asking our government to take transparency in highway safety seriously”

Hey, maybe both? Tesla did something that, while not uncommon for corporations, was pretty shitty. I have no problem berating them for that, or for any other shitty thing they do.

“Tesla’s report also shows that there are fewer accidents per mile with Autopilot engaged than without, but since you’re only supposed to drive with Autopilot on the highway under clear conditions, this is hardly a surprise either.”

This fact right here is the one that drives me insane when people report on this. Oh look, the numbers go down with Autopilot. Which could be reworded as: oh look, there are fewer car accidents under ideal road conditions. It’s near impossible (I assume) to get road safety data for accident rate per mile ONLY under ideal highway road conditions, but if there were, I suspect Autopilot’s grand claims of huge safety improvement would nosedive to somewhere around 0, plus or minus a bit.

I recall the old saying…

“Figures don’t lie, but liars can figure.”

Ren, there is another similar saying: “There are lies, damned lies, and statistics!”

If you torture the data long enough, it will confess to anything.

Intentionally calling a product by the incorrect name, while also noting the usage, suggests malice toward the product, thereby devaluing one’s research into it. It is not my intention to dispute your methodology; I just call into question your motives. That alone may preclude true objectivity in your conclusions.

By Tesla’s own words “Every driver is responsible for remaining alert and active when using Autopilot, and must be prepared to take action at any time.” it is clear that Autopilot (autosteer) is not the be all, end all of autonomous driving!

But, if it is used as directed (or even in suitable off label applications such as well marked non-highway roads), it can’t help but improve driving safety, because while it can’t handle all situations/ conditions, those it can (some of which are beyond human abilities, like seeing through bushes, or around vehicles), it does without getting distracted!

The problem arises, not with autopilot’s capabilities, but with the operator failing to pay attention, especially when pushing it far beyond its abilities!

Sure, but that’s exactly the point: drivers expect more of the system than it can objectively handle. That is of course due to the name of the function — Autopilot — which is intentionally misleading, and the marketing hype done by Tesla. Without such high expectations, drivers wouldn’t stop paying attention to the road and allow the system to cause accidents.

If people are misled by the term auto pilot, and ignore ALL the warnings and the constant noise and alerts when they remove their hands from the wheel too long, is it Tesla’s fault?

Companies use marketing gimmicks all the time. Tesla has appropriate warnings etc, but people ignore them and then have fatal crashes. Because they’re idiots.

Yes, it is. At best, it’s bad product design which leads people into complacency.

More likely it’s deliberately deceptive advertising which oversells the capabilities to increase sales, hoping they can shout “user error” whenever it fails.

But regardless, if you’re creating products which can be dangerous, especially if they can be dangerous to other people, then you’ve got a responsibility to do what you can to ensure they are not only safe but also used safely. There’s a lot of evidence that systems like auto steer which give a semblance of working but actually require constant user attention are not safe as humans do not work well in those situations.

We are responsible for our claims, especially if we take money for them. Advertising is no different. If you claim to make an autopilot better than a human, don’t expect the human to intervene in a critical situation.

I believe the term auto pilot was first used in the aviation industry in the early 1900s. Regulations may vary in different countries, but in the US, when autopilot is engaged, there still must be 2 crew members in the cockpit and someone must be at the controls, ready to take control of the airplane at all times.

That sounds an awful lot like the same ‘auto pilot’ wording that Tesla uses.

So they are using the same term that has been used for over a hundred years, in exactly the same way that it has been used for that hundred years.

In a completely different situation. And the common man’s understanding of autopilot is that it automatically flies the plane.

And planes don’t suddenly have other planes pull out in front of them at T-junctions.

So you’re linguistically correct, but the term is still going to mislead most people, and the ability of humans to respond in a critical situation is very different.

Not to mention (except I am mentioning it)..Pilots fly planes. Drivers drive cars. So Auto Driver maybe?

Aviation got a lot of its nomenclature/jargon from maritime traditions…boats have auto-pilot as well.

I agree, it’s very similar to autopilot on planes.

Autopilot on planes doesn’t spot other planes pulling out in front of you.

The scenario is completely different between planes and cars.

Do you sell Teslas on the side?

In all honesty, saying that something can only improve safety because “when used as directed” it can only improve things sure is an interesting way to look at the problem. In reality, Tesla calls the product autopilot to fool customers into thinking it possesses a much wider range of capabilities than it actually does. If the statistics are telling us that accidents increase after installing autopilot, then there is a problem. You can blame this on the drivers all you want, but the problem will still exist, and it is clear that Tesla’s one-liner buried 30 pages into a 100-page document is not effectively informing customers not to trust the autopilot system to automatically pilot the vehicle safely to the destination (wow, what a great name: don’t do what it implies).

Let’s say I go and sell aftermarket tires. These tires have very strict requirements to only be driven on highways, but I call them ‘go anywhere tires’ and bury the highway notice in some document. People start using my tires on side streets. If cars start falling apart, killing both the drivers (who didn’t read my document/waiver) and innocent bystanders (who never even had a chance to know of my waiver), isn’t that some liability on me, the seller of this product? I have made tires less safe. I have falsely made people believe they are safer. I have given people confidence in the product when the statistical reality clearly flies in the face of that.

Because airplanes have autopilot then I believe that since a Tesla has an autopilot it can also fly.

OTOH Tesla crashes are front page news, so no one has to read 30 pages in to see what they cannot avoid.

My autopilot is 100 % safe.

(Buried on page 113 of the EULA: only while the car is not moving.)

I seem to recall Microsoft doing that. They touted Windows NT as having some high security rating, but in the fine print that turned out to be only if it was not on the network.

That is assuming the autopilot only fails to act when it should, rather than act when it shouldn’t. In other words, that the autopilot cannot in itself cause an accident when used as directed.

Also, it can’t see through bushes or around vehicles. That’s just not how it works.

Right. Tesla’s “Autopilot” function is a level-2 automation function — the driver is responsible at all times. Which means that you should _never_ have your hands off the wheel, and _never_ assume that it correctly perceives a barrier.

This is clearly not the way people are driving, and some would argue that it’s not the way Tesla is marketing their car’s functionality, despite it saying this in black and white in the owner’s manual.

But the few well documented fatalities weren’t caused by pushing the system past its abilities. They were system failures. And aside from these, and internet anecdotes, we don’t know how frequent these failures are, b/c Tesla isn’t telling.

Humans are predictably flawed, the system works as anyone with common sense can see it would. It reduces driver attention, which is absolutely necessary in the infinite corner cases the artificial stupidity and sensor technology will screw up. Purely for safety there are obvious alternatives, lane drift warnings, automatic steering only emergency obstacle avoidance etc. These will accomplish the same safety advantage without reducing attention, but that doesn’t sell electric cars to idiots who want to take their eyes off the road …

That’s what all this is about, compromising safety to find some unique selling point for Tesla. Sooner or later a Tesla on autopilot is going to kill an emergency responder or some innocent who plays well with the media and suddenly everyone will see it.

– Yep, it’s a load of marketing BS and beta testing a system on public roads without the consent of others involved. As great as I think Teslas are in some respects, I’d be happy to hear someone sued them out of existence over this system, which has no business controlling a vehicle at speed. And the other article regarding ‘emergency braking’ being disabled because it had too many false positives… Seriously? If your safety system has too many false positives, ‘let’s just turn it off’? Seems like taking the batteries out of your smoke detector and leaving them out after you burn some food and trigger it… Anyway, since you should never take your hands off the wheel or eyes off the road, this system should not even exist. Any ‘convenience’ is a false sense of security, and an accident waiting to happen to someone innocent who isn’t misusing this system. A lane departure warning or blind spot monitor, sure; those are assisting you, not letting you think the vehicle can handle things on its own. My condolences go out to anyone who loses a spouse or kid to someone driving with this autopilot convenience-marketing jackassery.

When an auto system handles 90%, or worse 95%, of tasks, how can the human stand a chance of staying alert and ready to take the wheel in a split second in a bad situation at 70mph? The human mind will wander if not actively engaged. Also, less-used skills degrade. If 95% of your driving experience is taken away from you, now you have to respond instinctively to the worst 5% of situations with diminished capacity? It’s a bad outcome and use of tech. I don’t want these on the road with me. Don’t remove drivers with tech, enhance them.

If the withheld data had shown Tesla in a positive light I’m sure we would have it, so there’s only one conclusion I would draw there. But I would also expect current versions of the Autosteer software to be markedly better than the first versions, so without incorporating the software version in use at the time of an accident into the analysis the result would be meaningless anyway.

>” But I would also expect current versions of the Autosteer software to be markedly better than the first versions”

That’s an excuse they will be milking far into the future. You can always claim that the old data doesn’t apply to the new version, and therefore you have to wait yet another few years to show that the new version didn’t improve significantly over the old, but then you have a new new version and the criticism is again invalid…

It’s not Tesla et al it’s the person behind the wheel using the auto steer function so they can text people and post on facebook.

Lane keeping, auto braking, adaptive cruise control.

Take a look in traffic and see who is driving and who is paying a bit less attention.

Car makers could check the driver to see if they are using their phone or not.

It’s illegal to do so in many places

Customers don’t want them to stop them using their phones.

But customers do want “safety” features, even if (because…) they help them do illegal things.

If you can’t take your eyes off the road because the car might do something stupid, then you can’t take your hands off the wheel because it would take too long to react otherwise, and if you can’t take your hands off the wheel nor your eyes off the road, then you might as well steer yourself – so what’s the point of the autopilot?

If it’s just there to act as a safeguard so you don’t swerve into traffic, then it should work entirely differently – like how rumble strips work to wake you up when you drift towards the shoulder. Imagine if instead they just gently eased you back onto the road and let you continue sleeping…

Features that let drivers be lazy and not focus on driving are anti-features that do not belong in a car.

Goodbye cruise control. Goodbye automatic transmissions.

Cruise control, yes, but why automatic transmission? Not doing something unnecessary isn’t laziness.

Actually neither.

When used correctly cruise control is helpful.

I’ll sit at a set speed on a clear motorway and I’ll put up with people overtaking me, then slowing down, then overtaking me, then slowing down.

Perhaps if they used their cruise control…

As for automatics, it depends on the tech. For many cars with high power, they are better than a manual.

No clutch. More gears. Faster changing than a human.

I have both manuals and automatics in my stable and they are for different jobs.

I think this is true for autopilots, both for cars and airplanes, as well. If used properly, they can be helpful. They can improve safety (in part by reducing driver fatigue) and improve the pilot/driver’s experience. Used improperly, they can be very dangerous.

Cruise control is sometimes used improperly, as well. And like we will with autopilot, we adapted to the name and learned about its proper use.

There’s a societal benefit to deploying these technologies even if they are neutral for safety at first: They advance the technology for self-driving vehicles which can eventually become much safer than human drivers and can allow increased mobility for those who cannot themselves safely drive. No amount of controlled tests are a substitute for operational experience with a technology.

I will give up my personally owned car when we have driverless Uber.

“Only Tesla knows what percentage of miles are driven with Autosteer on, and they’re not telling, not even the government.”

Now why would this be withheld? How could it possibly be a trade secret? Obviously this changes the statistics in a bad way for Tesla.

Just because the airbags deploy doesn’t mean the car was at fault for the accident. So if someone gets rear-ended and the airbags deploy, you blame Tesla?

Even though there was an accident the car ‘could’ be reducing fatality.

So more data is needed. You are just as bad at skewing the meaning of the results.

Yup, a rear-end could be a false positive auto-braking. Could be the car behaved erratically and caused an accident.

The aim of driving isn’t to cause fewer accidents, it’s to be in fewer accidents. If you keep being in accidents well above the average, even if the police say they’re all the other driver’s fault, there’s something wrong (and the insurance company will say so too!).

Guy e-signs waiver saying he won’t take his hands off the wheel. Later that day on YouTube: “Woo! Look Ma, no hands and I’m exactly one fifth of a car length to the next bumper going 70 mph!”

While Tesla has good intentions, I much prefer being in control of my own vehicle. I don’t want the vehicle steering or braking for me. I’m fine with anti-lock brakes and traction control systems, but not a system that stops the vehicle without my input. Same for steering and throttle control. The machine is there to serve me, not the other way around.

Regarding frequency of accidents, I believe it increases with autosteer. The reason is, people rely on the technology to do the thinking for them. Part of the issue has to do with the marketing name put on the technology. If we are going to adopt a technology, it is critical that the people using it understand the limitations. I don’t think this has been true in the case of these accidents. I believe the technology performed exactly as it was designed. It was the humans that did not test it correctly…..

“While Tesla has good intentions, I much prefer being in control of my own vehicle.”

You don’t have to buy the package and, if you do have it, you don’t need to use it. It doesn’t force you to use it (i.e., activate automatically whether you want it to or not). Without it Tesla cars are just (electric) cars that you can control to your heart’s content. It won’t even stop you from driving straight into a brick wall at full speed. It would warn you that there’s an obstacle ahead, but it wouldn’t stop you.

For now – but the government is already negotiating to mandate automatic braking in new vehicles – technically it’s voluntary, but they’d make it a regulation if the carmakers didn’t do it. They’re agreeing because the requirement pushes out cheaper competitors who don’t/can’t/won’t have these systems; crony capitalism in action.

Once they “prove” that automatic steering is “better than the average driver”, autosteer becomes mandatory as well, pushed by the automakers who have the technology, who collude with the government to make their technology a legal requirement on the road on the argument that it makes people safer.

The trick is that the “average” driver is much worse than most people you’d encounter on the road, because most of the accidents happen to a few bad drivers, druggies/drunks, kids, etc. who push the average down for the rest of the people. The AI doesn’t need to be particularly good to pass that low bar, and even as the end result is more accidents and greater risk to the average able and sober driver, it’s going to take years and years and multiple lawsuits to admit the difference.
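
The mean-versus-typical point in that comment can be illustrated with a toy population. This is a sketch with invented numbers only: 90 careful drivers and 10 very risky ones, with each driver weighted equally rather than by miles driven (a simplification).

```python
from statistics import mean, median

# Hypothetical population, rates in crashes per million miles (made-up numbers).
driver_rates = [0.5] * 90 + [5.0] * 10

avg = mean(driver_rates)         # the "average driver" benchmark
typical = median(driver_rates)   # what most drivers actually achieve
ai_rate = 0.9                    # hypothetical automated system

print(f"mean {avg:.2f}, median {typical:.2f}, AI {ai_rate}")
print("beats the average driver:", ai_rate < avg)
print("worse than the typical driver:", ai_rate > typical)
```

Because a few very bad drivers drag the mean up, the hypothetical system clears the “better than average” bar while still crashing more often than 90% of the drivers in this toy population.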

Is that true about the brick wall? I couldn’t find reliable info anywhere about whether emergency avoidance braking was always on / only on when Autosteer was engaged / or an extra feature.

I’ve never tried to drive into a brick wall, but here’s something that just happened a couple of nights ago. I was driving through a neighbourhood (i.e., 2 lanes, a bit narrow, with cars parked along the curbs, an easy left-hand curve) when it sounded a collision alarm, with a parked car in front of me marked in red on the console. I was really nowhere near colliding with it and, as I drove past, I saw the car was empty, so it wasn’t going to pull out in front of me. I just took it as a false positive and a false alarm. The point is, though, I didn’t feel my car engage the brake on its own; it just sounded the alarm.

There are also a few videos on YouTube of people experimenting with auto/emergency braking under different circumstances. From what I can see, if TACC or AP isn’t engaged, the braking is left to the driver.

FWIW. Thanks.

@Wretch: Thank you!

The whole point of this article is about (begging for) more openness, arguably that our government should be securing for us if necessary. If there’s a YouTube community of Tesla owners trying to figure out how the safety system in their own car works, that’s a problem.

I had the recent “pleasure” of re-doing my driver’s licence when I moved to Germany. What I thought was really cool was that it’s apparently standard practice to take the car out and get up to speed and then hammer on the ABS brakes just to get a feel for it.

As an oldster (and decade-long motorcycle rider) I’m pretty aware of my traction, and it actually took un-training on my part to just mash the clutch and brakes as hard as possible. But now I’ve got a good feel for how my car’s ABS reacts in bad situations, even on good pavement. Cool.

In the nordics they do the same thing, but they take you to a skid pad which is a track with half asphalt and half steel plates covered in a mist of glycerine and water to simulate driving on wet ice.

They make you do a hard brake with half the wheels on the skid plate, with the ABS on and then off. Obviously you can’t do this in a Mercedes A-class car, because it would flip over instantly, like it did when they tested the car for just this situation in Sweden.

Oh, of course, when I had a bit less space in my garage, every time I pulled in the alarms would go nuts telling me to stop and blaring collision warnings. But, again, it never stopped on its own. Mind you, obviously I wasn’t pulling in at full speed either.

These statistics are very important for another reason. You can be certain that the designers and implementers of the upcoming fully autonomous vehicles are studying the Autosteer data and conclusions very carefully, since everything that applies to Autosteer will apply to fully autonomous vehicles as well, only much more so. The sensor systems under current consideration are very expensive, and by themselves still inadequate. We are being told that only a combination of sensor types will be sufficiently safe. But we are also being told that such a combination would be too costly, and so will not likely become the common approach. Right now, the best “single sensor” is the human driver. But the real question is: what is being done with the “sensor data”? If an autonomous system can react more quickly and more reliably than a human driver, will that make up for less accurate sensing? We need much more and much better data feeding into these statistics, and it needs to be far more transparent and honest.

More sensors aren’t the answer, because of the problem of sensor fusion.

You have two sensor systems, A and B, which measure different aspects of the same scene and return different types of information. When you interpret their data, each sensor comes with its own false positive and false negative rate.

Suppose sensor A says there’s danger ahead, but sensor B is telling you all is safe. What do you do? Sensor A could be giving a spurious alarm, but then again B might be wrong. This is what happened with the Tesla that drove under the trailer: the microwave radar gave ambiguous information because it could see under the trailer, and reported that there were obstacles ahead AND that the road was clear. The computer then turned to the camera to look at what was ahead, saw the side of the trailer, went “Billboard – false alarm”, and plowed right into it.
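A toy calculation shows why a fixed fusion rule doesn’t make the disagreement problem go away, it only moves the errors around. This is a sketch under loud assumptions: the error rates are made up, and it assumes the two sensors’ errors are independent given the true state of the road, which sensors looking at the same scene often are not. Trusting either sensor (“OR”) cuts missed obstacles but piles up false alarms; requiring both to agree (“AND”) does the opposite.

```python
def fuse_rates(fp_a, fn_a, fp_b, fn_b):
    """Return {rule: (false_positive_rate, false_negative_rate)} for two simple fusion rules.

    Assumes the sensors' errors are independent given the true state of the road
    (a simplification; real sensors viewing the same scene are often correlated).
    """
    # OR rule: raise the alarm if either sensor says "danger".
    or_fp = 1 - (1 - fp_a) * (1 - fp_b)  # a clear road triggers an alarm if either sensor false-alarms
    or_fn = fn_a * fn_b                  # a real obstacle is missed only if both sensors miss it
    # AND rule: raise the alarm only if both sensors say "danger".
    and_fp = fp_a * fp_b                 # both must false-alarm at once
    and_fn = 1 - (1 - fn_a) * (1 - fn_b) # a miss by either sensor vetoes the alarm
    return {"OR": (or_fp, or_fn), "AND": (and_fp, and_fn)}


# Hypothetical per-sensor error rates, for illustration only.
rates = fuse_rates(fp_a=0.05, fn_a=0.02, fp_b=0.10, fn_b=0.01)
for rule, (fp, fn) in rates.items():
    print(f"{rule}: false positives {fp:.4f}, false negatives {fn:.4f}")
```

Neither rule is “safer” in general; which one you pick decides whether you get phantom braking or missed trucks.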

Combining different types of information from different sources doesn’t improve your safety when your AI doesn’t understand what either of them means. It may even create more confusion and more potential for bizarre errors.

One issue that all self-driving and lane-assist systems will have is telling stationary objects on the side of the road (whizzing by) apart from dangerous obstacles. They need to make classifications, and when the system estimates an X% chance that something is a truck and a (100 - X)% chance that it’s a stationary billboard, it needs to make a decision.

Set the threshold too conservatively, and the thing is slamming on the brakes needlessly. Set it too loose, and accidents increase.
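To make the threshold trade-off concrete, here is a toy sweep over invented classifier outputs. Each hypothetical detection is a pair (estimated probability it’s a truck, whether it really was a truck), and the car brakes when the probability is at or above the threshold. A low (“conservative”) threshold means phantom braking; a high one means missed trucks.

```python
# Hypothetical classifier outputs: (estimated probability it's a truck, was it really a truck?)
detections = [
    (0.95, True), (0.80, True), (0.60, True), (0.40, True),
    (0.70, False), (0.50, False), (0.30, False), (0.10, False),
]


def count_errors(detections, threshold):
    """Brake when p_truck >= threshold; return (false brakes, missed trucks)."""
    false_brakes = sum(1 for p, is_truck in detections if p >= threshold and not is_truck)
    missed_trucks = sum(1 for p, is_truck in detections if p < threshold and is_truck)
    return false_brakes, missed_trucks


for threshold in (0.2, 0.45, 0.65, 0.9):
    false_brakes, missed = count_errors(detections, threshold)
    print(f"threshold {threshold:.2f}: {false_brakes} phantom brakings, {missed} missed trucks")
```

With these made-up scores no threshold gets both counts to zero, because the two classes overlap; only a better classifier, not a better threshold, fixes that.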

The end goal is a system that’s so good that it returns 100% confidence in billboard or truck every time, or at least classifies better than people. When it gets there, that will be a big win. We don’t know where it is now.

That’s why they’re using radars and sonars instead of relying on computer vision.

Computer vision has real trouble telling anything apart from anything, whereas radars can employ Doppler filtering: the return signal has a frequency shift that is proportional to the relative speed, so if the car knows its own velocity, it knows which frequency bands to ignore in order to eliminate a whole bunch of data that is currently “not relevant”, i.e. the road surface, roadside bushes, etc.

This method was developed during WW2 for radar targeting, so that a plane flying above can see another plane below without confusing it with the ground. The ground is moving at a certain relative speed, so the return echo has a predictable frequency; you filter that out, and everything that isn’t moving at that speed becomes visible on the radar.
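The Doppler arithmetic fits in a few lines. This sketch assumes a monostatic radar at 77 GHz (a common automotive band) and, for simplicity, targets dead ahead; an object off to the side at angle θ closes at v·cos θ, so a real filter would reject a band of frequencies rather than one value. The function names, the 200 Hz tolerance and the example returns are all hypothetical. Note that the stopped truck is filtered out along with the overhead sign, which is the catch with this approach.

```python
C = 299_792_458.0  # speed of light, m/s
F0 = 77e9          # assumed carrier frequency, Hz (77 GHz automotive radar band)


def doppler_shift(closing_speed, f0=F0):
    """Two-way Doppler shift in Hz for a target closing at closing_speed m/s."""
    return 2.0 * closing_speed * f0 / C


def is_stationary_clutter(closing_speed, own_speed, tolerance_hz=200.0):
    """True if a return's Doppler shift matches a stationary object dead ahead.

    A stationary object closes at exactly our own speed, so its shift is predictable.
    Here the 'measured' shift is simulated from the closing speed.
    """
    measured = doppler_shift(closing_speed)
    expected = doppler_shift(own_speed)
    return abs(measured - expected) <= tolerance_hz


own_speed = 30.0  # m/s, roughly 108 km/h
# Hypothetical returns: (description, closing speed in m/s)
returns = [
    ("overhead sign (stationary)", 30.0),
    ("car ahead at our speed", 0.0),
    ("slower car ahead", 10.0),
    ("stopped truck ahead", 30.0),
]
for label, closing in returns:
    verdict = "filtered" if is_stationary_clutter(closing, own_speed) else "kept"
    print(f"{label}: {doppler_shift(closing):8.0f} Hz -> {verdict}")
```

At 30 m/s the stationary-clutter shift comes out at roughly 15.4 kHz, and anything near that frequency is thrown away regardless of what it actually is.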

Of course, that ignored data could become very relevant very fast, but this is a compromise between a system that has a snowball’s chance in hell, and something that kinda sorta works. The computer vision is mostly employed to identify road signs, lane markers, and other stuff that has consistent appearance. Tesla uses it to track other cars’ tail lights, rather than identifying the cars themselves as cars.

Having radar and ultrasonic sensors as well is the right way to do this type of thing.

A little-known fact is that human eyes have a “Doppler radar” built into them: the retina already processes motion information and extracts moving edges from the image before it goes to the brain, so the brain has an easier time telling objects apart from the background by parallax motion. As soon as something moves even slightly, or you move your head or eyes slightly, things at different distances move by different amounts, and the brain can pick out all the objects without doing much of anything, before it even tries to understand what those objects are.

This feature is missing from digital cameras, so the AI has a far harder time telling one thing from another. In fact, despite their superior resolution, digital cameras are actually far worse for computer vision. For example, a camera-based system can’t tell a shadow from a dark rock, whereas a person who can see some faint features in the shadow (thanks to our eyes’ superior dynamic range) instantly recognizes that those features move consistently with a flat plane and concludes that it’s not an object.

Traditional AI research is prone to the belief that it’s “all in the mind”, i.e. that intelligence can be described as simply an algorithm acting on data, but that misses how you obtain that data and what information you’re looking for. That’s part of the reason for Moravec’s paradox: thinking that you can just throw information into a black box and have it intelligently compute the right actions is like trying to walk by consciously thinking about how to move your legs while continuously measuring your joint angles with protractors. Not only is it incredibly slow and inefficient, the problem is too large to define and understand well enough to program the algorithm.

Of course you could develop a digital camera that does the same, but Tesla’s approach is to use off-the-shelf components and spend the minimum effort (money) on actually developing anything.

That’s part of Elon Musk’s “first principles” engineering method: you don’t invent a better wheel, you simply observe that the wheel exists and can be bought at a certain minimum price, and combined with all the other parts, each chosen the same way, the end result (the car) is the sum of their properties. Of course the parts don’t necessarily fit or work well together, but that’s a problem for the actual engineers. You’ve already promised a product with features X at a price Y, and if your engineers can’t meet that, you shift the goalposts and make new grand plans to excite everybody so nobody notices.

The fact that the autopilot doesn’t work very well isn’t a concern for Elon Musk, only whether it can be made to look like it’s working through clever spin on statistics, passing the blame, or redefining it as “Autosteer” and telling everyone not to use it the way it was originally marketed.

Like the old joke: a man goes to a doctor with a sore knee and says, “It hurts when I press my finger here.” The doctor replies, “By god, don’t press your finger there!”

Also, the question of “Billboard or truck?” cannot be answered by improving the sensors – because it depends on understanding what the situation is, where you are and what you are doing.

There are no billboards built into the middle of an intersection. Of course the car doesn’t know that – strictly speaking it doesn’t even know what an intersection is. Unless you specifically program the car to identify when it is at an intersection, and also to check for all the things that should not be present (which is an endless task), it will always miss something that is perfectly obvious to a conscious observer. You can program in many special cases and exceptions, but there are so many of them it’s virtually impossible – you simply run out of memory and processing capacity.

Case in point: Tesla’s engineers forgot to program the car to check for toll booth barriers, so one of the cars drove straight through one. Again, same issue: radar says “something is returning an echo, but the road seems clear”, camera says “I don’t see any objects included in my training data”.

In other words, the AI needs first-hand understanding of what a road is, what it means to be driving, what the objects surrounding it are rather than simply what they look like. It needs to be able to imagine what the road ahead will be, what to expect, in order to deal with all the unknown events that might happen – rather than just react to those events that it was programmed to identify and deal with.

The idea at the moment is to just let the cars crash and kill people, and then patch up that particular problem, and then when something else happens you patch that “bug”, and again… until presumably you’ll have squashed 99% of the most common causes of accidents and the system is “good enough” – or you lower the standards and build the road infrastructure to accommodate the cars instead.

Don’t forget the false positives. The more aggressively you tune your system to identify things, the more false positives it will generate and react to as if they were real. If the car sees a truck that’s not there and applies the brake, you (as the maker of the car) will have a problem if that happens too often.

My 2 cents is that they don’t know the exact mileage at which Autosteer was “installed”, because it arrived through an over-the-air software update rather than an in-house update. I feel like they might have more detailed information per car, but maybe not anything useful that they can send over for 10,000 cars in a PDF. Just knowing that a crash happened with Autosteer engaged leaves a lot of grey area as well. What were the road conditions? What had the driver done earlier in that trip? Had Autosteer been shut off earlier in the same trip because the driver took his hands off the wheel? What version of Autosteer was it? I’m sure version 1.0 was a bit more buggy than even Tesla wants to admit.

That version 1.0 was buggy would explain the difference in accident rates between the group with known install mileages and the cars that had Autosteer from the factory. The factory ones presumably got later, and thus better, versions. It does make one wonder if Tesla was trying to conceal knowledge that the first version of Autosteer was released prematurely.

“reduces crashes by 40% when it looks like it actually increases the rate of crashes by up to 60%”: I’m a little annoyed that this gets picked out of the article. If you look at their conclusions, the real problem is that the dataset is broken and incredibly incomplete. Concluding, without qualification, that it “increases the rate of crashes by up to 60%” is as unreasonable as the NHTSA’s conclusion that Autopilot is safer. Honestly, the dataset seems so questionable that I would be wary of drawing any conclusions about the actual crash rate whatsoever. Note that the important conclusions the paper makes are that the NHTSA should not have drawn such a careless conclusion, and that this data needs to be made much more openly available.

“Good data is missing” is exactly the point of the article. So you do what you can with what you got.

If you wanted to do a before/after test on the effects of Autosteer on crash rates, you’d absolutely use the first dataset because it keeps the driver/car combo constant and only changes the relevant variable. That dataset shows an increased crash rate of 60%.

When compared to the other complete dataset (those with Autosteer pre-installed), the numbers look different enough that you pretty much have to conclude that the data come from different populations. I.e. something in the underlying data-generating process has changed between the two samples. What, we don’t know. Better software? Different types of drivers? Whatever it is, we’re not comparing apples to apples.

So lumping the two together doesn’t really make sense, but it’s probably better than nothing. That results in a 35% increase in accidents from Autosteer.
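The per-mile arithmetic behind these percentages is simple, and so is the way pooling can wash out an effect. The counts below are invented purely to show the calculation; they are not the QCS or NHTSA figures. Hypothetical group A has miles both before and after Autosteer, while hypothetical group B only contributes “after” miles (standing in for cars with Autosteer pre-installed) at a lower crash rate.

```python
def rate_per_million(events, million_miles):
    """Airbag deployments per million miles."""
    return events / million_miles


def percent_change(before_rate, after_rate):
    """Relative change from before_rate to after_rate, in percent."""
    return (after_rate / before_rate - 1.0) * 100.0


def pooled_rate(groups):
    """Pool (events, million_miles) pairs by summing counts and miles, then dividing."""
    total_events = sum(events for events, _ in groups)
    total_miles = sum(miles for _, miles in groups)
    return total_events / total_miles


# Invented counts, for illustration only.
before_a = rate_per_million(20, 10.0)  # group A before Autosteer: 2.0 per million miles
after_a = rate_per_million(30, 12.0)   # group A after Autosteer: 2.5 per million miles
print(f"group A alone: {percent_change(before_a, after_a):+.0f}%")

# Lump in group B, which only has "after" miles and a lower crash rate.
after_pooled = pooled_rate([(30, 12.0), (10, 8.0)])
print(f"A and B pooled: {percent_change(before_a, after_pooled):+.0f}%")
```

In this made-up example the like-for-like comparison shows +25%, while pooling in a different population makes the change vanish entirely, which is why lumping the groups together is risky.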

Are you arguing that “up to 60%” overstates a +35% to +60% change?

How about this: when looking at the most appropriate data subset for drawing inference about the impact of Autosteer, its installation came with a 60% increase in airbag deployment events per mile.

Obviously, the data is crappy. That’s the real point. So I’m being cautious in my interpretation with the “up to”. However you slice it, it looks really bad.

And really, “without qualification”?? Did you read the three or four paragraphs I spent qualifying things? Perhaps you are angry at coverage of this in the popular press? I had to hold myself back — I bore people at cocktail parties by talking about statistics…

You need to consider what the results imply though – if you read the stats as “60% worse”, but you’re wrong, Tesla are told to turn it off until it’s better. They lose some revenue. If you read the stats as “40% safer”, but you’re wrong, people die.

This is why the bar for “guilty” in a court is so high.

I have a Tesla with Autosteer and I find there are pluses and minuses. The good news is that it helps keep distractions from affecting safety. You are not going to drift out of your lane or hit the car in front while distracted. Given the Tesla control screen, that matters. The bad news is that you can easily forget whether you are on Autopilot or not. It is not good to forget that you turned it off! Also, it is not perfect. It can only sense its immediate environment. I can look far ahead and see that traffic has slowed down long before Autopilot detects it, which means I can avoid sudden braking when that happens. Autopilot reacts fairly late, so you may not hit the car in front, but the car behind may hit you.

I’ve always wondered how this plays out in reality. I was taught to look not at the taillights of the car ahead, but the car one ahead of that one. Buys you more reaction time, and helps undo some of the chaining-of-reaction-times that can result in multi-car pileups. Early braking is a signal to other drivers, and is a service to road safety. Late braking is hazardous.

Ideally (and impossibly?) I’d like a smart cruise control to have some of this lookahead. This is also some of the promise of the radio systems that have been proposed, but which may have other insurmountable difficulties. (https://hackaday.com/2019/02/21/when-will-our-cars-finally-speak-the-same-language-dsrc-for-vehicles/)

So then the hope is that the single-car-ahead systems can get fast enough to react in time. But that shifts the burden to the driver behind you. If all cars have autopilot and are driving within their stopping distance, this is probably OK. When it’s people, you’re gambling a little.
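The “burden shifts to the driver behind” point follows from the textbook stopping-distance formula d = v·t_r + v²/(2a). This sketch assumes constant decelerations, both cars starting at the same speed, and a follower who can’t brake harder than the leader (the case where the minimum-gap formula below holds); the speed, reaction time and decelerations are illustrative values, not measurements.

```python
def stopping_distance(v, reaction_time, decel):
    """Distance in m from seeing the hazard to standstill: reaction distance plus braking distance."""
    return v * reaction_time + v * v / (2.0 * decel)


def min_safe_gap(v, follower_reaction, follower_decel, leader_decel):
    """Smallest initial gap in m for a follower to avoid hitting a braking leader.

    Both start at speed v; the leader brakes immediately at leader_decel, the follower
    starts braking after follower_reaction seconds. Only valid when
    follower_decel <= leader_decel: then the follower is never slower than the leader,
    the gap shrinks monotonically, and the closest approach is when the follower stops.
    """
    if follower_decel > leader_decel:
        raise ValueError("formula assumes the follower cannot out-brake the leader")
    leader_stop = v * v / (2.0 * leader_decel)
    follower_stop = stopping_distance(v, follower_reaction, follower_decel)
    return max(0.0, follower_stop - leader_stop)


v = 30.0  # m/s, roughly 108 km/h
for leader_decel in (6.0, 9.0):  # gentle braking vs abrupt, hard braking ahead
    gap = min_safe_gap(v, follower_reaction=1.5, follower_decel=6.0, leader_decel=leader_decel)
    print(f"leader braking at {leader_decel} m/s^2: follower needs {gap:.0f} m")
```

When the leader brakes no harder than the follower can, the follower only needs to cover its own reaction distance (45 m here); a hard stop ahead adds another 25 m in this example.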

Waymo and GM’s Cruise test vehicles get involved in many more accidents than a human driver, but they’re almost never “at fault” because they’re always getting rear-ended.

Consumer Reports recently tested Tesla’s autopilot+navigation system, and it cut off a semi-trailer trying to get to an offramp. Technically, the semi would have been at fault in a collision. It made the driver/occupant nervous enough to write about it.

It’s awesome that we can talk about cars driving themselves and argue about whether they’re good or polite drivers. Just 15 years ago, this was still a pipe dream. (https://en.wikipedia.org/wiki/DARPA_Grand_Challenge) But now that we’re there, transparent safety information is lacking.

If Tesla are hiding the data, and they clearly are, it can only mean one thing: that the system increases the number of accidents.

Personally, I think all these features and gadgets are a distraction, and distraction is the number one cause of accidents. They give a false sense of safety, which lets too many people feel comfortable doing other things besides focusing on driving. Seat belts didn’t stop people from having accidents, and were basically a coin toss on reducing serious injury or death. Seat belts have actually caused some serious injuries, and have been the direct cause of fatalities. But for the most part, they save the insurance companies money on the more common accidents. We can’t really trust the numbers, but if they had been as accurate as claimed when they were pushing to make seat belts mandatory in all vehicles, we wouldn’t have needed airbags. Seat belts are mandatory equipment, and not wearing one is now a primary traffic offense: you can get pulled over and ticketed for no other reason. They still don’t prevent accidents, injuries, or death, and neither do airbags; they just give some folks the idea that they won’t get seriously hurt, and that insurance will take care of that and their wrecked car, no big deal.

Basically, either drive your car, or pull off the road and do something else; don’t try to do both. Personally, I think driving while distracted should be just as much a crime as driving under the influence. A cell phone in view of the driver should be the same offense as an open container. Too many people are addicted to their phones, and getting their fix while driving is hazardous. Perhaps the drivers I’ve witnessed were also impaired by drugs or alcohol, but the screen glow of the cell phone was obvious enough, and the erratic driving was the very same as if they were impaired.

It doesn’t really matter how many safety features you add to these already expensive cars; they aren’t going to protect you from other drivers. Probably the only real safety feature would be each car being equipped with a transponder, with no car allowed to get too close to any other. Those involved in accidents or other crimes would be immediately identifiable, so there would be a lot of incentive to drive carefully and not to commit crimes while driving: no chance of getting away with anything, and consequences for your actions.

You make some good points.

Re tracking bad drivers, some car insurance companies already have phone apps which can lower your insurance premiums if installed. They track you via GPS and record accelerometer data. You get points if you drive well, and … it’s hard not to tell them the truth about an accident when they already have all the data. I suspect this will become the norm.

If your phone falls out of your pocket and onto the floor of the car, do they subtract points for reckless driving?

Methinks they have other motives for tracking your movements, because things like cellphone GPS and accelerometer data are far from reliable in any real case. Rather, what they’re really doing is seeing if you frequently drive/park around “bad neighborhoods” with high instances of crime and/or accidents and up your premiums accordingly. This of course means that underprivileged people get to pay higher rates while people up in gated communities pay less – but that’s what the system was supposed to do, right?

To claim that seat belts and airbags have no impact on reducing death and injury overall is ludicrous.

In the 6 months that I’ve had my Model 3, the early warning system has warned me about 3 legitimate potential rear-end collisions. I’ve never had an accident in my 20 years of driving, but it warned me about situations I hadn’t noticed before they became slam-on-the-brakes scenarios. It certainly works for parked cars in your lane. The hard part is that it sounds like they need a dedicated training set for semis pulling out in front of you.

A confounding factor in the data analysis is the fact that the hardware and software of the autopilot has changed significantly over the years. Lumping these together makes no sense.

It’s weird that some of the most in-depth, critical, and analytical tech news reporting around is on a blog that essentially started as a project collation website.

In a word, Tesla’s autonomous driving needs to be improved. The drivers are too confused. Now is not the time to pursue responsibility, but to improve the technology and prevent accidents.

If there isn’t a threat of a lawsuit, Tesla wouldn’t do jack.

No, it is high time to pursue responsibility. We did quite well without this tech, but we got into deep crap by not taking responsibility.

ALL auto-assist technology needs to be relabeled and rebranded as DRIVER ASSIST safety technology.

The DEATHS keep happening over and over with Uber & others because humans stop caring & start doing stupid things, the worst of all being trusting their lives to sensors and software, which ends in the tragic deaths of bystanders.

Subaru Eyesight will help reduce harm & crashes, but that does not mean home owners should drive 65 MPH into their own garage.

Let’s count up Tesla’s enemies:

1) The oil and gas industry – because they are going to lose their biggest product application (gasoline)

2) Everyone that works at a car dealership (because they can’t sell this one)

3) Traditional car manufacturers who dropped the ball on “electric” even though the tech was there for the taking.

4) Auto Mechanics who won’t have any engines to fix

Philip Morris (makers of Marlboro cigarettes) used to provide “statistics” that said smoking wasn’t addictive. With that as an example of how “honest” companies are (on both sides of the coin), and knowing Tesla’s long list of very powerful interests, I think I’ll pass on all of the reporting above (which may be compromised as well) and wait for a full disclosure of the facts.