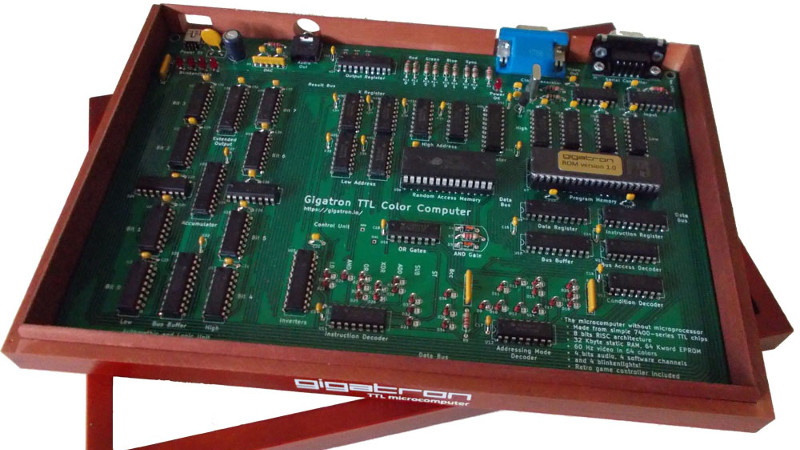

About a year ago, when Hackaday and Tindie were at Maker Faire UK in Newcastle, we were shown an interesting retrocomputer by a member of York Hackspace. The Gigatron is a fully functional home computer of the type you might have owned in the early 1980s, but its special trick is that it does not contain a microprocessor. Instead of a 6502, Z80, or other integrated CPU, it has only simple TTL chips; it doesn’t even contain the 74181 ALU-in-a-chip. You might thus expect it to have a PCB the size of a football pitch studded with countless chips, but it occupies only a modest footprint, with 36 TTL chips, a RAM, and a ROM. Its RISC architecture provides the explanation, and its originator [Marcel van Kervinck] was recently good enough to point us to a video explaining its operation.

It was recorded at last year’s Hacker Hotel hacker camp in the Netherlands, and is delivered by the other half of the Gigatron team, [Walter Belgers]. In it he provides a fascinating rundown of how a RISC computer works, and whether or not you have any interest in the Gigatron, it is worth a watch for that alone. We hear about the design philosophy and the choice of a Harvard architecture, get an explanation of the difference between CISC and RISC, and then settle down for a piece-by-piece disassembly of how the machine works. The format of an instruction is explained, followed by the details of their 10-chip ALU.

The display differs from a typical home computer of the 1980s in that it has a full-color VGA output rather than the more usual NTSC or PAL. The hardware is simple enough, just a set of 2-bit resistor DACs (one each for red, green, and blue, giving 64 colors), but the tricks that leave enough processing time to run programs while also generating the display are straight from the era. The sync interval is used to drive another DAC for audio, for example.

The result is one of those what-might-have-been moments, a glimpse into a world in which RISC architectures arrived at the consumer level years earlier than [Sophie Wilson]’s first ARM design for an Acorn Archimedes. There’s no reason that a machine like this one could not have been built in the late 1970s, but as we know the industry took an entirely different turn. It remains, then, the machine we wish we’d had in the early 1980s, but of course that doesn’t stop any of us having one now. You can buy a Gigatron of your very own, and once you’ve soldered all those through-hole chips you can run the example games or get to grips with some of the barest bare-metal RISC programming we’ve seen. We have to admit, we’re tempted!

Is it compatible with a paper tape reader?

I took a computer repair course in the ’80s where we got to play with an older mini that had been donated. The CPU drawer was nothing but a card cage full of TTL boards. So it is fun to see people still recreating the old hardware or, in this case, spinning their own design that might have given Apple a run for their money.

“Is it compatible with a paper tape reader?”

It has a port for interfacing with it: sending commands to the program loader, keyboard input, etc.

The GitHub repo comes with an Arduino sketch that bridges this port to a PC and lets you upload programs to memory.

So you’d need to make a tape reader controller interface, but so long as you know how to talk to the tape reader, everything else is in place to make it happen.

This is a small computer but since it doesn’t use a microprocessor, it’s not a “microcomputer”.

So what is it? :-)

True. :)

I respectfully disagree.

In the 40 years or so that I’ve been using computers, a “microcomputer” has been defined by its physical size: small enough to fit on a desk, or smaller. A “minicomputer” is one about the size of a desk or a small room, depending on which computer you’re referring to. By the same terms, a “computer” would be something larger than these. These terms, as far as I have been led to believe, originated before the invention of the microprocessor in the early 1970s, though “microcomputer” may be slightly more modern. By the time I was in school (late 1970s), the term was used to describe any small personal (or similarly sized) computer.

I may be incorrect, but in my experience this has always been the case.

As someone who lived through the mini/micro transition, the term “minicomputer” was generally used to refer to a computer with a TTL implementation of the CPU, and the “microcomputer” was used when a single (V)LSI part provided the CPU function.

e.g.: The DEC PDP-11 was a minicomputer, the LSI-11 was a microcomputer

“There’s no reason that a machine like this one could not have been built in the late 1970s, but as we know the industry took an entirely different turn.”

That’s a misconception. The logic of the Gigatron might seem extremely minimalistic, but the transistors you save there are replaced by the huge number of transistors in the memory. If you counted the transistors in the Gigatron and compared them with any other computer of the ’70s, you would realize that the Gigatron needs far more.

Nevertheless, the Gigatron is a nice design idea.
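
To put rough numbers on that argument: if the Gigatron’s 32K of RAM (the figure quoted in the next comment) were built from conventional six-transistor static cells, the memory array alone would need well over a million transistors, against the commonly quoted figure of about 3,500 for an entire 6502. This is an order-of-magnitude sketch only; it assumes 6T cells and ignores the ROM, address decoding, and sense circuitry.

$$
32\,768 \ \text{bytes} \times 8 \ \tfrac{\text{bits}}{\text{byte}} \times 6 \ \tfrac{\text{transistors}}{\text{bit}} = 1\,572\,864 \ \text{transistors} \approx 450 \times 3\,500
$$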

True, but 32K of SRAM is not wildly outrageous for the early 1980s, even at the speed required for the video signals. And you could always drop to 8 colors at the expense of an additional TTL chip, and get away with 16K. A few years later, you could go to roughly 14 MHz.
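
The arithmetic behind the 16K figure is easy to check, assuming the Gigatron’s 160×120 display with one byte per pixel (our assumption; the comment doesn’t state it) and a hypothetical 8-color mode that packs two 3-bit pixels into each byte. This counts raw pixel bytes only and ignores how the rows are laid out in memory.

$$
160 \times 120 = 19\,200 \ \text{bytes} > 16\,384, \qquad \frac{160 \times 120}{2} = 9\,600 \ \text{bytes} < 16\,384
$$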

SRAM in the ’80s was very expensive, so you would have had to use dynamic RAM. Still, with a clever design you can avoid the refresh circuitry by using the video generation for that task.

16K of 70 ns SRAM would set you back $3.15 per 4096×1 bit module (Intel 2147) in 1981, according to BYTE magazine listings at the time, or $100.80 total. That’s $280 in today’s money according to an online inflation calculator.

In those days you would make somewhat different choices anyway. VGA requires 4 times more bandwidth than NTSC to begin with, for example.
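
The BYTE-listing total above follows directly from the chip count, since 16K bytes is 131,072 bits and each 2147 holds 4,096 of them:

$$
\frac{16\,384 \times 8 \ \text{bits}}{4\,096 \ \text{bits/chip}} = 32 \ \text{chips}, \qquad 32 \times \$3.15 = \$100.80
$$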

Except that companies gouged or charged a premium for being in the computer race. Companies did not know how to manage customer support at that time either.

In the early ’80s there were no large EPROMs (like the one on the Gigatron), and DRAM refresh was a constant problem…

A bank of smaller ROMs could have been assembled in the larger chip’s place.

Avoiding that was the main reason most of the early microcomputer monitor/operating software was written in pure assembler.

It wasn’t unusual to have to remove and/or rewrite code to fit an 8KB ROM.

I guess you would do it somewhat differently in those days anyway, without VGA. The system with BASIC can run from 8K. The larger EPROM is mostly cold storage for the shipped applications.

Rockwell Automation Engineer of the Year Jonathan Engdhal wrote the entire original Allen-Bradley Data Highway assembler code (Zilog) to fit in a 2Kx8 memory space back in the day. Later, he fit all of the Data Highway Plus and Remote I/O assembler code into a 32Kx8 memory chip (using 28K) for a Zilog Super 8 uP on what we called the Domino Plug. Amazing code work.

In 1980 or 1981 my home built 6809 machine refreshed DRAM with software by reading sequential locations in the DRAM. That was well known at the time. Only a few percent of the processor’s time. This could be done easily with the Gigatron too.

In the early to mid ’80s I was writing firmware for terminals that had a pair of 27512 EPROMs (arranged as 4 banks of 32K bytes) and a pair of 32K×8 static RAM chips (1 bank of 32K plus a shadow bank that the CPU could indirectly write but only the display controller could read). DRAM was a terrible choice for display memory.

Remember having to build a CPU from logic chips. Lots of boards involved, and a rats-nest of wiring.

Is this a fully static design? Video generation aside, could the clock be slowed to a crawl, or to a stop, and started again?

Yes, it is fully static. I’m not sure it deserves the RISC moniker, though. Whilst it has a minimal instruction set like the PIC16 and PDP-8, neither of those was a RISC processor.

RISC is kind of a nonsense word because “reduced” doesn’t mean anything specific. Whether something is a load-store arch or not is a more useful question.

Looking forward to seeing a version of this made only with 555s :D

It’s a machine for manipulating symbols according to rules. It is therefore a computer by Turing’s definition. Micro? Doesn’t matter, really.

I’m trying to emulate a Gigatron on a PIC in C :)…

But where’s the ALU, or rather, how is it accessed? Its flags, I mean… and how are they defined? Thanks!