If you have ever tried to program a robotic arm, or almost any robotic mechanism with more than three degrees of freedom, you know that a big part of the work goes into programming the movements themselves. What if you could build a robot, connect the motors and joints however you like, and have it work out on its own, with no prior knowledge of itself, how it is physically built?

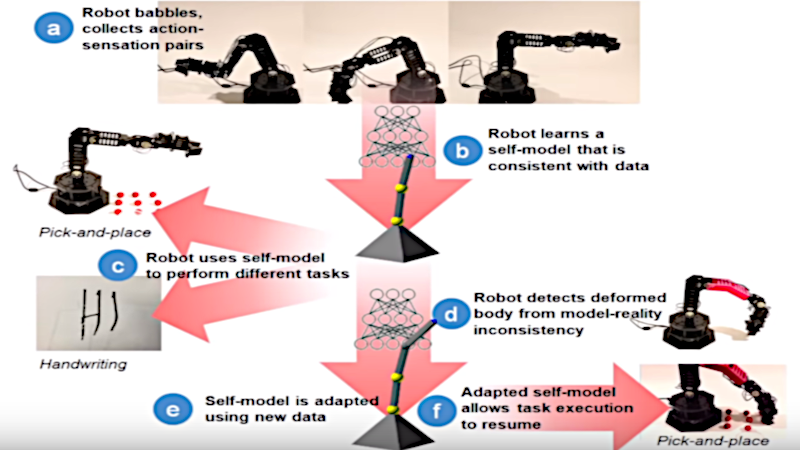

That is what Columbia Engineering researchers have done by creating a robot arm that learns how it is connected, with zero prior knowledge of physics, geometry, or motor dynamics. At first, the robot has no idea what its shape is, how its motors work, or how they affect its movement. After about a day of trying out its own outputs in a more or less random fashion and getting feedback on its actions, the robot creates an accurate internal self-simulation using deep-learning techniques.

The robotic arm used in this study by Hod Lipson and his PhD student Robert Kwiatkowski is a four-degree-of-freedom articulated arm. The first self-models were inaccurate, as the robot did not know how its joints were connected. After about 35 hours of training, the self-model became consistent with the physical robot to within four centimeters. The self-model then performed a pick-and-place task that enabled the robot to recalibrate its original position between each step along the trajectory based entirely on the internal self-model.
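To get a feel for what "learning a self-model from random movements" means, here is a minimal sketch. It is not the researchers' method: it swaps the deep network for a plain nearest-neighbor lookup, and the two-link planar arm, its link lengths, and every function name are assumptions made up for illustration. The learner never sees the link lengths; it only sees which hand position resulted from which joint angles.

```python
import math
import random

# Hypothetical 2-link planar arm; the link lengths are hidden from the learner.
_LINKS = (1.0, 0.7)

def physical_arm(q1, q2):
    """Stand-in for the real robot: joint angles in, hand position out."""
    l1, l2 = _LINKS
    x = l1 * math.cos(q1) + l2 * math.cos(q1 + q2)
    y = l1 * math.sin(q1) + l2 * math.sin(q1 + q2)
    return x, y

def babble(n_samples, rng):
    """Try random joint angles and record what the hand actually did."""
    data = []
    for _ in range(n_samples):
        q = (rng.uniform(-math.pi, math.pi), rng.uniform(-math.pi, math.pi))
        data.append((q, physical_arm(*q)))
    return data

def self_model(data, q):
    """Predict the hand position from the closest remembered experience."""
    _, pos = min(data, key=lambda s: (s[0][0] - q[0]) ** 2 + (s[0][1] - q[1]) ** 2)
    return pos

def mean_error(data, n_tests, rng):
    """Average distance between predicted and real hand position on new poses."""
    total = 0.0
    for _ in range(n_tests):
        q = (rng.uniform(-math.pi, math.pi), rng.uniform(-math.pi, math.pi))
        px, py = self_model(data, q)
        rx, ry = physical_arm(*q)
        total += math.hypot(px - rx, py - ry)
    return total / n_tests

rng = random.Random(0)
for n in (20, 200, 2000):
    err = mean_error(babble(n, rng), 100, rng)
    print(n, "babbling samples -> mean error", round(err, 3))
```

The more the toy arm babbles, the closer its predictions get to reality, which is the same qualitative trend as the self-model that started out inaccurate and tightened up with training.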

To test whether the self-model could detect damage to itself, the researchers 3D-printed a deformed part to simulate damage and the robot was able to detect the change and re-train its self-model. The new self-model enabled the robot to resume its pick-and-place tasks with little loss of performance.

Since the internal representation is not static, this not only helps the robot improve its performance over time but also allows it to adapt to damage and changes in its own structure. This could help robots continue to function reliably when their parts start to wear out or, for example, when replacement parts are not exactly the same size or shape.

Of course, it will be a long time before this arm gets anywhere near the precision of Dexter, the 2018 Hackaday Prize winner, but it is still pretty cool to see the video of this research:

>”To test whether the self-model could detect damage to itself, the researchers 3D-printed a deformed part to simulate damage and the robot was able to detect the change and re-train its self-model.”

I was having the same thought: why not apply some heat or whatnot to melt and deform the machine while it was running, and see how well it could deal with that?

Because the only way to verify that the machine learned correctly is to know exactly how much deformation was introduced. It was an engineering proof of concept study, not a production model test. They’re likely seeking funding for additional study and needed to show something concrete for where the money they already spent has gone.

Very nice. I wonder how the robot gets its feedback – is it motor current sensors? Endstops in the motors? Limit switches? Cameras? More details would be good, but I’m too lazy to read the paper myself.

Agreed, super interesting. My bet is on a camera system for feedback, but I also can’t get to the article to find out.

This seems to be the article: https://www.creativemachineslab.com/uploads/6/9/3/4/69340277/task-agnostic_self-modeling_machines.pdf

But there is not much technical information in there, nothing about what the positional sensors are.

There is a supplementary PDF there: https://robotics.sciencemag.org/content/robotics/suppl/2019/01/28/4.26.eaau9354.DC1/aau9354_SM.pdf

They don’t use an absolute positional tracking system, they rely on the measured angle at each joint. So basically the robot learns how to attain specific angles, although that data should already be available to the robot.

They argue that it would defeat the purpose of their study to use that data directly and that it would perform poorly with more joints. That’s a lot of unproven assumptions in a research paper I’d say, even if in principle it’s interesting.

I really don’t get why they didn’t use a cheap absolute positional tracking system instead. They just had to buy a PS Move/PS Eye ($30 at most), which even has several open source implementations for positional tracking.

If it only achieved specific angles in its joints, then how could it detect a position inconsistency with its internal model caused by a structural segment deformation between two joints? The joints would all achieve their correct angles, but the distal appendage would be at a different point in space. I’m betting they would have to have had a position-based comparator.
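The point about joint angles versus hand position can be made concrete with a few lines of forward kinematics. This is only a sketch under made-up assumptions: a planar two-link arm, invented link lengths, a bent part approximated as a shorter link, and an arbitrary tolerance. None of these numbers come from the paper.

```python
import math

def hand_position(angles, lengths):
    """Forward kinematics of a planar serial arm: add each link along its heading."""
    x = y = heading = 0.0
    for angle, length in zip(angles, lengths):
        heading += angle
        x += length * math.cos(heading)
        y += length * math.sin(heading)
    return x, y

# Hypothetical numbers: a 2-link arm whose second link got bent and now
# behaves like a shorter link.
angles = (math.radians(30), math.radians(45))
believed_lengths = (0.30, 0.25)  # what the self-model assumes, in meters
actual_lengths = (0.30, 0.21)    # the deformed part

predicted = hand_position(angles, believed_lengths)
actual = hand_position(angles, actual_lengths)
gap = math.dist(predicted, actual)

# The joint encoders report identical angles in both cases, so only a
# measurement of the hand position exposes the mismatch.
TOLERANCE = 0.02  # assumed threshold, in meters
print(f"gap = {gap:.3f} m, retrain = {gap > TOLERANCE}")
```

Both calls use exactly the same joint angles, yet the hand ends up 4 cm apart, so some measurement of where the hand actually is would be needed to notice the deformation.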

I think this is great work, but the layman’s explanation of the red deformed part accomplishment was off-putting to me. Showing that the learned weights were somewhat adaptable to minor geometric changes with the benefit of “transfer learning” can’t be taken for granted, but the description of how this was achieved seems to imply this didn’t require external retraining of the model weights, which is obviously not the case.

Interesting. I called this “babbling learning” in my thesis, in 2006 ;)

Full paper, in French:

https://hal.archives-ouvertes.fr/tel-02075685

Abstract: In this thesis, we present a multi-agent architecture giving a robot the ability to learn in an unsupervised way. Autonomous learning is achieved by using emotions, which represent basic needs for the learning entity. The learning process we propose is inspired by the organization of a living being’s brain into cortical columns and areas. The organizational multi-agent architecture is used to describe the interaction among entities involved in the learning process.

It’s not self-aware.

It’s a robot that has one task. When given a 3D point in space, make the number that represents the 3D location of the end actuator move to that point. That’s all that “exists” for the robot. Think of a PID controller that is tasked to make two numbers equal each other – that’s the extent of the intelligence of this system.
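For anyone who has not met one, a PID controller really is just a few lines that push a measured number toward a target number. The sketch below is a textbook version driving a toy plant; the gains, the time step, and the `track` helper are hand-picked assumptions for illustration and have nothing to do with the arm in the study.

```python
class PID:
    """Textbook PID controller: drives a measured value toward a setpoint."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measured, dt):
        error = setpoint - measured
        self.integral += error * dt
        # No derivative term on the very first call, to avoid a startup kick.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative

def track(setpoint, start=0.0, steps=2000, dt=0.05):
    """Simulate a toy plant whose value changes at the commanded rate."""
    pid = PID(kp=2.0, ki=0.5, kd=0.1)  # hand-picked gains, not tuned for any real arm
    value = start
    for _ in range(steps):
        value += pid.update(setpoint, value, dt) * dt
    return value

print(round(track(1.0), 3))
```

The controller has no idea what the number means; it only shrinks the difference between "where I am" and "where I was told to be".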

The “babbling” in between can be equated to a self-tuning PID loop. They’ve found a more efficient tuning algorithm by throwing a neural net at it. The self-model is a method already used in robotics for this task, and the auto-tuning algorithms are used to correct the model once the machine is told the configuration. The only difference here is building the configuration by random trials.

And yet again something fundamentally dumb is sold as “Artificial Intelligence”.

Or, to put it more bluntly: When you write the algorithm yourself, you see it’s not intelligent.

When you define the inputs and desired outputs of the algorithm to a black box machine that runs an evolutionary algorithm on it, and you don’t understand what the resulting code does, though it’s essentially the same algorithm, it becomes “intelligent”.

The reason is that neural nets in AI are used differently than how biological things use them. Neural nets are trained, and then they are frozen for use, because otherwise they’d drift away from the correct solution and stop working very quickly. In essence, the neural nets are used in the form of “write me a computer algorithm by trying things at random until it does what I want it to do”.

When you run the resulting algorithm, it’s no different in terms of “self-awareness” or intelligence than the Book in the Chinese Room Argument.

Computer programming through natural selection.
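The "try things at random until it does what I want" idea can be sketched as a random-mutation hill climber. To be clear, this illustrates the analogy and not the paper's training procedure: neural nets are normally trained with gradient descent rather than pure random search, and the target function, mutation size, and function names here are all made up.

```python
import random

def loss(params, samples):
    """Mean squared error of the candidate 'program' y = a*x + b."""
    a, b = params
    return sum((a * x + b - y) ** 2 for x, y in samples) / len(samples)

def evolve(samples, generations=5000, sigma=0.1, seed=0):
    """Keep one candidate; accept a random mutation only if it scores better."""
    rng = random.Random(seed)
    best = (0.0, 0.0)
    best_loss = loss(best, samples)
    for _ in range(generations):
        child = (best[0] + rng.gauss(0, sigma), best[1] + rng.gauss(0, sigma))
        child_loss = loss(child, samples)
        if child_loss < best_loss:
            best, best_loss = child, child_loss
    return best, best_loss

# Desired behavior, given only as input/output examples of y = 3x + 2.
samples = [(x, 3 * x + 2) for x in (-2, -1, 0, 1, 2)]
(a, b), err = evolve(samples)
print(f"a = {a:.2f}, b = {b:.2f}, loss = {err:.4f}")
```

Nobody wrote "multiply by 3 and add 2" anywhere; the loop only ever saw examples and a score, and kept whichever random tweak scored better.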

In my thesis, robots learn in two phases. First, they discover their body (what I have called babbling learning): to do so, they create “base” blocks that group sensors and actuators by “similarities”. Then, in a second phase, they “learn” how to use these blocks to “behave” (and to satisfy themselves). Over time, they build more and more blocks so they can learn more and more complex tasks.

These “blocks” are close to what cortical columns are for primates (or some mammals). Inside each block is a behavioral matrix (reinforcement learning) that is used to trigger actions based on perceptions. I also used some fuzzy logic to avoid threshold problems. Behavior is reinforced based on “emotions” (basic needs). Every action or perception modifies the values in this matrix: as a result, the “being” evolves continuously and is able to adapt to variations in its body (such as sand in a wheel) or in its environment, just as living beings do.

As for living beings, I think that the behavior of a learning machine is deterministic because the behavior is based on known algorithms, with no (real) randomness. BUT if you don’t know what the machine has done before (its experiences), you cannot predict what it will do, and thus, you must consider it as non-deterministic.

>”and thus, you must consider it as non-deterministic.”

Let me share with you an old story:

In the days when Sussman was a novice, Minsky once came to him as he sat hacking at the PDP-6.

“What are you doing?” asked Minsky.

“I am training a randomly wired neural net to play Tic-Tac-Toe.”

“Why is the net wired randomly?” asked Minsky.

“I do not want it to have any preconceptions of how to play.”

Minsky shut his eyes.

“Why do you close your eyes?” Sussman asked his teacher.

“So the room will be empty.”

At that moment, Sussman was enlightened.

That’s pretty cool!