We’ve all come to terms with a neural network doing jobs such as handwriting recognition. The basics have been in place for years and the recent increase in computing power and parallel processing has made it a very practical technology. However, at the core level it is still a digital computer moving bits around just like any other program. That isn’t the case with a new neural network fielded by researchers from the University of Wisconsin, MIT, and Columbia. This panel of special glass requires no electrical power, and is able to recognize gray-scale handwritten numbers.

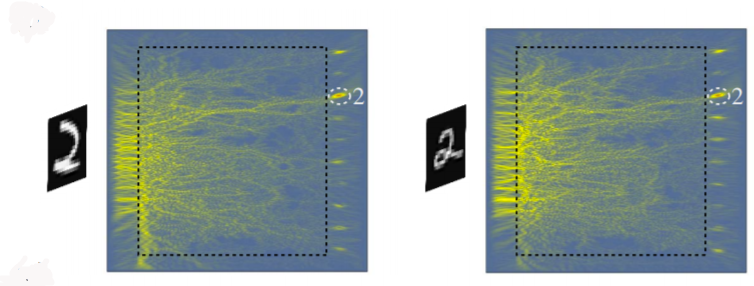

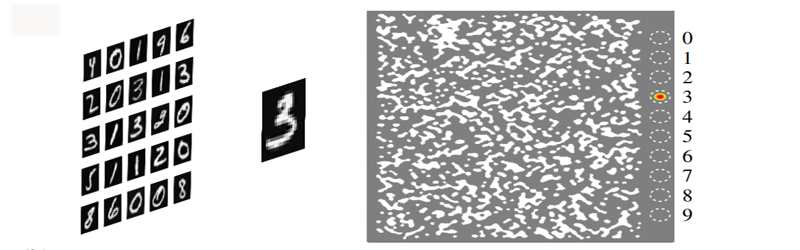

The glass contains precisely controlled inclusions, such as air holes or impurities like graphene or another material. When light strikes the glass, complex wave patterns form and the light becomes more intense in one of ten areas on the glass. Each of those areas corresponds to a digit. Here are two examples of the pattern of light recognizing a two on the glass:

With a training set of 5,000 images, the network was able to correctly identify 79% of 1,000 input images. The team thinks they could do better if they allowed looser constraints on the glass manufacturing. They started with very strict design rules to help get a working device, but they will evaluate ways to improve the recognition percentage without making the glass too difficult to produce. The team also plans to create a network in 3D.
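As a rough illustration of the readout step described above, the sketch below picks the brightest of ten detector areas and scores a batch of predictions. The function names and the intensity values are made up for illustration; nothing here comes from the researchers' code.

```python
def classify(intensities):
    """Return the digit (0-9) whose detector area received the most light."""
    if len(intensities) != 10:
        raise ValueError("expected one intensity per digit (10 values)")
    return max(range(10), key=lambda digit: intensities[digit])


def accuracy(predictions, labels):
    """Fraction of predictions that match their labels."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


# Hypothetical light intensities at the ten output areas (not measured data).
readout = [0.02, 0.05, 0.61, 0.04, 0.03, 0.08, 0.05, 0.04, 0.06, 0.02]
print(classify(readout))  # the brightest area is index 2
```

At the reported 79% on 1,000 test images, that works out to 790 correct and 210 incorrect classifications.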

If you want to learn more about traditional neural networks, we have seen plenty of starter projects. If TensorFlow is too much to swallow, try these 200 lines of C code.

Okay, wtf. This is incredible.

Is this equivalent to a network lacking nonlinear activation functions? If not: where do nonlinearities come from?!?

Nonlinear optics

Yeah, except that nobody ever doubted such an approach would work, and they can’t make a practical device, only simulate one.

This is pretty cool, but once you start thinking fiber-optics it loses a little of its cool factor

What about fibre optics? I don’t see why they would lessen the achievement made here.

Could you imagine if you buy a special ‘compute’ cable and it converts say raw video to x265 as it moves from the storage medium to computer or camera sensor data to encoded files on an sd card…

And yes that example probably wouldn’t work because of complexity but something like this could be the beginning of light based computers. Intel has been working on those for decades but this is an actual proof of getting something useful from light based computation. Fucking awesome.

Kind of like an…optic nerve.

Interestingly, you can do something very similar to this with holograms, which are pretty close to being Fourier transforms of the light field. The idea is that you take a hologram of each character you want to recognize, then a hologram of the page, put them on top of each other, shine a laser on the assembly, and tada! You get a bright spot where the character was recognized. The brighter, the better the recognition. It’s true “optical” character recognition.
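The bright-spot idea can be mimicked numerically as a matched filter. The sketch below slides a character template over a tiny binary "page" and reports where the correlation peaks. It works directly in the spatial domain; by the correlation theorem this is equivalent to a multiplication in the Fourier domain, which is the part the holograms would do optically. The page, the template, and all function names are invented for illustration.

```python
def to_signed(template):
    """Map ink (1) to +1 and blank (0) to -1 so stray ink is penalised."""
    return [[2 * v - 1 for v in row] for row in template]


def cross_correlate(page, template):
    """Correlation score of the template at every valid page offset."""
    ph, pw = len(page), len(page[0])
    th, tw = len(template), len(template[0])
    scores = []
    for r in range(ph - th + 1):
        row = []
        for c in range(pw - tw + 1):
            row.append(sum(page[r + i][c + j] * template[i][j]
                           for i in range(th) for j in range(tw)))
        scores.append(row)
    return scores


def brightest_spot(scores):
    """Return (row, col, score) of the correlation peak."""
    value, r, c = max((v, r, c)
                      for r, row in enumerate(scores)
                      for c, v in enumerate(row))
    return r, c, value


# A 3x3 letter "T" and a small page containing one "T" and one "L".
template = [[1, 1, 1],
            [0, 1, 0],
            [0, 1, 0]]
page = [[0, 0, 0, 0, 0, 0, 0],
        [0, 0, 0, 1, 1, 1, 0],
        [1, 0, 0, 0, 1, 0, 0],
        [1, 0, 0, 0, 1, 0, 0],
        [1, 1, 0, 0, 0, 0, 0]]

print(brightest_spot(cross_correlate(page, to_signed(template))))  # (1, 3, 5)
```

The peak lands at the top-left corner of the "T", and its height (5, the number of ink pixels) plays the role of the spot brightness: the better the match, the higher the score.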

So we’ll finally catch that guy that keeps pressing his face to the windows.

You misspelled willy

would that fall under “tool marks” in the investigation ?

Are weird analog machines the future of hardware acceleration? Wait till we get analog SHA256

Was just thinking this. Bitcoin hashing at lightspeed?

Yes. Yes it will: https://www.nextplatform.com/2019/06/12/will-analog-ai-make-mythic-a-unicorn/

Correction: IN A SIMULATION, so all the “The glass contains precisely” needs to be “The glass would contain precisely”

And the big deal is not that this is an unpowered glass neural net but that it’s not a DIGITAL neural net.

No network was actually fielded, and no method of manufacturing such a neural network was proposed. There is just the hypothesis that such an optomechanical network could be created, along with the simulation and training data that they say proves their hypothesis, or something. Look, I just actually read more than the synopsis and the captions under the graphics. It’s not hard.

I might not understand the science but I speak fluent Scientist.

They did mention the possibility of using laser made inclusions.

Yep, it’s a bit like those Kickstarter campaigns that only have renders.

Look for a company called LightOn. They are doing much better than this and it’s working in real life.

BTW, this is not an unpowered method, since it needs light to process information (just as a GPU uses electricity).

*ambiently powered

It’s as unpowered as a crystal radio, or an NFC card, or a magnetic pickup, or a book for that matter, the method of data transmission provides the power.

Yeah I said book, it’s engineered to selectively reflect specific bands of electromagnetic waves in a way that conveys information and ceases to transmit data when deprived of those wavelengths.

I should also note, there are both Active and Passive optical networks and by requiring no externally powered light amplification and relying only on the passively received light this can be termed an “unpowered” network.

Well, that’s disappointing. The idea’s an interesting one. Reminds me of how the first neural networks were actually done in hardware using variable resistors.

Other groups have built similar devices and tested them in the real world, not just simulation. E.g. here’s a multi-layer character detection system using 3d-printed diffractive screens: https://science.sciencemag.org/content/361/6406/1004.abstract (pdf here: https://arxiv.org/ftp/arxiv/papers/1804/1804.08711.pdf)

Scroll to bottom of the PDF to see the 3d-printed layers.

I wasn’t trying to cast doubt with the neutral tone in my first post, just trying to characterize my own layperson understanding of the technology.

Also the example you cite in the first sentence of the second paragraph says “[…]where the neural network is physically formed by multiple layers of diffractive surfaces […]” making it fundamentally different than the tech here.

Mostly what this paper seems to be is a concept for miniaturization and cost reduction of optical neural nets by using a piece of continuous material that processes in all directions not just front to back as well as “[…] by tapping into sub-wavelength features.” and doing it all “using direct laser writing”.

All in all the paper says “Here is a bunch of stuff unlike what is being done right now and here is a computer model that seems to support it being possible” but they produced no end product or concrete path to one.

Any chance this, combined with holograms in some way, could be even more amazing? Just a random thought, and holograms seem like lasers did back in the day. We had them, but until tech advanced, they were often solutions looking for problems.

This looks like one of those basic advancements that might lay down a path for the hologram.

The idea of using analog optics for image recognition isn’t new at all. Holograms (diffraction gratings) can “convert” images both ways: a spot of light into a character as well as a character into a dot at an exact position. The grating is prepared so that different characters (images) produce spots at different positions, which may be detected with an array of photosensors.

This isn’t a “neural network in glass”, this is the resulting logic implemented as an optical interference device.

There’s a great difference, because the neural network is, or should be, a device that can alter itself to adapt to training. If you fix the algorithm, then it’s just a program, or a piece of glass with specific refraction properties.

You can implement the same thing by putting pegs on a board and dropping ball bearings through it – the locations of the pegs are solved by the neural network simulation while it is being trained, but the pegboard itself isn’t the network. It’s just a board with pegs that implements the solution found by the network. This is simple: if you train a neural network to implement an OR gate, and then build the OR gate as described by the network, then you’ve simply built an OR gate.
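To make the OR gate example concrete, here is a minimal sketch: a single perceptron is trained on the OR truth table, then its weights are frozen and used as a plain function. The learning rule is the classic perceptron update; the function names, learning rate, and epoch count are choices made for this illustration.

```python
def step(x):
    """Threshold activation: fires only for strictly positive input."""
    return 1 if x > 0 else 0


def train_or(epochs=20, lr=1.0):
    """Learn weights for OR with the perceptron update rule."""
    data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in data:
            err = target - step(w[0] * x1 + w[1] * x2 + b)
            w[0] += lr * err * x1
            w[1] += lr * err * x2
            b += lr * err
    return w, b


def make_gate(w, b):
    """Freeze the trained weights into a fixed function (inference only)."""
    return lambda x1, x2: step(w[0] * x1 + w[1] * x2 + b)


weights, bias = train_or()
gate = make_gate(weights, bias)
for a in (0, 1):
    for c in (0, 1):
        print(a, c, gate(a, c))
```

Once `make_gate` has run, nothing in `gate` changes any more. Whether you call the result a frozen neural network or simply an OR gate is exactly the argument in this thread.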

Calling it a neural network is a smoke-and-mirrors thing that AI researchers and reporters like to use to “simplify” it to the public, but it ends up obfuscating the matter. In reality, confusing the logic produced by the network with the network producing the logic is the same as saying that a book is telling a story, rather than the author of the book. It’s a trick of language.

Exactly. From the paper: “Although one could envision in situ training of NNM using tunable optical materials [3], here we focus on training in the digital domain and use NNM only for inference”.

Seriously, thank you!

“There’s a great difference, because the neural network is, or should be, a device that can alter itself to adapt to training.”

An SLNN or NN undergoing training will modify internal weights, but once a network is trained you can operate it with no further modifications (‘inference mode’ in modern parlance). There is NO requirement for a NN to continue to modify weights in operation to be called a neural network. There are all sorts of ways to implement a trained NN including many electronic methods, mechanical methods, optical methods, organic methods (not just neurons, but fun things like guided fungal growth or independent agents), etc.

Technically wrong. A “trained” neural network is just generic software. A neural network by very definition is self-modifying. Trained neural networks are, what the neurology department would call, “brain-dead”.

There’s a reason every single “practical” ML image-recognition system out there sucks 10 kinds of balls – they were trained and then “locked-in”.

That’s where every single one of them fail. They are dumb. Figuratively brain-dead.

There’s not a single system out there in use that isn’t terrible. Google and Facebook are particularly terrible, despite the stupid amounts of hype around their hilariously bad “AI”. Google, the AI-first company. lmao

Of course, the very thought of a self-modifying NN being run live out in the wild scares the absolute pants off any AI researcher because if any of it is responsible for human life, it WILL fail. Spectacularly fail.

A middle-ground is the best solution, a collection of systems trained differently that will converse on a proper solution to a scenario. No agreement = full stop. (gracefully, that is, god forbid a car handbrake’d middle of an interstate!)
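The "no agreement = full stop" idea could look something like the sketch below: several independently trained classifiers vote, and the caller gets either the agreed label or `None` as a signal to stop gracefully. The `consensus` function and its `quorum` parameter are hypothetical names for this illustration, not part of any existing system.

```python
from collections import Counter


def consensus(votes, quorum=None):
    """Return the agreed label, or None when the systems disagree.

    votes  -- one predicted label per independently trained system
    quorum -- minimum number of matching votes (default: unanimity)
    """
    if not votes:
        return None
    if quorum is None:
        quorum = len(votes)
    label, count = Counter(votes).most_common(1)[0]
    return label if count >= quorum else None


print(consensus(["stop sign", "stop sign", "stop sign"]))   # stop sign
print(consensus(["stop sign", "stop sign", "billboard"]))   # None
```

What "stop gracefully" means is left to the caller, which is the hard part the comment points out.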

Ah yes, the armchair know it all…. whatever.

Armchair indeed. Have you used a NN in an application? Because what you are describing is a human neural network, not a machine-learned neural network. At the heart of this application the ML-NN is nothing more than a classifier (from a set of known possible classes, determine which best characterizes a given input). To date there is no schema capable of training a ML-NN “on the fly” while it is being used as a classifier; you are either training it or using it, never simultaneously.

You are confusing theory with practice.

> There is NO requirement for a NN to continue to modify weights in operation to be called a neural network.

And that’s the semantic magic trick right there.

You can call it whatever you like, but if you train an NN to act as a logic gate and then stop the training, the remaining program or algorithm no longer functions as a neural network – it functions as a logic gate. It IS a logic gate.

If you wire transistors to act as a logic gate, do they cease being transistors?

Besides, before you operate the network in “inference mode”, what usually happens first is:

1) Pruning. You get rid of all the bits that don’t contribute to the correct answers so you don’t have to compute them.

2) Fusing. Multiple layers or multiple nodes of the network are fused into single computational steps wherever possible.

After the training, you distill the network down to a simpler form that can be computed more efficiently. If your trained function is something simple like a logic OR gate, after the pruning and fusing steps you’d ideally be left with a very short program that sums the inputs, and any resemblance to the neural network would vanish.
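A tiny sketch of the fusing step, under the assumption that two layers are purely linear with no activation between them: their weight matrices can be multiplied once ahead of time, and the fused layer gives the same answer in a single step. The weights are arbitrary example numbers, and the helper names are made up here.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def apply_layer(weights, x):
    """Apply one linear layer (no bias, no activation) to a vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


w1 = [[1, 2], [0, 1], [3, 0]]   # first layer: 2 inputs -> 3 hidden values
w2 = [[1, 0, 2], [0, 1, 1]]     # second layer: 3 hidden values -> 2 outputs
fused = matmul(w2, w1)          # one 2x2 layer replacing both

x = [2, 5]
print(apply_layer(w2, apply_layer(w1, x)))  # [24, 11]
print(apply_layer(fused, x))                # [24, 11]
```

This only works because nothing nonlinear sits between the two layers. With an activation function in between, the layers cannot be collapsed this way, although real toolchains still fold some operations together (for example, a batch normalization into the preceding convolution).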

That was my conclusion as well.

👏👏👏

My favorite oddball machine learning paper involves using a bucket of water: https://www.semanticscholar.org/paper/Pattern-Recognition-in-a-Bucket-Fernando-Sojakka/af342af4d0e674aef3bced5fd90875c6f2e04abc

Simplicity of implementation means when the apocalypse comes we don’t have to leave all our technology behind.

Do you think the next civilization will worship it?

Something somewhat similar is available in reverse. There is a sundial design out there that displays the time digitally depending on the direction from which sunlight enters.

I am not mentioning this to detract from this work, it is actually very different except for having the same sort of “wow, cool”

https://en.wikipedia.org/wiki/Digital_sundial

A quick google suggests that there are 3D-printable designs out there now.

Guess this is combinational processing in glass: nothing sequential, just combinational logic. I think it would be easier to just program the combinational logic into an FPGA. But worth mentioning: both the glass and the FPGA contain silicon.

Even though it’s not really worth mentioning.

So you are saying that the fact you can make silicon-free glass by the thermal decomposition of Germane is not germane to this discussion?

If a German would make this Glass out of Germane it could be germane, maybe. :)

I was thinking about that. Phosphor?

This is great. Looking at the light moving through the glass is like observing the tensor coefficients varying as they move from one layer to another. This is a visualisation of the tensor flow itself. A lot easier to grasp than a bunch of numbers in a matrix.

By the way, it is very similar to light going through a hologram. As the wavefronts move through space there is reinforcement and weakening at different points in 3D space, recreating the illusion of 3D objects.

I think that it should be possible to encode an AI pattern recogniser in a hologram.

Art & magic rolled into one.

Their NN accuracy is limited by the current IC manufacturing methods. Traditional mask-based optical manufacturing technologies are weak at rendering arbitrary patterns with lots of local variation. That’s why IC designs are strictly gridded with orientations in one direction in advanced technology “nodes” (everything ≤90 nm). The projection lens that demagnifies the mask image filters away the critical information needed for this application. Ideally you need an e-beam direct-write method for that, but single e-beam is too slow.

Amazing, though it is still a simulation. A few weeks ago I saw a measuring device created by laser-induced disorders in the glass structure. Pushing the idea a bit further: for a fixed system, the learned AI can be put on a glass plate with some optics, and can make complex decisions for practically no power consumption. My system (classic, simulation based) needs about 1.5kW 24/7…… and a fair amount of maintenance. An upgrade/repair would be nearly as simple as replacing a fuse. Oh, Stargate memories are popping up xD

This is just a glorified sieve. There is no memory capability and there are no accumulators, so series data does not work with it without preprocessing via a Fourier transform.

OK, it is “just” a fixed algorithm. However, what strikes me is the processing speed of this number-recognition device. Has anyone noticed that it operates at the speed of light? Probably this is physically the fastest “number cruncher” which is technically possible. No machine using any kind of electric circuit will ever surpass this one.

Yeah the speed is insane. It wasn’t emphasized in this article but in the last analog computer article it was

https://hackaday.com/2019/05/03/swiss-cheese-metamaterial-is-an-analog-computer/

“The computation is very fast. Using microwaves, the answer comes out in a few hundred nanoseconds — a speed a conventional computer could not readily match. The team hopes to scale the system to use light which will speed the computation into the picosecond range. “

Isn’t this a purely mechanical, non-dynamic approach?

And a non-programmable sensor, based on a piece of filter material, attached to a digital computer?

If they can do this, which is amazing, it seems to me they could have two points for input and make a NAND or any other of the sixteen possible logic gates. Then you would have the building blocks for more complexity.
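For anyone who wants to see why a NAND would be enough, here is a small sketch that builds NOT, AND, OR, and XOR from a single `nand` function and counts the sixteen possible two-input truth tables. The function names are just labels for this example; it says nothing about whether the glass could actually be cascaded this way.

```python
from itertools import product


def nand(a, b):
    return 0 if (a and b) else 1


def not_(a):
    return nand(a, a)


def and_(a, b):
    return not_(nand(a, b))


def or_(a, b):
    return nand(not_(a), not_(b))


def xor_(a, b):
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


# Each two-input gate is one choice of output for the 4 input rows: 2**4 = 16.
all_truth_tables = list(product((0, 1), repeat=4))
print(len(all_truth_tables))  # 16

for a, b in product((0, 1), repeat=2):
    print(a, b, and_(a, b), or_(a, b), xor_(a, b))
```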

79% is a lot of misdirected mail

Still, a fascinating approach.

79% is a lot of misread ZipCodes

Regardless, interesting article.