The best part about the term “Artificial Intelligence” is that nobody can really tell you exactly what it means. The main reason for this is the term “intelligence” itself, with definitions ranging from the ability to practice logical reasoning to the ability to perform cognitive tasks or dream up symphonies. When it comes to human intelligence, properties such as self-awareness, complex cognitive feats, and the ability to plan and motivate oneself are generally considered to be defining features. But frankly, what is and isn’t “intelligence” is open to debate.

What isn’t open to debate is that AI is a marketing goldmine. The vagueness has allowed marketing departments around the world to go all AI-happy, declaring that their product is AI-enabled and insisting that their speech assistant responds ‘intelligently’ to one’s queries. One might begin to believe that we’re on the cusp of a fantastic future inhabited by androids and strong AIs attending to our every whim.

In this article we’ll look at the reality behind these claims and ponder humanity’s progress towards becoming a Type I civilization. But this is Hackaday, so we’re also going to dig into the guts of some AI chips, including the Kendryte K210, and see how the hardware of today fits into our Glorious Future.

Introducing the K210

The Kendryte K210 System-on-Chip is the AI-on-the-edge chip du jour. It combines a dual-core, 64-bit RISC-V processor with the usual slew of peripherals. Kendryte summarizes the chip’s target market as:

Kendryte in Chinese means researching intelligence. The main application field of this chip is in the field of Internet of Things. The chip provides AI solutions to add intelligence to this.

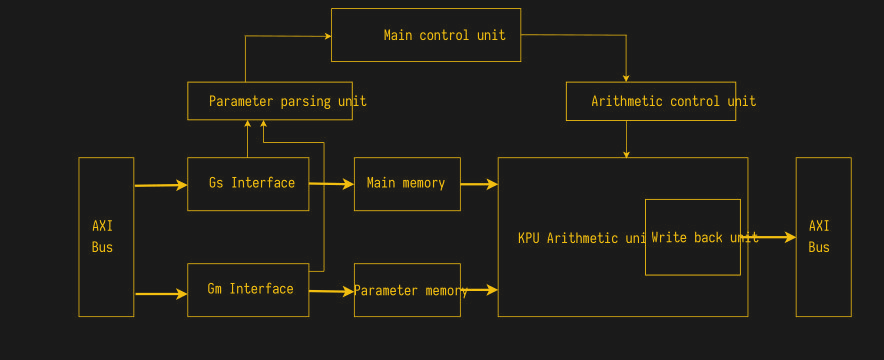

Of relevance here is the KPU, which the K210 datasheet describes as:

KPU is a general-purpose neural network processor with built-in convolution, batch normalization, activation, and pooling operations. It can detect faces or objects in real time.

The same PDF lists the KPU’s feature set in more detail:

- Targeted towards convolutional neural networks (CNNs).

- Supports CNN kernels of dimensions 1×1 or 3×3.

- Supports any kind of activation function.

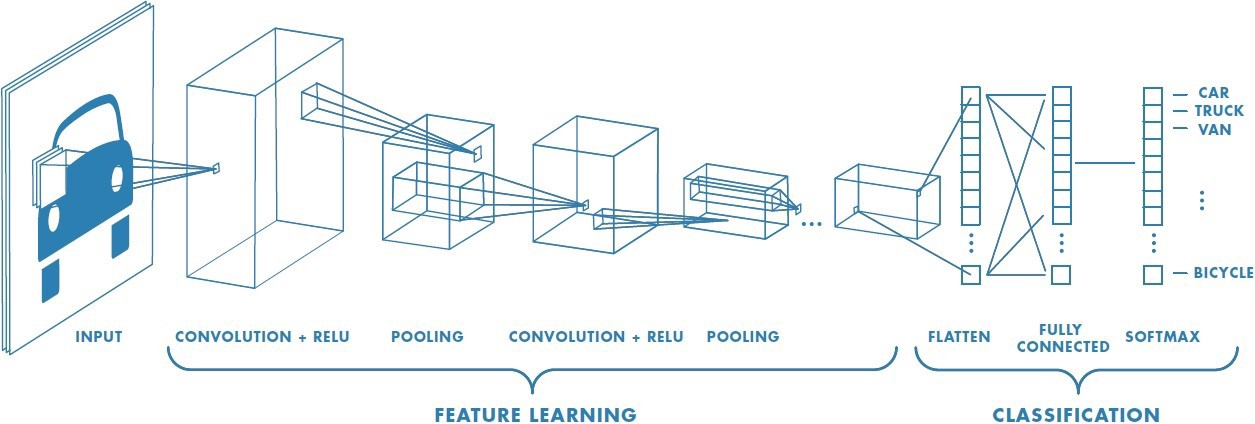

This all gives us a clear hint that we’re dealing with a bit of silicon aimed at speeding up the processing of convolutional neural networks (CNNs), which are commonly used in machine vision. In a nutshell, convolutions are applied as filters that enhance features like edges, lines, and points, and these features are then used as inputs for deeper layers in a neural network. Machine vision, and more specifically object and face recognition, is probably what the chip will be good for.
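To make the filtering step concrete, here is a minimal Python sketch of a single 3×3 convolution pass over a tiny grayscale image. The image, the Sobel-style kernel values, and the `convolve2d` helper are illustrative assumptions rather than anything taken from the K210 datasheet, and (as in most CNN frameworks) the kernel is applied without flipping.

```python
# Minimal sketch: slide a 3x3 kernel over a grayscale image
# (no padding, stride 1). All values here are made up for illustration.

def convolve2d(image, kernel):
    """Apply `kernel` at every position of `image` where it fully fits."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            # Weighted sum of the patch currently under the kernel.
            acc = 0
            for ky in range(kh):
                for kx in range(kw):
                    acc += image[y + ky][x + kx] * kernel[ky][kx]
            row.append(acc)
        output.append(row)
    return output


# A 5x5 image: dark on the left (0), bright on the right (9).
image = [
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
]

# Sobel-style kernel that responds to left-to-right brightness changes.
sobel_x = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

edges = convolve2d(image, sobel_x)
for row in edges:
    print(row)
```

The output is large wherever the window straddles the dark-to-bright boundary and zero over the uniformly bright region, which is the kind of feature map a deeper layer would take as its input.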

So where’s the intelligence in this contraption?

Making Machines See with CNNs

The point of CNNs is to add something akin to a retina to a computer system, much as other algorithms such as Recurrent Neural Networks (RNNs) and Hidden Markov Models (HMMs) are used for natural language recognition. In essence, a CNN adds something akin to a sense and (depending on the type) part of the cortex associated with that sense. (If a CNN is doing what a human retina does, and a CNN is an artificial intelligence, do I actually have “smart retinas”?)

Much like a retina, a CNN used for machine vision reduces the amount of raw image information. With the K210 SoC, the ‘KPU’ peripheral is then used to offload CPU-intensive operations to dedicated hardware in order to speed up the processing. This is essentially the same as using a graphics processor to accelerate similarly parallel tasks with general-purpose computing on graphics processing units (GPGPU), as made popular by CUDA and OpenCL.

A CNN consists of a number of consecutive elements: generally the input layer, a number of convolution layers, each with its own pooling layer, and finally a fully connected layer which works much like a classical artificial neural network (ANN). In each convolution layer a so-called kernel (usually a small grid of weights, such as 2×2 or 3×3) is slid across the layer’s input. In execution this resembles a CUDA or OpenCL kernel, in that the same instruction(s) are applied to many data instances (SIMD).

The essential goal of a CNN is to reduce the raw data density (red, green and blue channels, for RGB data), so that the fully connected layer ends up with just the essential data, such as the rough outlines or shape of an object, that can then be classified by a trained neural network like a feedforward neural network. This network would output a probabilistic result based on its training data. More complicated implementations would use a fully connected layer that also has feedback to improve its classification.
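The pipeline described above can be sketched end to end in a few lines of plain Python: one convolution, an activation, a pooling step, and a tiny fully connected layer with a softmax on top. This is a toy under stated assumptions: the 6×6 image, the kernel, the two class weights, and all helper names are invented for illustration, and nothing here is trained.

```python
import math


def conv2d(image, kernel):
    """No-padding, stride-1 convolution with a square kernel (no flip)."""
    k = len(kernel)
    return [
        [
            sum(image[y + dy][x + dx] * kernel[dy][dx]
                for dy in range(k) for dx in range(k))
            for x in range(len(image[0]) - k + 1)
        ]
        for y in range(len(image) - k + 1)
    ]


def relu(fmap):
    """Activation: clamp negative responses to zero."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Keep only the strongest response in each size-by-size block."""
    return [
        [
            max(fmap[y + dy][x + dx]
                for dy in range(size) for dx in range(size))
            for x in range(0, len(fmap[0]) - size + 1, size)
        ]
        for y in range(0, len(fmap) - size + 1, size)
    ]


def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum per output class."""
    return [sum(w * v for w, v in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy input: dark left half, bright right half (a vertical edge).
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
kernel = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]

feature_map = relu(conv2d(image, kernel))   # 6x6 -> 4x4
pooled = max_pool(feature_map)              # 4x4 -> 2x2
flat = [v for row in pooled for v in row]   # 2x2 -> 4 values

# Hypothetical, hand-picked weights for two classes: "edge" and "no edge".
weights = [[0.5] * 4, [-0.5] * 4]
biases = [0.0, 0.0]
probabilities = softmax(dense(flat, weights, biases))
print(probabilities)
```

Note how the data shrinks at each stage, from 36 pixels to 16 responses to 4 pooled values to 2 class probabilities. In a real network the kernel and the dense-layer weights would come from training rather than being picked by hand.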

It’s Scalars and Vectors All the Way Down

So the K210 is essentially a vector processor. It’s optimized for the particular math of taking convolutions and building weighted sums. And it does this very fast. It’s like a GPU without the graphics hardware.
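To see why this kind of math suits a vector processor, it helps to rewrite a convolution as a batch of identical dot products, an arrangement often called im2col. The sketch below is plain Python with made-up data and hypothetical helper names. It shows the general idea only, and makes no claim about how the KPU is implemented internally.

```python
def im2col(image, k):
    """Unroll every k-by-k patch of `image` into one flat row."""
    rows = []
    for y in range(len(image) - k + 1):
        for x in range(len(image[0]) - k + 1):
            rows.append([image[y + dy][x + dx]
                         for dy in range(k) for dx in range(k)])
    return rows


def dot(a, b):
    """Multiply-accumulate: the one operation repeated for every patch."""
    return sum(p * q for p, q in zip(a, b))


image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]

# A 3x3 box filter (all ones), flattened to a 9-element weight vector.
kernel = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
flat_kernel = [w for row in kernel for w in row]

patches = im2col(image, 3)          # 4 patches, 9 values each
outputs = [dot(patch, flat_kernel) for patch in patches]
print(outputs)
```

Every entry in `outputs` is computed by the same instruction sequence on different data, and no entry depends on another, so the loop can be spread across as many multiply-accumulate units as the hardware provides.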

Or conversely, a GPU is like an AI accelerator. Even a low-end graphics card or the built-in GPU in an older laptop is an ‘AI engine’ many times more powerful than the one found in a K210, NVIDIA Jetson system, or similar embedded system targeting ‘machine learning’ or ‘machine vision’. With some knowledge of algebra and a GPGPU framework (or using GLSL shaders if you’re hardcore) you too can be using your GPU for all of those ‘AI’ applications. Or doing materials science, or whatever. It’s just math.

This Isn’t the Future Yet?

Unfortunately, the reality painted for us by marketing departments is quite unlike the reality outside of those corporate walls. Even though decades of research have given us new ways to process information and categorize input faster than ever before, we do not have little brains embedded in the hardware we buy.

What we do have is a wonderful application of algebra and vector processors, the latter of which have become more powerful and affordable than ever, largely courtesy of GPU-fueled development. Because of graphics cards, vector processing capability has expanded rapidly, in some ways outpacing CPU development. For scientific, medical, and many other fields this has been an enormous boon. Maybe vector processors will one day underlie the first artificial intellects, as they’d likely get called by then, but for now we can at least have our cars tell us whether they think they saw a cat or a small child.

Have you put AI co-processors to good use? Let us know in the comments.

If that article is tl;dr for you, simple analogy: https://xkcd.com/1838/

“But frankly, what is and isn’t “intelligence” is open to debate.”

Emotional intelligence.

“With some knowledge of algebra and a GPGPU framework (or using GLSL shaders if you’re hardcore) you too can be using your GPU for all of those ‘AI’ applications. Or doing materials science, or whatever. It’s just math.”

Just math that’s more efficient on some devices. e.g. TPUs.

What about emotional intelligence? Just the term itself? Any idea how to quantify that in a machine, or even in a human?

rate your current mood from 1 to 10, 1 is very sad, 10 is very happy. if you think you can tell the number precisely, the machine can emulate it using a 4 bit integer. if you can’t, the machine likely can use 3 bits even 2 bits to save some space ;-)

but honestly, emotion probably will be emulated by an array of vectors, surrounding scene, time duration, temperature, humidity, background noise all need to be factored in. the outcome probably can’t be described by words, but it will drive the machine’s behavior – it is scary.

what qwert asked.

And is it just the ability to feel empathy? To not “suffer” from psychopathy like bankers/traders/etc?

Serendipity indeed. “Zero, Echo, Shadow, Prime” by Peter Samet describes how a character gave her avatar emotions. IRL there has been work on giving machines emotions, crude as it may be.

There is a very simple way to discover what intelligence is: by observing what it’s not. If you assume that the word has meaning and actually refers to something, then it must distinguish something that exists in reality.

If your system can be reduced to some rule-bound algorithm, aka. “just math”, then it cannot be intelligent because no difference in quality can be made between it, and any other thing that merely obeys rules. The way you distinguish things is by their quality, not by their quantity, and merely adding more rules does not release the algorithm from being rule-bound, so it does not change the quality of the algorithm. No matter how complex your AI program is, it cannot be intelligent as long as it’s merely following rules.

Intelligence also cannot be plain chance without any rules, because the system is supposed to react to things, and random events are by definition not causally connected to any inputs to the system. Intelligence is not up to chance either.

There’s the temptation to write a program that changes its own rules randomly and say that it’s neither random nor bound by rules, but this is no different from simply having many fixed programs running in parallel and choosing one of the outputs by chance.

If intelligence exists, it is not computable. It simply cannot be implemented by a Turing-machine type of computer. If it doesn’t exist, then talking about AI is meaningless either way.

Also, before someone complains about the Sorites paradox: notice that in order to observe a difference in quality, you must already define the difference.

In the paradox of the heap, it is recognized already that many grains of sand form a heap, or that few do not. This is defined before we even ask the question. The paradox is in finding the point where it changes from one to the other, which is actually just because we’re ignoring what makes a heap: stacking. When there aren’t enough grains to stand one on top of the other, it stops being a heap, and vice versa.^

In the question of intelligence, we do not know in advance what constitutes intelligence, so we can’t claim that adding enough complexity to a program would or even could do it. It’s just completely unfounded.

^(The actual number of grains is three, because a heap is also wider at the bottom than the top. Anything less cannot be a heap)

> If intelligence exists, it is not computable. It simply cannot be implemented by a Turing-machine type of computer.

You can write a physics simulator for a Turing machine, a brain implements intelligence with physics, therefore you can emulate a brain using a physics simulator in a Turing machine, therefore intelligence can be implemented by a Turing machine. QED. (If you say a brain isn’t intelligent, then your definition of intelligence is non-useful.)

If you assume classical physics in order to justify an AI through simulation with a classical Turing Machine, then the concept of intelligence is meaningless anyways because the resulting AI has no choice over its own behavior – that is dictated by the simulation – or the choice has nothing to do with the inputs to AI since it’s fundamentally random in the classical sense.

You can implement a physics simulator in a Turing Machine, but it won’t replicate physics as we know it (i.e. quantum physics). It’s necessarily an approximation. What a non-classical Turing Machine would look like, I have no idea. Maybe it looks like a real living brain.

>” (If you say a brain isn’t intelligent, then your definition of intelligence is non-useful.)”

If we assert that brains are intelligent, we may nevertheless find that there’s no difference in quality between a brain and a rock and therefore we have no reason to invent or use such terms – or we might find that there indeed is a difference in quality, and therefore we can use this difference to define the word “intelligence” such that brains are intelligent and rocks aren’t.

Whether you say a brain is or isn’t intelligent, you have to know intelligence. Otherwise you’re talking nonsense.

Quantum mechanics is just part of physics… it follows physical rules that can be simulated mathematically. I feel like in some people’s dictionary, there is an entry:

“Quantum. kwahn.t@m. adj. Mysterious, unknown, magical.”

Maybe it’s necessary to account for quantum effects to simulate a brain – I doubt it, simulating it on the neurophysiology level will almost certainly work fine, and I’d be surprised if not astounded if even that level of simulation is really needed simply to create a useful intelligence as opposed to a faithful replica of a particular human mind. But it’s not impossible that it needs a more detailed simulation, sure. That would have a great deal of relevance for the practical question of how hard it is for a brain to be usefully simulated on a computer, but none at all for the theoretical question (and Turing machines are theoretical entities — that’s something I’d like to see on Hackaday, a physical approximation of a Turing machine…)

If, somehow (and this seems distinctly improbable to me) pseudorandom numbers generated by a conventional algorithm are not sufficient for emulating an accurate simulation of the quantum effects supposedly necessary for intelligence, then your modified Turing machine would probably be “a Turing machine with a true RNG attached.” I’d bet on the machine looking like a computer with an OMG-Quantum-Wow hardware RNG in it, fundamentally similar to the ones you can buy for like fifty bucks now.

> Quantum mechanics is just part of physics… it follows physical rules that can be simulated mathematically.

In principle, if you have infinite time and memory.

>”it follows physical rules that can be simulated mathematically.”

Although not really, because you can’t compute non-determinism.

There’s a difference between a mathematical expression that outlines a probability distribution, and the probability itself. There is no rule, no mathematical formula, that could actually behave as the real thing – it’s just a description of the event, not the event itself.

For example, if you want to have any definite answer out of your quantum AI simulation, for example in order to turn a motor in a certain direction, you have to take all the probability distributions you have calculated and throw a random number to decide what the result is – but oh – your computer cannot (or shouldn’t) actually produce a random number because it’s a classically deterministic machine.

You can observe the fact instantly by noting that if you give your random number generator the same seed and start the program over from all the same parameters, it will always run the same way. It can’t do any otherwise. That’s not how quantum systems operate. That makes your “simulated quantum AI” collapse back to being just a non-intelligent program. It’s a complex look-alike, but not the real deal.
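The seed argument is easy to try out. Here is a minimal Python sketch, where the `run_simulation` function and its "motor direction" choices are made up purely for illustration:

```python
import random


def run_simulation(seed, steps=5):
    """Make a series of 'random' motor decisions from a seeded PRNG."""
    rng = random.Random(seed)  # private generator, fully determined by seed
    return [rng.choice(["left", "right"]) for _ in range(steps)]


first = run_simulation(seed=42)
second = run_simulation(seed=42)

print(first)
print(second)
print("identical:", first == second)
```

Both runs produce exactly the same sequence of decisions, because the generator's whole future is fixed by its starting state.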

>”“a Turing machine with a true RNG attached.” ”

Maybe you do that by coming up with genuinely random seeds for your RNG.

But then, what that does is simply randomize the program you’re running – and swapping one non-intelligent program for another non-intelligent program does not really make any difference for the quality of the system being non-intelligent.

>”simulating it on the neurophysiology level will almost certainly work fine”

Here’s the rub. In order to have an accurate simulation of neurophysiology, you need an accurate simulation of neurochemistry. Simply folding proteins is a “quantum problem” and some of their behaviors cannot be modeled in classical terms. Whether that’s important for how the brain acts is anyone’s guess at this point.

But the point is, you can take a simple flash memory card, and abstract it away as a bucket of bits like you’d abstract neurons away into CNNs. However, take a real flash chip and you already have a quantum system that depends on weird probabilistic effects like quantum tunneling across an insulator. If your system behavior depends on it retaining or flipping some bit between 1 and 0 then you have to account for these effects – and reproduce them if you want to make something that actually behaves the same way.

>> Quantum mechanics is just part of physics… it follows physical rules that can be simulated mathematically.

> In principle, if you have infinite time and memory.

The tape on a Turing machine is infinitely long, the table of symbolic rules can be as large as it needs to be, and the time efficiency is not considered. So, then, a Turing machine can run an accurate simulation of physics including quantum mechanics, which is contrary to the original assertion that intelligence is not Turing computable.

> There’s a difference between a mathematical expression that outlines a probability distribution, and the probability itself. There is no rule, no mathematical formula, that could actually behave as the real thing – it’s just a description of the event, not the event itself.

I don’t disagree with this in pure philosophical terms, but I don’t see how it’s relevant, unless you’re suggesting that the non-deterministic behavior of quantum systems is due to a complex internal state which only appears to be random. Isn’t that considered discredited by physicists?

> but oh – your computer cannot (or shouldn’t) actually produce a random number because it’s a classically deterministic machine.

In the unlikely event that this matters, as I said, you can just add a source of quantum randomness, like noise from a reverse-biased semiconductor junction near the breakdown voltage. You could potentially construct a hardware RNG to be used directly, too, rather than just seeding a PRNG (not sure about the details of how you’d do the noise whitening, but my point is that you can have every simulated non-deterministic event use its own real random number, if you wanted.)
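As a rough sketch of what "every simulated event uses its own real random number" could look like in Python: `random.SystemRandom` draws from the operating system's entropy source via `os.urandom` and cannot be seeded. Whether that source is ultimately backed by a physical noise generator depends on the machine, so treat that part as an assumption. The `collapse` helper and the 50/50 distribution are made up for the example.

```python
import random


def collapse(probabilities, rng):
    """Pick an outcome index according to `probabilities` using `rng`."""
    r = rng.random()
    cumulative = 0.0
    for index, p in enumerate(probabilities):
        cumulative += p
        if r < cumulative:
            return index
    return len(probabilities) - 1  # guard against floating-point rounding


# Backed by os.urandom; calling seed() on it has no effect.
system_rng = random.SystemRandom()

# Ten simulated two-outcome events, each drawing fresh OS entropy.
outcomes = [collapse([0.5, 0.5], system_rng) for _ in range(10)]
print(outcomes)
```

Restarting this program with identical parameters will generally not reproduce the same list, unlike the seeded PRNG case.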

> That makes your “simulated quantum AI” collapse back to being just a non-intelligent program. It’s a complex look-alike, but not the real deal.

I do not believe this follows. Unpredictability is definitionally required for intelligence? That seems as bad as defining a thermostat with a bimetallic strip as being intelligent, but in the opposite direction.

>>”simulating it on the neurophysiology level will almost certainly work fine”

>Here’s the rub. In order to have an accurate simulation of neurophysiology, you need an accurate simulation of neurochemistry. Simply folding proteins is a “quantum problem” and some of their behaviors cannot be modeled in classical terms. Whether that’s important for how the brain acts is anyone’s guess at this point.

I don’t agree that this is likely to be important on the level of an individual AI / simulation. Important to understand to make such a simulation, sure. But neurons have a lot of ion channels. You can model the various types as several groups, and get a model of a neuron that behaves very similarly to a real one. You can divide the model up spatially in a discrete or continuous fashion if you need to model the propagation delay of the action potential, etc. All this you can do without modeling events on the neurochemical level, however sophisticated an understanding of neurochemistry was necessary to produce the model. I would be very surprised if it is necessary to consider individual ion channels to simulate a brain. If it is, it’s unlikely to be necessary to simulate their exact folding and chemical interactions; we could use a mathematical model of the behavior of each of the individual ion channels. They change conformation of course, but not in wildly different ways each time they open/close/interact with a neurotransmitter. We can get their actual structures from crystallography if their folding is uncertain.

Most proteins fold pretty consistently (possibly with help from other proteins that exist to help them fold right). Protein folding is not a good random number generator or source of non-determinism. The usual response of the cell to a misfolded protein is to recycle it because it’s totally non-functional…

>”So, then, a Turing machine can run an accurate simulation of physics including quantum mechanics”

In a sense, but again, not really.

The Turing Machine could compute how a quantum system evolves over time, given infinite time and memory, but only in the sense that it calculates the probability distribution of the system as it evolves from the starting parameters. If you want the simulator to collapse the probability distribution at some point and actually do something – answer a question or make a choice – you must choose a parameter for that, and the outcome will be perfectly deterministic. The Turing Machine, being totally rule-bound, will compute exactly the same simulation with exactly the same outcome every time you run it.

In essence, your Turing Machine can run an accurate simulation of physics including quantum mechanics – assuming you model quantum mechanics with hidden variables.

Meanwhile, the real system as we know it has no hidden variables, and would turn out differently almost every time regardless of your meddling because it is not rule-bound. This is the difference in quality between your simulation and the real world.

There’s also a third possibility which satisfies the Bell inequalities, which is that our reality is “superdeterministic”, which means it’s actually just playing out like a movie with no connection between events, but that would again mean that “intelligence” is totally meaningless.

> I would be very surprised if it is necessary to consider individual ion channels to simulate a brain.

I wouldn’t. Consider insects with only a handful of neurons – yet they have more complex behavior than supercomputers trying to model the same nematode worm at the level of abstracted ANNs.

> I wouldn’t. Consider insects with only a handful of neurons – yet they have more complex behavior than supercomputers trying to model the same nematode worm at the level of abstracted ANNs.

Actually, the progress of AI has blown past the benchmarks you set here. First, I’m not aware of any insect with a “handful” of neurons. Even worms have hundreds of neurons. A cockroach has a million. With near infinite combinatorial states even not accounting for the complexity of the biological neuron, I have no problem imagining that the complete behavioral repertoire of these organisms is accounted for by the current neurophysiological models; no magic subtleties are needed.

Now, with regard to computation, you could model the 300 neurons of a worm or the ten-thousand-odd neurons of a sea slug at a neurophysiological level using off the shelf hardware… you can certainly make more “abstract” neural networks of that complexity on a desktop PC. Supercomputers on the other hand are approaching modeling mouse brains (70 million neurons) at an intermediate level of abstraction (spiking neural networks).

As to the observed complexity of behavior of nematodes vs supercomputers, well, supercomputers aren’t programmed to have much in the way of behavior. There are certainly autonomous mobile robots (conventionally programmed or using ML approaches) that have far more sophisticated behavior than nematodes, but I’m not sure what the comparison was supposed to demonstrate.

Great, now instead of my washing machine having fuzzy logic it can have artificial intelligence. At one time I was happy just to have a ‘turbo’ model.

I occasionally hear doom and gloom about the onset of the second “AI winter.” I absolutely hope it does happen, and sooner rather than later. This AI stuff is generally a bad idea, and I hope we all come around and figure out that right now it’s just marketing hokum and bay area grifting. Most importantly, we oughta learn that this is a great privilege and we should pray it takes as long as possible to make this stuff a reality.

We aren’t ready for this, especially from a regulation point of view.

You know that the automatic choke on your car is artificial intelligence? It replaces the humans who were originally tasked with the job. The ABS in your car is artificial intelligence, as is the traction control. You could not watch that overhead camera in the NFL without the AI to drive all those motors to move the camera smoothly. It could easily kill many people if it went haywire. The phone company has been using AI for decades to figure out the best way to run their wires. Just about every delivery route today has been computed with AI to optimize for efficiency. You have AI all around you right now. Should we go back to manual chokes and stationary NFL cameras, and go back to wasting gas and time?

It’s not.

At some point, a regular bi-metal thermostat got re-defined as being “intelligent” in order to appropriate the label for all the AI systems that are just as non-intelligent and merely more complex.

Riddle me this: if something cannot have a choice, is it intelligent? If you put a piece of expanding wax in hot water, how can it choose to expand? Therefore, how can the thermostat that opens and closes the choke lever in your car be intelligent?

‘ if something cannot have a choice, is it intelligent?’

You don’t have a choice either; you HAVE to breathe and eat, for example.

I am just saying that whether you have a choice or not, you are intelligent. I don’t think they are mutually exclusive.

>” you don’t have a choice either”

On some matters.

The question is whether you’re bound in all matters to behave as dictated by something other than yourself. If you are incapable of making choices, yet you claim to be “intelligent”, then a falling rock is “intelligent” – everything is intelligent, and therefore nothing is because your term “intelligence” becomes meaningless. It cannot point to anything within reality, so it’s just as well you didn’t say it.

Of course AI is not “marketing hokum and Bay Area grifting,” no matter how cool you felt coming up with that phrase, and if that’s all it is then it doesn’t need regulation, only “buyer beware.” Image recognition, 2- and 3-D and video scene generation, game-playing, car driving, speech recognition, speech generation, question answering, disease and cancer detection, … are all tasks that AIs perform far better than they did just 5 years ago, and in most of those fields better than humans. And it is only in the *specifics* that any of these advances is a bad idea.

It needs regulation in the sense that companies shouldn’t be allowed to sell you bunk. You already can’t falsely advertise products or sell what isn’t there, but when it comes to “AI” all that goes to hang because neither the people, nor the administrators, nor the manufacturer knows what it is. The engineers know what it isn’t, but they’re being silenced by the marketing and the CEO.

The problem is that people are forced to take part in a massive scale Turing Test. Companies like Tesla are arguing that their system is intelligent and sufficient to drive a car – okay, so how do you prove it? By driving the car. Only, since road fatalities are actually very rare, it takes billions of miles in all road conditions, with newer and older cars, to show whether the car actually behaves at least as well as the average driver. Then there’s the whole argument of whether it should be better than the average driver, or better than the 2/3rds driver etc.
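A back-of-the-envelope sketch of why the mileage gets so large, assuming fatalities follow a Poisson process. The baseline of one fatality per 100 million miles is a round number assumed for illustration, not a measured statistic, and `failure_free_miles` is a made-up helper:

```python
import math


def failure_free_miles(baseline_rate_per_mile, confidence=0.95):
    """Miles driven with zero fatalities needed to claim, at the given
    confidence, that the true rate is below the baseline (Poisson model).

    With rate r, the chance of zero events in n miles is exp(-r * n);
    we need that chance to be at most 1 - confidence.
    """
    return -math.log(1.0 - confidence) / baseline_rate_per_mile


# ASSUMPTION: round illustrative figure, one fatality per 100 million miles.
ASSUMED_HUMAN_RATE = 1.0 / 100_000_000

miles = failure_free_miles(ASSUMED_HUMAN_RATE)
print(f"{miles / 1e6:.0f} million fatality-free miles needed")
```

Under those assumptions that is roughly 300 million miles without a single fatality just to match the assumed baseline. Showing a modest improvement while fatalities do occur would take considerably more.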

If it’s not better, then you’ve killed hundreds or thousands of people while figuring it out, and having enough statistical evidence implies that this product is already well established on the market and you cannot pull it out any longer. You just have to accept that it ISN’T intelligent enough to drive, yet you’re letting it anyways.

And we know this is going to happen because a company like Tesla has all the incentives to push the product out before it’s ready anyways, and then drag their feet and fuzz with the evidence in hopes that they can patch up the problems before it becomes a class-action lawsuit…

On the contrary, we need an open source artificial general intelligence moonshot right now – or perhaps more aptly, an AGI Manhattan project. Otherwise, AGI is going to come from authoritarians (PRC) or private corporations (Amazon), and we are going to get the Bad End as a species.

I really think they should drop the term artificial, I prefer synthetic, it better describes what is attempted here.

Artificial means man-made, synthetic usually means man-made in chemical process, so artificial is better suited here.

More suitable: Approximated Intelligence.

Has anybody ever used the K210 for its “AI accelerator”?

The official doc is quite succinct and incomplete. I wish there was a document that describes the whole process, from the beginning (using TF/Keras for design and training of the NN) to the final application (export the trained NN, optimize it, then flash it in the K210, and use it with the right C/C++ software hooks from the API).

All I’ve found so far is:

A piece of code to optimize the NN for the K210: https://github.com/kendryte/nncase

A Tensorflow workspace for the K210: https://github.com/kendryte/tensorflow-workspace

A mere introduction to the K210 by Seeed Studio: https://www.seeedstudio.com/blog/2019/09/12/get-started-with-k210-hardware-and-programming-environment/

An instructable that is a good starting point: https://www.instructables.com/id/Transfer-Learning-With-Sipeed-MaiX-and-Arduino-IDE/

Does anybody have a practical, documented use case?

There may be a performance mismatch, but I can imagine the processing for depth sensing here qualifies as a target application, at least for low profile tasks like gesture recognition or such:

https://www.extremetech.com/extreme/301183-researchers-develop-tiny-depth-sensor-inspired-by-spider-eyes

Not yet. I did just get my hands on a Maixduino board, which has a Sipeed M1 module (a K210 with some other goodies to make it useful for prototypers) and an ESP32 on an Uno form factor board. Seeed Studio has the line of Sipeed Maix development boards, but I ordered mine off Amazon for like $20, with an OV2640 and an ST7789 based LCD display.

The toolchain for hobbyists using Arduino or Sipeed’s version of MicroPython, MaixPy, is still kind of weak. I’m waiting for them to finish the 0.4.0 version of MaixPy to really dig into their MicroPython implementation, but it looks pretty cool. They’re also porting this to the M5StickV AI Camera, which has more or less the same K210 hardware (probably a Sipeed M1 Module).

Support is slowly improving, so I’d expect to see more documentation coming along to better use these chips. In the meantime, the K210 (well, at least the Sipeed M1) is also a fast (400-800MHz), dual core 64-bit RISC-V CPU with a bunch of I/O including dedicated camera and display ports that can be programmed with the Arduino IDE.

Wait, does this require training the system on another system first? I mean, obviously if you’re making a product you will want to transfer over a working system. But, I thought it was possible to run a static version of most CNN and RNN systems on low-power hardware.

I’ve been trying to find the article about doing so for a while, actually. It talked about use-cases, where a distributed sensor net was capable of identifying a normal range for each sensor that was time-of-day and seasonally aware (in the case of sensors affected by that) but would still raise a flag if the sensor read outside of that range. I find the idea of minimally context-aware sensor networks to be an interesting way to cut down on data requirements for a sensor network.
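I can't reproduce what that article did, but the basic idea can be sketched without any neural network at all: keep a running mean and spread per sensor per hour of day, and flag readings that fall far outside it. Everything below (the `HourlyBaseline` class, the threshold of 3 standard deviations, the sample temperatures) is a made-up illustration, not the article's method.

```python
import math
from collections import defaultdict


class HourlyBaseline:
    """Learns a 'normal range' per hour of day and flags outliers."""

    def __init__(self, threshold=3.0, min_samples=5):
        self.threshold = threshold      # allowed deviation, in std devs
        self.min_samples = min_samples  # don't judge until we have data
        # Per hour: [count, mean, sum of squared deviations] (Welford).
        self.stats = defaultdict(lambda: [0, 0.0, 0.0])

    def update(self, hour, value):
        """Fold one reading into the running statistics for that hour."""
        s = self.stats[hour]
        s[0] += 1
        delta = value - s[1]
        s[1] += delta / s[0]
        s[2] += delta * (value - s[1])

    def is_anomalous(self, hour, value):
        """True if `value` is far outside what this hour usually sees."""
        count, mean, m2 = self.stats[hour]
        if count < self.min_samples:
            return False  # not enough history to raise a flag
        std = math.sqrt(m2 / (count - 1))
        if std == 0.0:
            return value != mean
        return abs(value - mean) > self.threshold * std


baseline = HourlyBaseline()
for reading in [20.1, 19.8, 20.3, 20.0, 19.9, 20.2]:
    baseline.update(hour=14, value=reading)

print(baseline.is_anomalous(hour=14, value=20.1))  # typical afternoon value
print(baseline.is_anomalous(hour=14, value=35.0))  # far outside the range
```

Keying the statistics on (month, hour) instead of just the hour would add the seasonal awareness, and since only three numbers are stored per bucket this fits comfortably on a small microcontroller.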

Training elsewhere, I think so. Most of the AI-on-the-edge stuff is just about _running_ the models on the edge.

Of course, you can simplify any ANN model down to where you can train it on a microproccy, but there’s not much point. (OK, maybe some online learning stuff would be neat.)

The “promise” here is that you just import a model that’s sufficiently generic, and it does its thing. So if you need a cat detector, you’re in luck.

Or less sarcastically, the training and data selection/collection are the hard parts. You don’t want to do them on constrained systems if possible.

Yet the training and data selection/collection are exactly what you’d like to have in an “intelligent” system.

Otherwise it’s just an algorithm with a funny implementation, and that’s going to be “brittle” in the sense that it won’t fail gracefully by adapting to the situation. That’s exactly what AI is supposed to solve.

Thank you for your work.

I think you did a great job on the story.

Yes! Excellent intro to the subject.

It would be interesting to have a real bionic eye. The trick is to tap into the optic nerve and provide it the same information that a normal eye with 20/20 vision supplies.

my esp8266 says ‘AI Cloud’ on the silkscreen, does that count?

That’s the most entertaining part of brand/company names, and I can only hope “AI Cloud” did so intentionally.

There used to be (is?) a “Wireless LLC” that sold USB charger adapters, which made the equally hilarious “Wireless USB Charger” labeling on the package, containing an obviously wired power adapter.

“What isn’t open to debate is that AI is a marketing goldmine.”

Tell that to Spielberg.

B^)

A K210 is like a single cortical column in the brain so they do in fact do a perfect job of emulating 1/1,000,000 of a brain. The trick is to use a hierarchical mesh of them to accomplish higher level tasks.

I have a Jetson Nano for my robot tank and just picked up a small vision system in Taiwan that can pick out 10 object classifications. Not sure what I will do with it. More playing than anything.

As for AI, I hate that word everyone is using. Just say machine learning; it’s closer to what is actually happening here: training a machine to detect objects or to mimic a human voice.

We are far off from an AI machine capable of human thought. Sarah Connor stopped that back in the 90s.

Anyways, my company had that k210 chip a couple years ago and never did anything with it. We had plans to add it to our ptz cameras. I think the next big thing for my area is better object detection in security cameras. Good for improving motion detection for capturing video. And bags/bombs left in secure areas such as airports. Just managing all that object detection and using it in a useful way is going to be tough. Mostly making it easy to use for end users.

Being on the camera makes it fast for PTZ tracking. It would be great if a camera could record and use that data to learn who someone is. The biggest problem I see is that video quality on each camera has to be the same, or you will need to train for each camera type, e.g. 2 MP, 3 MP, 4K resolution, etc.

Good post though I agree AI is not what most people think it is and tech companies are making money using that word. We may even use ai on our not so ai cameras.

https://m5stack.com/blogs/news/introducing-the-k210-risc-v-ai-camera-m5stickv

Ah yes the video stick I purchased uses the k210 chip. So yah I’ll start playing with it and see how good it is.

“Anyways, my company had that k210 chip a couple years ago …”

A couple of years? I thought the K210 was quite new. How old is it?

It came out Sep 2018. Okay so one year ago they had it. Felt like a couple years ago.

I’d like to add, that first of all, the assumption that intelligence cannot be reached by accumulation of more and more rules cannot be proven, since we may just not have been adding enough rules yet.

In the context of Artificial Intelligence, in the real sense of general intelligence, let us assume, that we humans are generally intelligent.

If we were able to create a complete, physically accurate model of a brain, or even a complete human, that we could simulate in a physical system and let interact with some world, and the human the model is based on is intelligent, then we have created an artificial intelligence. (This assumes physics can be completely computed; so far we have always been able to find ways to compute the physical interactions that explain what we observe in the universe.) Because it is an accurate physical simulation, it is by definition completely rule based, and thus intelligence can arise from just stacking rules.

Also, let’s assume (not that I would suggest that it is not true, but this is a mind-game, so let’s work with assumptions) biological evolution is how we as humans have evolved. From what we have found thus far in the field of biology, the first “organisms” inhabiting this world were singular cells.

I think you would agree that a singular cell does not fulfil your definition of intelligence.

Going through the evolution of multicellular organisms we arrive at some point at the first organisms that have nerve cells/neurons, allowing them to react to stimuli, but they are still only following rules: more complex rules, but still rules, with the added ability to change these rules based on prior experience (this is where our current “AI” systems are; they learn based on prior “experiences”, i.e. data).

At some further point in the evolution of organisms on this planet came the first animal capable of self-reflection. This is where general intelligence began: a fixed point in time where the stacking and changing of “rules” in the form of physical interactions of particles and molecules led to the first being capable of general intelligence.

Now, the only question that we still have to solve is what exact change it was that led to this capability of self-reflection.

It might be a change in the way the brain (which is just some structured connection of billions of neurons) was connected within itself, a restructuring, or it might be just the stacking of more and more neurons.

If it is the number of neurons, it will take us some time to be able to test that hypothesis, since current AI systems are only in the millions of neurons at best, while we would need several billions.

If it is structuring (which based on current psychological research definitely plays a part, combined with the number of neurons), we need to learn more about the structuring of the neural networks in the human brain, or start with something smaller like mice and work our way up.

Thus the claim that intelligence is not computable cannot be proven at the current stage of our computational abilities and research, but everything we know so far from the different scientific disciplines suggests that intelligence is, in fact, computable.

If you are interested in learning more about general intelligence, its capabilities and also dangers, I can recommend “Superintelligence” by Nick Bostrom. A really good book, although nothing to be read to relax, as it is very philosophical.

This was actually a reply to Luke, don’t know why it’s not showing as such.

How good is Chip ‘AI’ ? I would say, it’s as good as ‘YES, NO, VERY GOOD’

You don’t need an AI chip to make this kind of AI solution!

Need a retina? Grab 25 LDRs and arrange them in a 5×5 LDR matrix sensor with 10 ADC channels on a generic MCU. (Input)

Next do some intensive mathematical matrix mambo-jumbo on those arrays with CPU/DMA/PDL. (model)

Then output your numbers/Cat/Dog/Yes/No or whatever with LEDs. (output)

Add a feedback push button to let your system know whether it identified properly or not (feedback)

Now the mambo-jumbo will adjust some weighted-values/parameters to do things better (learning)

Finally market it with the name ‘Time Variant AI’ , much cheaper than ‘Real Time AI’ !
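To make the recipe above concrete, here is a minimal sketch of it as a single perceptron in plain Python. Everything in it is an assumption for illustration: the class name `FeedbackPerceptron`, the learning rate, and the two test patterns stand in for real LDR readings, and the `was_correct` flag plays the role of the feedback button. On an MCU the 25 values would come from the ADC scan instead of hard-coded lists.

```python
class FeedbackPerceptron:
    """Hypothetical two-class classifier for a 5x5 light-sensor matrix."""

    def __init__(self, n_inputs=25, learning_rate=0.1):
        self.weights = [0.0] * n_inputs
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, pixels):
        # The "matrix mumbo-jumbo": a weighted sum followed by a threshold.
        total = self.bias + sum(w * p for w, p in zip(self.weights, pixels))
        return 1 if total > 0 else 0

    def feedback(self, pixels, guess, was_correct):
        # The push button only says right or wrong; with two classes,
        # "wrong" tells us the target was the other class.
        if was_correct:
            return
        target = 1 - guess
        step = self.learning_rate * (target - guess)
        self.weights = [w + step * p for w, p in zip(self.weights, pixels)]
        self.bias += step


# Stand-in "images": a lit vertical bar (class 1) and a lit horizontal bar (class 0).
VERTICAL = [1.0 if col == 2 else 0.0 for row in range(5) for col in range(5)]
HORIZONTAL = [1.0 if row == 2 else 0.0 for row in range(5) for col in range(5)]

net = FeedbackPerceptron()
for _ in range(10):  # ten rounds of "show pattern, press button"
    for pixels, label in ((VERTICAL, 1), (HORIZONTAL, 0)):
        guess = net.predict(pixels)
        net.feedback(pixels, guess, was_correct=(guess == label))

vertical_guess = net.predict(VERTICAL)
horizontal_guess = net.predict(HORIZONTAL)
```

A single perceptron like this can only separate patterns that are linearly separable, so it is a toy, but it does cover input, model, output, feedback and learning in a few dozen lines with no dedicated accelerator.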